1.8 Trillion Events Per Day with Kafka: How Agoda Handles it

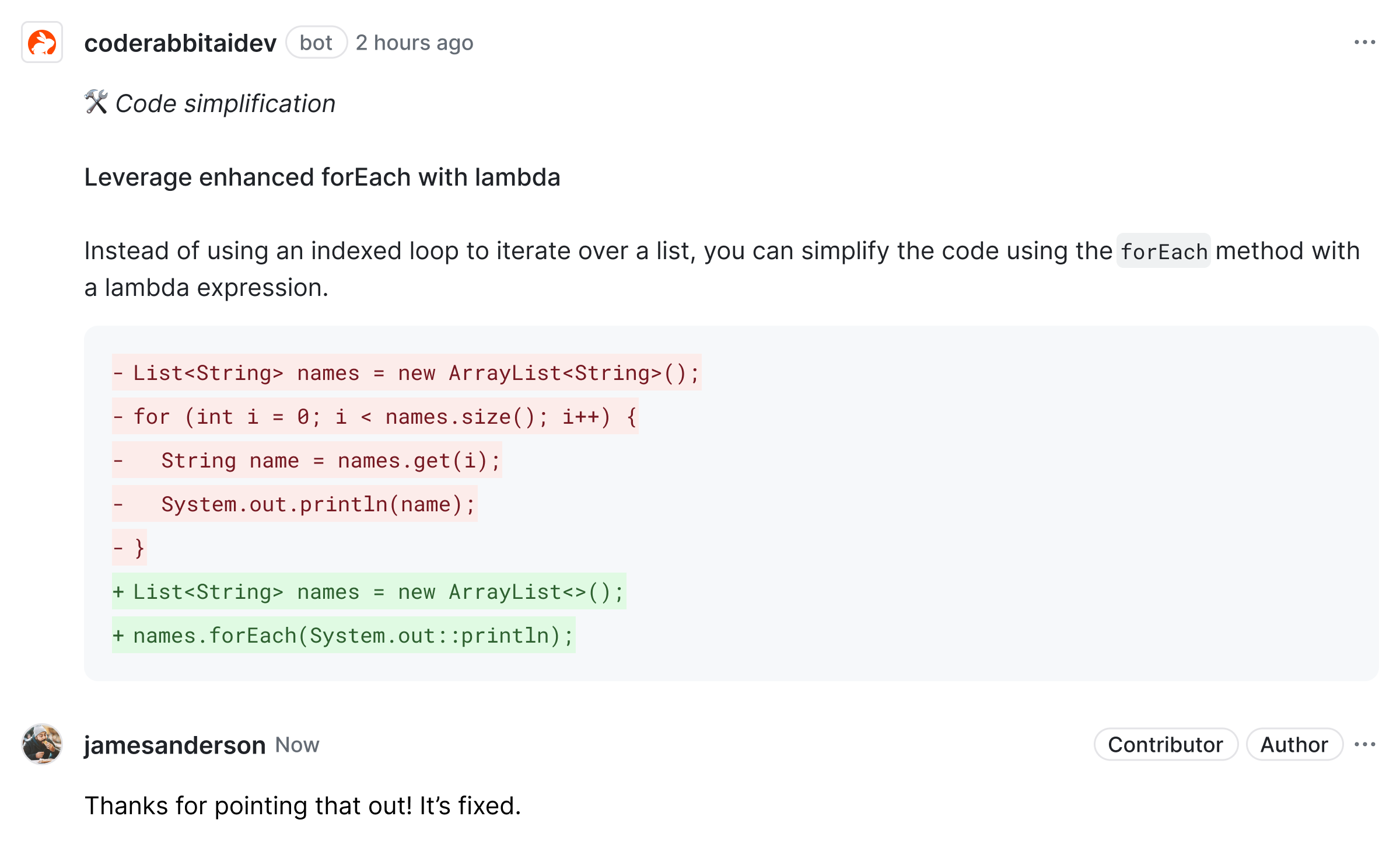

Disclaimer: The details in this post have been derived from the Agoda Engineering Blog. All credit for the technical details goes to the Agoda engineering team. The links to the original articles are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Agoda sends around 1.8 trillion events per day through Apache Kafka. Since 2015, Kafka usage at Agoda has grown tremendously, roughly doubling year over year on average. Kafka supports multiple use cases at Agoda, which are as follows:

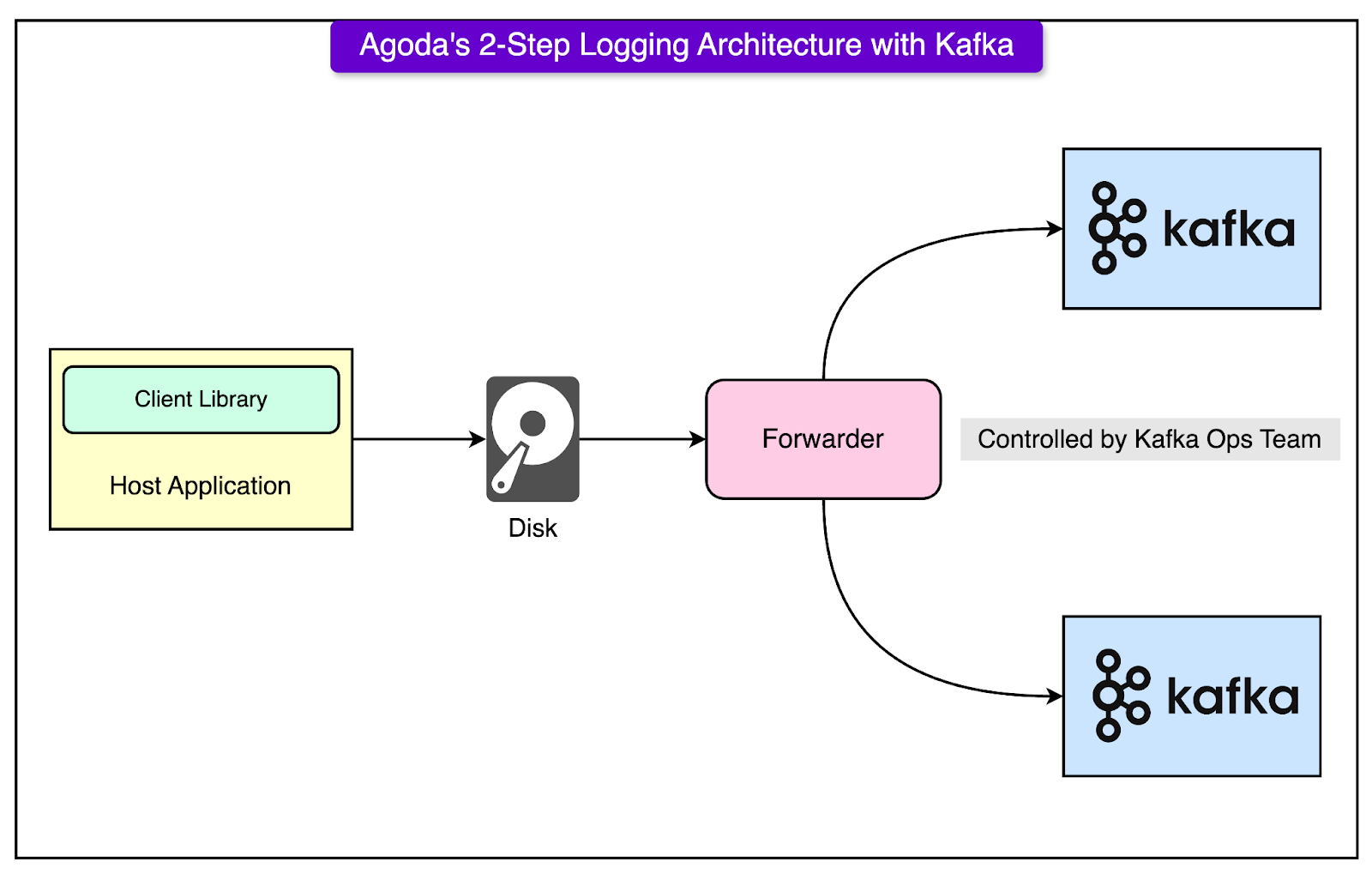

As the scale and Kafka usage grew, multiple challenges forced Agoda’s engineering team to develop solutions. In this post, we’ll examine some key challenges that Agoda faced and the solutions they implemented.

Simplifying how Developers Send Data to Kafka

One of the first changes Agoda made was around sending data to Kafka. Agoda built a 2-step logging architecture:

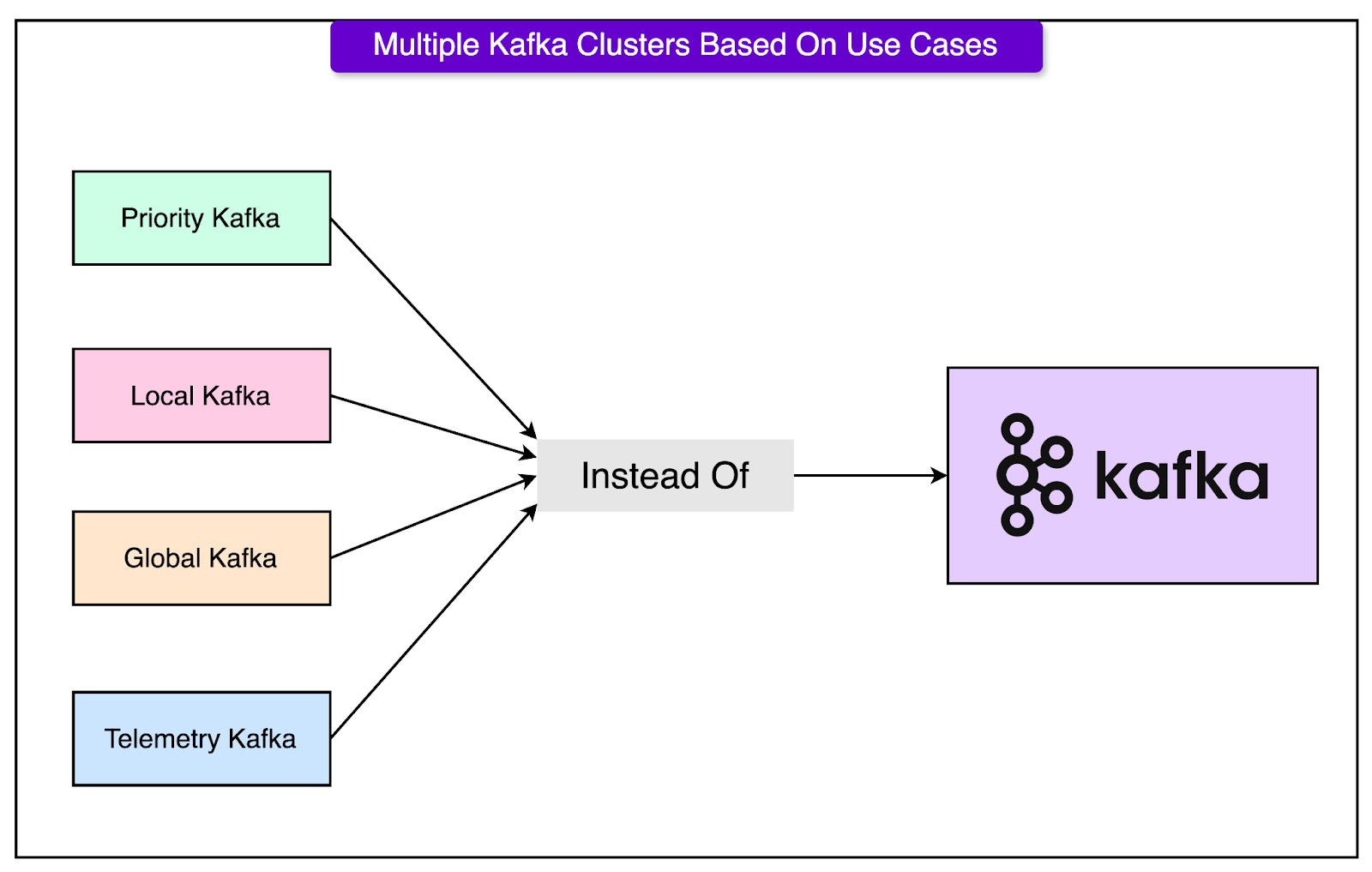

See the diagram below:

The architecture separates operational concerns from development teams, allowing the Kafka team to perform tasks like dynamic configuration, optimizations, and upgrades independently. The client library has a simplified API for producers, enforces serialization standards, and adds a layer of resiliency. The tradeoff is higher latency in exchange for better resiliency and flexibility, with a 99th-percentile latency of 10s for analytics workloads. For critical and time-sensitive use cases requiring sub-second latency, applications can bypass the 2-step logging architecture and write to Kafka directly.

Splitting Kafka Clusters Based On Use Cases

Agoda made a strategic decision early on to split their Kafka clusters based on use cases instead of having a single large Kafka cluster per data center. Instead of one massive Kafka cluster serving all kinds of workloads, they run multiple smaller Kafka clusters, each dedicated to a specific use case or set of use cases. The main reasons for this approach are:

For example, a cluster used for real-time data processing might be configured with shorter data retention periods and higher network throughput to handle the high volume of data.

In addition to splitting Kafka clusters by use case, Agoda also provisions dedicated physical nodes for Zookeeper, separate from the Kafka broker nodes. Zookeeper is a critical component in a Kafka cluster, responsible for managing the cluster's metadata, coordinating broker leader elections, and maintaining configuration information.

Monitoring and Auditing Kafka

From a monitoring point of view, Agoda uses JMXTrans to collect Kafka broker metrics. JMXTrans is a tool that connects to JMX (Java Management Extensions) endpoints and collects metrics. These metrics are then sent to Graphite, a time-series database for numeric data. The collected metrics include broker throughput, partition counts, consumer lag, and various other Kafka-specific performance indicators.

The metrics stored in Graphite are visualized using Grafana, a popular open-source platform for monitoring and observability. Grafana allows the creation of customizable dashboards that display real-time and historical data from Graphite.

For auditing, Agoda implemented a custom Kafka auditing system. The primary goal of this auditing system is to ensure data completeness, reliability, accuracy, and timeliness across the entire Kafka pipeline. Here’s how it works:

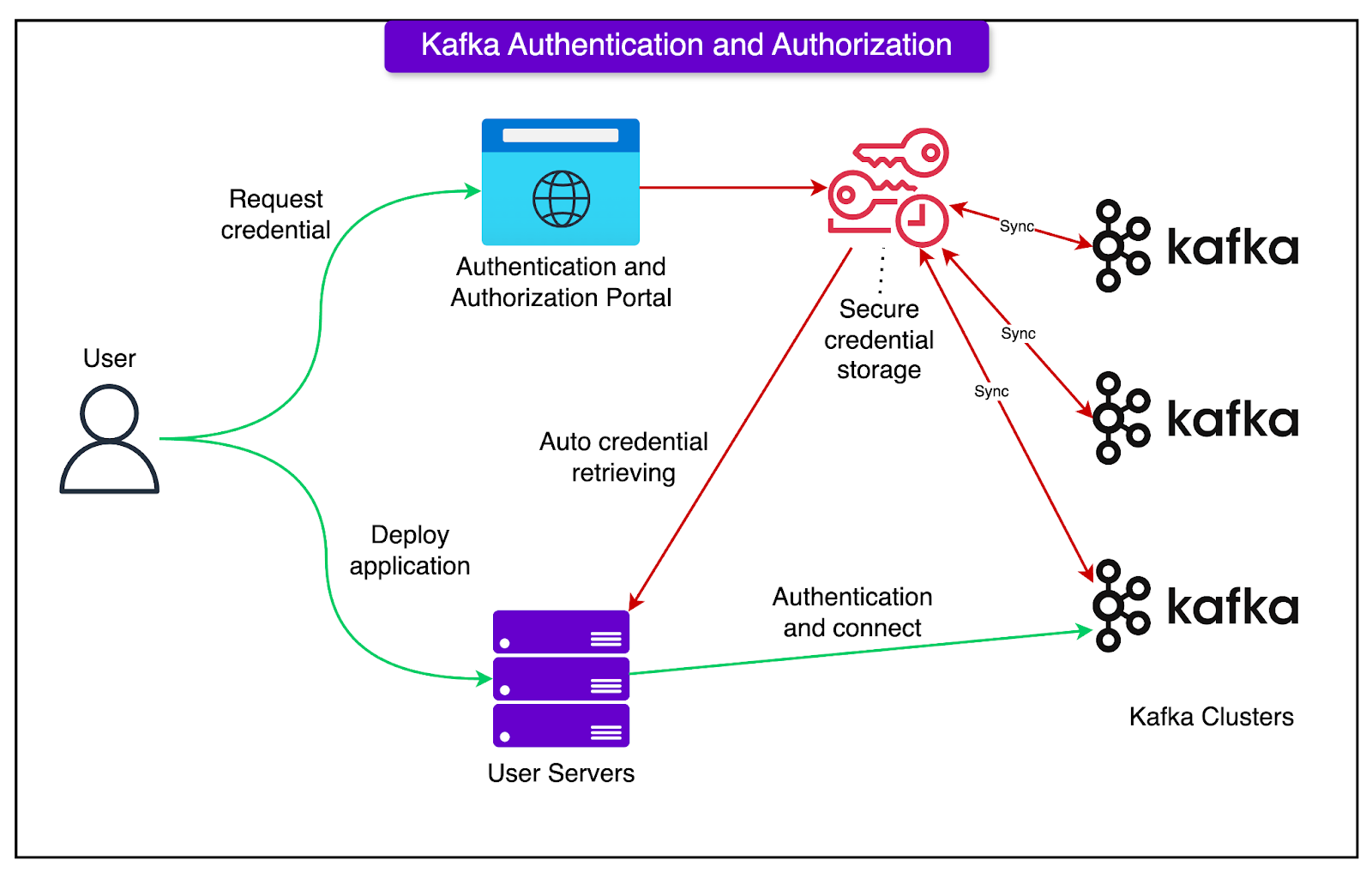

Authentication and ACLs

Initially, Agoda’s Kafka clusters were used primarily for application telemetry data, and authentication wasn’t deemed necessary. As Kafka usage grew exponentially, concerns arose about the inability to identify and manage users who might be abusing or negatively impacting Kafka cluster performance. Agoda completed and released its Kafka Authentication and Authorization system in 2021. The Authentication and Authorization system consists of the following components:

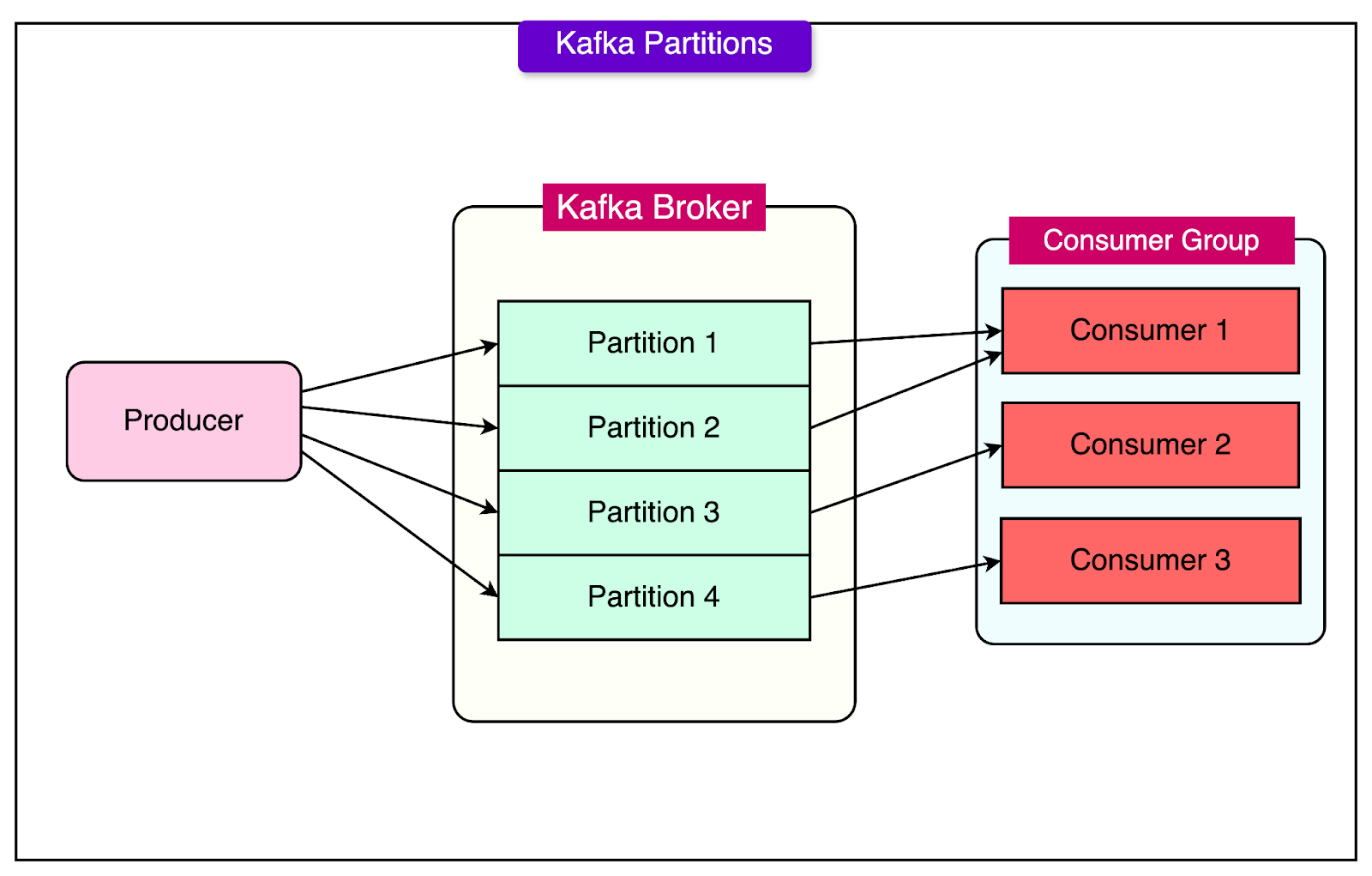

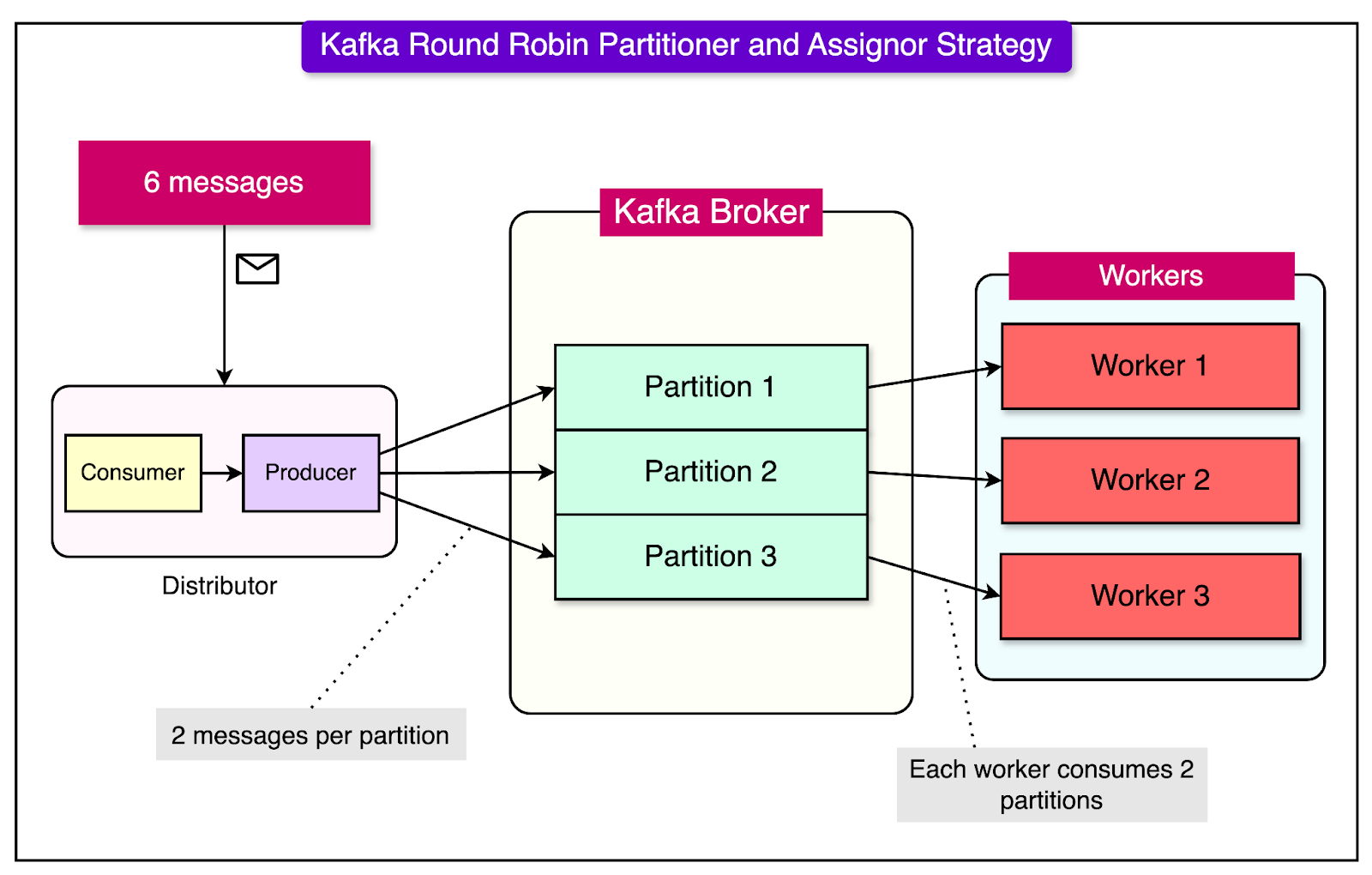

Kafka Load Balancing

Agoda, as an online travel booking platform, aims to offer its customers the most competitive and current prices for accommodations and services from a wide range of external suppliers, including hotels, restaurants, and transportation providers. To achieve this, Agoda's supply system is designed to efficiently process and incorporate a vast number of real-time price updates received from these suppliers. A single supplier can provide 1.5 million price updates and offer details in just one minute. Any delays or failures in reflecting these updates can lead to incorrect pricing and booking failures.

Agoda uses Kafka to handle these incoming price updates. Kafka partitions help them achieve parallelism by distributing the workload across multiple partitions and consumers.

See the diagram below:

The Partitioner and Assignor Strategy

Apache Kafka's message distribution and consumption are heavily influenced by two key strategies: the partitioner and the assignor.

See the diagram below for reference:

Traditionally, these strategies were designed with the assumption that all consumers have similar processing capabilities and that all messages require roughly the same amount of processing time. However, Agoda's real-world scenario deviated from these assumptions, leading to significant load-balancing challenges in their Kafka implementation. There were two primary challenges:

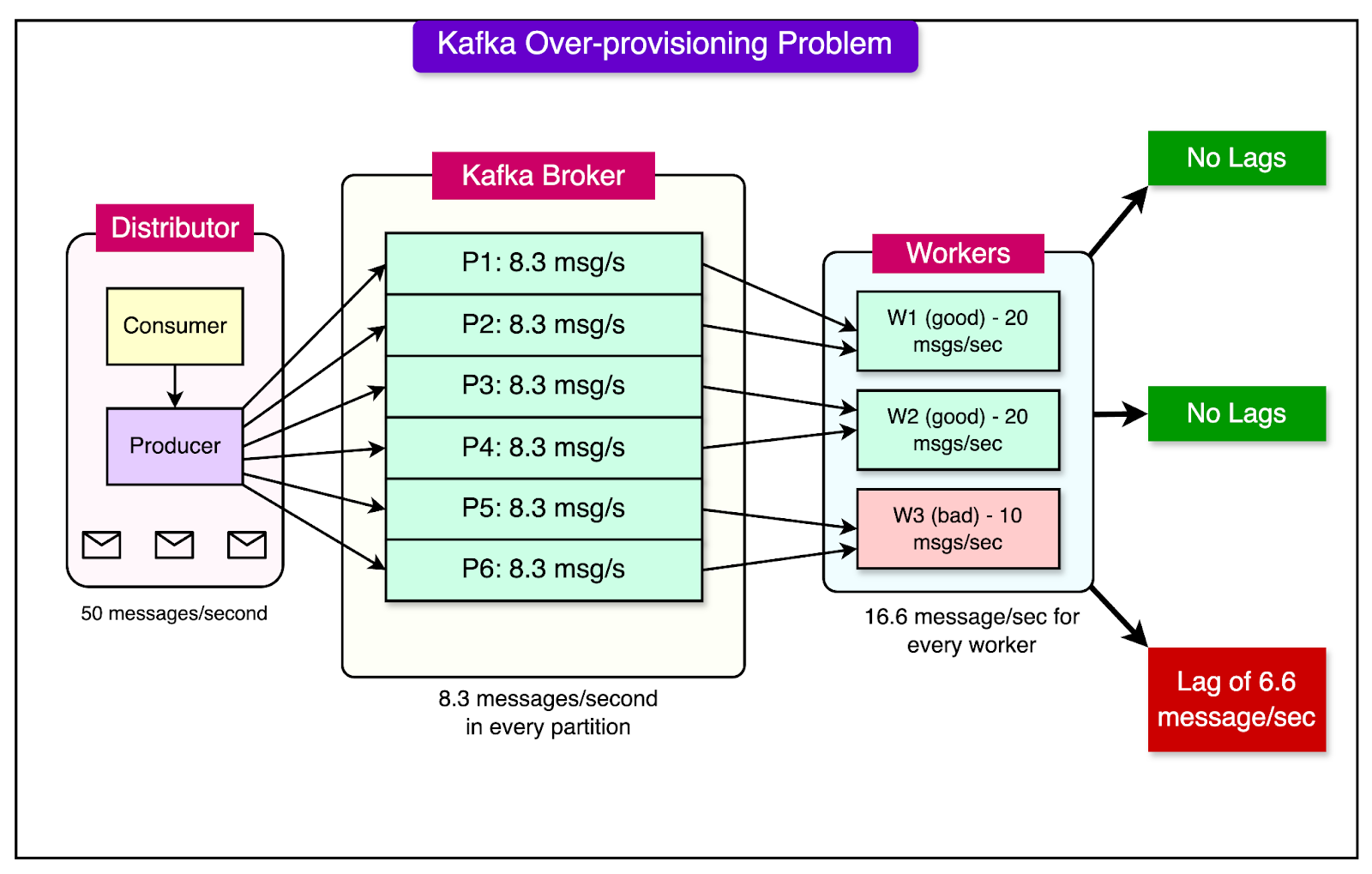

These challenges ultimately resulted in an over-provisioning problem, where resources were inefficiently allocated to compensate for the load imbalances caused by hardware differences and varying message processing demands.

Overprovisioning Problem at Agoda

Over-provisioning means allocating more resources than are needed to handle the expected peak workload. To illustrate this, let's consider a scenario where Agoda's processor service employs Kafka consumers running on heterogeneous hardware:

Theoretically, this setup should be able to process a total of 50 messages per second (20 + 20 + 10). However, when using a round-robin distribution strategy, each worker receives an equal share of the messages, regardless of their processing capabilities. If the incoming message rate consistently reaches 50 messages per second, the following issues arise:

See the diagram below:

To maintain acceptable latency and meet processing SLAs, Agoda would need to allocate additional resources to this setup. In this example, they would have to scale out to five machines to effectively process 50 messages per second: with five workers, round-robin hands each one 10 messages per second, which is the most the slowest worker can sustain. This means they are overprovisioning by two extra machines because the distribution logic fails to consider the varying processing capabilities of the hardware.

A similar scenario can occur when the processing workload for each message varies, even if the hardware is homogeneous. In both cases, this leads to several negative consequences:

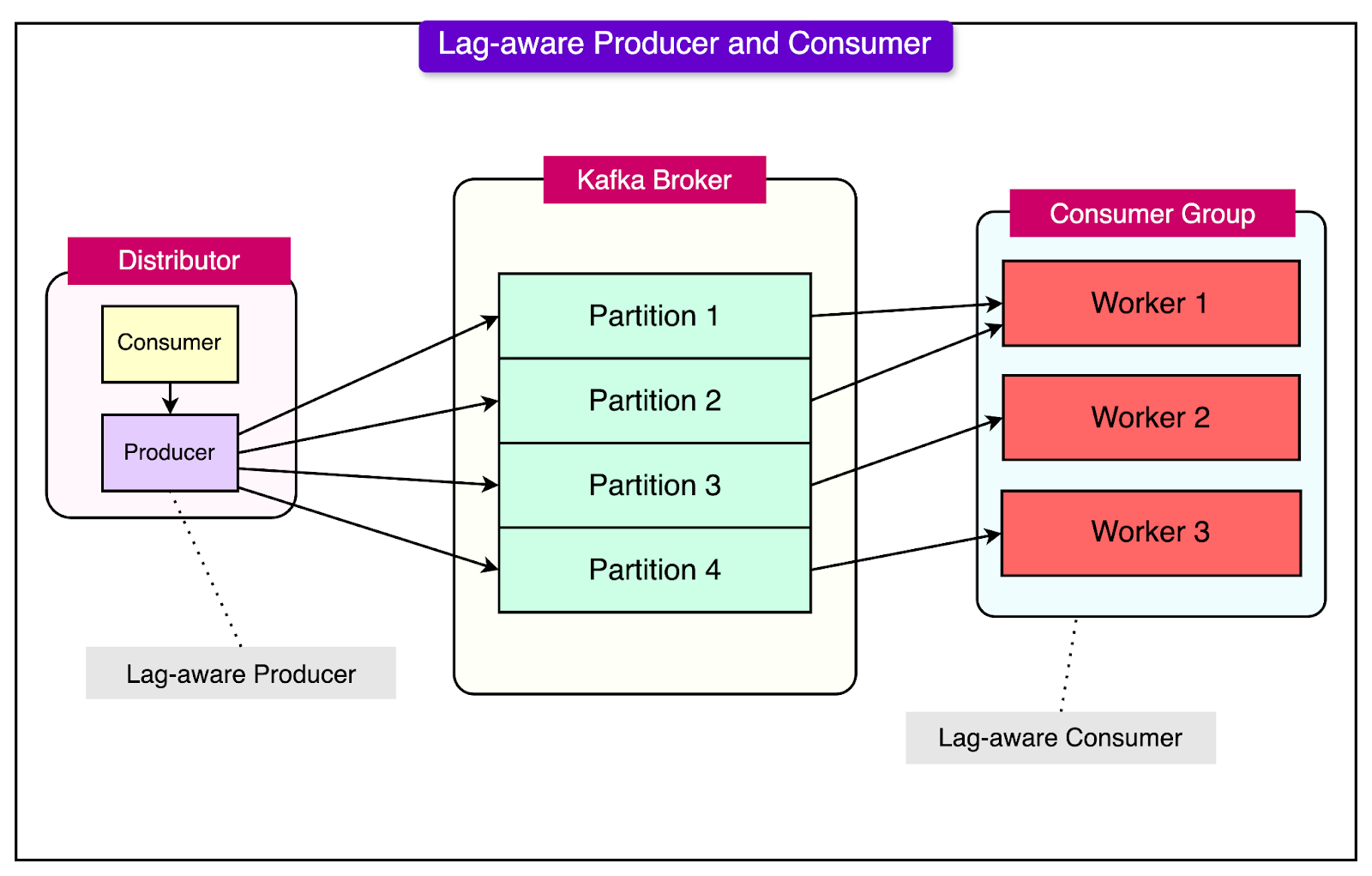
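The arithmetic behind the five-machine figure can be checked with a short sketch. The worker capacities (20, 20, and 10 messages per second) and the incoming rate of 50 come from the example above; the function names are made up for illustration, and the calculation assumes any added machine is at least as fast as the slowest existing one.

```python
import math

def round_robin_share(incoming_rate, n_workers):
    """Per-worker message rate when messages are split evenly."""
    return incoming_rate / n_workers

def backlog_growth(capacities, incoming_rate):
    """Messages per second by which each worker falls behind (0 if it keeps up)."""
    share = round_robin_share(incoming_rate, len(capacities))
    return [max(0.0, share - capacity) for capacity in capacities]

def workers_needed_round_robin(slowest_capacity, incoming_rate):
    """Smallest worker count at which an even share fits the slowest worker.

    Assumes every additional worker can handle at least `slowest_capacity`.
    """
    return math.ceil(incoming_rate / slowest_capacity)

capacities = [20, 20, 10]  # msgs/s per worker, from the example in the text
incoming = 50              # msgs/s arriving at the topic

# With three workers each one receives 50 / 3 msgs/s, so only the
# 10 msgs/s worker accumulates lag while the faster two sit partly idle.
print(backlog_growth(capacities, incoming))

# Round-robin only becomes safe once the even share drops to 10 msgs/s.
print(workers_needed_round_robin(min(capacities), incoming))  # 5
```

The total capacity of the original three workers already equals the incoming rate; the extra two machines exist only to shrink the even share down to what the slowest worker can absorb.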

The round-robin distribution strategy, while ensuring an equal distribution of messages across consumers, fails to account for the heterogeneity in hardware performance and message processing workload.

Agoda’s Dynamic Lag-Aware Solution

To solve this, Agoda adopted a dynamic, lag-aware approach to Kafka load balancing. They didn’t opt for static solutions like weighted load balancing because messages have non-uniform workloads. They implemented two main strategies:

Lag-Aware Producer

A lag-aware producer is a dynamic approach to load balancing in Apache Kafka that adjusts message partitioning based on the current lag information of the target topic. It works as follows:
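As a rough illustration of lag-aware partitioning, here is a minimal sketch in plain Python. It is not Agoda's implementation: the inverse-lag weighting, the `smoothing` parameter, the function names, and the lag figures are all assumptions made for this example. A real producer would obtain lag from its monitoring system and plug the selection logic into the Kafka client's partitioner hook.

```python
import random

def partition_weights(lags, smoothing=1.0):
    """Turn per-partition lag into selection probabilities.

    Higher lag yields a lower weight. `smoothing` is a hypothetical knob
    that avoids division by zero when a partition has no lag.
    """
    inverse = {p: 1.0 / (lag + smoothing) for p, lag in lags.items()}
    total = sum(inverse.values())
    return {p: w / total for p, w in inverse.items()}

def choose_partition(lags, rng=random):
    """Pick a partition at random, biased toward partitions with low lag."""
    weights = partition_weights(lags)
    partitions = list(weights)
    return rng.choices(partitions, weights=[weights[p] for p in partitions], k=1)[0]

# Invented lag snapshot: partition 2 is far behind the others.
lags = {0: 10, 1: 10, 2: 500, 3: 10}

rng = random.Random(42)  # seeded only so repeated runs behave the same
counts = {p: 0 for p in lags}
for _ in range(1000):
    counts[choose_partition(lags, rng)] += 1

print(partition_weights(lags))
print(counts)  # partition 2 receives far fewer messages than the rest
```

Because the weights are recomputed from the latest lag snapshot, a partition that catches up gradually regains its share of traffic without any manual reconfiguration.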

Let's consider an example scenario in Agoda's supply system, where an internal producer publishes task messages to a processor. The target topic has 6 partitions with the following lag distribution:

In this situation, the lag-aware producer would identify that partitions 4 and 6 have significantly higher lag compared to the other partitions. As a result, it would adapt its partitioning strategy to send fewer messages to partitions 4 and 6 while directing more messages to the partitions with lower lag (partitions 1, 2, 3, and 5).

By dynamically adjusting the message distribution based on the current lag state, the lag-aware producer helps to rebalance the workload across partitions, preventing further lag accumulation on the already overloaded partitions.

Lag-Aware Consumer

Lag-aware consumers are a solution employed when multiple consumer groups are subscribed to the same Kafka topic, making lag-aware producers less effective. The process works as follows:
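One way to picture a lag-aware assignment is the sketch below, again in plain Python rather than against the Kafka client API. Everything in it is an assumption for illustration: the greedy heuristic, the lag threshold, the worker names, the capacities, and the lag values. It is not Agoda's assignor. It models two pieces: a worker deciding to step out when its lag is too high, and an assignor spreading partitions over the remaining workers in proportion to their capacity.

```python
def should_unsubscribe(worker_lag, threshold):
    """A worker steps out of the group when its total lag exceeds a threshold."""
    return worker_lag > threshold

def assign_partitions(partition_lag, worker_capacity):
    """Greedy lag-aware assignment.

    Partitions are handed out heaviest-first, each one going to the worker
    whose lag-to-capacity ratio would be lowest after receiving it.
    """
    assignment = {worker: [] for worker in worker_capacity}
    load = {worker: 0.0 for worker in worker_capacity}
    for partition in sorted(partition_lag, key=partition_lag.get, reverse=True):
        lag = partition_lag[partition]
        target = min(
            worker_capacity,
            key=lambda w: (load[w] + lag) / worker_capacity[w],
        )
        assignment[target].append(partition)
        load[target] += lag
    return assignment

# Invented snapshot: partitions 5 and 6 have built up lag on a slow worker.
partition_lag = {1: 10, 2: 10, 3: 10, 4: 10, 5: 300, 6: 300}
capacity = {"worker-1": 20, "worker-2": 20, "worker-3": 10}  # msgs/s, hypothetical

lag_on_worker_3 = partition_lag[5] + partition_lag[6]
if should_unsubscribe(lag_on_worker_3, threshold=500):
    # worker-3 leaves the group; the rebalance covers the remaining workers.
    active = {w: c for w, c in capacity.items() if w != "worker-3"}
else:
    active = capacity

print(assign_partitions(partition_lag, active))
```

In this run the two lagging partitions end up on different workers, so neither remaining worker inherits the whole backlog.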

Let's illustrate this with an example. Suppose Agoda's Processor service has three consumer instances (workers) that are consuming messages from six partitions of a topic:

If Worker 3 happens to be running on older, slower hardware compared to the other workers, it may struggle to keep up with the message influx in Partitions 5 and 6, resulting in higher lag. In this situation, Worker 3 can proactively unsubscribe from the topic, triggering a rebalance event. During the rebalance, the custom assignor evaluates the current lag and processing capacity of each worker and redistributes the partitions accordingly. For example, it may assign Partition 5 to Worker 1 and Partition 6 to Worker 2, effectively relieving Worker 3 of its workload until the lag is reduced to an acceptable level.

Conclusion

Agoda's journey with Apache Kafka has been one of continuous growth, learning, and adaptation. By implementing strategies such as the 2-step logging architecture, splitting Kafka clusters based on use cases, developing robust monitoring and auditing systems, and lag-aware Kafka load balancing, Agoda has successfully managed the challenges that come with handling 1.8 trillion events per day.

As Agoda continues to evolve and grow, its Kafka setup will undoubtedly play a crucial role in supporting the company's ever-expanding needs. The various solutions also offer useful lessons for other software developers in the wider community when it comes to adapting Kafka to their organizational needs.

References:

by "ByteByteGo" <bytebytego@substack.com> - 11:36 - 29 Oct 2024