A Brief History of Scaling Netflix

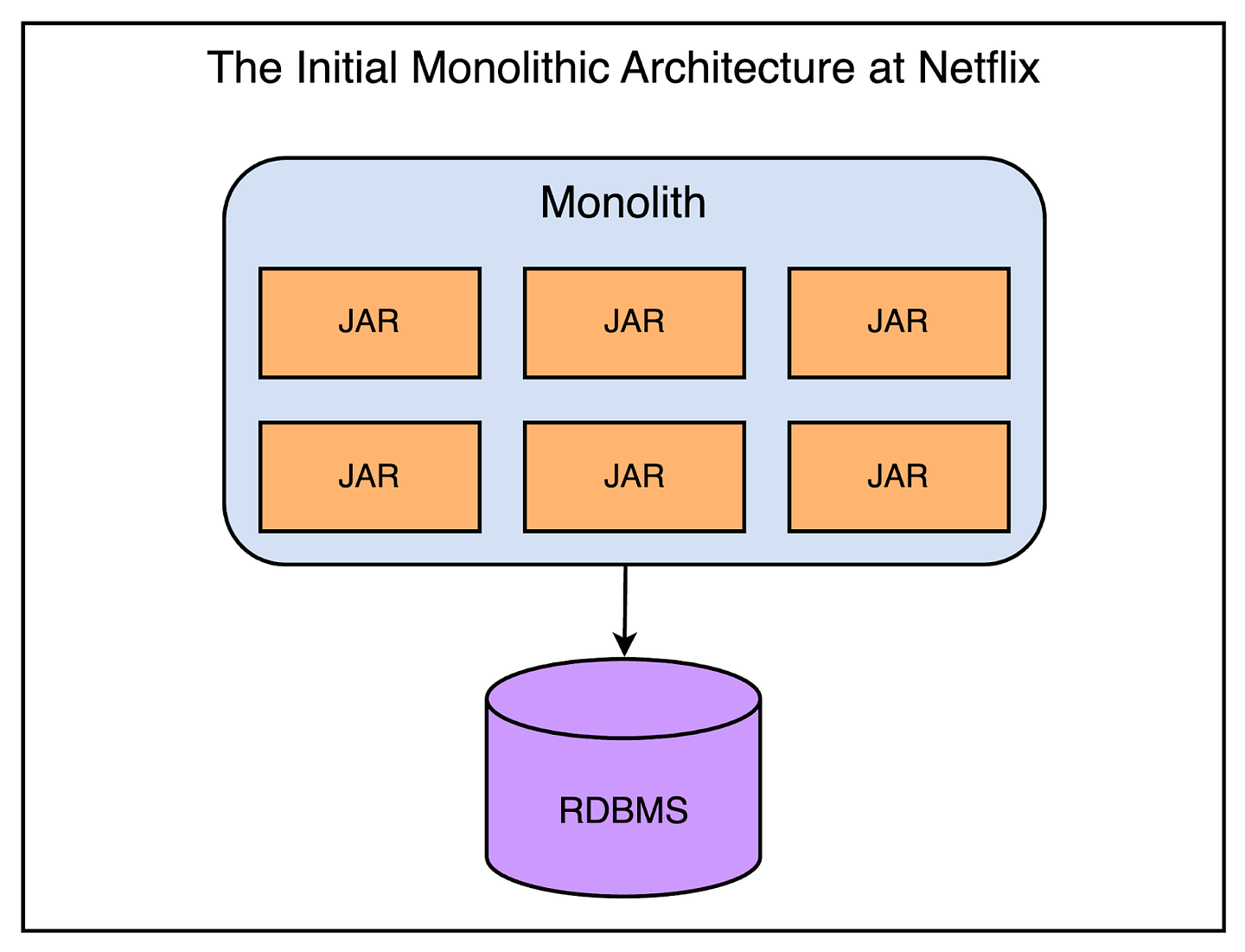

Netflix began its life in 1997 as a mail-based DVD rental business.

Marc Randolph and Reed Hastings came up with the idea for Netflix while carpooling between their office and home in California. Hastings admired Amazon and wanted to emulate its success by finding a large category of portable items to sell over the Internet. DVDs were introduced in the United States around the same time, and the two tested the concept of selling or renting DVDs by mail.

Fast forward to 2024, and Netflix has evolved into a video-streaming service with over 260 million users worldwide. Its impact has been so large that “Netflix being down” is often considered an emergency.

To support this growth, Netflix had to scale its architecture along multiple dimensions. In this article, we attempt to pull back the curtain on some of the most significant scaling challenges they faced and how those challenges were overcome.

The Architectural Origins of Netflix

Like any startup looking to launch quickly in a competitive market, Netflix started as a monolithic application.

The diagram below shows what their architecture looked like in those early days. The application consisted of a single deployable unit with a monolithic Oracle database. The database was a potential single point of failure.

This possibility turned into reality in August 2008. A major database corruption issue left Netflix unable to ship any DVDs to customers for 3 days. It suddenly became clear that they had to move away from a vertically scaled architecture prone to single points of failure.

In response, they made two important decisions:

The move to AWS was a crucial decision. When Netflix launched its streaming service in 2007, EC2 was just getting started and they couldn’t leverage it at the time. Therefore, they built two data centers located right next to each other.

However, building a data center is a lot of work. You have to order equipment, wait for it to arrive, and install it. Before you finish, you have run out of capacity again and need to go through the whole cycle once more. To cut through this cycle, Netflix went for a vertical scaling strategy, which led to their early system architecture being modeled as a monolithic application.

The outage described earlier taught Netflix one critical lesson: building data centers wasn’t their core capability. Their core capability was delivering video to subscribers, and their effort was far better spent improving that.

This prompted the move to AWS with a design approach that could eliminate single points of failure. It was a mammoth decision for the time, and Netflix adopted some basic principles to guide them through the change:

Buy vs Build

Stateless Services

Scale-out vs scale up

Redundancy and Isolation

Automate Destructive Testing

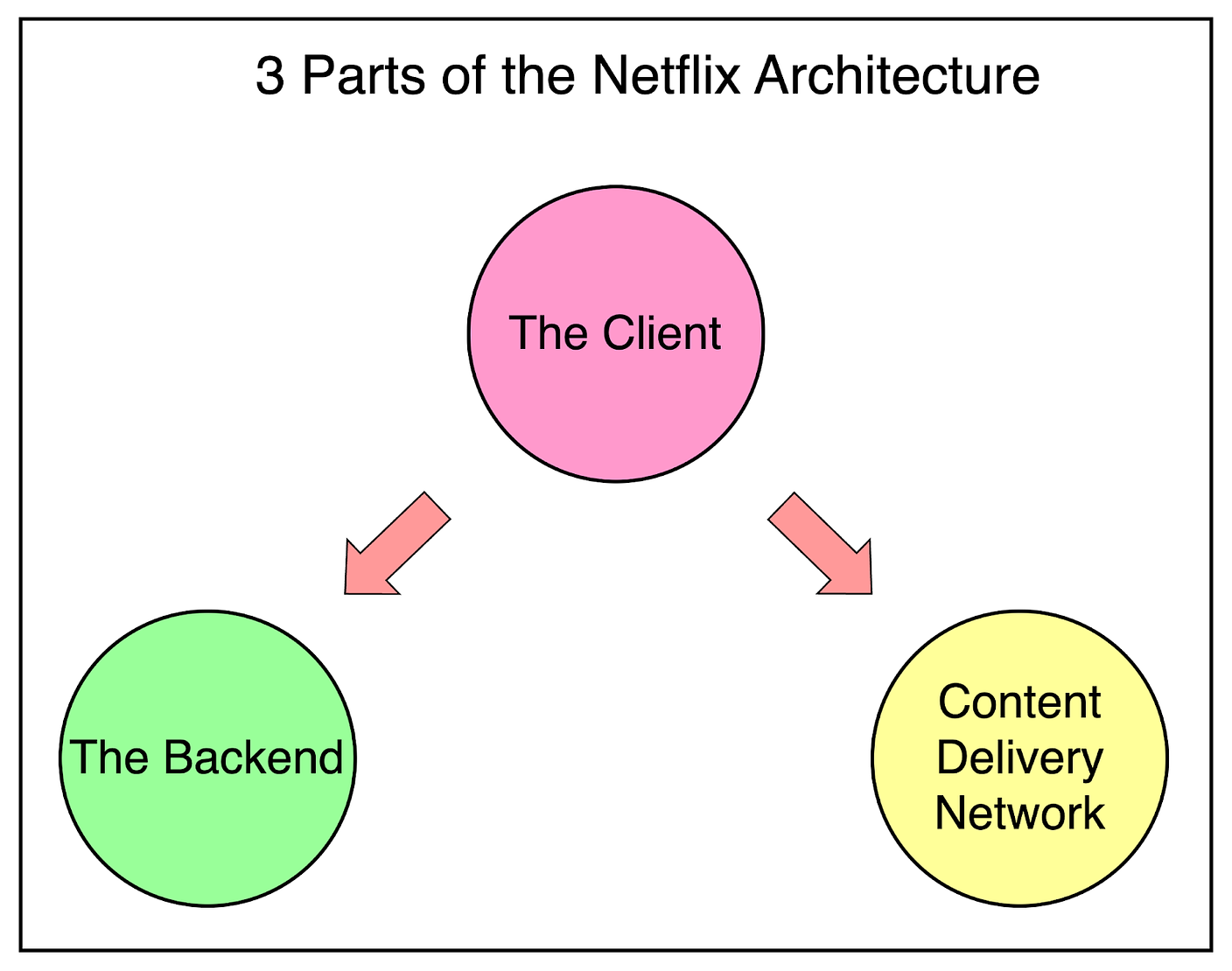

These guiding principles acted as the North Star for every transformational project Netflix took up to build an architecture that could scale with demand.

The Three Main Parts of Netflix Architecture

The overall Netflix architecture is divided into three parts:

The client is the Netflix app on your mobile, a website on your computer, or the app on your Smart TV. It includes any device where users can browse and stream Netflix videos. Netflix controls each client for every device.

The backend is the part of the application that controls everything that happens before a user hits play. It consists of multiple services running on AWS and takes care of functions such as user registration, preparing incoming videos, billing, and so on. The backend exposes multiple APIs that the client uses to provide a seamless user experience.

The third part is the Content Delivery Network, also known as Open Connect. It stores Netflix videos in different locations throughout the world. When a user plays a video, it streams from Open Connect and is displayed on the client.

The important point to note is that Netflix controls all three areas, thereby achieving complete vertical integration over their stack.

Some of the key areas that Netflix had to scale if they wanted to succeed were as follows:

Let’s look at each of these areas in more detail.

Scaling the Netflix CDN

Imagine you’re watching a video in Singapore and the video is being streamed from Portland. That is a huge geographic distance broken up into many network hops. Latency issues are bound to arise in this setup, resulting in a poorer user experience.

If the video content is moved closer to the people watching it, the viewing experience will be a lot better. This is the basic idea behind the use of a CDN at Netflix: put the video as close as possible to the users by storing copies throughout the world. When a user wants to watch a video, stream it from the nearest node.

Each location that stores video content is called a PoP, or point of presence. It’s a physical location that provides access to the internet and consists of servers, routers, and other networking equipment.

However, it took multiple iterations for Netflix to scale their CDN to the right level.

Iteration 1 - Small CDN

Netflix debuted its streaming service in 2007. At the time, it had over 35 million members across 50 countries, streaming more than a billion hours of video each month.

To support this usage, Netflix built its own CDN in five different locations within the United States. Each location contained all of the content.

Iteration 2 - 3rd Party CDN

In 2009, Netflix started to use 3rd-party CDNs. The reason was that 3rd-party CDN costs were coming down, and it didn’t make sense for Netflix to invest a lot of time and effort in building their own CDN. As we saw, they struggled a lot with running their own data centers.

Moving to a 3rd-party solution also gave them time to work on other higher-priority projects. However, Netflix did spend a lot of time and effort developing smarter client applications that adapt to changing network conditions. For example, they developed techniques to switch streaming to a different CDN when that would give a better result.

Such innovations allowed them to provide their users with the highest quality experience even in the face of errors and overloaded networks.

Iteration 3 - Open Connect

Sometime around 2011, Netflix realized that they were operating at a scale where a dedicated CDN was important to maximize network efficiency and viewing experience. The streaming business was now the dominant source of revenue, and video distribution was a core competency for Netflix. If they could do it with extremely high quality, it could turn into a huge competitive advantage.

Therefore, in 2012, Netflix launched its own CDN known as Open Connect. To get the best performance, they developed their own computer system for video storage called Open Connect Appliances, or OCAs.

The picture below shows an OCA installation:

An OCA installation is a cluster of multiple OCA servers. Each OCA is a fast server that is highly optimized for delivering large files and is packed with hard disks or flash drives for storing videos. The picture below shows a single OCA server:

The launch of Open Connect CDN had a lot of advantages for Netflix:

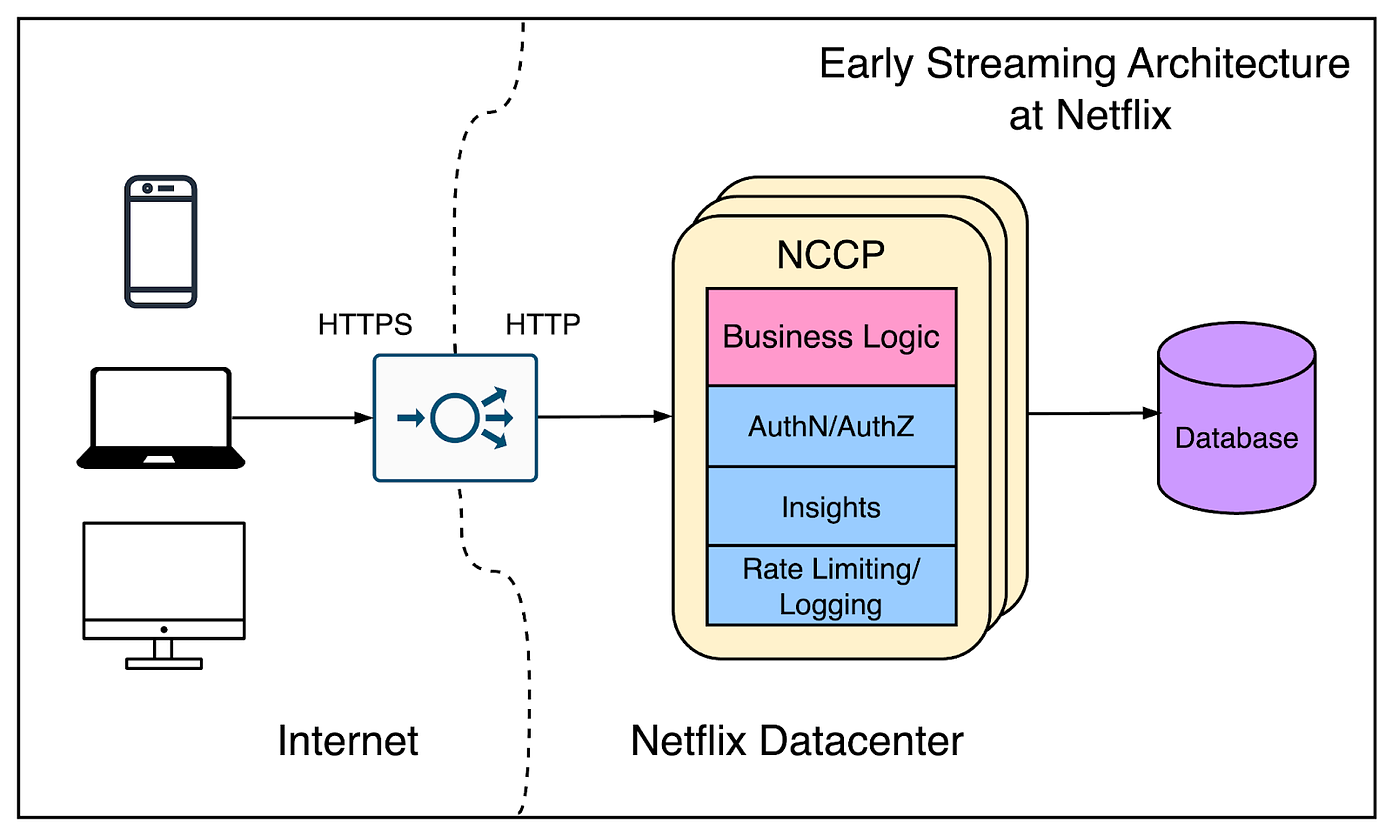

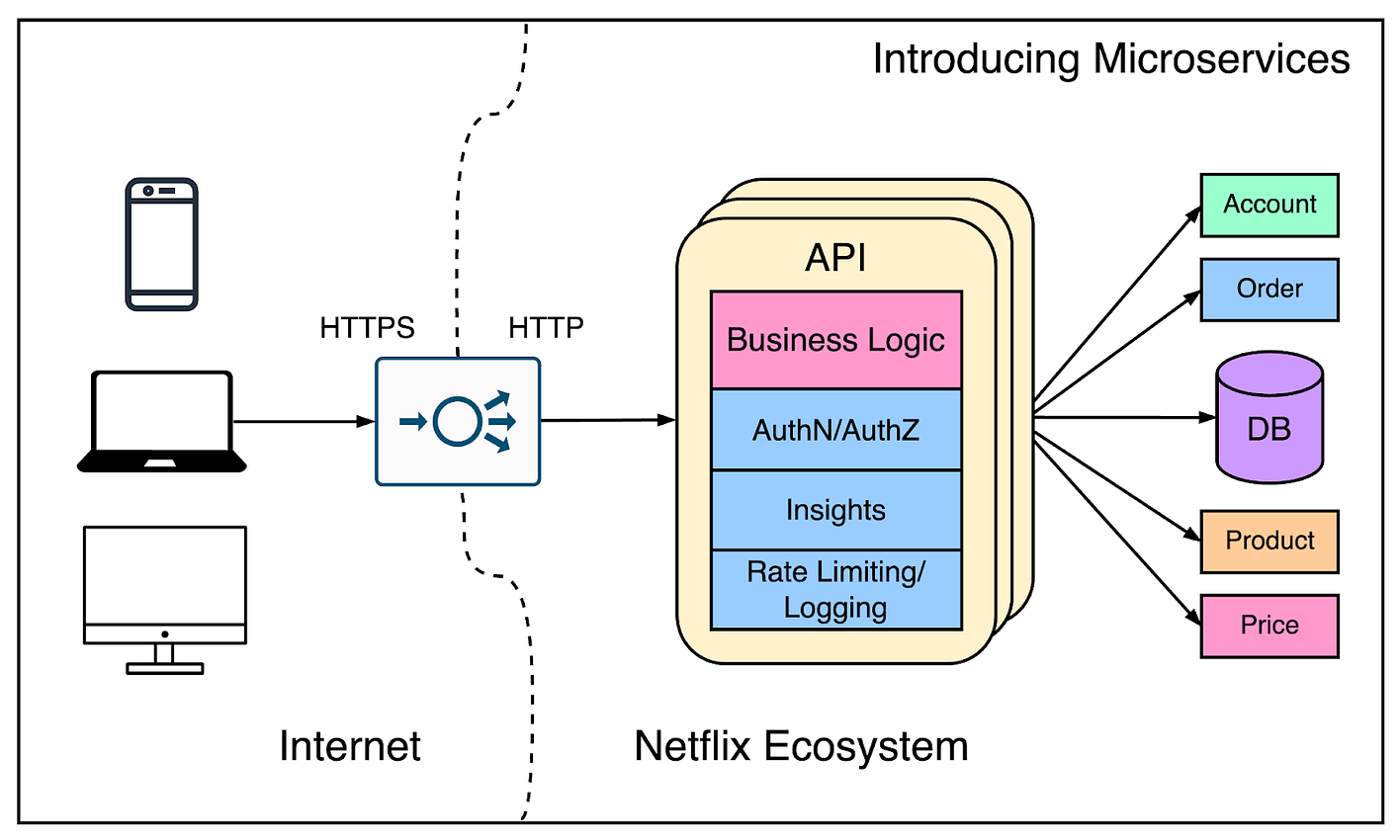
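To make the "stream it from the nearest node" idea from the CDN section a little more concrete, here is a minimal sketch in Python. It is not how Open Connect actually steers traffic: it assumes that geographic distance is a good enough proxy for network closeness, and the `PoP` class, the `POPS` list, the coordinates, and the `is_healthy` callback are all hypothetical names introduced for illustration. The fallback loop also loosely mirrors the client-side idea of switching to a different CDN node when the preferred one is unavailable.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PoP:
    """A hypothetical point of presence with approximate coordinates."""
    name: str
    lat: float
    lon: float


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points on Earth, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def rank_pops(user_lat, user_lon, pops):
    """Return the PoPs ordered from geographically nearest to farthest."""
    return sorted(
        pops,
        key=lambda p: haversine_km(user_lat, user_lon, p.lat, p.lon),
    )


def pick_pop(user_lat, user_lon, pops, is_healthy=lambda pop: True):
    """Pick the nearest PoP that passes the (assumed) health check.

    Returns None if no PoP is healthy.
    """
    for pop in rank_pops(user_lat, user_lon, pops):
        if is_healthy(pop):
            return pop
    return None


# Illustrative locations only; these are not real Open Connect sites.
POPS = [
    PoP("portland", 45.52, -122.68),
    PoP("singapore", 1.35, 103.82),
    PoP("frankfurt", 50.11, 8.68),
]

if __name__ == "__main__":
    viewer = (1.29, 103.85)  # a viewer located in Singapore
    print(pick_pop(*viewer, POPS).name)
```

In practice a CDN would likely weigh measured latency, link capacity, and which titles each node holds rather than raw distance, so treat the distance function as a stand-in for whatever closeness metric is available.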

Scaling the Netflix Edge

The next critical piece in the Netflix scaling puzzle was the edge.

The edge is the part of a system that’s close to the client. For example, between DNS and a database, DNS is closer to the client and can be thought of as "edgier". Think of it as a degree rather than a fixed value.

The edge is where data from various requests enters the service domain. Since this is where the volume of requests is highest, it is critical to scale the edge.

The Netflix Edge went through multiple stages in terms of scaling.

Early Architecture

The diagram below shows how the Netflix architecture looked in the initial days.

As you can see, it was a typical three-tier architecture. There is a client, an API, and a database that the API talks to. The API application was named NCCP (Netflix Content Control Protocol), and it was the only application exposed to the client. All the concerns were put into this application.

The load balancer terminated TLS and sent plain traffic to the application. The DNS configuration was also quite simple. The idea was that clients should be able to find and reach the Netflix servers.

Such a design was dictated by the business needs of the time. They had money, but not a lot. It was important not to overcomplicate things and to optimize for time to market.

The Growth Phase

As the customer base grew, more features were added. With more features, the company started to earn more money.

At this point, it was important for them to maintain engineering velocity. This meant breaking the monolithic application apart into microservices. Features were taken out of the NCCP application and developed as separate apps with separate data.

However, the logic to orchestrate between the services was still within the API. An incoming request from a client hits the API, and the API calls the underlying microservices in the right order.

The diagram below shows this arrangement:
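As a rough illustration of an API that orchestrates calls to underlying services in a fixed order (a sketch, not a reproduction of the diagram or of Netflix's actual code), consider the following Python example. Every service name, function, field, and URL here is hypothetical, and each "service" is an in-process function standing in for what would be a network call.

```python
from typing import Any, Callable, Dict, List, Tuple

Context = Dict[str, Any]
Step = Tuple[str, Callable[[Context], Context]]


def lookup_member(ctx: Context) -> Context:
    """Hypothetical member service: resolve a token to a member id."""
    return {"member_id": "m-" + ctx["token"]}


def check_entitlement(ctx: Context) -> Context:
    """Hypothetical entitlement service: may this member play titles?"""
    return {"entitled": ctx["member_id"] == "m-valid"}


def build_playback_info(ctx: Context) -> Context:
    """Hypothetical playback service: return where to stream from."""
    if not ctx["entitled"]:
        raise PermissionError("member is not entitled to play this title")
    return {"stream_url": f"https://cdn.example/{ctx['title_id']}"}


# The orchestration logic lives in the API layer: it knows the order
# in which the services must be called and passes results along.
PLAY_PIPELINE: List[Step] = [
    ("member", lookup_member),
    ("entitlement", check_entitlement),
    ("playback", build_playback_info),
]


def handle_play(request: Context, pipeline: List[Step] = PLAY_PIPELINE) -> Context:
    """Handle a play request by calling each service in order."""
    ctx: Context = dict(request)
    trace: List[str] = []
    for name, call in pipeline:
        ctx.update(call(ctx))  # each step sees earlier results
        trace.append(name)
    ctx["trace"] = trace
    return ctx


if __name__ == "__main__":
    response = handle_play({"token": "valid", "title_id": "t1"})
    print(response["trace"], response["stream_url"])
```

The point of the sketch is the coupling it exposes: the services are separate, but the API still has to know every step and its ordering, so any change in the flow means changing the API.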

© 2024 ByteByteGo

by "ByteByteGo" <bytebytego@substack.com> - 11:39 - 21 Mar 2024