
A Crash Course in Docker



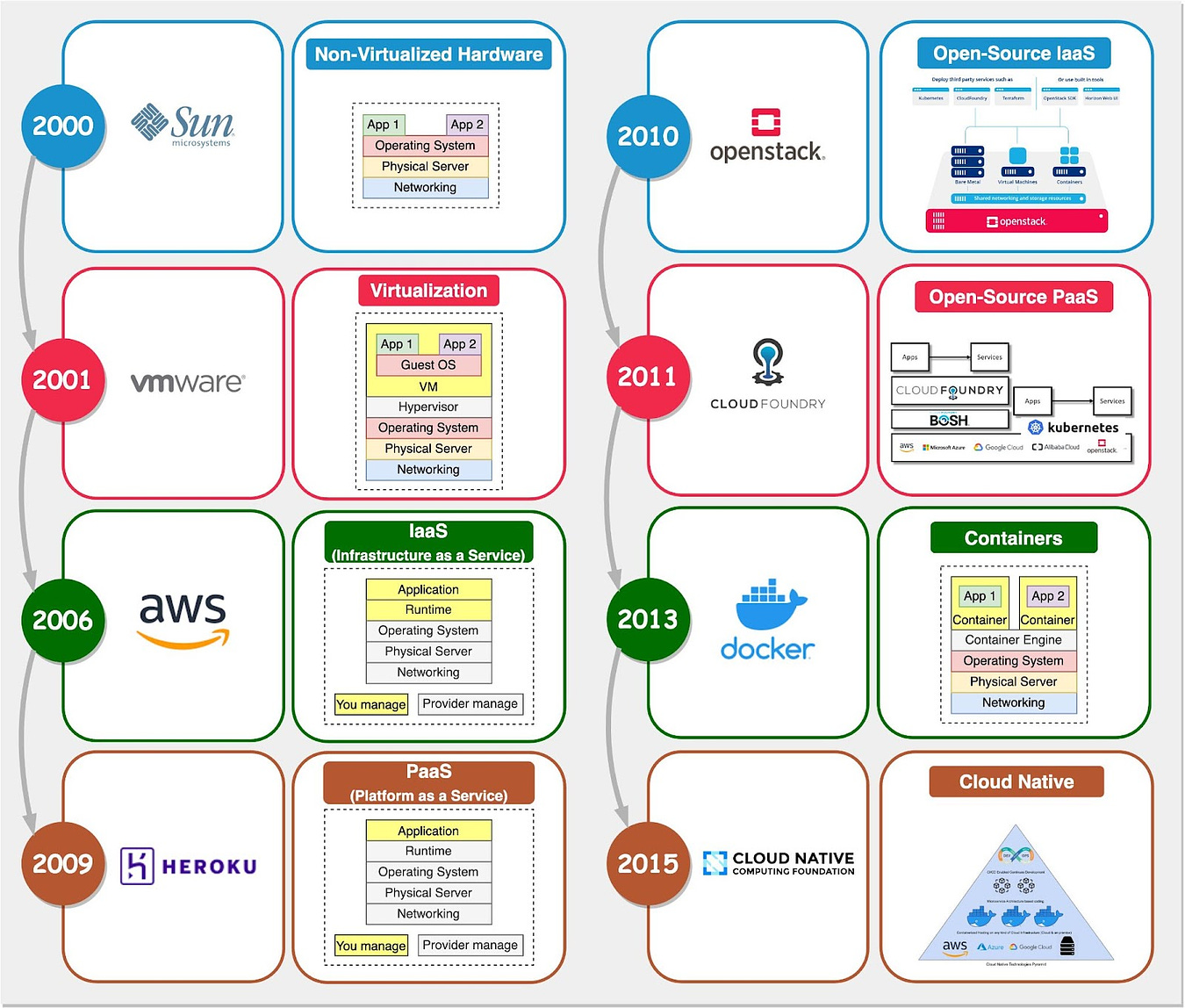

In the old days of software development, getting an application from code to production was slow and painful. Developers struggled with dependency hell as test and production environments differed in subtle ways, so code would mysteriously work in one environment but not the other.

Then along came Docker in 2013, originally created within dotCloud as an experiment with container technology to simplify deployment. Docker was open-sourced that March, and over the next 15 months it emerged as a leading container platform.

In this newsletter, we’ll explore the history of container technology, the specific innovations that powered Docker's meteoric rise, and the Linux fundamentals enabling its magic. We’ll explain what Docker images are, how they differ from virtual machines, and whether you need Kubernetes to use Docker effectively. By the end, you’ll understand why Docker has become the standard for packaging and distributing applications in the cloud.

Tracing the Path from Bare Metal to Docker

In the past two decades, backend infrastructure evolved rapidly, as illustrated in the timeline below:

In the early days of computing, applications ran directly on physical servers (“bare metal”). Teams purchased, racked, stacked, powered on, and configured every new machine. Just getting started was very time-consuming.

Then came hardware virtualization. It allowed multiple virtual machines to run on a single powerful physical server, which enabled more efficient utilization of resources. But provisioning and managing VMs still required heavy lifting.

Next was infrastructure-as-a-service (IaaS) like Amazon EC2. IaaS removed the need to set up physical hardware and provided on-demand virtual resources. But developers still had to manually configure VMs with libraries, dependencies, etc.

Platform-as-a-service (PaaS) like Cloud Foundry and Heroku was the next big shift. PaaS provides a managed development platform to simplify deployment. But inconsistencies across environments led to “works on my machine” issues.

This brought us to Docker in 2013. Docker improved upon PaaS through two key innovations.

Lightweight Containerization

Container technology is often compared to virtual machines, but the two use very different approaches.

A VM hypervisor emulates underlying server hardware such as CPU, memory, and disk to allow multiple virtual machines to share the same physical resources. It installs guest operating systems on this virtualized hardware. Processes running on the guest OS can’t see the host hardware resources or other VMs.

In contrast, Docker containers share the host operating system kernel. The Docker engine does not virtualize OS resources. Instead, containers achieve isolation through Linux namespaces and control groups (cgroups). Namespaces provide separation of processes, networking, mounts, and other resources. cgroups limit and meter usage of resources like CPU, memory, and disk I/O for containers. We’ll revisit this in more depth later.

This makes containers more lightweight and portable than VMs. Multiple containers can share a host and its resources.
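To make namespaces and cgroups a little more concrete, here is a minimal sketch in Python that inspects both for the current process. It assumes a Linux host, where the kernel exposes namespace membership under `/proc/self/ns` and cgroup membership in `/proc/self/cgroup`. The function names `list_namespaces` and `parse_cgroup_membership` are illustrative only and are not part of Docker or any library.

```python
import os
from pathlib import Path


def list_namespaces(ns_dir="/proc/self/ns"):
    """Map each namespace type (pid, net, mnt, ...) to its kernel identifier.

    Returns an empty dict when ns_dir does not exist (e.g. on non-Linux hosts).
    """
    root = Path(ns_dir)
    if not root.is_dir():
        return {}
    namespaces = {}
    for entry in sorted(root.iterdir()):
        if entry.is_symlink():
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[entry.name] = os.readlink(entry)
    return namespaces


def parse_cgroup_membership(text):
    """Parse the content of /proc/<pid>/cgroup.

    Each line has the form "hierarchy-ID:controller-list:cgroup-path".
    On cgroup v2 the controller list is empty, e.g. "0::/user.slice".
    """
    records = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        hierarchy_id, controllers, path = line.split(":", 2)
        controller_list = controllers.split(",") if controllers else []
        records.append((int(hierarchy_id), controller_list, path))
    return records


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:10s} {ident}")
    cgroup_file = Path("/proc/self/cgroup")
    if cgroup_file.exists():
        for record in parse_cgroup_membership(cgroup_file.read_text()):
            print(record)
```

Running the script once on the host and once inside a container should typically show different namespace identifiers and a different cgroup path, even though both processes are served by the same kernel. That shared kernel is the difference from a VM, which boots its own guest OS.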
Containers also start much faster than VMs since there is no full guest OS to boot.

Docker is not “lightweight virtualization” as some would describe it. It uses Linux primitives to isolate processes, not virtualize hardware like a hypervisor. This OS-level isolation is what enables lightweight Docker containers.

Application Packaging

Before Docker’s release in 2013, Cloud Foundry was a widely used open-source PaaS platform. Many companies adopted Cloud Foundry to build their own PaaS offerings. Compared to IaaS, PaaS improves developer experience by handling deployment and application runtimes. Cloud Foundry provided these key advantages:

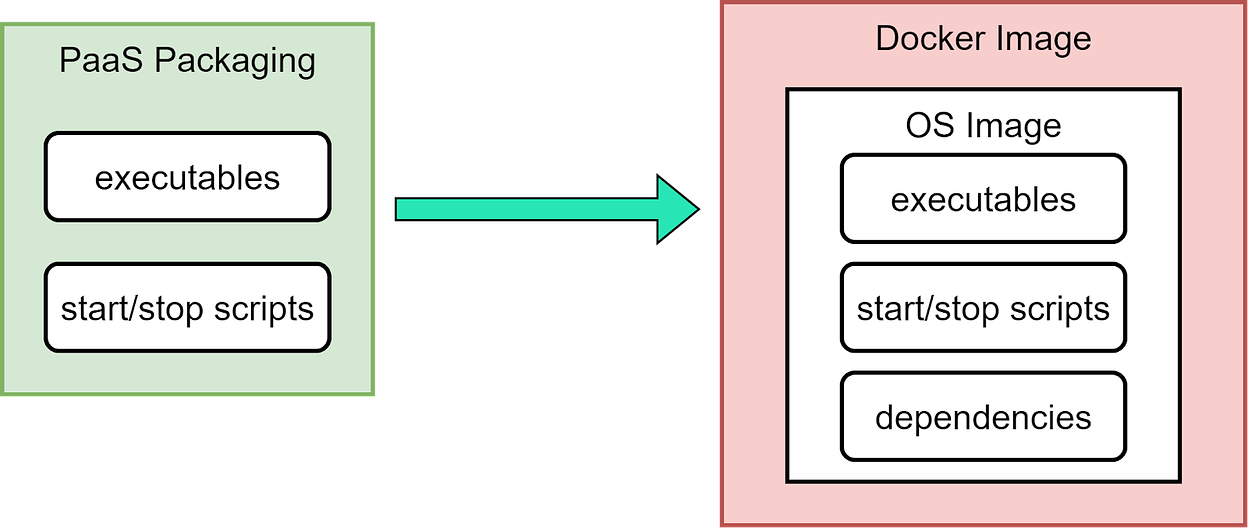

Cloud Foundry relied on Linux containers under the hood to provide isolated application sandbox environments. However, this core container technology powering Cloud Foundry was not exposed as a user-facing feature or highlighted as a key architectural component. The companies offering Cloud Foundry PaaS solutions overlooked the potential of unlocking containers as a developer tool. They failed to recognize how containers could be transformed from an internal isolation mechanism to an externalized packaging format. Docker became popular by solving two key PaaS packaging problems with container images:

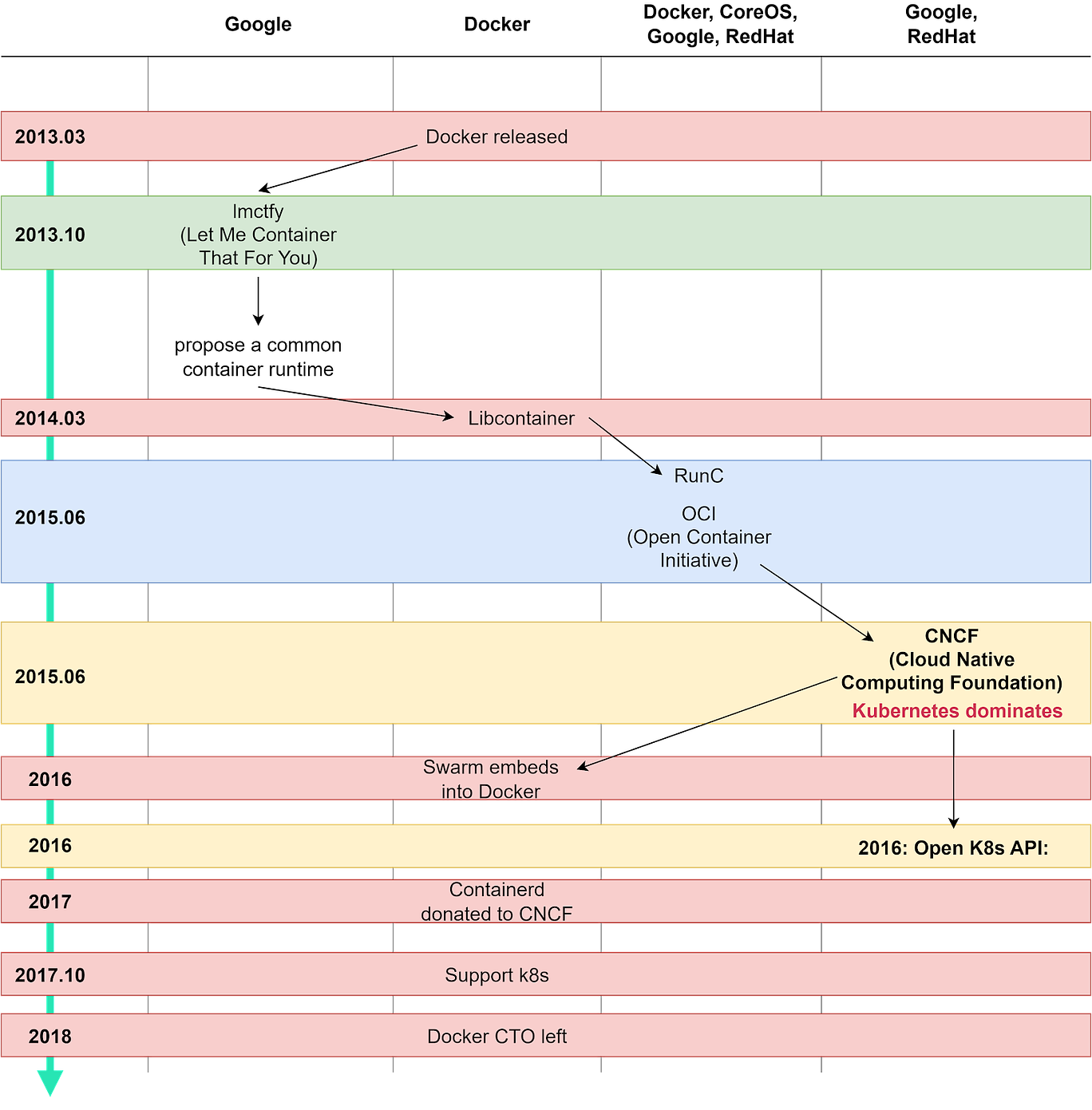

The diagram below shows a comparison.

This elegantly addressed the dependency and compatibility issues that plagued PaaS. But Cloud Foundry did not adapt to support Docker images fast enough, which allowed Docker images to proliferate across cloud computing environments.

From Docker to Kubernetes

Docker won early popularity because it innovated in application packaging and deployment. Its initial success was largely due to this novel method of isolating applications in lightweight containers.

As Docker's popularity grew, the company sought to expand its offerings beyond containerization and set out to become a full PaaS platform. This led to the development of Docker Swarm for cluster management and the acquisition of Fig (later Docker Compose) to enhance orchestration capabilities.

Docker’s aspirations caught the attention of some tech giants. Companies like Google, Red Hat, and other PaaS companies wanted in on this hot new technology.

Let’s see what happened between 2013 and 2018 with the diagram below:

© 2023 ByteByteGo

by "ByteByteGo" <bytebytego@substack.com> - 11:36 - 9 Nov 2023