
EP88: Linux Boot Process Explained

This week’s system design refresher:

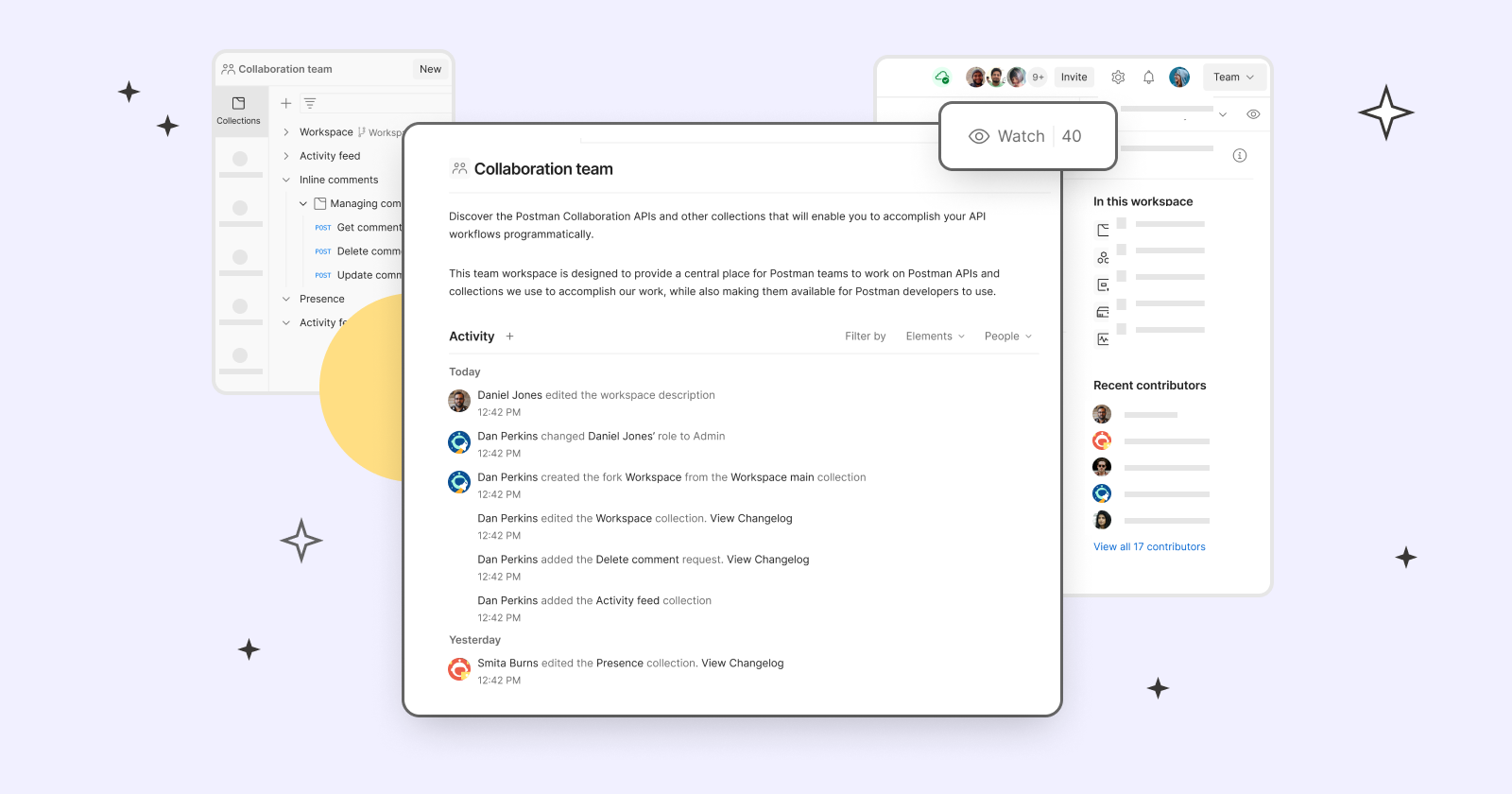

Streamline API Development With Postman Workspaces (Sponsored)

Solve problems together. Postman workspaces are the go-to place for development teams to collaborate and move quickly while staying on the same page. With workspaces, teams can:

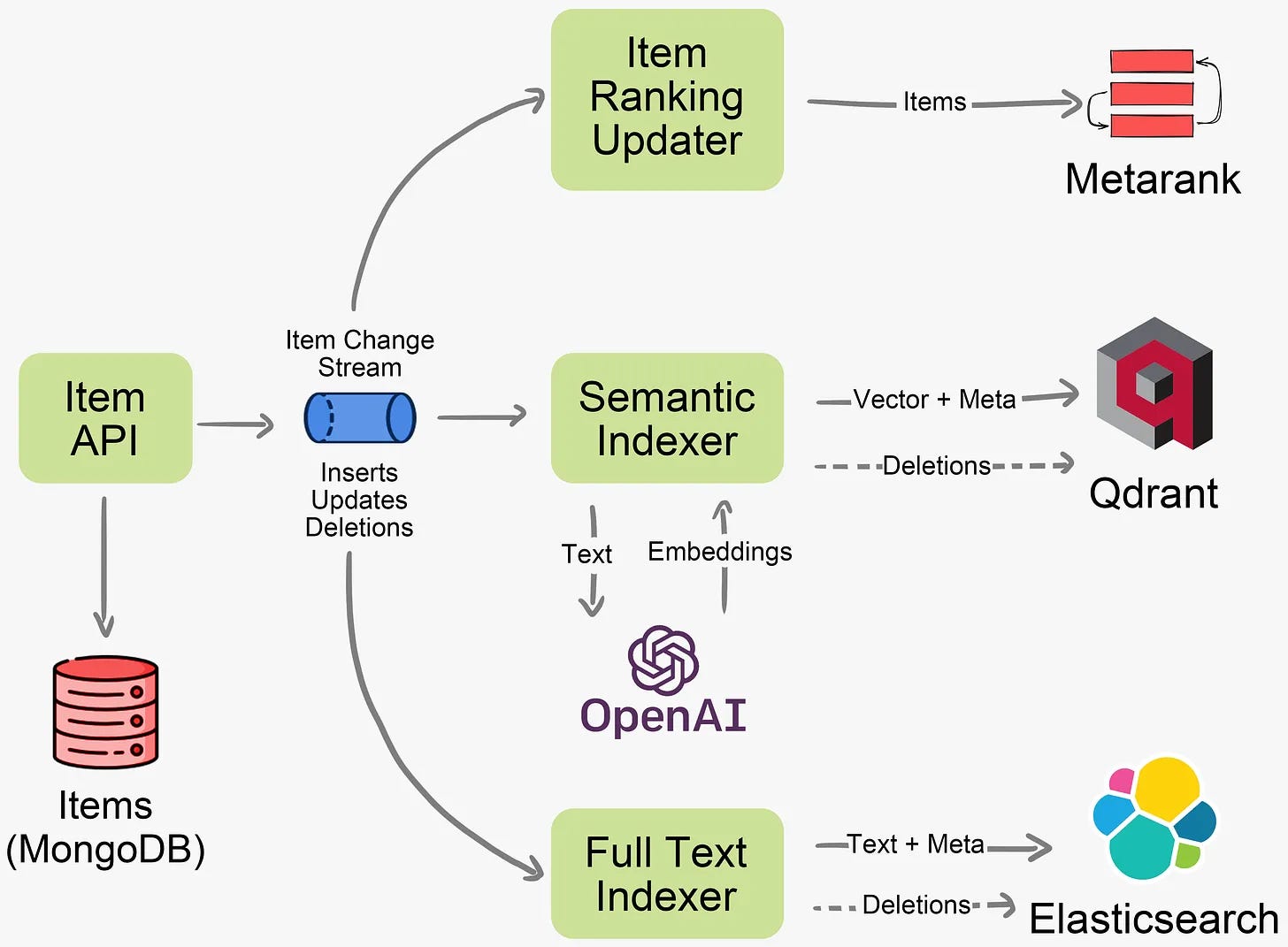

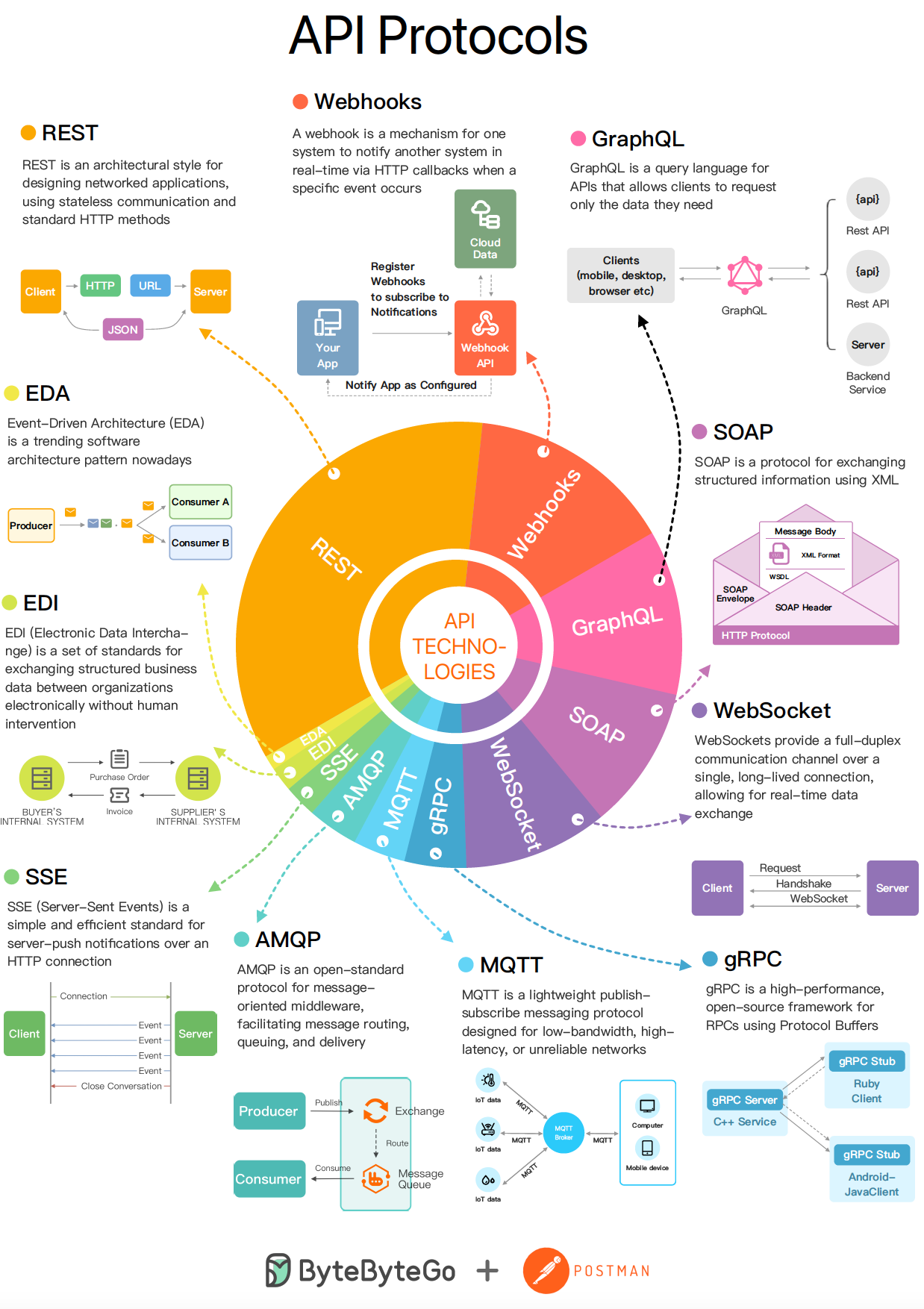

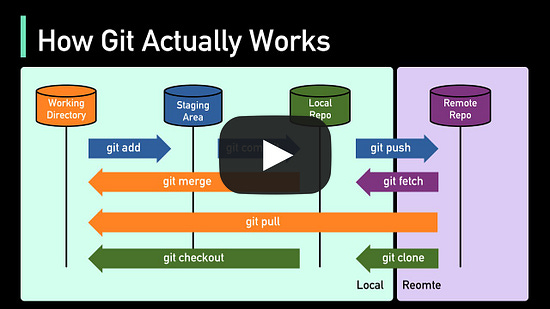

How Git Works: Explained in 4 Minutes

Linux Boot Process Explained

Almost every software engineer has used Linux, but only a handful know how its boot process works :) Let's dive in.

Step 1 - When we turn on the power, the BIOS (Basic Input/Output System) or UEFI (Unified Extensible Firmware Interface) firmware is loaded from non-volatile memory and executes POST (Power-On Self-Test).

Latest articles

If you're not a paid subscriber, here's what you missed this month. To receive all the full articles and support ByteByteGo, consider subscribing:

The Evolving Landscape of API Protocols in 2023

This is a brief summary of the blog post I wrote for Postman. In this blog post, I cover the six most popular API protocols: REST, Webhooks, GraphQL, SOAP, WebSocket, and gRPC. The discussion includes the benefits and challenges associated with each protocol.

Explaining the 4 Most Commonly Used Types of Queues in a Single Diagram

Queues are popular data structures used widely in software systems. The diagram below shows 4 different types of queues we often use.

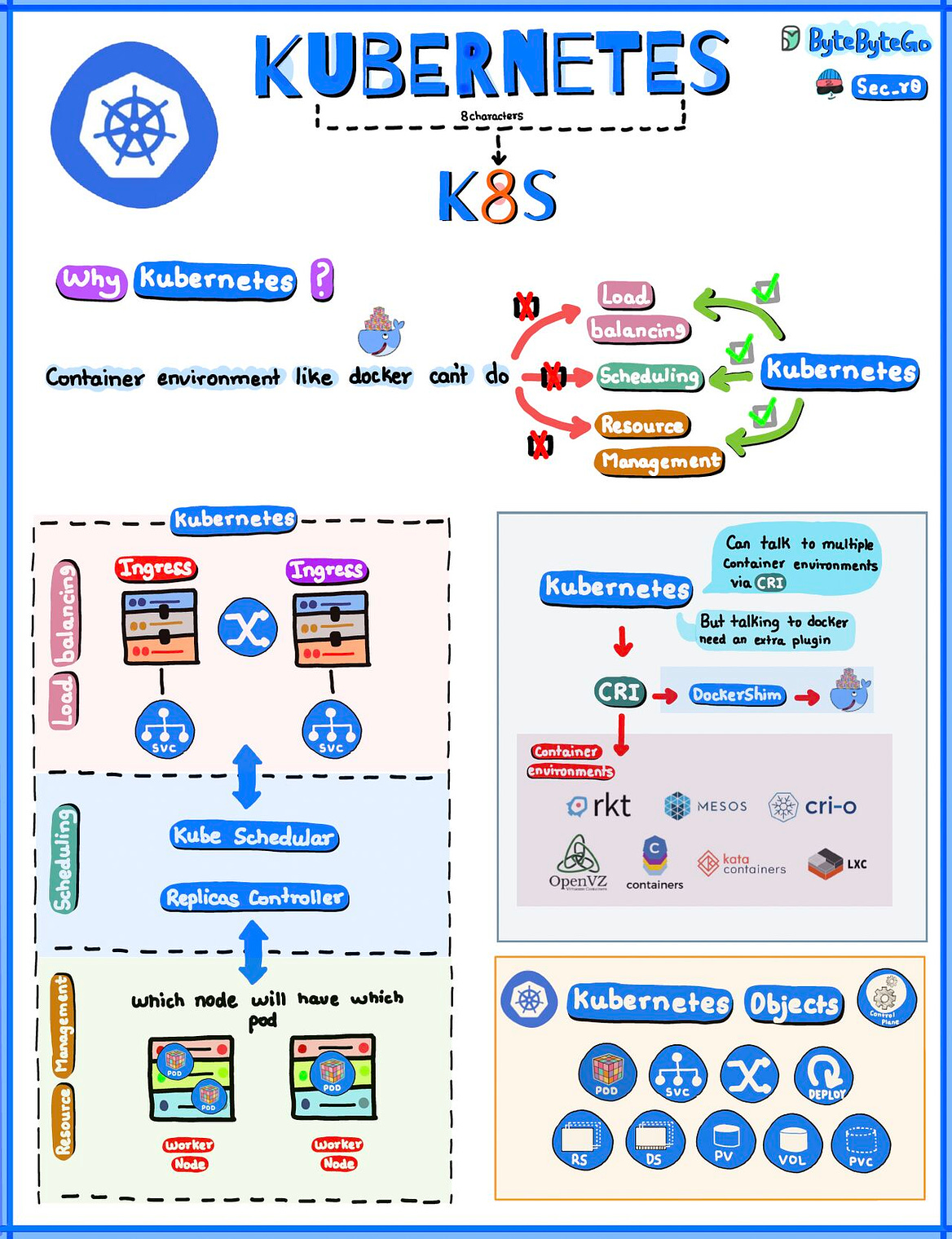
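The diagram is not reproduced in text, so as an assumption we take its four types to be the set this topic is usually presented with: a simple (FIFO) queue, a circular queue, a priority queue, and a double-ended queue (deque). The minimal Python sketch below shows the defining behavior of each, using the standard library where it already covers that behavior; `CircularQueue` is a hypothetical helper written for illustration.

```python
from collections import deque
import heapq

# 1. Simple queue: first in, first out (FIFO).
simple = deque()
simple.append("a")
simple.append("b")
simple.append("c")
first_out = simple.popleft()  # "a" leaves first


# 2. Circular queue (hypothetical helper): a fixed-capacity ring buffer
#    whose head and tail indices wrap around to reuse freed slots.
class CircularQueue:
    def __init__(self, capacity: int) -> None:
        self.buf = [None] * capacity
        self.capacity = capacity
        self.head = 0  # index of the oldest element
        self.size = 0  # number of stored elements

    def enqueue(self, item) -> bool:
        if self.size == self.capacity:
            return False  # full: reject instead of overwriting
        tail = (self.head + self.size) % self.capacity
        self.buf[tail] = item
        self.size += 1
        return True

    def dequeue(self):
        if self.size == 0:
            raise IndexError("dequeue from empty circular queue")
        item = self.buf[self.head]
        self.buf[self.head] = None
        self.head = (self.head + 1) % self.capacity
        self.size -= 1
        return item


ring = CircularQueue(capacity=2)
ring.enqueue(1)
ring.enqueue(2)
ring_full = not ring.enqueue(3)  # True: no free slot left
ring.dequeue()                   # removes 1 and frees slot 0
ring.enqueue(3)                  # tail wraps around into slot 0

# 3. Priority queue: the smallest priority value is served first,
#    regardless of insertion order (heapq implements a min-heap).
pq = []
heapq.heappush(pq, (2, "normal"))
heapq.heappush(pq, (1, "urgent"))
heapq.heappush(pq, (3, "low"))
served_first = heapq.heappop(pq)[1]  # "urgent"

# 4. Double-ended queue: insert and remove at both ends in O(1).
dq = deque([2, 3])
dq.appendleft(1)
dq.append(4)
ends = (dq.popleft(), dq.pop())  # (1, 4)
```

The circular queue here rejects new items when full; some ring buffers overwrite the oldest entry instead, which suits logs and metrics where only recent data matters.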

Over to you: Which type of queue have you used?

A Brief Overview of Kubernetes

Kubernetes, often referred to as K8s, extends far beyond simple container orchestration. It's an open-source platform designed to automate deploying, scaling, and operating application containers.
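One way to picture how Kubernetes automates deploying and scaling is its declarative control loop: you declare a desired state (for example, three replicas), and controllers repeatedly compare it with the observed state and act to close the gap. The toy Python model below illustrates only that idea; `ToyCluster`, `reconcile`, and the pod naming scheme are hypothetical and are not the Kubernetes API.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for the pods a controller observes."""
    pods: set = field(default_factory=set)
    _ids: count = field(default_factory=count)

    def start_pod(self, app: str) -> None:
        self.pods.add(f"{app}-{next(self._ids)}")

    def stop_pod(self, name: str) -> None:
        self.pods.discard(name)


def reconcile(cluster: ToyCluster, app: str, desired_replicas: int) -> list:
    """One pass of a toy control loop: diff desired vs. observed, then act.

    Returns the actions taken so the loop's behavior is easy to inspect.
    """
    running = sorted(p for p in cluster.pods if p.startswith(f"{app}-"))
    actions = []
    # Too few replicas: start new pods (scale up or replace crashed ones).
    for _ in range(desired_replicas - len(running)):
        cluster.start_pod(app)
        actions.append("start")
    # Too many replicas: stop the surplus (scale down).
    for name in running[desired_replicas:]:
        cluster.stop_pod(name)
        actions.append(f"stop {name}")
    return actions


cluster = ToyCluster()
first = reconcile(cluster, "web", 3)        # starts web-0, web-1, web-2
cluster.stop_pod("web-1")                   # simulate a crashed pod
healed = reconcile(cluster, "web", 3)       # starts web-3 to get back to 3
scaled_down = reconcile(cluster, "web", 1)  # stops web-2 and web-3
```

Calling the same function for first deployment, self-healing, and scaling is the point of the declarative model: only the desired count changes. A real controller runs this comparison continuously against the API server and manages Deployment and ReplicaSet objects rather than bare pod names; the sketch keeps only the diff-and-act shape.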

Kubernetes' Container Runtime Interface (CRI) is a significant leap forward: it lets users plug in different container runtimes without recompiling Kubernetes. This flexibility means organizations can choose from a variety of runtimes, such as Docker (through the cri-dockerd adapter since Kubernetes 1.24 removed the built-in dockershim), containerd, CRI-O, and others, depending on their specific needs.

© 2023 ByteByteGo

by "ByteByteGo" <bytebytego@substack.com> - 11:39 - 2 Dec 2023