Evolution of Java Usage at Netflix



Netflix is predominantly a Java shop. Every backend application at Netflix is a Java application. This includes:

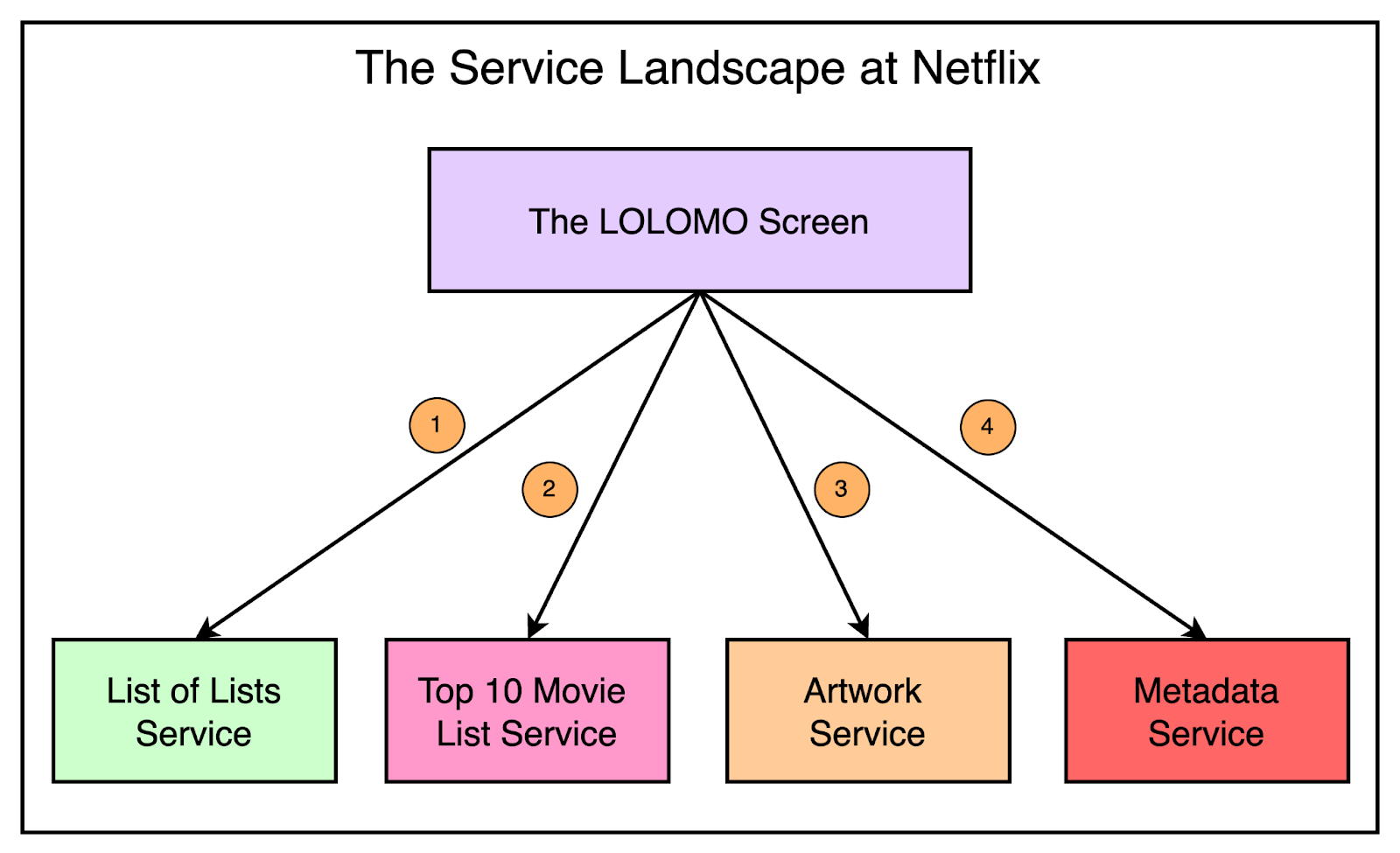

However, this doesn’t mean that the Java stack at Netflix is static. Over the years, it has evolved significantly. In this post, we will look at the evolution of Java usage at Netflix in light of the overall architectural changes that have taken place to support the changing requirements.

The Groovy Era with BFFs

It’s common knowledge that Netflix has a microservice architecture. Every piece of functionality and data is owned by a microservice and there are thousands of microservices. Also, multiple microservices communicate with each other to realize some of the more complex functionalities.

For example, when you open the Netflix application, you see the LOLOMO screen. Here, LOLOMO stands for list-of-list-of-movies and it is essentially built by fetching data from many microservices such as:

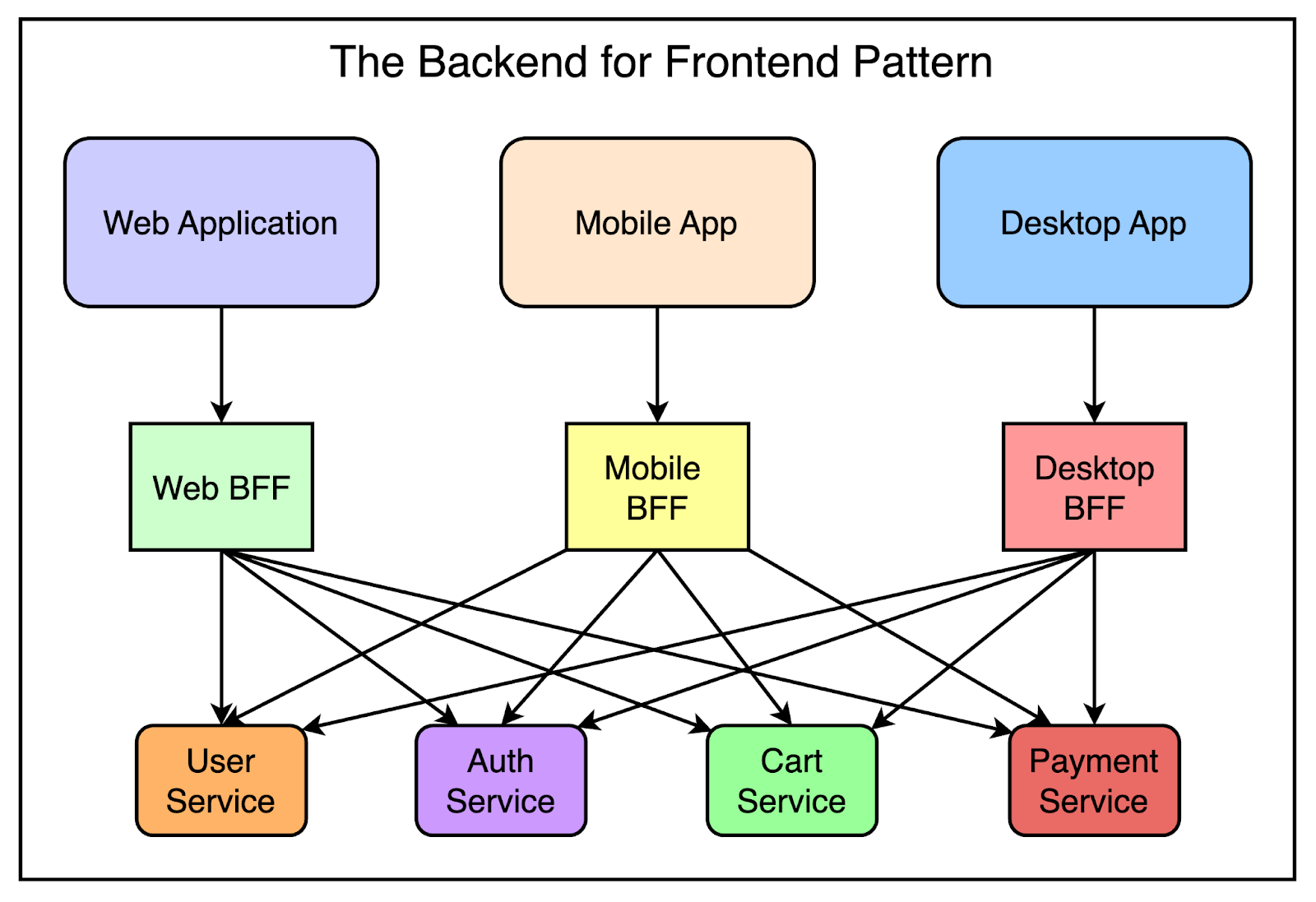

The diagram below shows this situation. It’s quite possible that rendering just one screen on the Netflix app may involve calling 10 services.

However, calling so many services directly from a device (such as a television) or a mobile app is typically inefficient. Making 10 network calls doesn’t scale and results in a poor customer experience. Many streaming apps suffer from such performance issues.

To avoid these issues, Netflix used a single front door for the various APIs. The device makes a call to this front door, which performs the fanout to all the different microservices. The front door acts as a gateway and Netflix used Zuul for this purpose. This approach works because the calls to the multiple microservices take place on the internal network, which is very fast, thereby eliminating the performance implications.

However, there was another problem to solve. The different devices that users can use to access Netflix have subtly different requirements. While Netflix tried to keep a consistent look and feel for the UI and its behavior on every device, each device still has different limitations when it comes to memory or network bandwidth and therefore loads data in slightly different ways. It’s hard to create a single REST API that can work on all these different devices. Some of the problems are as follows:

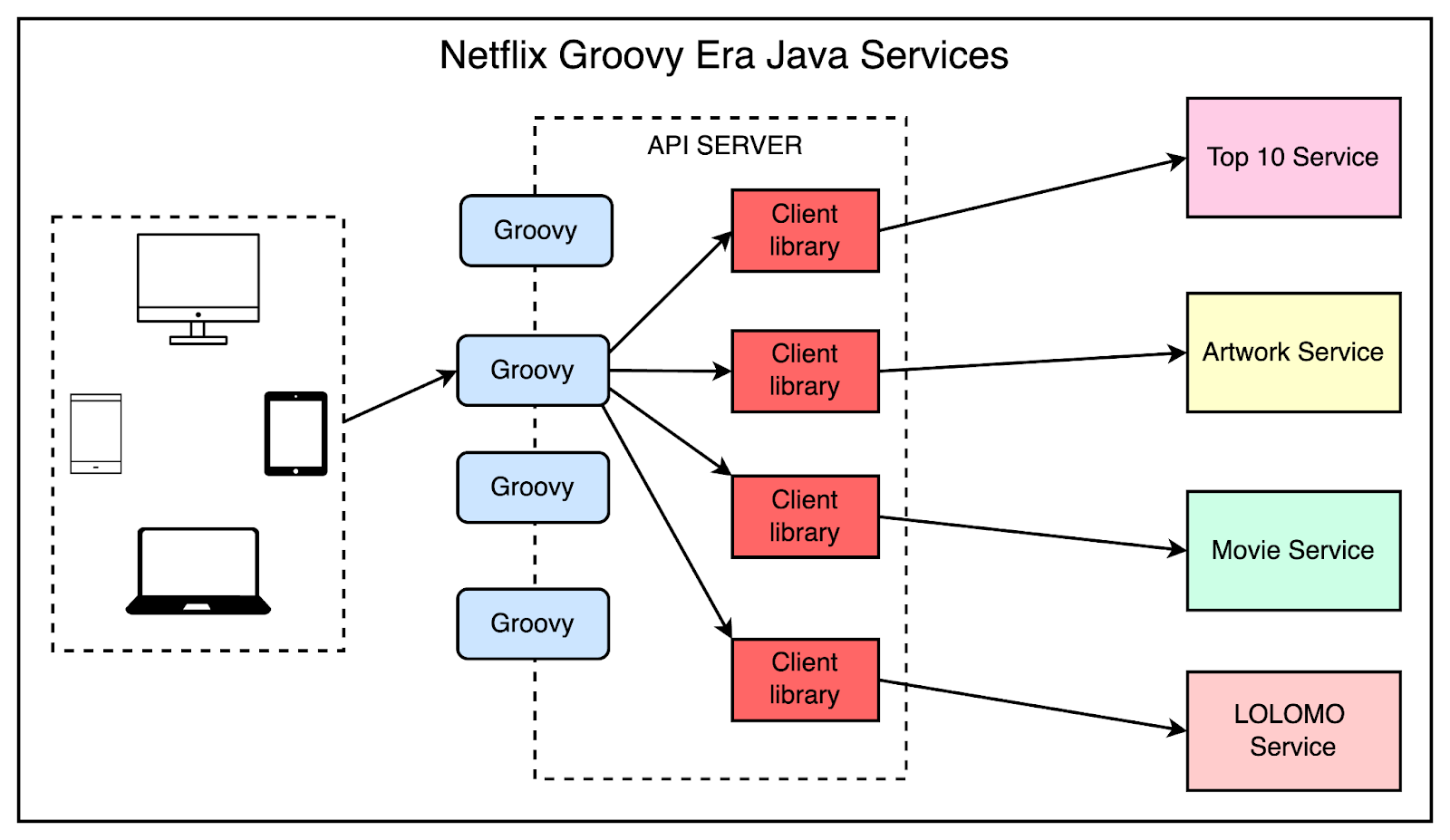

To handle this, Netflix used the backend for frontend (BFF) pattern. In this pattern, every frontend or UI gets its own mini backend. The mini backend is responsible for performing the fanout and fetching the data that the UI needs at that specific point. The diagram below depicts the concept of the BFF pattern.

In the case of Netflix, the BFFs were essentially a Groovy script for a specific screen on a specific device. The scripts were written by UI developers since they knew exactly what data they needed to render a particular screen. Once written, the scripts were deployed on an API server and performed the fanout to all the different microservices by calling the appropriate Java client libraries. These client libraries were wrappers for either a gRPC service or a REST client. The diagram below shows this setup.

The Use of RxJava and Reactive Programming

The Groovy scripts helped perform the fanout. But doing such a fanout in Java is not trivial. The traditional approach was to create a bunch of threads and try to manage the fanout using minimal thread management. However, things got complicated quickly because of fault tolerance. When dealing with multiple services, one of them may fail or not respond quickly enough, and you then have to clean up threads and make sure things still work properly.

This is where RxJava and reactive programming helped Netflix handle fanouts in a better way by taking care of all the thread management complexity. On top of RxJava, Netflix created a fault-tolerance library named Hystrix that took care of failover and bulkheading. Even though reactive programming was complicated, it made a lot of sense for the time and the architecture allowed them to serve most of the traffic needs of Netflix.
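To make the fanout problem concrete, here is a minimal sketch in plain Java. It uses the JDK's CompletableFuture rather than RxJava or Hystrix, so it is not how Netflix implemented it; it only illustrates calling several services in parallel and substituting a fallback when one of them fails or is too slow. The class name, method names, and service names are all hypothetical.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;
import java.util.stream.Collectors;

class FanoutSketch {

    // Run one downstream call asynchronously. If it throws or exceeds the
    // timeout, use the fallback value instead of failing the whole screen.
    // Note: a timed-out call is not cancelled here; its result is just ignored.
    static CompletableFuture<String> callWithFallback(
            Supplier<String> call, String fallback, long timeoutMillis) {
        return CompletableFuture.supplyAsync(call)
                .completeOnTimeout(fallback, timeoutMillis, TimeUnit.MILLISECONDS)
                .exceptionally(error -> fallback);
    }

    // Start every call first, then wait for all of them.
    // Results come back in the same order as the calls.
    static List<String> fanOut(
            List<Supplier<String>> calls, String fallback, long timeoutMillis) {
        List<CompletableFuture<String>> futures = calls.stream()
                .map(call -> callWithFallback(call, fallback, timeoutMillis))
                .collect(Collectors.toList());
        return futures.stream()
                .map(CompletableFuture::join)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Hypothetical stand-ins for client libraries of three microservices.
        Supplier<String> ratings = () -> "ratings-data";
        Supplier<String> artwork = () -> {
            throw new IllegalStateException("artwork service is down");
        };
        Supplier<String> recommendations = () -> {
            try {
                Thread.sleep(2_000); // simulates a service that responds too slowly
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return "recommendations-data";
        };

        List<String> screen = fanOut(List.of(ratings, artwork, recommendations), "unavailable", 500);
        System.out.println(screen);
    }
}
```

In this sketch the failing call and the slow call are both replaced by the fallback, while the healthy call returns its data. Even in this small form, the timeout and error handling have to be wired up per call, which hints at why a dedicated library for this was attractive.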
However, there were some important limitations to this approach:

The Move to GraphQL Federation

Over the last few years, Netflix has been migrating to a completely new architecture when it comes to its Java services. The centerpiece of this new architecture is GraphQL Federation. When you compare GraphQL to REST, the major difference is that GraphQL always has a schema. This schema helps define some key aspects such as:

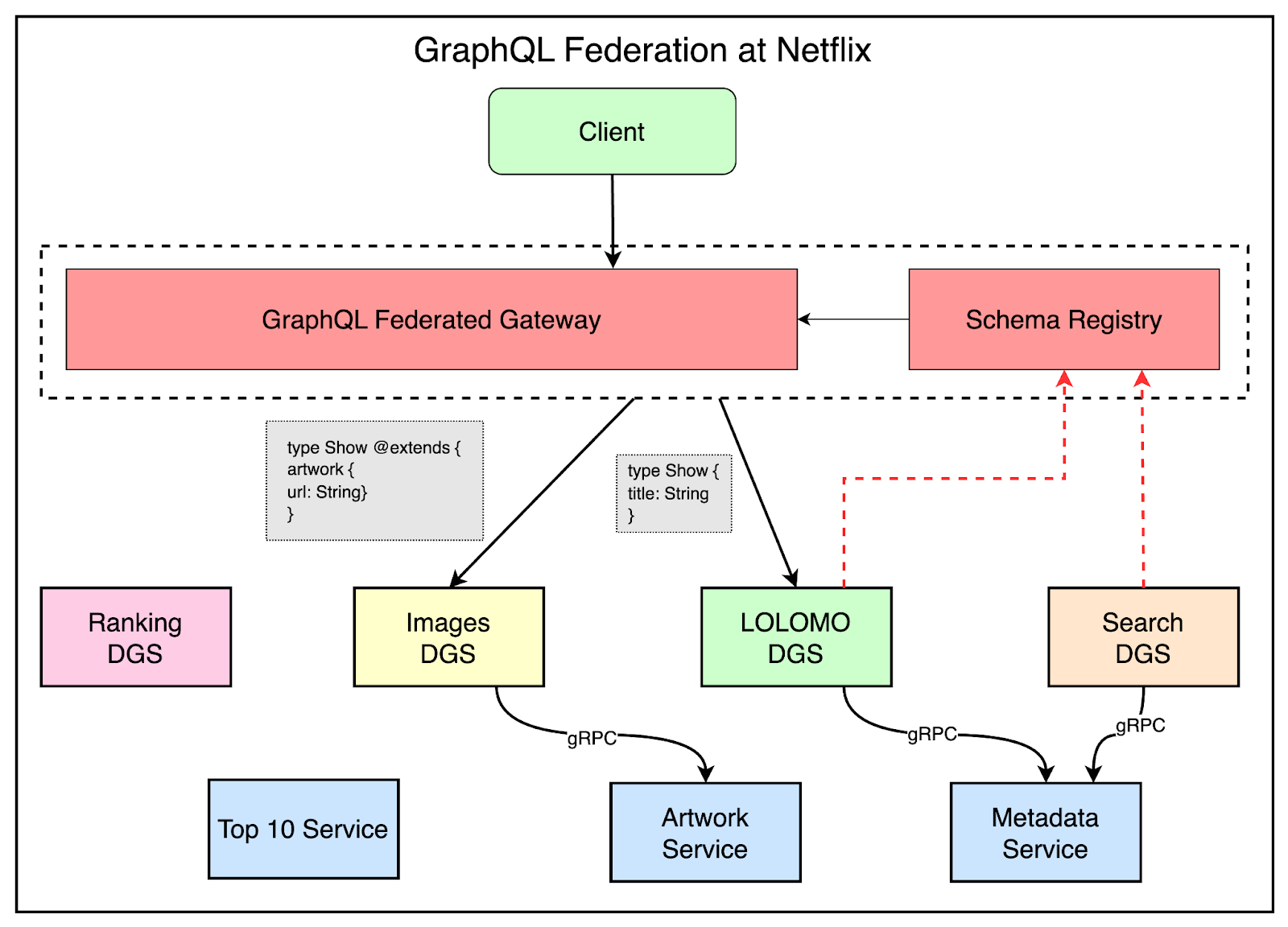

For example, in the case of Netflix, you may have a query for all the shows that returns a show type. A show has a title and also contains reviews, which may be another type.

With GraphQL, the client has to be explicit about the field selection. You can’t just ask for shows and get all the data from shows. Instead, you have to specifically mention that you want to get the title of the show and the score of various reviews. If you don’t ask for a field, you won’t get the field. With REST, it is the opposite: you get whatever the REST service decides to send. While it’s more work for the client to specify the query in GraphQL, it solves the problem of over-fetching, where you get a lot more data than you actually need. This paves the way to create one API that can serve all the different UIs.

To augment GraphQL, Netflix went one step further and used GraphQL Federation to fit it back into their microservices architecture. The diagram below shows the setup with GraphQL Federation.

As you can see, the microservices are now called DGS or Domain Graph Service. DGS is an in-house framework developed by Netflix to build GraphQL services. When they started moving to GraphQL and GraphQL Federation, there wasn’t any Java framework that was mature enough to use at the Netflix scale. Therefore, they built on top of the low-level GraphQL Java framework and augmented it with features like code generation for schema types and support for federation.

At its core, a DGS is just a Java microservice with a GraphQL endpoint and a schema. While there are multiple DGSs, there’s just one big GraphQL schema from the perspective of a device such as the TV. This schema contains all the possible data that can be rendered. The device doesn’t need to worry about all the different microservices that are part of the schema in the backend.

For example, the LOLOMO DGS can define a type show with just the title. Then, the images DGS can extend that type show and add an artwork URL to it. The two different DGSs don’t know anything about each other. All they need to do is publish their schema to the federated gateway. The federated gateway knows how to talk to a DGS because all of them have a GraphQL endpoint. There are several advantages to this setup:
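The field selection and type extension described above can be illustrated with a toy model in plain Java. This is not the DGS framework or the GraphQL Java API, and it skips the GraphQL query language entirely. The class name, the resolver maps, and the `artworkUrl` field name are assumptions made for the example. Each hypothetical service publishes the show fields it owns, a gateway step merges them into one show type, and a query resolves only the fields the client selected.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

class FederationSketch {

    // Toy gateway step: merge the show fields published by each service into
    // one show type. Each map goes from a field name to a resolver that
    // produces the field's value for a given show id.
    static Map<String, Function<Integer, Object>> composeShowType(
            List<Map<String, Function<Integer, Object>>> publishedSchemas) {
        Map<String, Function<Integer, Object>> showType = new LinkedHashMap<>();
        for (Map<String, Function<Integer, Object>> schema : publishedSchemas) {
            for (Map.Entry<String, Function<Integer, Object>> field : schema.entrySet()) {
                if (showType.putIfAbsent(field.getKey(), field.getValue()) != null) {
                    throw new IllegalStateException("Field defined twice: " + field.getKey());
                }
            }
        }
        return showType;
    }

    // Resolve only the fields the client asked for. Unselected fields are
    // never resolved, so the service that owns them is never called.
    static Map<String, Object> queryShow(
            Map<String, Function<Integer, Object>> showType,
            int showId,
            List<String> selectedFields) {
        Map<String, Object> result = new LinkedHashMap<>();
        for (String fieldName : selectedFields) {
            Function<Integer, Object> resolver = showType.get(fieldName);
            if (resolver == null) {
                throw new IllegalArgumentException("Unknown field: " + fieldName);
            }
            result.put(fieldName, resolver.apply(showId));
        }
        return result;
    }

    public static void main(String[] args) {
        // The LOLOMO service defines the show type with just a title.
        Map<String, Function<Integer, Object>> lolomoDgs =
                Map.of("title", id -> "Show #" + id);
        // The images service extends the same type with an artwork URL.
        Map<String, Function<Integer, Object>> imagesDgs =
                Map.of("artworkUrl", id -> "https://example.invalid/artwork/" + id);

        Map<String, Function<Integer, Object>> showType =
                composeShowType(List.of(lolomoDgs, imagesDgs));

        // A client with little memory asks for the title only.
        System.out.println(queryShow(showType, 7, List.of("title")));
        // A richer client asks for both fields through the same schema.
        System.out.println(queryShow(showType, 7, List.of("title", "artworkUrl")));
    }
}
```

Neither service map refers to the other, which mirrors the point that the two DGSs don’t know anything about each other; only the composition step sees both.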

Java Versions at Netflix

Recently, Netflix has migrated from Java 8 to Java 17. After the migration, they saw about 20% better CPU usage on Java 17 versus Java 8 without any code changes. This was because of improvements in the G1 garbage collector. At the scale of Netflix, 20% better CPU utilization is a big deal in terms of cost benefits.

Contrary to popular belief, Netflix doesn’t have its own JVM. They’re just using the Azul Zulu JVM, which is an OpenJDK build.

Overall, Netflix has around 2800 Java applications that are mostly microservices of varying sizes. They also have around 1500 internal libraries. Some of them are actual libraries while many of them are just client libraries sitting in front of a gRPC or REST service.

For the build system, Netflix relies on Gradle. On top of Gradle, they use Nebula, which is a set of open-source Gradle plugins. The most important aspect of Nebula is the resolution of libraries. Nebula provides version locking, which helps make builds reproducible.

More recently, Netflix has been actively testing and rolling out changes with Java 21. Compared with the move from Java 8 to Java 17, going from Java 17 to 21 is significantly easier. Java 21 also provides a few important features such as:

Use of Spring Boot at Netflix

Netflix is famous for its use of Spring Boot. In the last year or so, they have moved off their homegrown Java stack based on Guice and completely standardized on Spring Boot.

Why Spring Boot? It’s the most popular Java framework and has been very well maintained over the years. Netflix found a lot of benefits in leveraging the huge open-source community of the Spring framework, the existing documentation, and the training opportunities that are easily available. The evolution of Spring and its features align very well with the core Netflix principle of “highly aligned, loosely coupled”.

Netflix uses the latest version of OSS Spring Boot and their goal is to stay as close as possible to the open-source community. However, to integrate closely with the Netflix ecosystem and infrastructure, they have also created Spring Boot Netflix, which is a set of modules built on top of Spring Boot. Spring Boot Netflix has support for several things such as:

Conclusion

There’s no singular Netflix stack. The Netflix Java stack has been evolving over the last several years, beginning from in-house frameworks to Groovy-era microservices and more recently, moving to GraphQL Federation. All the changes have been made to solve problems from the previous approach. For example, the move to RxJava was to handle fanouts in a better way and the move to GraphQL Federation was to solve the issues of complexity due to RxJava.

Along with these changes, there has also been a parallel evolution in terms of Java language versions from Java 8 to 17 and now 21+. A lot of it has also been prompted by Spring Boot version 3 finally moving beyond Java 8 and forcing the entire ecosystem to upgrade. These changes have allowed them to build more performant applications that can save CPU costs.

Overall, the theme has been towards standardization of the approach in building microservices across the organization. However, considering the constant challenges faced in operating at their scale while staying ahead of the competition, the evolution will continue.

by "ByteByteGo" <bytebytego@substack.com> - 11:35 - 2 Apr 2024