How Airbnb Powers Personalization With 1M Events Per Second

Disclaimer: The details in this post have been derived from the articles written by the Airbnb engineering team. All credit for the technical details goes to the Airbnb Engineering Team. The links to the original articles and videos are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Modern digital platforms rely on personalization to stay relevant. However, personalizing an experience meaningfully, especially across a product as broad as Airbnb, requires understanding users while they are interacting with the app. The task is difficult for the following reasons:

User Signals Platform (USP) is Airbnb’s answer to these challenges. This platform was built to:

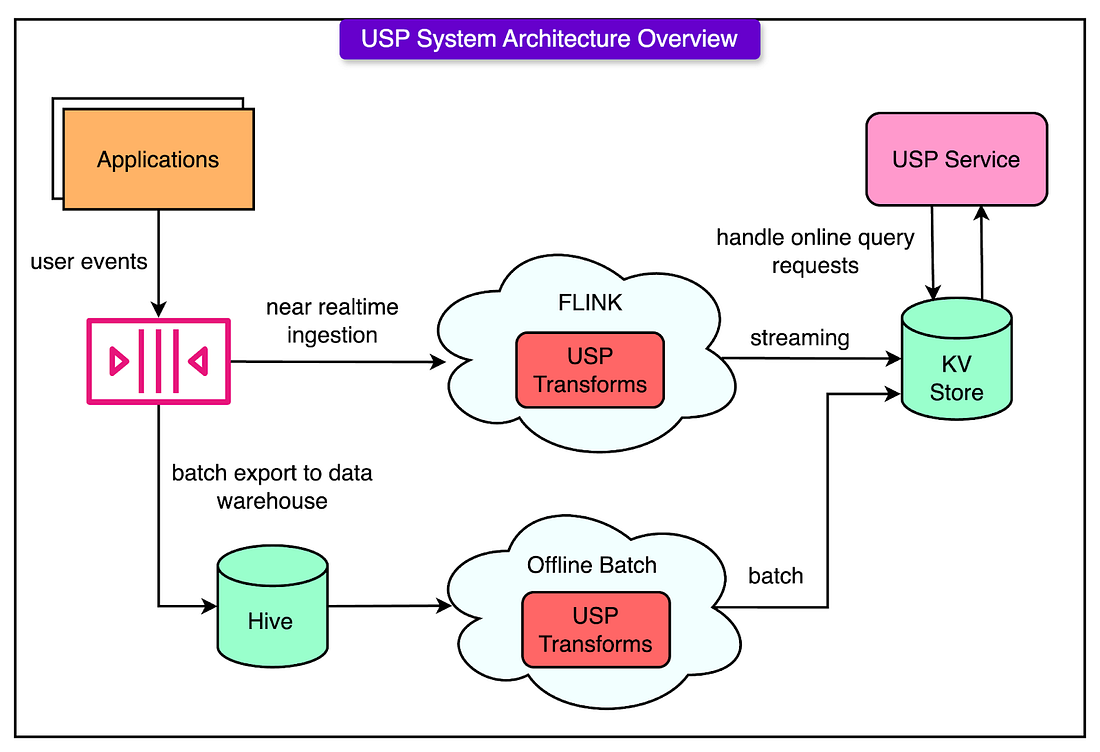

In this article, we’ll look at the architecture of the User Signals Platform along with the challenges the Airbnb engineering team faced while trying to make it a reality.

Architecture Overview

There’s a reason most companies don’t have a robust real-time personalization platform: streaming architecture is hard to get right, especially when you care about both low latency and long-term correctness. The User Signals Platform (USP) achieves both by combining a Lambda-style pipeline with an online query layer. It is built on a few battle-tested primitives (Kafka, Flink, and a KV store) and some disciplined design principles.

See the diagram below for the architecture overview:

At a high level, USP is split into two main components.

1 - Data Pipeline Layer

This is where the heavy lifting happens. The pipeline ingests raw Kafka events, transforms them into structured user signals, and writes them into a versioned KV store. It includes:

This dual-path setup follows the Lambda Architecture model.

2 - Online Serving Layer

Once the data is processed and stored, the team needed a fast way to serve it to downstream services. The online serving layer takes care of this requirement. Here’s what this layer does:

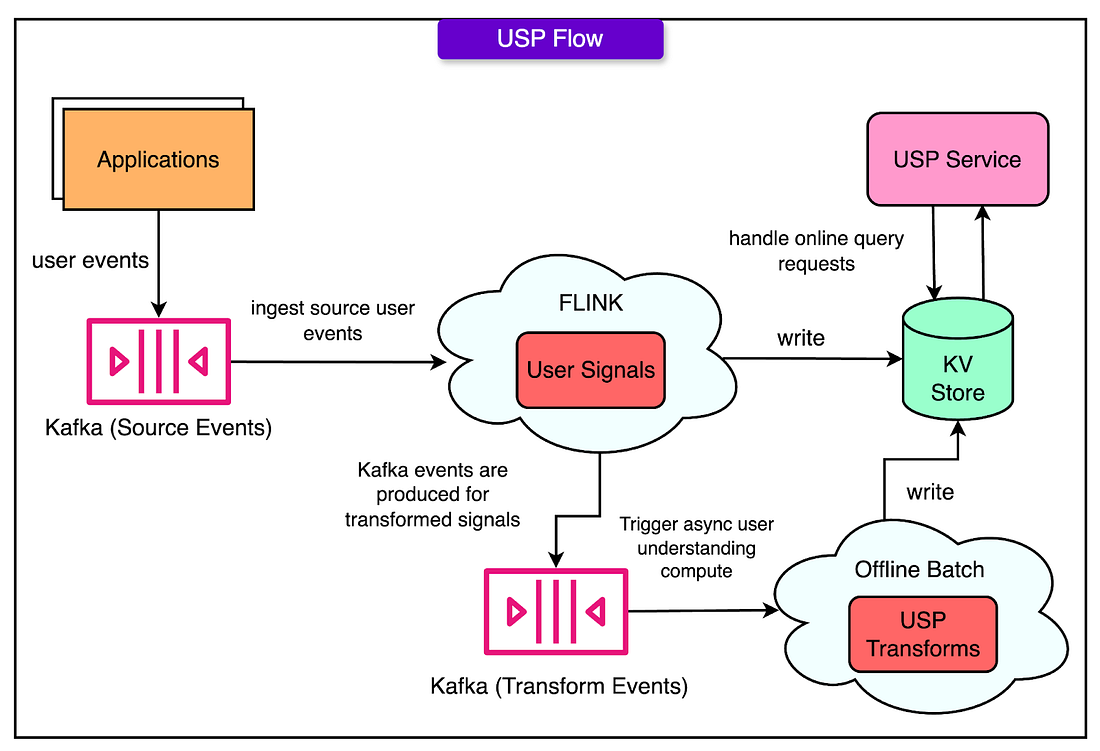
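To make the dual-path idea concrete, here is a minimal in-memory sketch of a Lambda-style pipeline writing into a versioned, append-only store. Everything in it is hypothetical and stands in for pieces the article only names. `Event`, `to_signal`, and `VersionedKV` take the place of Kafka events, the signal transform, and Airbnb's KV store. A counter takes the place of the processing timestamp used as the version. The streaming path writes each signal as it arrives. The batch path recomputes the same signals from the historical log. Reads keep only the newest version of each event.

```python
import itertools
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterator, Optional

@dataclass(frozen=True)
class Event:
    user_id: str
    event_id: str
    kind: str        # e.g. "search", "view", "heartbeat" (hypothetical kinds)
    payload: str
    event_time: int  # seconds since epoch

def to_signal(event: Event) -> Optional[dict]:
    """Shared transform used by BOTH paths, so their outputs cannot drift apart."""
    if event.kind not in ("search", "view"):
        return None                      # raw noise, not a user signal
    return {"event_id": event.event_id, "kind": event.kind,
            "payload": event.payload, "event_time": event.event_time}

class VersionedKV:
    """Hypothetical append-only store: each write adds an (event_id, version) row."""

    def __init__(self) -> None:
        self._rows: dict = defaultdict(dict)   # user_id -> {(event_id, version): signal}

    def append(self, user_id: str, signal: dict, version: int) -> None:
        # Rows are only ever added. Re-sending the same (event_id, version)
        # rewrites an identical row, so retries are harmless.
        self._rows[user_id][(signal["event_id"], version)] = signal

    def row_count(self, user_id: str) -> int:
        return len(self._rows[user_id])

    def latest(self, user_id: str) -> list:
        """Read path: newest version of each signal, ordered by event time."""
        best: dict = {}
        for (event_id, version), signal in self._rows[user_id].items():
            if event_id not in best or version > best[event_id][0]:
                best[event_id] = (version, signal)
        return sorted((s for _, s in best.values()), key=lambda s: s["event_time"])

def streaming_path(events, store: VersionedKV, clock: Iterator[int]) -> None:
    """Speed layer: one event at a time; version = next processing 'timestamp'."""
    for e in events:
        signal = to_signal(e)
        if signal is not None:
            store.append(e.user_id, signal, version=next(clock))

def batch_backfill(event_log, store: VersionedKV, version: int) -> None:
    """Batch layer: recompute every signal from the historical log in one pass."""
    for e in event_log:
        signal = to_signal(e)
        if signal is not None:
            store.append(e.user_id, signal, version=version)

events = [
    Event("u1", "e1", "search", "Paris", 100),
    Event("u1", "e2", "view", "listing-42", 105),
    Event("u1", "e3", "heartbeat", "", 106),
]
store = VersionedKV()
clock = itertools.count(1)                 # stand-in for a processing-time clock
streaming_path(events, store, clock)
streaming_path(events[:1], store, clock)   # a replayed "e1" gets a newer version
batch_backfill(events, store, version=1000)

print([s["payload"] for s in store.latest("u1")])  # ['Paris', 'listing-42']
print(store.row_count("u1"))                       # 5
```

Both paths share one transform, so the read returns the same signals whichever path wrote the winning version. A replay also never produces a duplicate signal. The cost shows up in `row_count`: five stored rows back just two signals.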

The Signal Lifecycle

Here’s how an individual user action flows through the system:

That’s the main loop. It sounds simple, but a lot is happening under the hood to make it safe, fast, and reliable. See the diagram below:

Key Engineering Decisions

The Airbnb engineering team made a few important architectural decisions while building USP.

1 - Flink Over Spark

Airbnb chose Flink instead of Spark. Spark’s streaming engine uses a micro-batch model: instead of processing events as they arrive, it groups them into small batches (say, every 2 seconds) and runs each batch through the pipeline. That’s fine for dashboards. But if your downstream use case is real-time product personalization, a few seconds of delay can feel like a lifetime. Imagine opening the Airbnb app, searching for “Paris”, and seeing homepage recommendations that still reflect your last trip to Kyoto. The micro-batch model introduced delays that broke the user experience, and those delays weren’t easy to tune away.

Flink, by contrast, is natively event-driven: it processes each event as it arrives. That gives:

As a trade-off, Flink has a steeper operational learning curve. However, for use cases where personalization needs to react in-session rather than post-session, it was the right choice for Airbnb.

2 - Append-Only Data Model

In stream processing, it is difficult to guarantee that an event will be processed exactly once. Even with Kafka’s and Flink’s best efforts, events can be retried, reordered, or replayed. So, instead of fighting that, Airbnb leaned into it. Every write to the KV store is append-only, with the processing timestamp as the version. There are no in-place updates, and idempotency is handled by versioning. This simplifies:

The trade-off was higher storage costs in exchange for much lower operational complexity.

3 - Config-Driven Developer Workflow

One of the most underrated engineering challenges isn’t building the system; it’s making it usable for the rest of the company. USP tackles that head-on by giving developers a config-first interface for defining their signal logic. Here’s how it works:

The script autogenerates the necessary Flink job configurations, batch backfill scripts, and monitoring YAMLs. This pattern standardizes signal definitions across teams and reduces boilerplate and manual configuration.

User Signal Types

When a user interacts with Airbnb (searches, clicks, or saves a home), the behavior emits a bunch of raw events. Most of them are meaningless on their own. But with the right structure and filtering, they become User Signals: queryable, composable, and rich with context. USP makes it dead simple for engineers to define, transform, and consume these signals, without writing complex stream processing jobs from scratch. See the code example below for a signal definition and the transform class.
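The config-plus-transform split can be sketched as follows. Every name in it (`SIGNAL_CONFIG`, `SignalTransform`, `SearchSignalTransform`, `TRANSFORMS`, `generate_artifacts`, the topic name) is hypothetical. USP’s real config format and class interfaces are not described in the text. The config declares the source, key, and transform. The transform class holds the only hand-written logic. A small generator derives both a streaming job and a backfill job from the same config, loosely mirroring the autogeneration step described above.

```python
from abc import ABC, abstractmethod
from typing import Optional

# Hypothetical signal definition; a real one would live in a config file.
SIGNAL_CONFIG = {
    "name": "recent_searches",
    "source_topic": "search_events",        # hypothetical Kafka topic
    "transform": "SearchSignalTransform",
    "key": "user_id",
}

class SignalTransform(ABC):
    """Hypothetical base class: map one raw event to a signal, or drop it."""

    @abstractmethod
    def transform(self, event: dict) -> Optional[dict]:
        ...

class SearchSignalTransform(SignalTransform):
    """The only hand-written logic for this signal."""

    def transform(self, event: dict) -> Optional[dict]:
        query = (event.get("query") or "").strip()
        if not query:                       # empty searches carry no intent
            return None
        return {"user_id": event["user_id"],
                "timestamp": event["timestamp"],
                "query": query.lower()}

# Registry so a config can refer to a transform by class name.
TRANSFORMS = {cls.__name__: cls for cls in (SearchSignalTransform,)}

def generate_artifacts(config: dict) -> dict:
    """Stand-in for the codegen step: derive job and monitoring settings from one config."""
    name = config["name"]
    if config["transform"] not in TRANSFORMS:
        raise ValueError(f"unknown transform {config['transform']!r}")
    return {
        "streaming_job": {"name": f"{name}_stream", "source": config["source_topic"],
                          "transform": config["transform"], "key_by": config["key"]},
        "backfill_job": {"name": f"{name}_backfill", "transform": config["transform"]},
        "monitoring": {"signal": name},
    }

transform = TRANSFORMS[SIGNAL_CONFIG["transform"]]()
print(transform.transform({"user_id": "u1", "timestamp": 100, "query": " Paris "}))
# {'user_id': 'u1', 'timestamp': 100, 'query': 'paris'}
print(transform.transform({"user_id": "u1", "timestamp": 101, "query": ""}))  # None
print(generate_artifacts(SIGNAL_CONFIG)["streaming_job"]["name"])  # recent_searches_stream
```

Both generated jobs point at the same transform class, so a signal’s logic is written once and reused by the streaming and backfill paths.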

There are two core signal types:

User Signals

These are the building blocks. Each user signal represents a stream of recent activity (searches, views, bookings, wishlists) attached to a user ID and timestamped for querying. Engineers use a config file to define a new signal, and the heavy lifting happens in the transform class. A few things to note:

Join Signals

Sometimes a single event isn’t enough, and events from two streams need to be joined. For example:

Rather than batch-processing this later, USP supports Join Signals: real-time stateful joins between two Kafka streams on a shared key. To use them, an engineer writes a Join Signal configuration. Under the hood, Flink performs the join in real time. RocksDB acts as the state store that holds intermediate join keys. The result is a merged signal with richer context, ready to feed into ML models, personalization rules, or session-based analysis.

User Segments

A User Segment is a logical group: a cohort of users who match a behavioral pattern or intent. In most systems, user segmentation is a batch job. You run a SQL query once a day, label users as “engaged” or “likely to churn,” and hope that snapshot is still relevant tomorrow. That doesn’t cut it when your product needs to react to user intent as it forms.

Airbnb’s User Signals Platform flips the script. With User Segments, engineers can define dynamic cohorts that update in near real time, triggered by live user actions rather than stale offline data. The segment is defined by:

These segments are recalculated on the fly, based on live signals flowing through the system. Suppose the team wants to target users who are actively planning a trip: people who are more likely to book in the next few days. Here’s how segmentation might look:

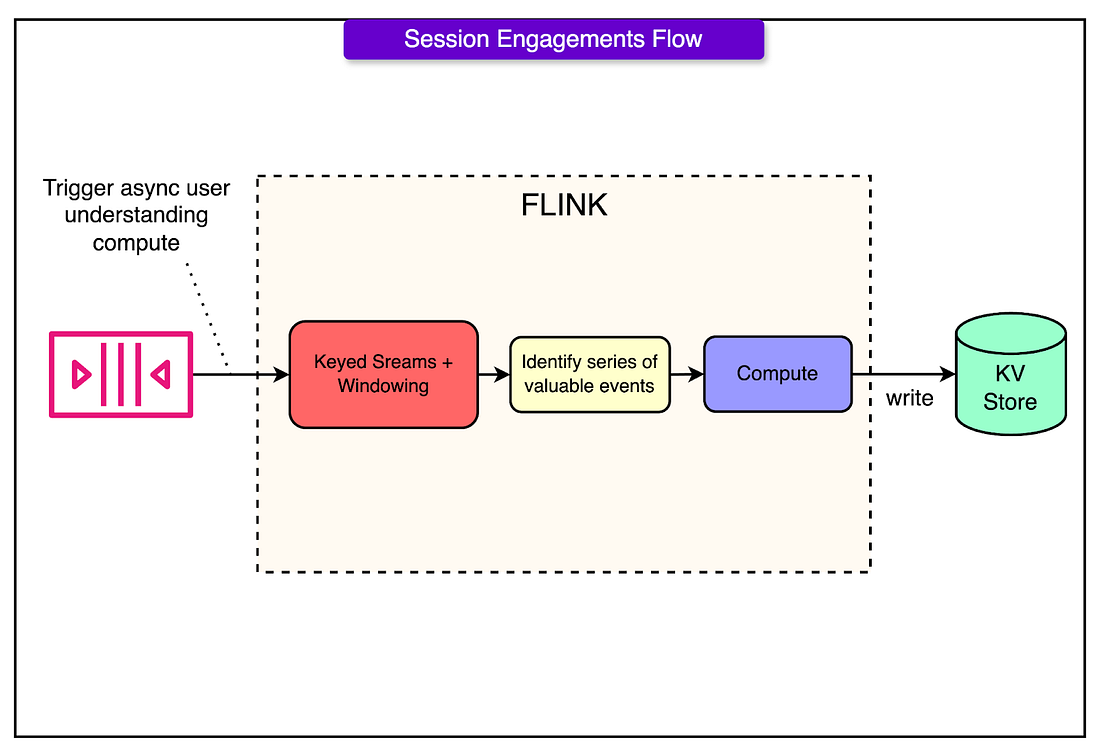
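A segment rule like the one above can be evaluated incrementally as signals arrive. The sketch below is a hypothetical trip-planning segment. A user qualifies if, within a 3-day lookback, they searched the same destination at least twice or wishlisted any listing. The thresholds, signal kinds, and class names are assumptions for illustration, not Airbnb’s definition.

```python
from collections import Counter, defaultdict, deque
from dataclasses import dataclass

WINDOW_SECONDS = 3 * 24 * 3600   # hypothetical 3-day lookback

@dataclass(frozen=True)
class Signal:
    user_id: str
    kind: str    # "search" or "wishlist" (hypothetical signal kinds)
    value: str   # destination for searches, listing ID for wishlists
    ts: int      # seconds

class TripPlannerSegment:
    """Recomputes one user's membership each time that user emits a signal."""

    def __init__(self) -> None:
        self.recent = defaultdict(deque)   # user_id -> signals inside the lookback
        self.members: set = set()

    def on_signal(self, s: Signal) -> bool:
        q = self.recent[s.user_id]
        q.append(s)
        # Evict expired signals (assumes per-user signals arrive in time order).
        while q and q[0].ts < s.ts - WINDOW_SECONDS:
            q.popleft()
        searches = Counter(x.value for x in q if x.kind == "search")
        wishlisted = any(x.kind == "wishlist" for x in q)
        in_segment = wishlisted or any(n >= 2 for n in searches.values())
        if in_segment:
            self.members.add(s.user_id)
        else:
            self.members.discard(s.user_id)
        return in_segment

DAY = 24 * 3600
seg = TripPlannerSegment()
print(seg.on_signal(Signal("u1", "search", "Lisbon", 0)))        # False
print(seg.on_signal(Signal("u1", "search", "Lisbon", DAY)))      # True
print(seg.on_signal(Signal("u1", "search", "Rome", 5 * DAY)))    # False: Lisbon searches expired
print(seg.members)                                               # set()
```

One simplification: a user only leaves the segment when their next signal arrives. A fuller version would likely also need a timer or periodic sweep to expire idle members.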

This segment powers things like trip recommendation modules, push notifications (for example: “Still looking for a beach house?”), and custom homepage experiences. Since it’s built on streaming logic, updates happen within seconds of user activity, not hours later when a batch job finishes.

Session Engagements

Most personalization systems are great at long-term memory: what you searched for last week, which cities you’ve favorited, and what kind of stays you usually book. But they often miss the recent stuff. The session engagements feature of USP fixes that. It lets the platform answer queries like:

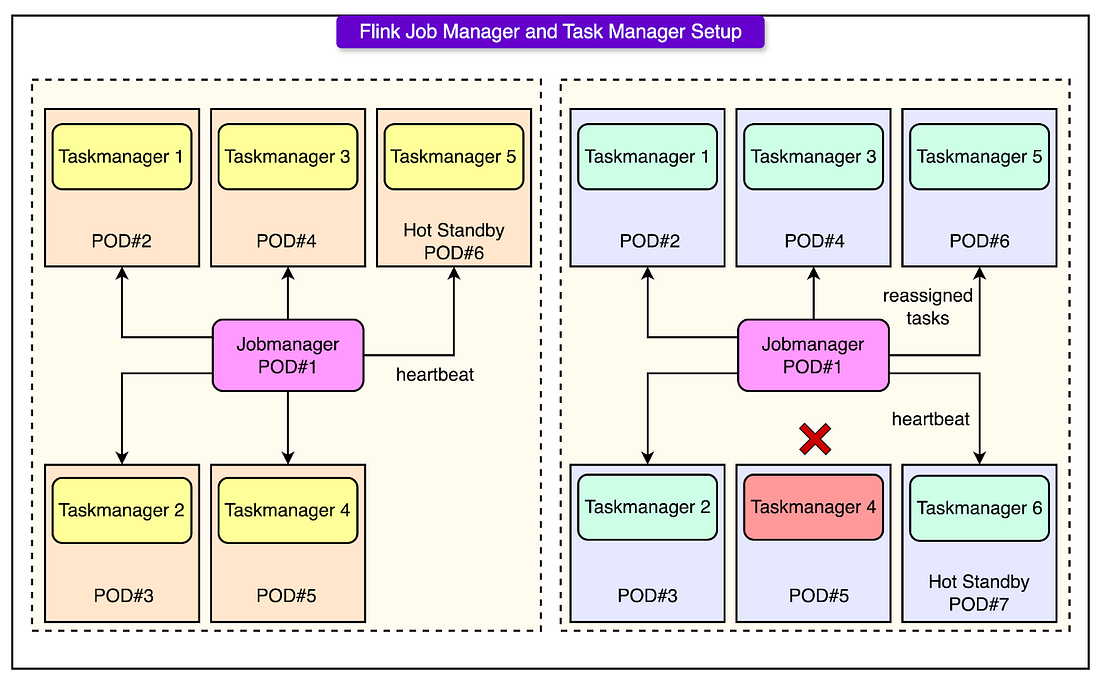
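Session engagements rely on two window patterns. The first is the sliding window; the example given is a 10-minute window sliding every 5 minutes. The second is the session window, which closes after 30 minutes of silence. The plain-Python sketch below shows which windows a user’s events fall into under each pattern. It mimics Flink’s windowing semantics but does not use Flink’s API, and the function names are illustrative.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

MINUTE = 60
Window = Tuple[int, int]  # [start, end) in seconds

def sliding_windows(ts: int, size: int = 10 * MINUTE, slide: int = 5 * MINUTE) -> List[Window]:
    """All sliding windows [start, start + size) that contain timestamp ts."""
    start = ts - ts % slide            # most recent window start at or before ts
    windows = []
    while start > ts - size:           # this window still covers ts
        windows.append((start, start + size))
        start -= slide
    return sorted(windows)

def session_windows(timestamps: Iterable[int], gap: int = 30 * MINUTE) -> List[Window]:
    """Merge events into sessions; a session ends `gap` seconds after its last event."""
    sessions: List[Window] = []
    for ts in sorted(timestamps):
        if sessions and ts < sessions[-1][1]:      # arrived before the gap elapsed
            sessions[-1] = (sessions[-1][0], ts + gap)
        else:                                      # silence exceeded the gap: new session
            sessions.append((ts, ts + gap))
    return sessions

def sessions_by_user(events: Iterable[Tuple[str, int]]) -> Dict[str, List[Window]]:
    """Key events by user ID, then build each user's session windows."""
    by_user: Dict[str, List[int]] = defaultdict(list)
    for user_id, ts in events:
        by_user[user_id].append(ts)
    return {user: session_windows(ts_list) for user, ts_list in by_user.items()}

clicks = [("u1", 0), ("u1", 10 * MINUTE), ("u1", 25 * MINUTE),
          ("u1", 90 * MINUTE), ("u2", 5 * MINUTE)]
print(sliding_windows(7 * MINUTE))   # [(0, 600), (300, 900)]
print(sessions_by_user(clicks))      # {'u1': [(0, 3300), (5400, 7200)], 'u2': [(300, 2100)]}
```

Each click lands in two sliding windows because the window is twice the slide. Session boundaries, by contrast, depend only on the gaps in the data. Flink’s event-time windows additionally use watermarks to cope with out-of-order events, which this sketch ignores.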

Instead of looking at user behavior in aggregate, session engagements look at bursts of activity within a session window: short, meaningful slices of time that capture a user’s current goal or intent. See the diagram below:

Session engagements are powered by Flink streaming jobs that ingest transformed signals (from upstream user actions), group them by user ID, and process them in windowed intervals using two patterns:

Sliding Windows

These are fixed-size windows that advance by a smaller step, for example, a 10-minute window sliding every 5 minutes. They are useful for rolling insights, such as “What kinds of listings has this user clicked in the last 10 minutes?”

Session Windows

These are dynamically sized windows based on inactivity gaps, for example, a session that starts when the user clicks and closes after 30 minutes of silence. They are useful for natural interaction clusters, such as listings viewed in a single burst of planning.

Flink Stability with Hot Standby

One of the most critical properties of a real-time stream processing system is operational resilience. You can have the smartest signal logic, the fastest queries, and the cleanest data pipeline, but if your jobs stall when a server crashes, none of that matters. Airbnb hardened its Flink deployment against exactly this kind of failure with a simple but effective strategy: hot standby Task Managers.

Rather than waiting for Kubernetes to create new pods during a failure, the team pre-provisions extra Task Managers that sit idle but ready. These hot standbys are kept warm and registered with the Flink JobManager, so when a failure hits, they can pick up tasks immediately. See the diagram below:

This eliminates cold-start lag for task reassignment and shortens recovery time (seconds instead of minutes). It also lowers the risk of building up an event backlog.

Conclusion

Airbnb’s User Signals Platform isn’t a prototype. It’s a production-grade engine powering critical personalization across one of the largest travel platforms in the world. Here’s what the system is doing today in terms of scale:

In a way, USP is part of Airbnb’s core infrastructure. It runs across dozens of teams, all contributing their own signal definitions, segments, and use cases. Even with all this machinery in place, the team sees room for growth, especially in how it handles asynchronous compute. The plan includes smarter pipeline-level orchestration, pluggable execution backends, and end-to-end compute graphs.

It’s tempting to look at systems like this and focus on the streaming tech. But what makes the User Signals Platform successful isn’t just Flink or Kafka or RocksDB. It’s design choices such as:

The philosophy also matters: real-time data isn’t valuable unless it’s usable. Teams across the company can define signals, derive insights, and deploy personalized experiences without becoming stream engineers.

References:

© 2025 ByteByteGo

by "ByteByteGo" <bytebytego@substack.com> - 11:35 - 21 Apr 2025