
How Discord Stores Trillions of Messages with High Performance


Disclaimer: The details in this post have been derived from the articles shared online by the Discord Engineering Team. All credit for the technical details goes to the Discord Engineering Team. The links to the original articles and sources are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Many chat platforms never reach the scale where they have to deal with trillions of messages. However, Discord does. And when that happens, a somewhat manageable data problem can quickly turn into a major engineering challenge that involves millions of users sending messages across millions of channels.

At this scale, even the smallest architectural choices can have a big impact. Things like hot partitions can turn into support nightmares. Garbage collection pauses aren’t just annoying, but can lead to system-wide latency spikes. The wrong database design can lead to wastage of developer time and operational bandwidth.

Discord’s early database solution (moving from MongoDB to Apache Cassandra®) promised horizontal scalability and fault tolerance. It delivered both, but at a significant operational cost. Over time, keeping Apache Cassandra® stable required constant firefighting, careful compaction strategies, and JVM tuning. Eventually, the database meant to scale with Discord had become a bottleneck.

In this article, we will walk through how Discord rebuilt its message storage layer from the ground up. We will learn the issues Discord faced with Apache Cassandra® and their shift to ScyllaDB. Also, we will look at the introduction of Rust-based data services to shield the database from overload and improve concurrency handling.

Initial Architecture

Discord's early message storage relied on Apache Cassandra®. The schema grouped messages by channel_id and a bucket, which represented a static time window. This schema allowed for efficient lookups of recent messages in a channel, and Snowflake IDs provided natural chronological ordering. A replication factor of 3 ensured each partition existed on three separate nodes for fault tolerance.
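To make the partitioning scheme concrete, here is a minimal Python sketch of how a (channel_id, bucket) partition key could be derived from a Snowflake message ID. The 22-bit shift and the epoch constant follow Discord's publicly documented Snowflake layout. The 10-day bucket width and all function names are assumptions for illustration, since the text only says the bucket is a static time window.

```python
from typing import Tuple

# Sketch only: derives a (channel_id, bucket) partition key from a Snowflake ID.
DISCORD_EPOCH_MS = 1_420_070_400_000   # 2015-01-01T00:00:00Z, the Discord Snowflake epoch
TIMESTAMP_SHIFT = 22                   # the low 22 bits hold worker, process, and sequence fields
BUCKET_MS = 10 * 24 * 60 * 60 * 1000   # ASSUMPTION: a 10-day window; the article gives no width


def snowflake_to_unix_ms(snowflake: int) -> int:
    """Return the Unix timestamp in milliseconds encoded in a Snowflake ID."""
    return (snowflake >> TIMESTAMP_SHIFT) + DISCORD_EPOCH_MS


def bucket_for(snowflake: int) -> int:
    """Return the index of the static time window that contains this ID."""
    return (snowflake >> TIMESTAMP_SHIFT) // BUCKET_MS


def partition_key(channel_id: int, message_id: int) -> Tuple[int, int]:
    """Hypothetical helper: every message of one channel in one window shares a key."""
    return (channel_id, bucket_for(message_id))
```

Because the timestamp occupies the high bits of the ID, comparing two message IDs numerically also compares their creation times, which is why sorting by message_id inside a partition yields chronological order.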

Within each partition, messages were sorted in descending order by message_id, a Snowflake-based 64-bit integer that encoded creation time. The diagram below shows the overall partitioning strategy based on the channel ID and bucket.

At a small scale, this design worked well. However, scale often introduces problems that don't show up in normal situations. Apache Cassandra® favors write-heavy workloads, which aligns well with chat systems. However, high-traffic channels with massive user bases can generate orders of magnitude more messages than quiet ones. A few things started to go wrong at this point:

The diagram below shows the concept of hot partitions:

Performance wasn't the only issue. Operational overhead also ballooned.

At this point, Apache Cassandra® was being scaled manually, by throwing more hardware and more engineer hours at the problem. The system was running, but it was clearly under strain.

Switching to ScyllaDB

ScyllaDB entered the picture as a natural alternative. It preserved compatibility with the query language and data model of Apache Cassandra®, which meant the surrounding application logic could remain largely unchanged. However, under the hood, the execution model was very different. Some key characteristics were as follows:

Overall, ScyllaDB offered the same interface with a far more predictable runtime.

Rust-Based Data Services Layer

To reduce direct load on the database and prevent repeated query amplification, Discord also introduced a dedicated data services layer. These services act as intermediaries between the main API monolith and the ScyllaDB clusters. They are responsible solely for data access and coordination, and no business logic is embedded here. The goal behind them was simple: isolate high-throughput operations, control concurrency, and protect the database from accidental overload.

Rust was chosen for the data services for both technical and operational reasons: it brings together low-level performance and modern safety guarantees. Some key advantages of choosing Rust are as follows:

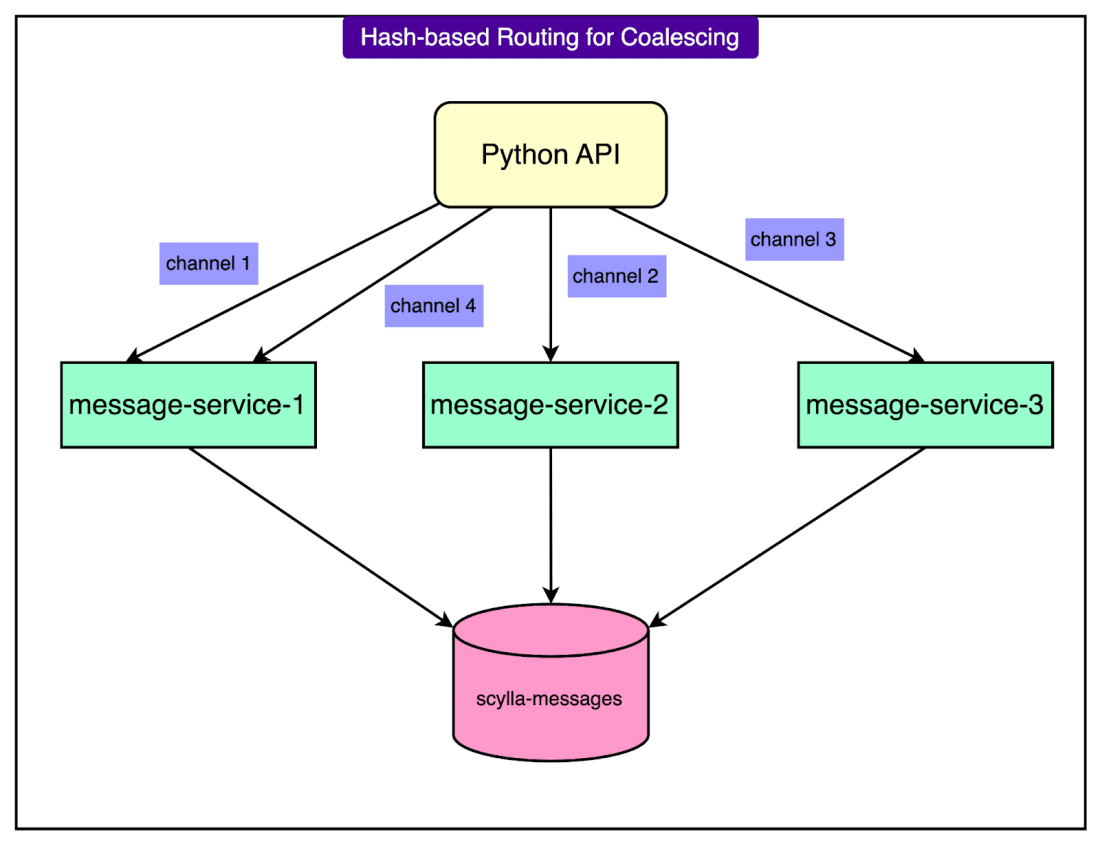

Each data service exposes gRPC endpoints that map one-to-one with database queries. This keeps the architecture clean and transparent. The services do not embed any business logic. They are designed purely for data access and efficiency.

Request Coalescing

One of the most important features in this layer is request coalescing. When multiple users request the same piece of data, such as a popular message in a high-traffic channel, the system avoids hammering the database with duplicate queries.
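The idea of request coalescing can be illustrated with a small sketch. Discord's data services are written in Rust; the version below uses Python's asyncio purely for illustration and is not Discord's implementation. The `Coalescer` class and the `fetch` callback (a stand-in for the real database query) are hypothetical names.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, Hashable


class Coalescer:
    """Sketch: concurrent requests for the same key share one in-flight query."""

    def __init__(self, fetch: Callable[[Hashable], Awaitable[Any]]) -> None:
        self._fetch = fetch  # hypothetical stand-in for the real database call
        self._in_flight: Dict[Hashable, "asyncio.Task[Any]"] = {}

    async def get(self, key: Hashable) -> Any:
        task = self._in_flight.get(key)
        if task is None:
            # First caller for this key: start the query and remember it.
            task = asyncio.create_task(self._fetch(key))
            self._in_flight[key] = task
            # Forget the task once it finishes so later requests see fresh data.
            task.add_done_callback(lambda _t: self._in_flight.pop(key, None))
        # shield() keeps one cancelled caller from cancelling the shared query.
        return await asyncio.shield(task)
```

With this shape, fifty simultaneous calls to `get` for the same key result in a single call to `fetch`, and every caller receives the same result. A request that arrives after the query has completed triggers a new query, so this sketch coalesces only concurrent requests and does not act as a cache.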

See the diagram below:

To support this pattern at scale, the system uses consistent hash-based routing. Requests are routed using a key, typically the channel_id. This allows all traffic for the same channel to be handled by the same instance of the data service.

Ultimately, the Rust-based data services help offload concurrency and coordination away from the database. They flatten spikes in traffic, reduce duplicated load, and provide a stable interface to ScyllaDB. The result for Discord was higher throughput, better latency under load, and fewer emergencies during traffic surges.

Migration Strategy

Migrating a database that stores trillions of messages is not a trivial problem. The primary goals of this migration were clear:

The entire migration process was divided into phases:

Phase 1: Dual Writes with a Cutover Point

The team began by setting up dual writes. Every new message was written to both Apache Cassandra® and ScyllaDB. A clear cutover timestamp defined which data belonged to the "new" world and which still needed to be migrated from the "old." This allowed the system to adopt ScyllaDB for recent data while leaving historical messages intact in Apache Cassandra® until the backfill completed.

Phase 2: Historical Backfill Using Spark

The initial plan for historical migration relied on ScyllaDB’s Spark-based migrator. This approach was stable but slow. Even after tuning, the projected timeline was three months to complete the full backfill. That timeline wasn't acceptable, given the ongoing operational risks with Apache Cassandra®.

Phase 3: A Rust-Powered Rewrite

Instead of accepting the delay, the team extended their Rust data service framework to handle bulk migration. This new custom migrator:

The result was a dramatic improvement. The custom migrator achieved a throughput of 3.2 million messages per second, reducing the total migration time from months to just 9 days. This change also simplified the plan. With fast migration in place, the team could migrate everything at once instead of splitting logic between "old" and "new" systems.

Final Step: Validation and Cutover

To ensure data integrity, a portion of live read traffic was mirrored to both databases, and the responses were compared. Once the system consistently returned matching results, the final cutover was scheduled. In May 2022, the switch was flipped. ScyllaDB became the primary data store for Discord messages.

Post-Migration Results

After the migration, the system footprint shrank significantly. The Apache Cassandra® cluster had grown to 177 nodes to keep up with storage and performance demands. ScyllaDB required only 72 nodes to handle the same workload. This wasn’t just about node count. Each ScyllaDB node ran with 9 TB of disk space, compared to an average of 4 TB on Apache Cassandra® nodes. The combination of higher density and better performance per node translated into lower hardware and maintenance overhead.

Latency Improvements

The performance gains were clear and measurable.
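As a rough sanity check on the migration and footprint figures quoted above, the arithmetic can be sketched in a few lines of Python. The inputs are the numbers given in the text. Treating 3.2 million messages per second as a rate sustained for the full 9 days is an assumption, so the message total is an upper-bound estimate and not a reported figure. The helper names are illustrative.

```python
SECONDS_PER_DAY = 86_400


def messages_moved(rate_per_second: int, days: int) -> int:
    """Messages copied if the rate were sustained for the whole period (assumption)."""
    return rate_per_second * SECONDS_PER_DAY * days


def raw_disk_tb(nodes: int, tb_per_node: int) -> int:
    """Total disk across the cluster, ignoring replication and free-space headroom."""
    return nodes * tb_per_node


def node_reduction(before: int, after: int) -> float:
    """Fraction of nodes removed by the migration."""
    return 1 - after / before


migrated = messages_moved(3_200_000, 9)   # figures from the article
cassandra_tb = raw_disk_tb(177, 4)        # 177 nodes at an average of 4 TB
scylla_tb = raw_disk_tb(72, 9)            # 72 nodes at 9 TB
print(f"messages moved (upper bound): {migrated:,}")
print(f"raw disk: Cassandra {cassandra_tb} TB, ScyllaDB {scylla_tb} TB")
print(f"node reduction: {node_reduction(177, 72):.1%}")
```

Under these assumptions the migrator moved on the order of 2.5 trillion messages, which is consistent with the "trillions" in the title. The node count fell by roughly 59% while total raw disk stayed in the same range, which matches the article's point that the gain came from higher density per node.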

Operational Stability

One of the biggest wins was operational calm.

Conclusion

The real test of any system comes when traffic patterns shift from expected to chaotic. During the 2022 FIFA World Cup Final, Discord’s message infrastructure experienced exactly that kind of stress test and passed cleanly.

As Argentina and France battled through regular time, extra time, and penalties, user activity surged across the platform. Each key moment (goals by Messi, Mbappé, the equalizers, the shootout) created massive spikes in message traffic, visible in monitoring dashboards almost in real time.

Message sends surged, and read traffic ballooned. The kind of workload that used to trigger hot partitions and paging alerts under the earlier design now ran smoothly. Some key takeaways were as follows:

Note: Apache Cassandra® is a registered trademark of the Apache Software Foundation.

by "ByteByteGo" <bytebytego@substack.com> - 11:38 - 8 Jul 2025