How McDonald Sells Millions of Burgers Per Day With Event-Driven Architecture


Disclaimer: The details in this post have been derived from the McDonald’s Technical Blog. All credit for the technical details goes to the McDonald’s engineering team. The links to the original articles are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Over the years, McDonald’s has undergone a significant digital transformation to enhance customer experiences, strengthen its brand, and optimize overall operations. At the core of this transformation is a robust technological infrastructure that unifies processes across various channels and touchpoints throughout their global operations.

The need for unified event processing emerged from McDonald's extensive digital ecosystem, where events are utilized across the technology stack. There were three key processing types:

The events were used across use cases such as mobile-order progress tracking and sending customers marketing communications (deals and promotions). Coupled with the scale of McDonald’s operations, the system needed an architecture that could handle:

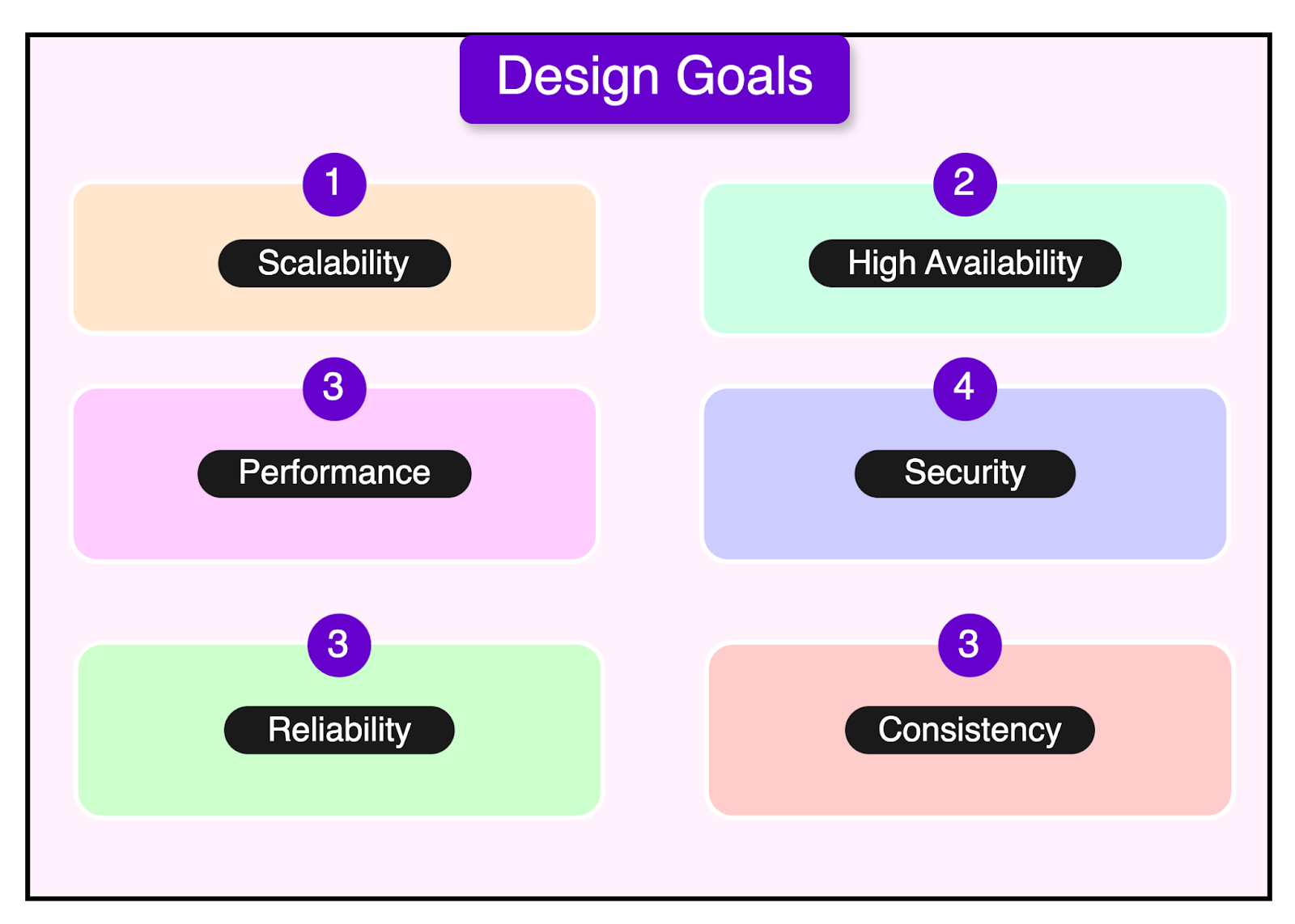

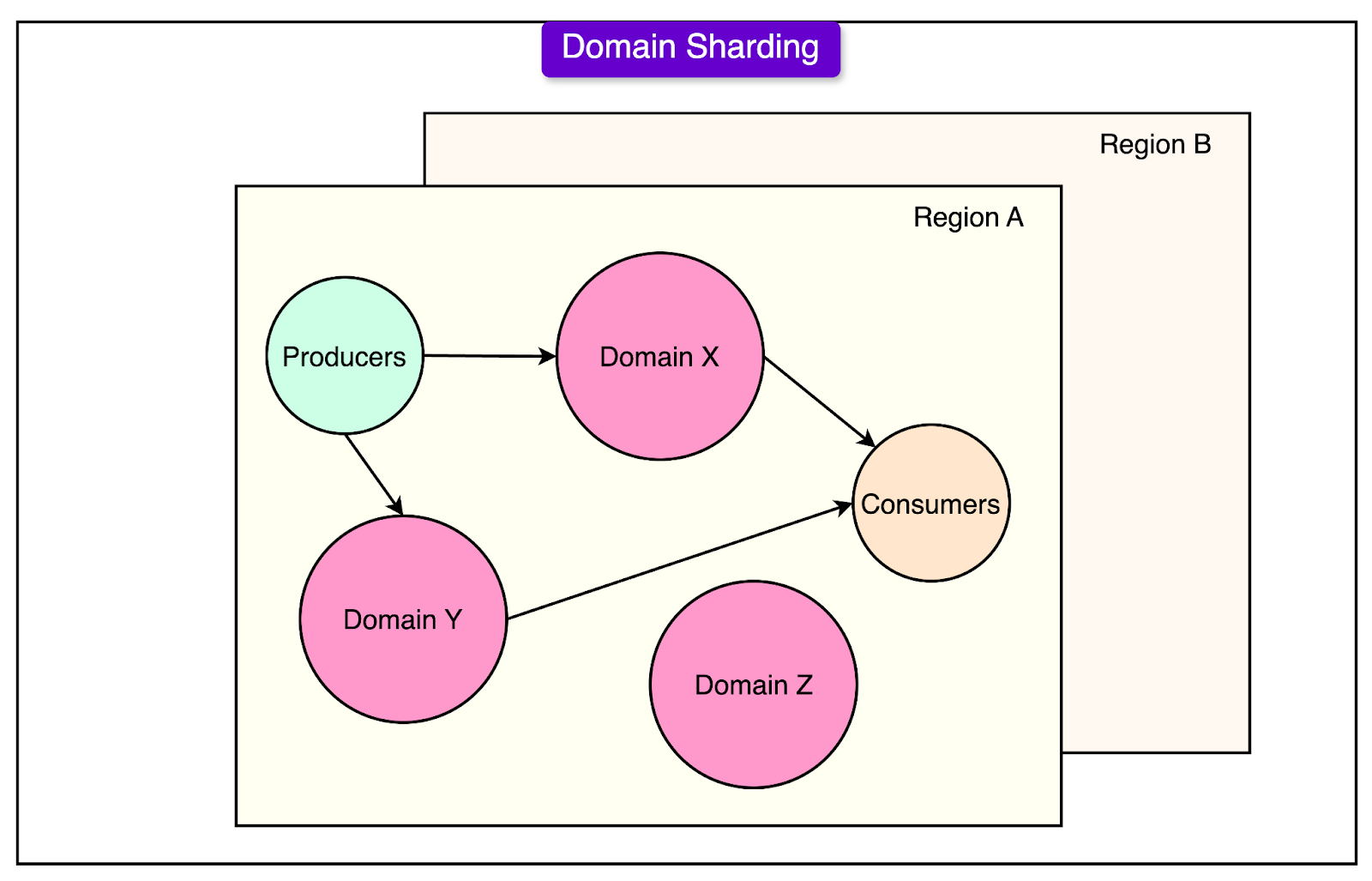

In this article, we’re going to look at McDonald’s journey of developing a unified platform enabling real-time, event-driven architectures.

Design Goals of the Platform

McDonald's unified event-driven platform was built with specific foundational principles to support its global operations and customer-facing services. Each design goal was carefully considered to ensure the platform's robustness and efficiency. Let’s look at the goals in a little more detail.

Scalability

The platform needed the ability to auto-scale to accommodate demand. For this purpose, they engineered it to handle growing event volumes through domain-based sharding across multiple MSK clusters. This approach enables horizontal scaling and efficient resource utilization as transaction volumes increase.

High Availability

The platform had to be capable of withstanding component failures. System resilience is achieved through redundant components and failover mechanisms. The architecture includes a standby event store that maintains operational continuity when the primary MSK service experiences issues.

Performance

The goal was to deliver events in real time with the ability to handle highly concurrent workloads. Real-time event delivery is facilitated through optimized processing paths and schema caching mechanisms. The system maintains low latency while handling high-throughput scenarios across different geographical regions.

Security

The data needed to adhere to data security guidelines. The platform implements comprehensive security measures, including:

Reliability

The platform must be dependable with controls to avoid losing any events. Event loss prevention is achieved through:

Consistency

The platform should maintain consistency around important patterns related to error handling, resiliency, schema evolution, and monitoring. Standardization is maintained using:

Simplicity

The platform should reduce operational complexity so that teams can build on the platform with ease. Operational complexity is minimized with:

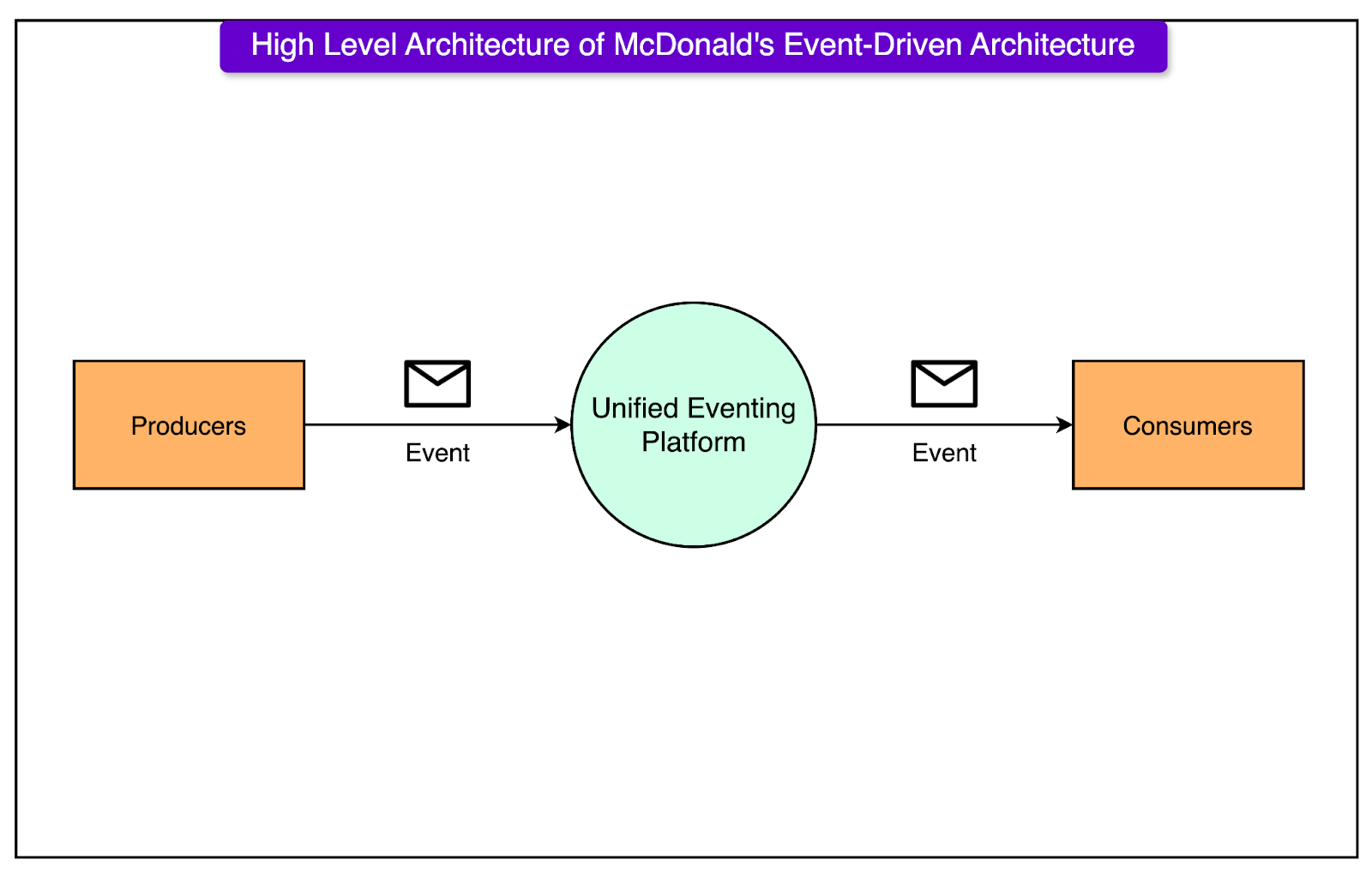
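The domain-based sharding named under Scalability can be pictured as a small routing table. The following is a minimal sketch, not McDonald's implementation: the cluster names, the `domain.event_type` topic convention, and the `route_event` helper are all hypothetical and exist only to illustrate the idea that an event's domain decides which cluster and topic it lands on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    cluster: str
    topic: str


# Hypothetical mapping: one dedicated cluster per business domain.
DOMAIN_CLUSTERS = {
    "user-profile": "msk-profile-cluster",
    "orders": "msk-orders-cluster",
}


def route_event(domain: str, event_type: str) -> Route:
    """Pick the cluster and topic for an event based on its domain."""
    try:
        cluster = DOMAIN_CLUSTERS[domain]
    except KeyError:
        raise ValueError(f"no cluster registered for domain {domain!r}") from None
    # Assumed topic naming convention: "<domain>.<event_type>".
    return Route(cluster=cluster, topic=f"{domain}.{event_type}")
```

Because each domain maps to its own cluster, adding a new domain in this sketch means adding one entry to the table rather than growing a shared cluster.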

Key Components of the Architecture

The diagram below shows the high-level design of McDonald’s event-driven platform. The key components of the architecture are as follows:

Event Broker

The core component of the platform is Amazon Managed Streaming for Apache Kafka (MSK), which handles:

Schema Registry

A schema registry is a critical component that maintains data quality by storing all event schemas. This enables schema validation for producers as well as consumers. It also allows the consumers to determine which schema to follow for message processing.

Standby Event Store

This component helps avoid the loss of messages if MSK is unavailable. It performs the following functions:

Custom SDKs

The McDonald’s engineering team built language-specific libraries for producers and consumers. Here are the features supported by these SDKs:

Event Gateway

McDonald’s event-based architecture is required to support both internally generated events and events produced by external partner applications. The event gateway serves as an interface for external integrations by:

Supporting Utilities

These are administrative tools that offer capabilities such as:

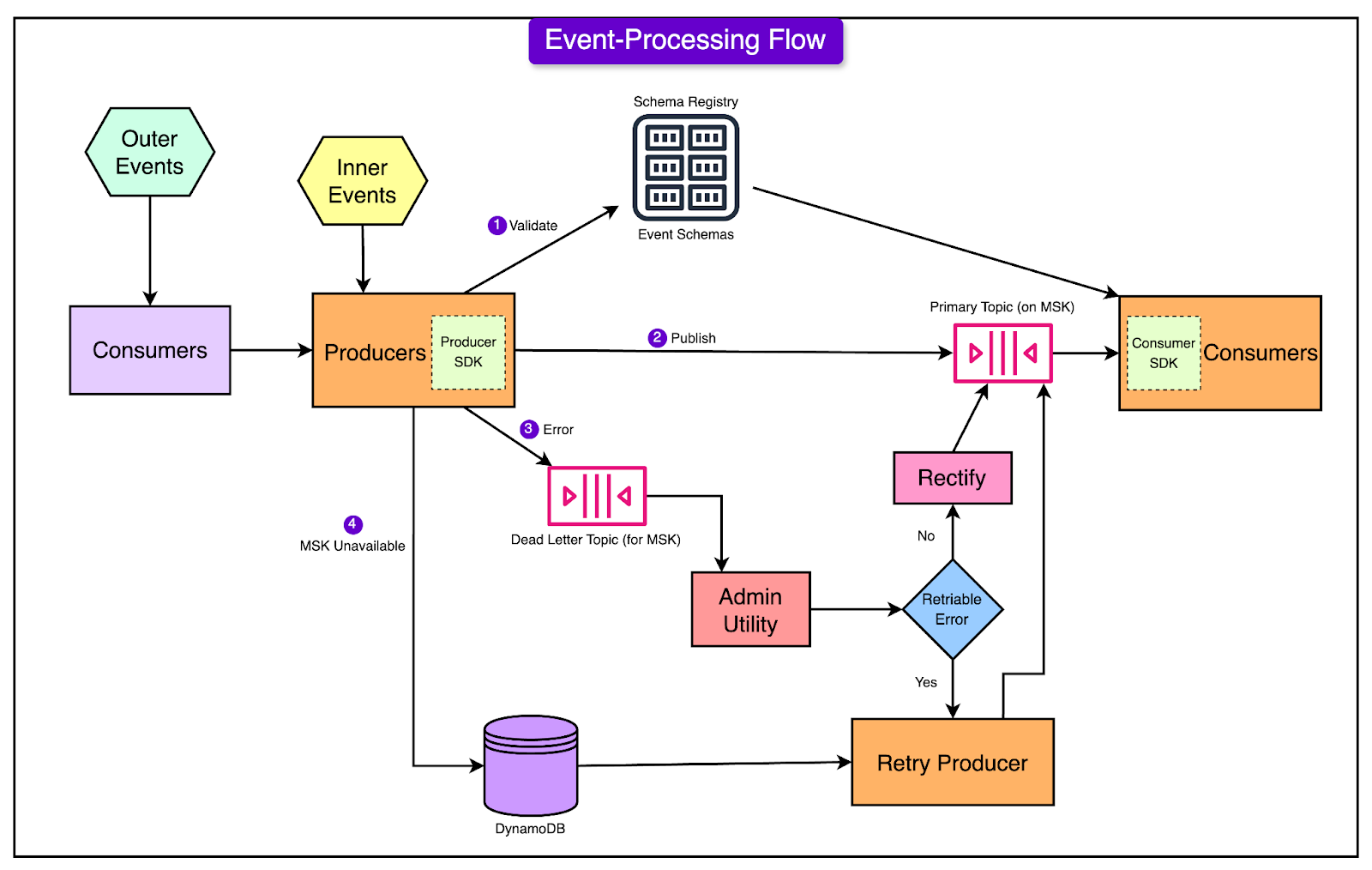
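The role of the standby event store can be sketched as a producer that falls back to a secondary store when the broker is unreachable and replays the held events later. This is an illustrative sketch under assumptions: the `ResilientProducer` class, the in-memory queue standing in for the standby store, and the use of `ConnectionError` to signal an unavailable broker are all hypothetical, not the actual SDK behavior.

```python
from collections import deque
from typing import Callable, Deque, Tuple


class ResilientProducer:
    """Publishes events, parking them in a standby store if the broker is down."""

    def __init__(self, publish: Callable[[str, bytes], None]) -> None:
        # `publish` is a stand-in for the real broker client call.
        self._publish = publish
        # In-memory stand-in for a durable standby event store.
        self.standby: Deque[Tuple[str, bytes]] = deque()

    def send(self, topic: str, payload: bytes) -> bool:
        """Return True if delivered to the broker, False if parked in standby."""
        try:
            self._publish(topic, payload)
            return True
        except ConnectionError:
            self.standby.append((topic, payload))
            return False

    def replay(self) -> int:
        """Retry parked events in order; stop at the first failure."""
        delivered = 0
        while self.standby:
            topic, payload = self.standby[0]
            try:
                self._publish(topic, payload)
            except ConnectionError:
                break
            self.standby.popleft()
            delivered += 1
        return delivered
```

An event is removed from the standby queue only after a successful publish, so a failure during replay leaves it in place for the next attempt.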

Event Processing Flow

The event processing system at McDonald's follows a sophisticated flow that ensures data integrity and efficient processing. The diagram below shows the overall processing flow. Let’s look at it in more detail by dividing the flow into two major themes: event creation and event reception.

Event Creation and Sharing

Event Reception

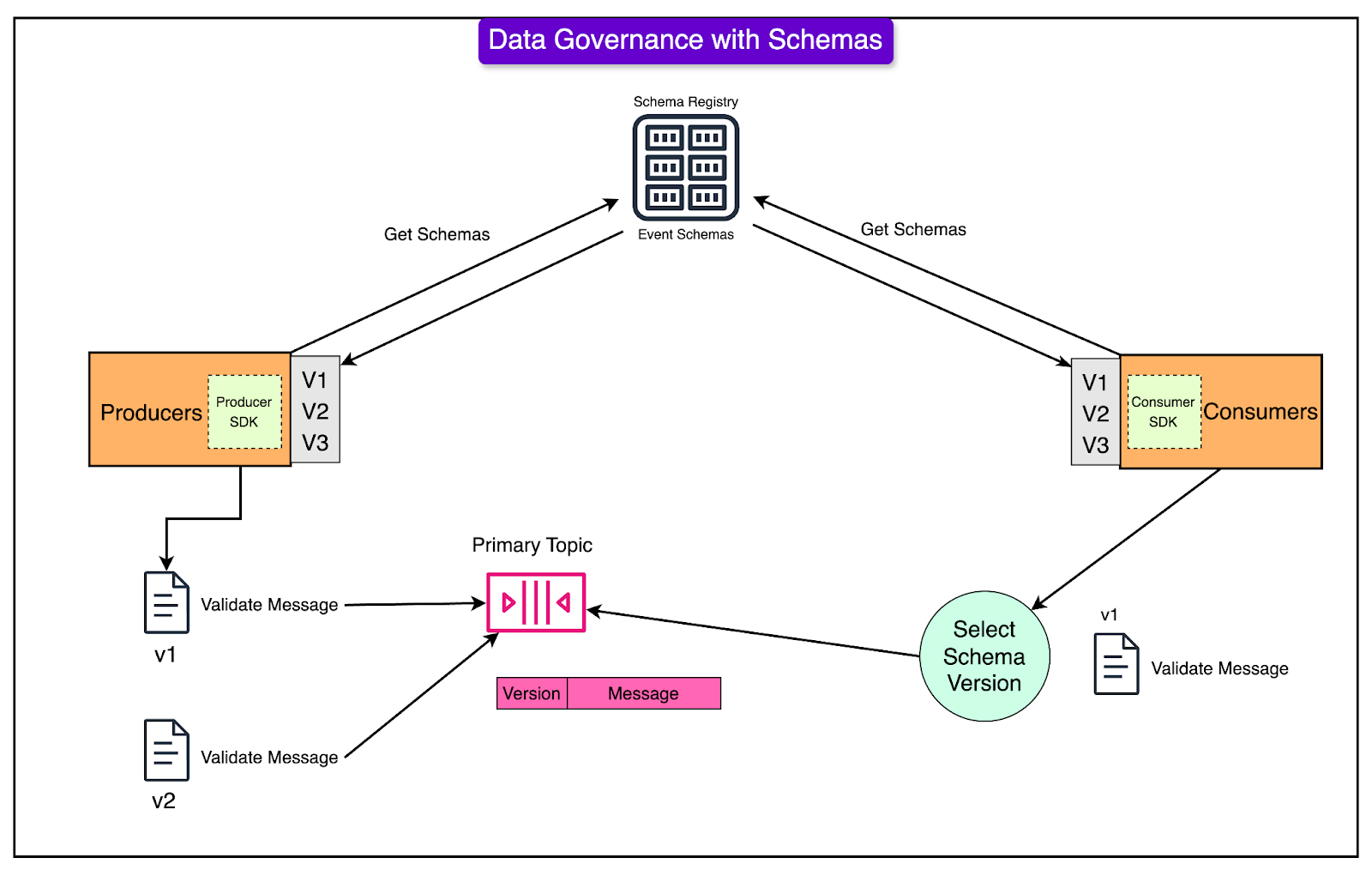

Techniques for Key Challenges

The McDonald’s engineering team also used some interesting techniques to solve common challenges associated with the setup. Let’s look at a few important ones:

Data Governance

Ensuring data accuracy is crucial when different systems share information. If the data is reliable, it makes designing and building these systems much simpler. MSK and Schema Registry help maintain data integrity by enforcing "data contracts" between systems.

A schema is like a blueprint that defines what information should be present in each message and in what format. It specifies the required and optional data fields and their types (e.g., text, number, date). Every message is checked against this blueprint in real time. If a message doesn't match the schema, it's sent to a separate area to be fixed. Here's how schemas work:

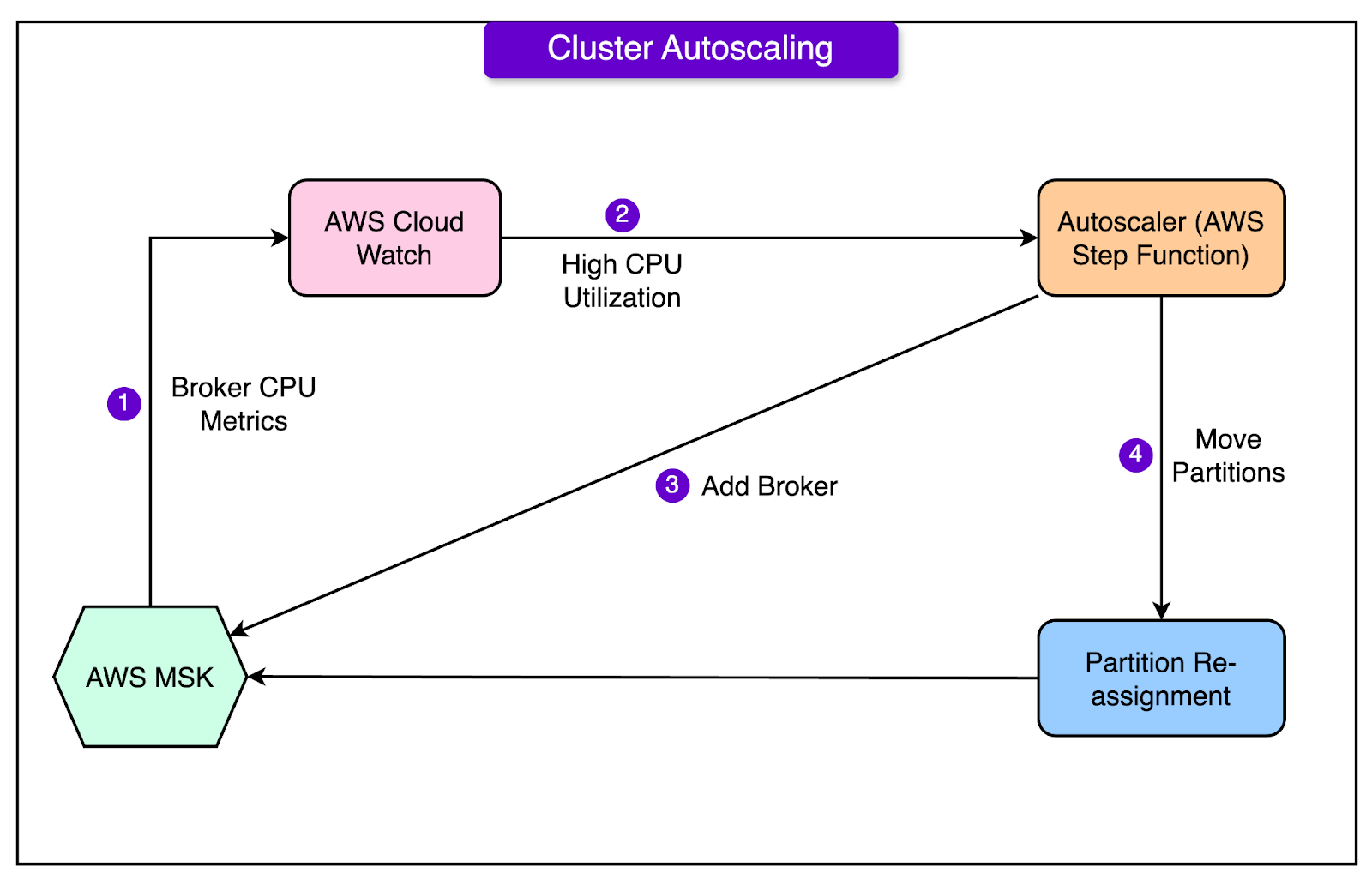
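The data-contract check described above can be illustrated with a small validator. This is a simplified sketch, not the Schema Registry API: the `ORDER_SCHEMA` layout, its field names, and the list standing in for the "separate area" for invalid messages are all hypothetical.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema: required and optional fields with their expected types.
ORDER_SCHEMA = {
    "required": {"order_id": str, "total": float},
    "optional": {"coupon": str},
}


def validate(message: Dict[str, Any], schema: Dict[str, Dict[str, type]]) -> List[str]:
    """Return a list of contract violations; an empty list means the message is valid."""
    errors = []
    for name, expected in schema["required"].items():
        if name not in message:
            errors.append(f"missing required field: {name}")
        elif not isinstance(message[name], expected):
            errors.append(f"wrong type for field: {name}")
    for name, expected in schema["optional"].items():
        if name in message and not isinstance(message[name], expected):
            errors.append(f"wrong type for field: {name}")
    return errors


def route(
    message: Dict[str, Any],
    schema: Dict[str, Dict[str, type]],
    valid_topic: List[Dict[str, Any]],
    dead_letter: List[Tuple[Dict[str, Any], List[str]]],
) -> None:
    """Send valid messages onward and park invalid ones with their errors."""
    errors = validate(message, schema)
    if errors:
        dead_letter.append((message, errors))
    else:
        valid_topic.append(message)
```

Keeping the violations alongside the parked message makes it easier for whoever fixes the message to see which part of the contract it broke.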

See the diagram below for reference:

Using a schema registry to validate data contracts ensures that the information flowing between systems is accurate and consistent. This saves time and effort in designing and operating the systems that rely on this data, especially for analytics purposes.

Cluster Autoscaling

MSK is a messaging system that helps different parts of an application communicate with each other. It uses brokers to store and manage the messages. As the amount of data grows, MSK automatically increases the storage space for each broker. However, they needed a way to add more brokers to the system when the existing ones got overloaded.

To solve this problem, they created an Autoscaler function. See the diagram below:

Think of this function as a watchdog that keeps an eye on how hard each broker is working. When a broker's workload (measured by CPU utilization) goes above a certain level, the Autoscaler function kicks in and does two things:
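A rough sketch of such a watchdog is shown here. It assumes, based on the article's own remark that the system no longer needs brokers added or data moved manually, that the two actions are adding a broker and moving partitions onto it. The threshold value, the broker numbering, and the `plan_scale_out` helper are hypothetical, and the sketch only plans the actions rather than calling any AWS API.

```python
from typing import Dict, List

# Hypothetical CPU utilization threshold, in percent.
CPU_THRESHOLD = 70.0


def plan_scale_out(
    cpu_by_broker: Dict[int, float], threshold: float = CPU_THRESHOLD
) -> List[tuple]:
    """Return the scale-out actions to take, or an empty list if none are needed."""
    # Brokers whose CPU utilization exceeds the threshold.
    hot = sorted(b for b, cpu in cpu_by_broker.items() if cpu > threshold)
    if not hot:
        return []
    # Assumed numbering: the new broker takes the next free id.
    new_broker = max(cpu_by_broker) + 1
    return [
        ("add_broker", new_broker),
        ("move_partitions", hot, new_broker),
    ]
```

For example, `plan_scale_out({1: 40.0, 2: 85.0})` plans a third broker and a partition move away from broker 2, while a cluster with every broker under the threshold yields no actions.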

This way, the MSK system can automatically adapt to handle more data and traffic without the need to add brokers or move data around manually.

Domain-Based Sharding

To ensure that the messaging system can handle a lot of data and minimize the risk of failures, they divide events into separate groups based on their domain. Each group has its own dedicated MSK cluster. This is like having separate mailrooms for different departments in a large company.

The domain of an event determines which cluster and topic it belongs to. For example, events related to user profiles might go to one cluster, while events related to product orders might go to another. Applications that need to receive events can choose to get them from any of these domain-based topics. This improves flexibility and helps distribute the workload across the system.

To make sure the platform is always available and can serve users globally, it is set up to work across multiple regions. In each region, there is a high-availability configuration. This means that if one part of the system goes down, another part can take over seamlessly, ensuring uninterrupted service.

Conclusion

McDonald's event-driven architecture demonstrates a successful implementation of a large-scale, global event processing platform. The system effectively handles diverse use cases from mobile order tracking to marketing communications while maintaining high reliability and performance.

Key success factors include the robust implementation of AWS MSK, effective schema management, and comprehensive error-handling mechanisms. The architecture's domain-based sharding approach and auto-scaling capabilities have proven crucial for handling growing event volumes. Some best practices established through this implementation include:

Looking ahead, McDonald's platform is positioned to evolve with planned enhancements including:

These improvements will further strengthen the platform's capabilities while maintaining its core design principles of scalability, reliability, and simplicity.

© 2024 ByteByteGo

by "ByteByteGo" <bytebytego@substack.com> - 11:36 - 5 Nov 2024