
How Netflix Stores 140 Million Hours of Viewing Data Per Day


Disclaimer: The details in this post have been derived from the Netflix Tech Blog. All credit for the technical details goes to the Netflix engineering team. Some details related to Apache Cassandra® have been taken from Apache Cassandra® official documentation. Apache Cassandra® is a registered trademark of The Apache Software Foundation. The links to the original articles are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Every single day, millions of people stream their favorite movies and TV shows on Netflix. With each stream, a massive amount of data is generated: what a user watches, when they pause, rewind, or stop, and what they return to later. This information is essential for providing users with features like resume watching, personalized recommendations, and content suggestions.

However, Netflix's growth has led to an explosion of time series data (data recorded over time, like a user’s viewing history). The company relies heavily on this data to enhance the user experience, but handling such a vast and ever-increasing amount of information also presents a technical challenge in the following ways:

Failing to manage this data properly could badly degrade the user experience: delays when loading watch history, useless recommendations, or even lost progress in shows.

In this article, we’ll learn how Netflix tackled these problems and improved their storage system to handle millions of hours of viewing data every day.

The Initial Approach

When Netflix first started handling large amounts of viewing data, they chose Apache Cassandra® for the following reasons:

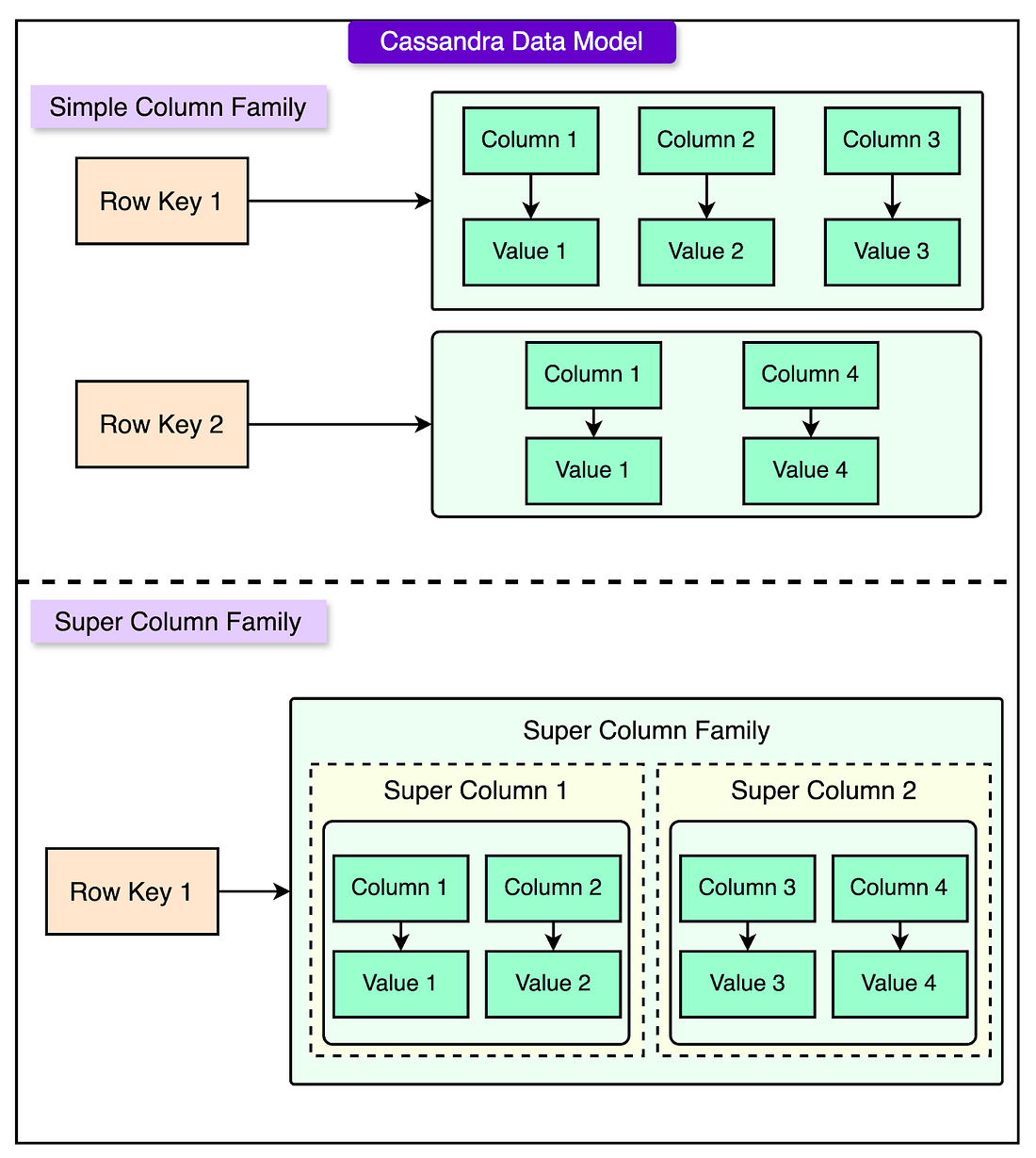

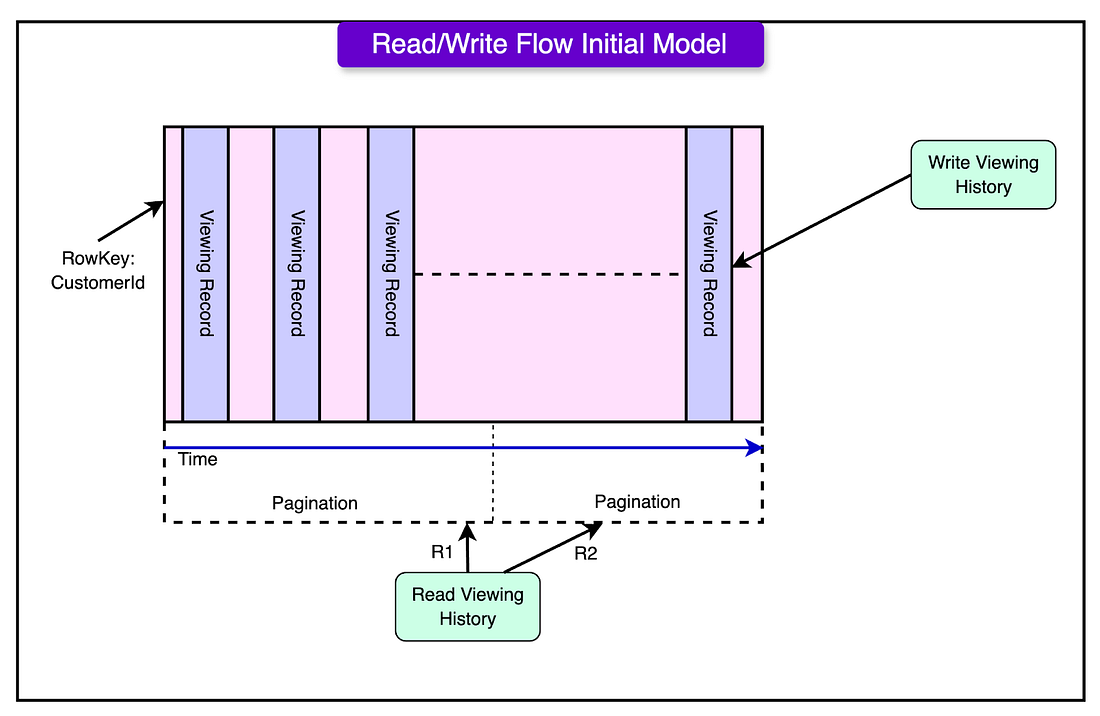

See the diagram below, which shows the data model of Apache Cassandra® using column families.

To structure the data efficiently, Netflix designed a simple yet scalable storage model in Cassandra®. Each user’s viewing history was stored under their unique ID (CustomerId). Every viewing record (such as a movie or TV show watched) was stored in a separate column under that user’s ID. To handle millions of users, Netflix used "horizontal partitioning," meaning data was spread across multiple servers based on CustomerId. This ensured that no single server was overloaded.

Reads and Writes in the Initial System

The diagram below shows how the initial system handled reads and writes to the viewing history data.

Every time a user started watching a show or movie, Netflix added a new column to their viewing history record in the database. If the user paused or stopped watching, that same column was updated to reflect their latest progress.

While storing data was easy, retrieving it efficiently became more challenging as users' viewing histories grew. Netflix used three different methods to fetch data, each with its advantages and drawbacks:
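The storage model and write path described above can be illustrated with a small in-memory sketch. This is not Netflix's code or the Cassandra® API: the class `ViewingHistoryStore`, its method names, the CRC32-based node selection, and the use of a playback position in seconds as the column value are all assumptions made for illustration.

```python
import zlib
from collections import defaultdict


class ViewingHistoryStore:
    """Toy stand-in for a wide-row table keyed by CustomerId (hypothetical)."""

    def __init__(self, num_nodes=4):
        # One dict per simulated server: customer_id -> {title_id: position}.
        self.nodes = [defaultdict(dict) for _ in range(num_nodes)]

    def _node_for(self, customer_id):
        # Horizontal partitioning: the CustomerId alone decides the server.
        # CRC32 is an arbitrary stable hash chosen for this sketch.
        index = zlib.crc32(str(customer_id).encode("utf-8")) % len(self.nodes)
        return self.nodes[index]

    def record_progress(self, customer_id, title_id, position_sec):
        # First play of a title adds a new column to the customer's row;
        # a later pause or stop overwrites that same column.
        row = self._node_for(customer_id)[customer_id]
        row[title_id] = position_sec

    def read_history(self, customer_id):
        # Reads the whole row, which gets more expensive as the row grows.
        return dict(self._node_for(customer_id).get(customer_id, {}))


store = ViewingHistoryStore(num_nodes=3)
store.record_progress("cust-1", "title-A", 0)      # started watching
store.record_progress("cust-1", "title-A", 1200)   # paused 20 minutes in
store.record_progress("cust-1", "title-B", 30)
print(store.read_history("cust-1"))
```

Because every title a customer has ever watched lives in one row, the cost of `read_history` grows with the length of the history, which is the pressure the following sections describe.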

At first, this system worked well because it provided a fast and scalable way to store viewing history. However, as more users watched more content, this system started to hit performance limits. Some of the issues were as follows:

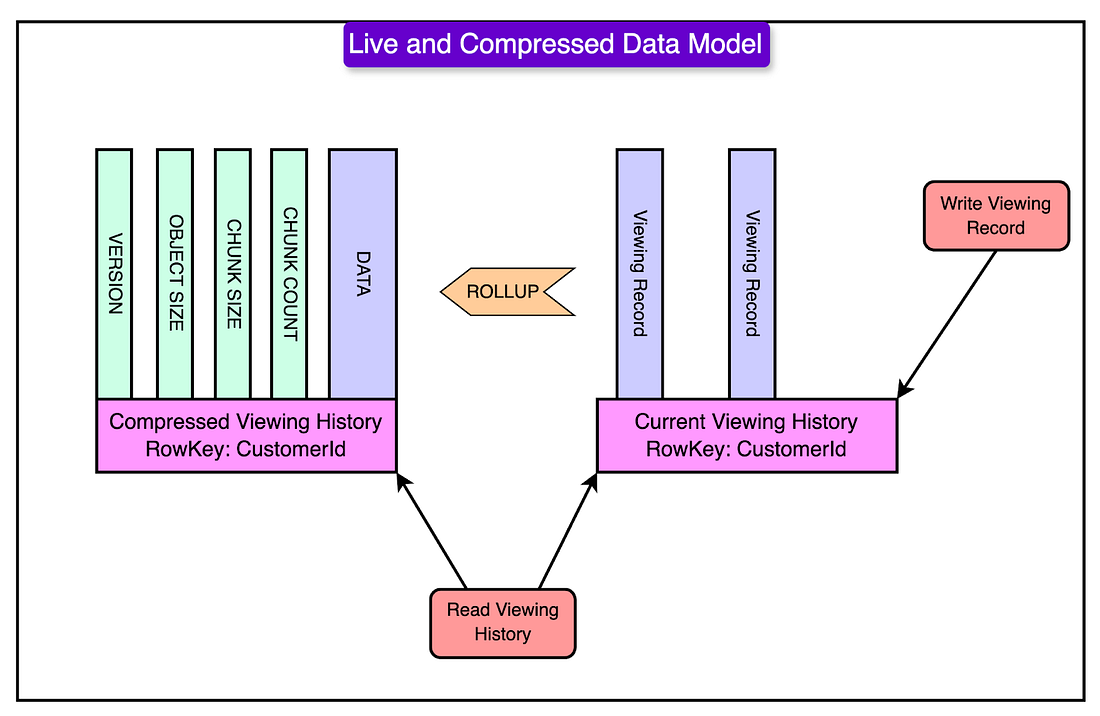

To speed up data retrieval, Netflix introduced a caching solution called EVCache. Instead of reading everything from the Apache Cassandra® database every time, each user’s viewing history is stored in a cache in a compressed format. When a user watches a new show, their viewing data is added to Apache Cassandra® and merged with the cached value in EVCache. If a user’s viewing history is not found in the cache, Netflix fetches it from Cassandra®, compresses it, and then stores it in EVCache for future use.

By adding EVCache, Netflix significantly reduced the load on their Apache Cassandra® database. However, this solution also had its limits.

The New Approach: Live & Compressed Storage Model

One important fact about the viewing history data was this: most users frequently accessed only their recent viewing history, while older data was rarely needed. However, storing everything the same way led to unnecessary performance and storage costs. To solve this, Netflix redesigned its storage model by splitting viewing history into two categories:
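The caching behavior described earlier in this section (fill the cache on a miss, merge into the cached value on a write) can be sketched as follows. This is a minimal sketch and does not use the real EVCache client: plain dictionaries stand in for both EVCache and Cassandra®, and the class name, method names, and the JSON-plus-zlib encoding are assumptions.

```python
import json
import zlib


class CachedViewingHistory:
    """Read-through cache with merge-on-write (hypothetical sketch)."""

    def __init__(self, database):
        self.database = database  # stand-in for Cassandra: id -> {title: pos}
        self.cache = {}           # stand-in for EVCache: id -> compressed bytes
        self.db_reads = 0         # counts how often the database is hit

    @staticmethod
    def _pack(history):
        return zlib.compress(json.dumps(history).encode("utf-8"))

    @staticmethod
    def _unpack(blob):
        return json.loads(zlib.decompress(blob).decode("utf-8"))

    def read(self, customer_id):
        blob = self.cache.get(customer_id)
        if blob is None:
            # Cache miss: fetch from the database, compress, store for later.
            self.db_reads += 1
            history = dict(self.database.get(customer_id, {}))
            self.cache[customer_id] = self._pack(history)
            return history
        return self._unpack(blob)

    def write(self, customer_id, title_id, position_sec):
        # The database is always written.
        self.database.setdefault(customer_id, {})[title_id] = position_sec
        # If a cached value exists, merge the new record into it.
        blob = self.cache.get(customer_id)
        if blob is not None:
            merged = self._unpack(blob)
            merged[title_id] = position_sec
            self.cache[customer_id] = self._pack(merged)


db = {"c1": {"t1": 10}}
vh = CachedViewingHistory(db)
vh.read("c1")            # miss: loads from the database
vh.write("c1", "t2", 99)
print(vh.read("c1"), "database reads:", vh.db_reads)
```

In this sketch the second read is served entirely from the cache, so the database read counter stays at one even though the history changed in between.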

Since LiveVH and CompressedVH serve different purposes, they were tuned differently to maximize performance.

For LiveVH, which stores recent viewing records, Netflix prioritized speed and real-time updates. Frequent compactions were performed to clean up old data and keep the system running efficiently. Additionally, a low GC (Garbage Collection) grace period was set, meaning outdated records were removed quickly to free up space. Since this data was accessed often, frequent read repairs were implemented to maintain consistency, ensuring that users always saw accurate and up-to-date viewing progress.

On the other hand, CompressedVH, which stores older viewing records, was optimized for storage efficiency rather than speed. Since this data was rarely updated, fewer compactions were needed, reducing unnecessary processing overhead. Read repairs were also performed less frequently, as data consistency was less critical for archival records. The most significant optimization was compressing the stored data, which drastically reduced the storage footprint while still making older viewing history accessible when needed.

The Large View History Performance Issue

Even with compression, some users had extremely large viewing histories. For these users, reading and writing a single massive compressed file became inefficient. If CompressedVH grows too large, retrieving data becomes slow. Also, single large files create performance bottlenecks when read or written.

To avoid these issues, Netflix introduced chunking, where large compressed data is split into smaller parts and stored across multiple Apache Cassandra® database nodes. Here’s how it works:
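The chunking idea can be sketched in a few lines. The text above only says that large compressed data is split into smaller parts stored across nodes, so everything else here is an assumption: the `(customer_id, index)` key layout, the separate metadata entry holding the chunk count, the fixed chunk size, and the function names are hypothetical, and a dictionary stands in for the Cassandra® table.

```python
import json
import zlib

CHUNK_SIZE = 1024  # bytes per chunk; an arbitrary value for this sketch


def write_chunked(table, customer_id, history, chunk_size=CHUNK_SIZE):
    """Compress a history and store it as fixed-size chunks."""
    blob = zlib.compress(json.dumps(history).encode("utf-8"))
    chunks = [blob[i:i + chunk_size] for i in range(0, len(blob), chunk_size)]
    # Each chunk has its own key, so a real cluster could place the
    # chunks on different nodes and read or write them in parallel.
    for index, chunk in enumerate(chunks):
        table[(customer_id, index)] = chunk
    # The metadata entry is written last, once all chunks exist.
    table[(customer_id, "meta")] = len(chunks)
    return len(chunks)


def read_chunked(table, customer_id):
    """Look up the chunk count, then reassemble and decompress."""
    count = table.get((customer_id, "meta"))
    if count is None:
        return {}
    blob = b"".join(table[(customer_id, i)] for i in range(count))
    return json.loads(zlib.decompress(blob).decode("utf-8"))


table = {}
history = {f"title-{i}": i * 37 for i in range(500)}
n = write_chunked(table, "cust-1", history, chunk_size=64)
print("chunks written:", n)
print("round trip ok:", read_chunked(table, "cust-1") == history)
```

The sketch ignores concerns a production system would need to handle, such as cleaning up leftover chunks when a rewritten history needs fewer chunks than before.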

This system gave Netflix the headroom needed to handle future growth.

The New Challenges

With the global expansion of Netflix, the company launched its service in 130+ new countries and introduced support for 20 languages. This led to a massive surge in data storage and retrieval needs.

At the same time, Netflix introduced video previews in the user interface (UI), a feature that allowed users to watch short clips before selecting a title. While this improved the browsing experience, it also dramatically increased the volume of time-series data being stored.

When Netflix analyzed the performance of its system, it found several inefficiencies:

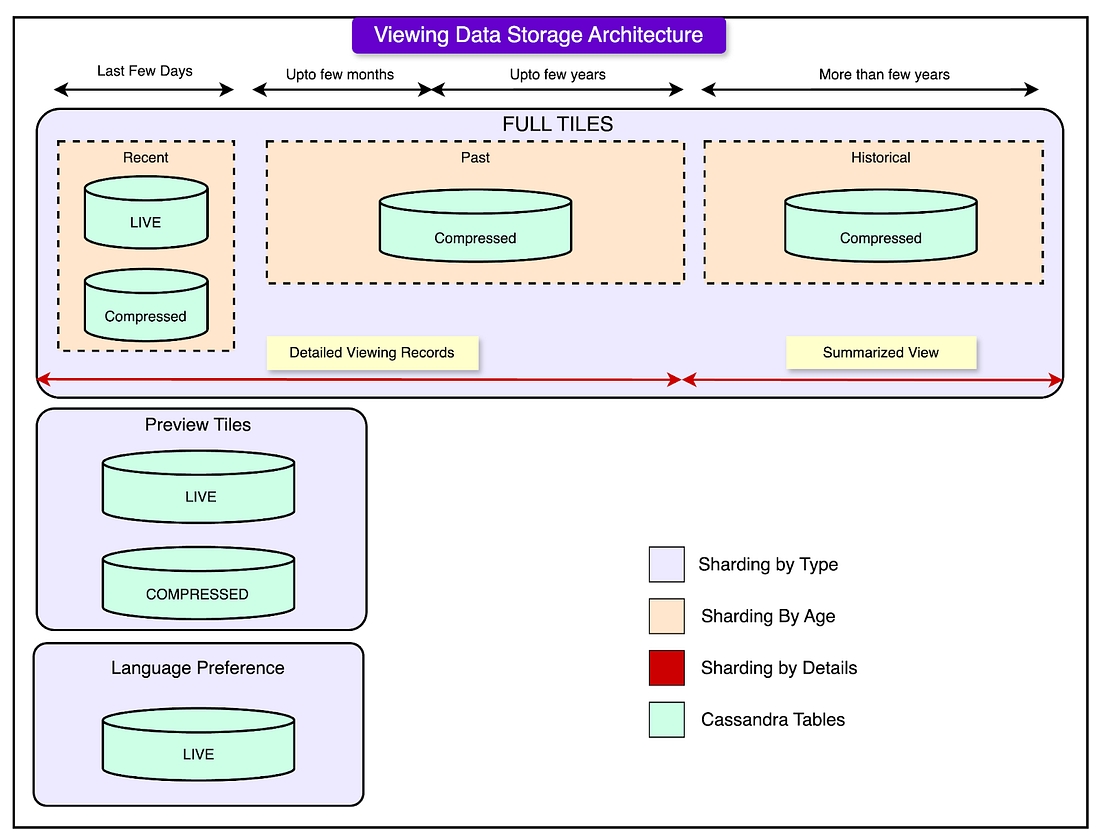

At this stage, Netflix’s existing architecture could no longer scale efficiently. Apache Cassandra® had been a solid choice for scalability, but Netflix was already operating one of the largest Apache Cassandra® clusters in existence. The company had pushed Apache Cassandra® to its limits, and without a new approach, performance issues would continue to worsen as the platform grew. A more fundamental redesign was needed.

Netflix’s New Storage Architecture

To overcome the growing challenges of data overload, inefficient retrieval, and high latency, Netflix introduced a new storage architecture that categorized and stored data more intelligently.

Step 1: Categorizing Data by Type

One of the biggest inefficiencies in the previous system was that all viewing data (whether it was a full movie playback, a short video preview, or a language preference setting) was stored together. Netflix solved this by splitting viewing history into three separate categories, each with its own dedicated storage cluster:

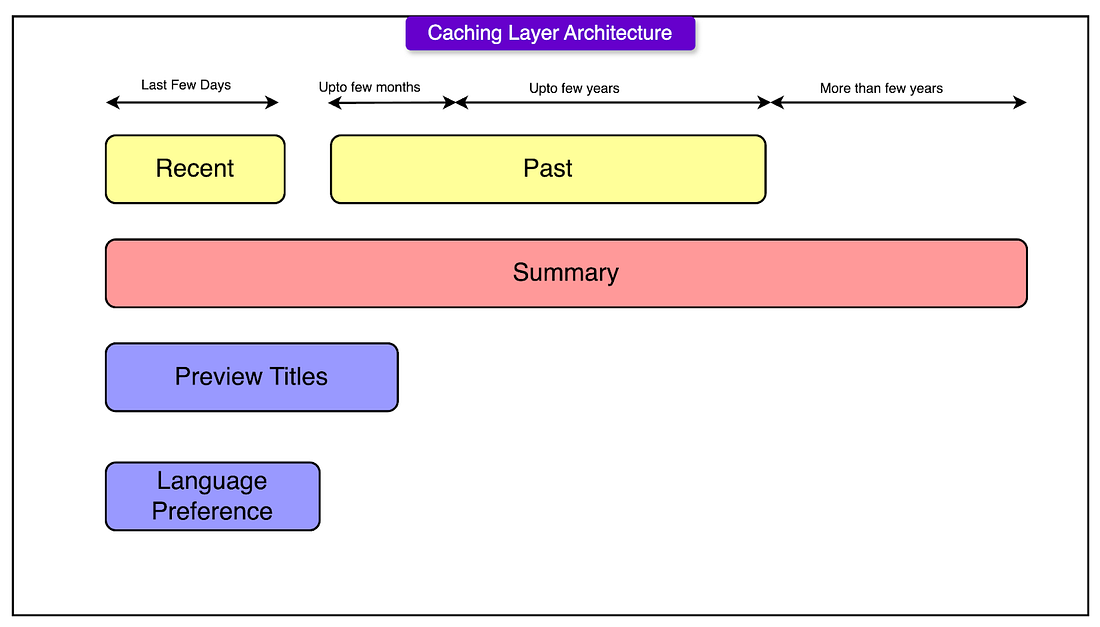

Step 2: Sharding Data for Better Performance

Netflix sharded (split) its data across multiple clusters based on data type and data age, improving both storage efficiency and query performance. See the diagram below for reference:

1 - Sharding by Data Type

Each of the three data categories (Full Plays, Previews, and Language Preferences) was assigned its own separate cluster. This allowed Netflix to tune each cluster differently based on how frequently the data was accessed and how long it needed to be stored. It also prevented one type of data from overloading the entire system.

2 - Sharding by Data Age

Not all data is accessed equally. Users frequently check their recent viewing history, but older data is rarely needed. To optimize for this, Netflix divided its data storage into three time-based clusters:
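Routing a record by both type and age can be sketched as a small lookup function. The three data types come from the text above; the bucket names (`recent`, `older`, `oldest`), the 90-day and 730-day cut-offs, the cluster naming scheme, and the assumption that every data type uses the same age buckets are all hypothetical and not taken from Netflix's design.

```python
from datetime import date, timedelta
from enum import Enum


class DataType(Enum):
    FULL_PLAY = "full_play"
    PREVIEW = "preview"
    LANGUAGE_PREFERENCE = "language_preference"


# Hypothetical age cut-offs, checked from newest to oldest.
AGE_BUCKETS = [
    ("recent", timedelta(days=90)),
    ("older", timedelta(days=730)),
]
OLDEST_BUCKET = "oldest"  # anything beyond the last cut-off


def cluster_for(data_type, event_date, today):
    """Pick a cluster name from the record's type and its age."""
    age = today - event_date
    for name, limit in AGE_BUCKETS:
        if age <= limit:
            return f"{data_type.value}-{name}"
    return f"{data_type.value}-{OLDEST_BUCKET}"


today = date(2025, 3, 18)
print(cluster_for(DataType.FULL_PLAY, date(2025, 3, 1), today))
print(cluster_for(DataType.PREVIEW, date(2024, 3, 18), today))
print(cluster_for(DataType.FULL_PLAY, date(2020, 1, 1), today))
```

Keeping the routing rule in one function means a read for recent full plays touches a single small cluster, while the larger, colder clusters are queried only when older data is explicitly requested.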

Optimizations in the New Architecture

To keep the system efficient, scalable, and cost-effective, the Netflix engineering team introduced several key optimizations.

1 - Improving Storage Efficiency

With the introduction of video previews and multi-language support, Netflix had to find ways to reduce the amount of unnecessary data stored. The following strategies were adopted:

2 - Making Data Retrieval More Efficient

Instead of fetching all data at once, the system was redesigned to retrieve only what was needed:

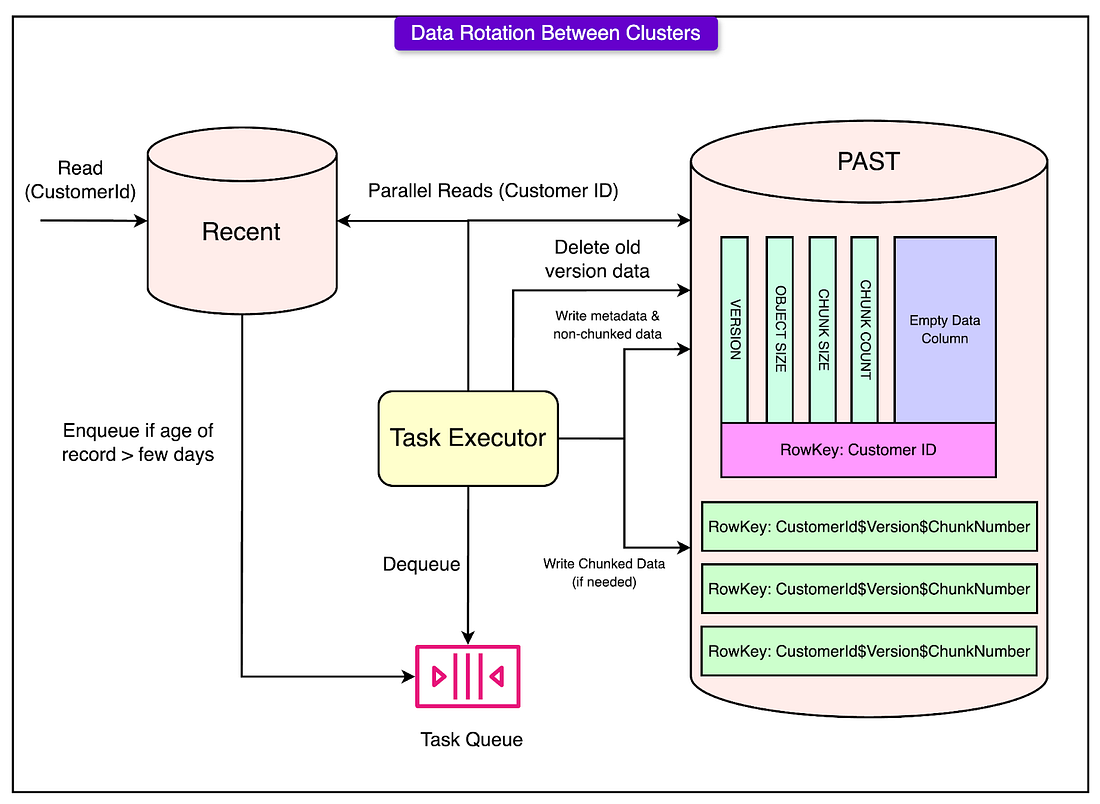

3 - Automating Data Movement with Data Rotation

To keep the system optimized, Netflix introduced a background process that automatically moved older data to the appropriate storage location:

See the diagram below:

4 - Caching for Faster Data Access

Netflix restructured its EVCache (in-memory caching layer) to mirror the backend storage architecture. A new summary cache cluster was introduced, storing precomputed summaries of viewing data for most users. This meant that instead of computing summaries every time a user made a request, Netflix could fetch them instantly from the cache. They managed to achieve a 99% cache hit rate, meaning that nearly all requests were served from memory rather than querying Apache Cassandra®, reducing overall database load.

Conclusion

With the growth that Netflix went through, their engineering team had to evolve the time-series data storage system to meet the increasing demands.

Initially, Netflix relied on a simple Apache Cassandra®-based architecture, which worked well for early growth but struggled as data volume soared. The introduction of video previews, global expansion, and multilingual support pushed the system to its breaking point, leading to high latency, storage inefficiencies, and unnecessary data retrieval.

To address these challenges, Netflix redesigned its architecture by categorizing data into Full Title Plays, Video Previews, and Language Preferences and sharding data by type and age. This allowed for faster access to recent data and efficient storage for older records.

These innovations allowed Netflix to scale efficiently, reducing storage costs, improving data retrieval speeds, and ensuring a great streaming experience for millions of users worldwide.

Note: Apache Cassandra® is a registered trademark of the Apache Software Foundation.

References:

© 2025 ByteByteGo

by "ByteByteGo" <bytebytego@substack.com> - 11:36 - 18 Mar 2025