How Uber Built Real-Time Chat to Handle 3 Million Tickets Per Week?


Uber has a diverse customer base consisting of riders, drivers, eaters, couriers, and merchants. Each user persona has different support requirements when reaching out to Uber’s customer agents through various live and non-live channels. The live channels are chat and phone, while the non-live channel is Uber’s in-app messaging.

For users, timely resolution of issues matters most. Uber, however, is mainly concerned with customer satisfaction and the cost of resolving tickets. To keep costs under control, Uber needs to maintain a low CPC (cost per contact) together with a good customer satisfaction rating.

Based on its analysis, Uber found that the live chat channel offers the most value compared to other channels. It allows for:

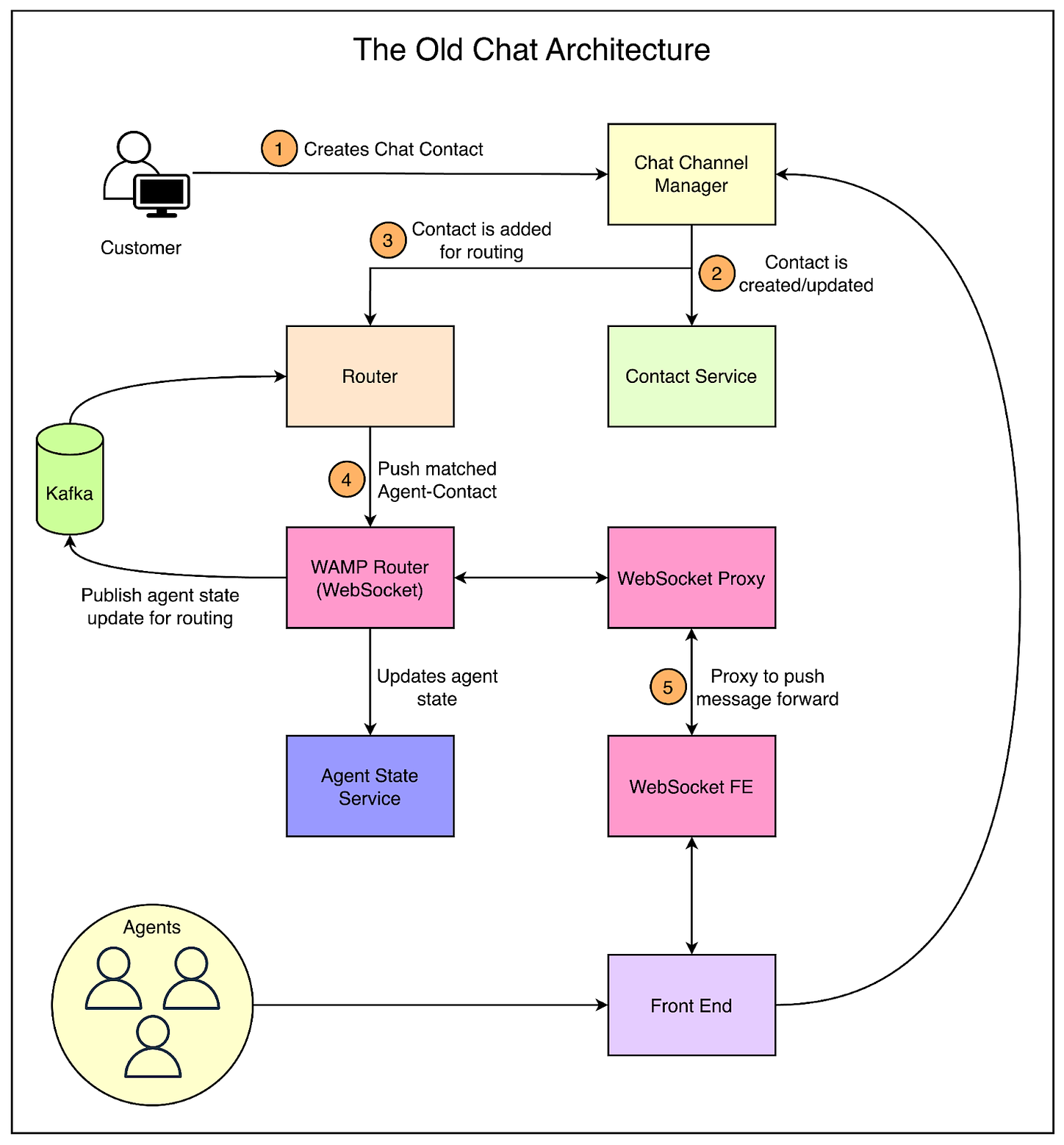

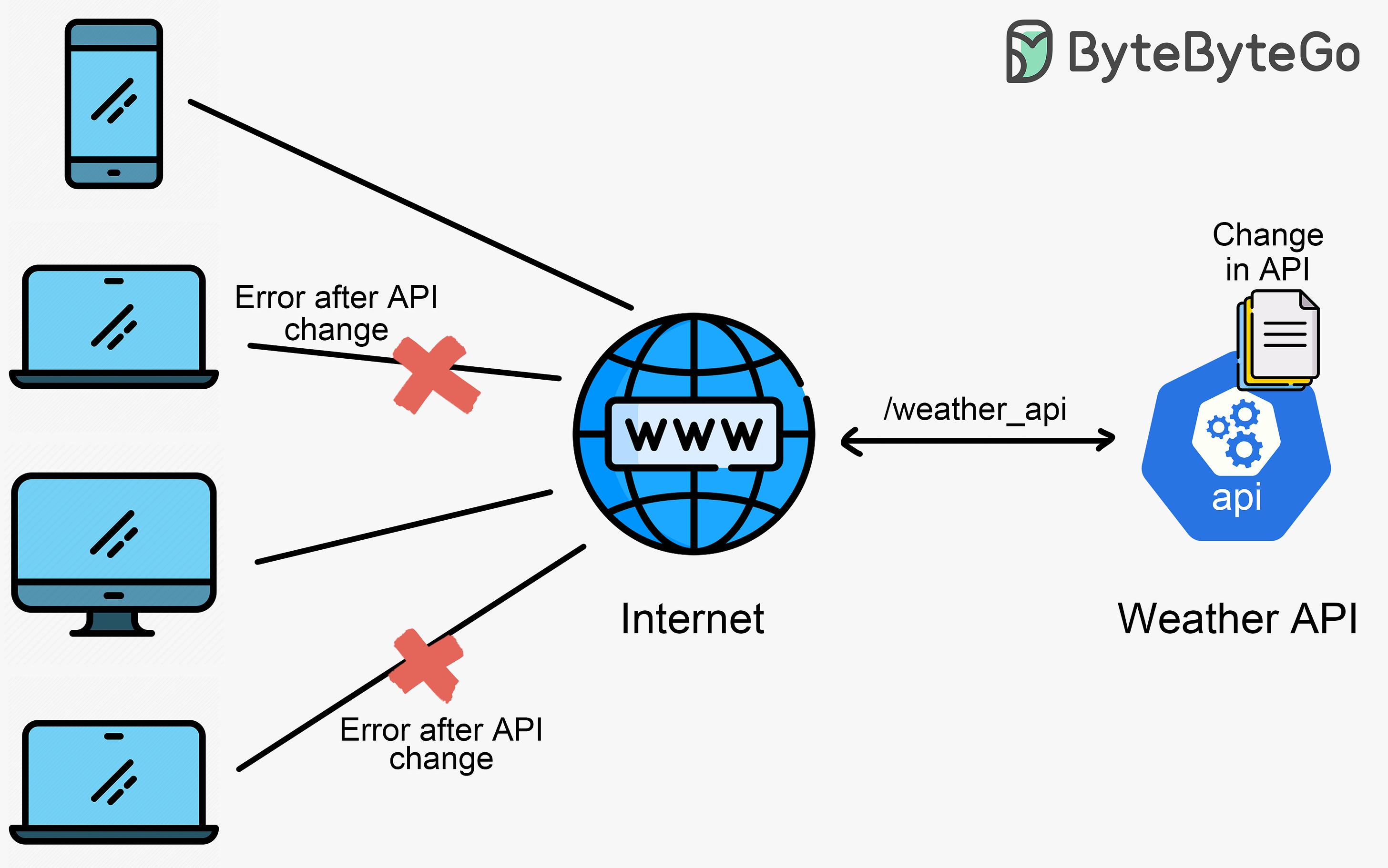

However, from 2019 to 2024, only 1% of all support interactions (also known as contacts) were served via the live chat channel, because the chat infrastructure at Uber wasn’t capable of meeting the demand.

In this post, we look at how Uber built its real-time chat channel to work at the required scale.

The Legacy Chat Architecture

The legacy architecture for live chat at Uber was built on WAMP (Web Application Messaging Protocol), a WebSocket subprotocol used to exchange messages between application components. It was primarily used for message passing and PubSub over WebSockets to relay contact information to the agent’s machine.

The diagram below shows the high-level flow of a chat contact, from creation to being routed to an agent on the front end.

This architecture had some core issues:

1 - Reliability

Once traffic scaled to 1.5X, the system started to face reliability issues. Almost 46% of the events from the backend were not delivered to the agent’s browser, which added to the customer’s wait time to speak to an agent. It also created delays for the agent, which wasted agent bandwidth.

2 - Scale

Once the load crossed 10 requests per second, the system’s performance deteriorated due to high memory usage and file descriptor leaks. It also wasn’t possible to scale the system horizontally because of limitations in older versions of the WAMP library.

3 - Observability and Debugging

There were major issues related to observability and debugging:

4 - Stateful

The services in the architecture were stateful, which complicated maintenance and restarts. This caused frequent spikes in message delivery time as well as message losses.

Goals of the New Chat Architecture

Due to these challenges, Uber decided to build a new real-time chat infrastructure with the following goals:

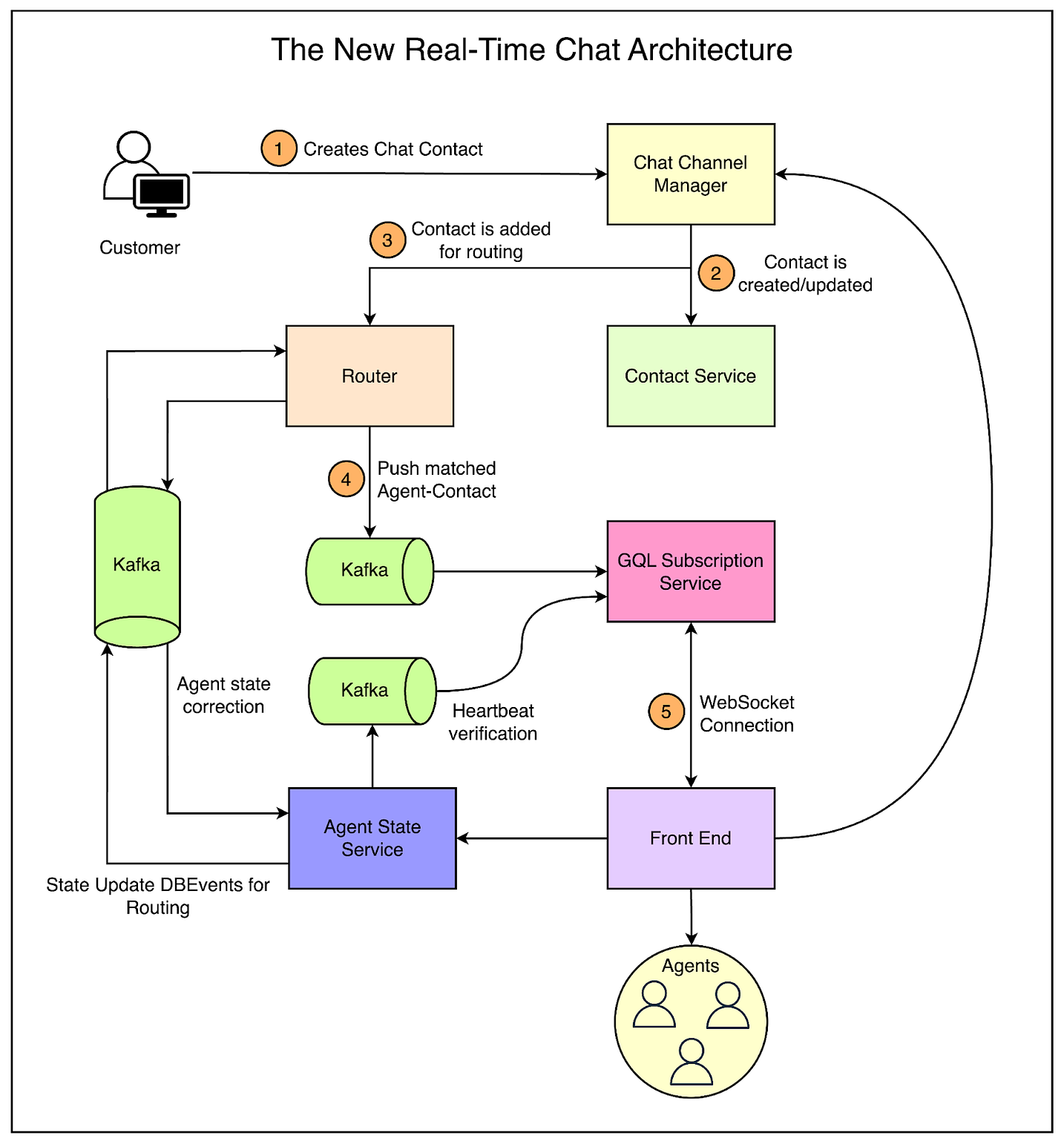

The New Live Chat Architecture

The new architecture needed to be simple in order to improve transparency and scalability. The team at Uber decided to go with the Push Pipeline: a simple WebSocket server that agent machines connect to, sending and receiving messages through one generic socket channel.

The diagram below shows the new architecture.

Below are the details of the various components:

Front End UI

This is used by the agents to interact with the customers. Widgets and actions are made available so that agents can take the appropriate steps for the customer.

Contact Reservation

The router is the service that finds the most appropriate match between an agent and a contact, depending on the contact details. An agent is selected based on the concurrency configured in the agent’s profile, such as the number of chats the agent can handle simultaneously. Other considerations include:

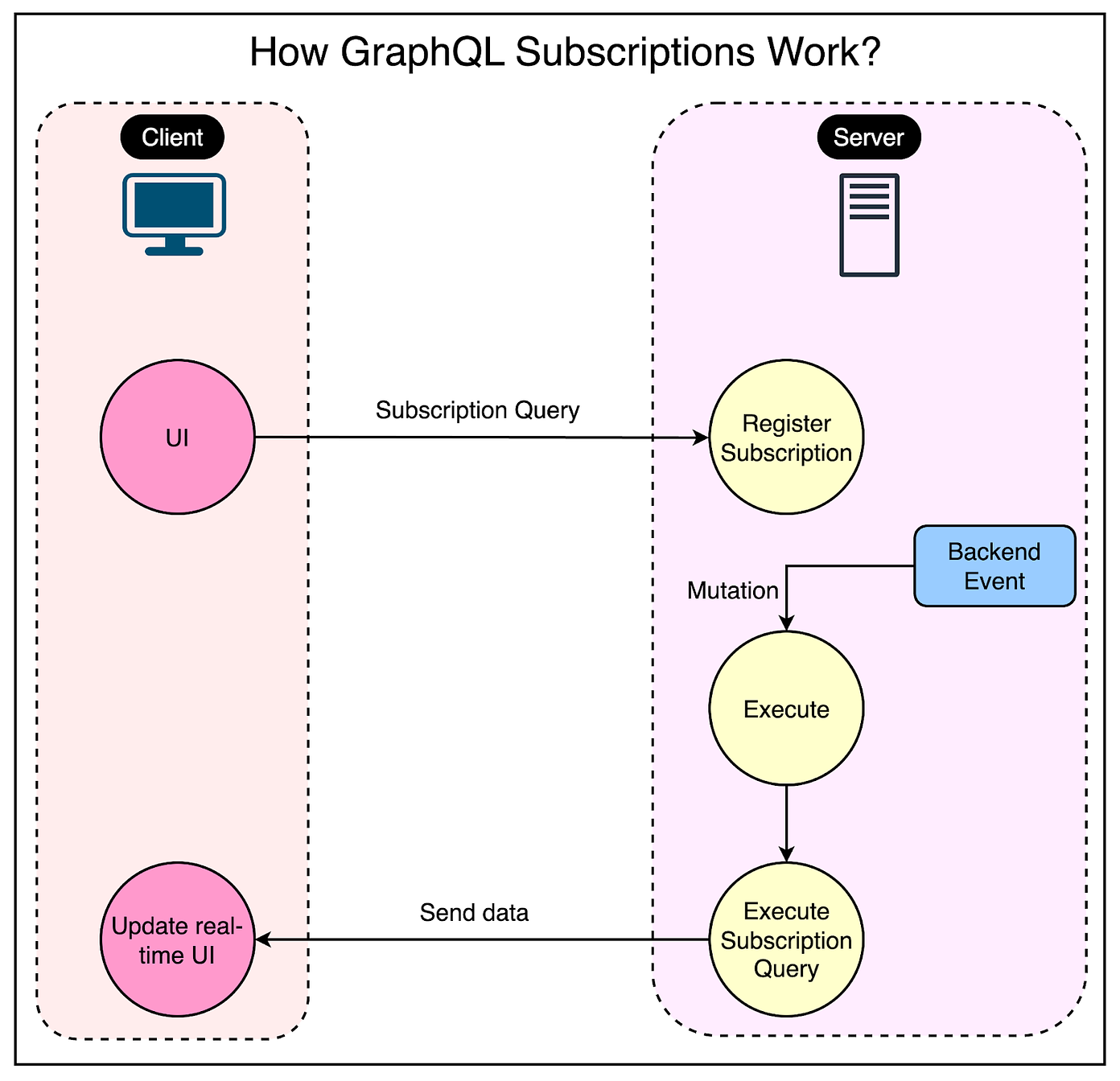
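The concurrency-based matching described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Uber’s router: the `Agent`, `Contact`, and `Reservation` shapes, the `online` flag, and the "most free slots wins" tie-break are all hypothetical, and the other routing considerations are not modeled.

```typescript
// Hypothetical shapes for illustration; field names are not Uber's schema.
interface Agent {
  id: string;
  online: boolean;            // assumed availability flag
  maxConcurrentChats: number; // concurrency configured in the agent's profile
  activeChats: number;        // chats the agent is currently handling
}

interface Contact {
  id: string;
}

interface Reservation {
  contactId: string;
  agentId: string;
  state: "reserved";
}

// Number of additional chats the agent could take right now.
function freeSlots(agent: Agent): number {
  return agent.maxConcurrentChats - agent.activeChats;
}

// Reserve the contact for an online agent with spare capacity.
// Preferring the agent with the most free slots is an assumption of this sketch.
function reserveContact(contact: Contact, agents: Agent[]): Reservation | null {
  const candidates = agents.filter((a) => a.online && freeSlots(a) > 0);
  if (candidates.length === 0) {
    return null; // nobody can take the contact; the caller would queue or retry
  }
  const chosen = candidates.reduce((best, a) =>
    freeSlots(a) > freeSlots(best) ? a : best
  );
  chosen.activeChats += 1; // the reserved contact now occupies one slot
  return { contactId: contact.id, agentId: chosen.id, state: "reserved" };
}
```

Calling `reserveContact` with a contact and the current list of agents returns a reservation for the chosen agent, or `null` when every agent is offline or already at their concurrency limit.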

On finding the match, the contact is pushed into a reserved state for the agent.

Push Pipeline

When the contact is reserved for an agent, the information is published to Kafka and is received by the GQL Subscription Service. On receiving the information through the socket via GraphQL subscriptions, the Front End loads the contact for the agent along with all the necessary widgets and actions.

Agent State

When agents start working, they go online via a toggle on the Front End. This updates the Agent State service, allowing the agent to be mapped to a contact.

GQL Subscription Service

The front-end team was already using GraphQL for HTTP calls to the services. Due to this familiarity, the team selected GraphQL subscriptions for pushing data from the server to the client.

The diagram below shows how GraphQL subscriptions work at a high level.

In GraphQL subscriptions, the client sends messages to the server via subscription requests. The server matches the queries and sends back messages to the client machines. In this case, the client machines are the agent machines.

Uber’s engineering team used GraphQL over WebSockets by leveraging the graphql-ws library. The library had almost 2.3 million weekly downloads and was also recommended by Apollo.

To improve the availability, they used a few techniques:
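The reserve-and-push flow described in this section can be illustrated with a small in-memory model. This is a minimal sketch, not Uber’s implementation: the `SubscriptionService` class, the `ContactReservedEvent` shape, and the method names are hypothetical stand-ins. In the real system, events arrive from Kafka and are pushed to agents as GraphQL subscription messages over WebSockets, neither of which is modeled here.

```typescript
// Hypothetical event published when a contact is reserved for an agent.
interface ContactReservedEvent {
  type: "CONTACT_RESERVED";
  agentId: string;
  contactId: string;
}

type Listener = (event: ContactReservedEvent) => void;

// In-memory stand-in for a subscription service: it tracks which agent
// connections have subscribed and forwards each event to the matching agent.
class SubscriptionService {
  private listeners = new Map<string, Set<Listener>>();

  // Models an agent's front end opening a subscription over its socket.
  // Returns a function that cancels the subscription.
  subscribe(agentId: string, listener: Listener): () => void {
    const existing = this.listeners.get(agentId);
    const set = existing !== undefined ? existing : new Set<Listener>();
    set.add(listener);
    this.listeners.set(agentId, set);
    return () => {
      set.delete(listener);
    };
  }

  // Models handling one event consumed from the message bus.
  // Returns how many subscribed connections received the event.
  onEvent(event: ContactReservedEvent): number {
    const set = this.listeners.get(event.agentId);
    if (set === undefined) {
      return 0; // no connection for this agent; a real system would need a fallback
    }
    set.forEach((listener) => listener(event));
    return set.size;
  }
}
```

In this model, the front end would call `subscribe` once when the agent goes online and load the contact inside the listener. Events addressed to other agents never reach that listener, which mirrors the server matching each event against the active subscriptions.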

Test Results from the New Chat Architecture

Uber performed functional and non-functional tests to ensure that both customers and agents received the best experience. Some results from the tests were as follows:


by "ByteByteGo" <bytebytego@substack.com> - 11:36 - 23 Apr 2024