
How Uber Uses Integrated Redis Cache to Serve 40M Reads/Second?



In 2020, Uber launched its in-house distributed database, Docstore. It was built on top of MySQL and was capable of storing tens of petabytes of data while serving tens of millions of requests per second.

Over the years, Docstore was adopted by all business verticals at Uber for building their services. Most of these applications needed low latency, high performance, and scalability from the database while also handling growing workloads.

Challenges with Low Latency Database Reads

Every database faces a challenge when dealing with applications that need low-latency read access with a highly scalable design. Some of these challenges are as follows:

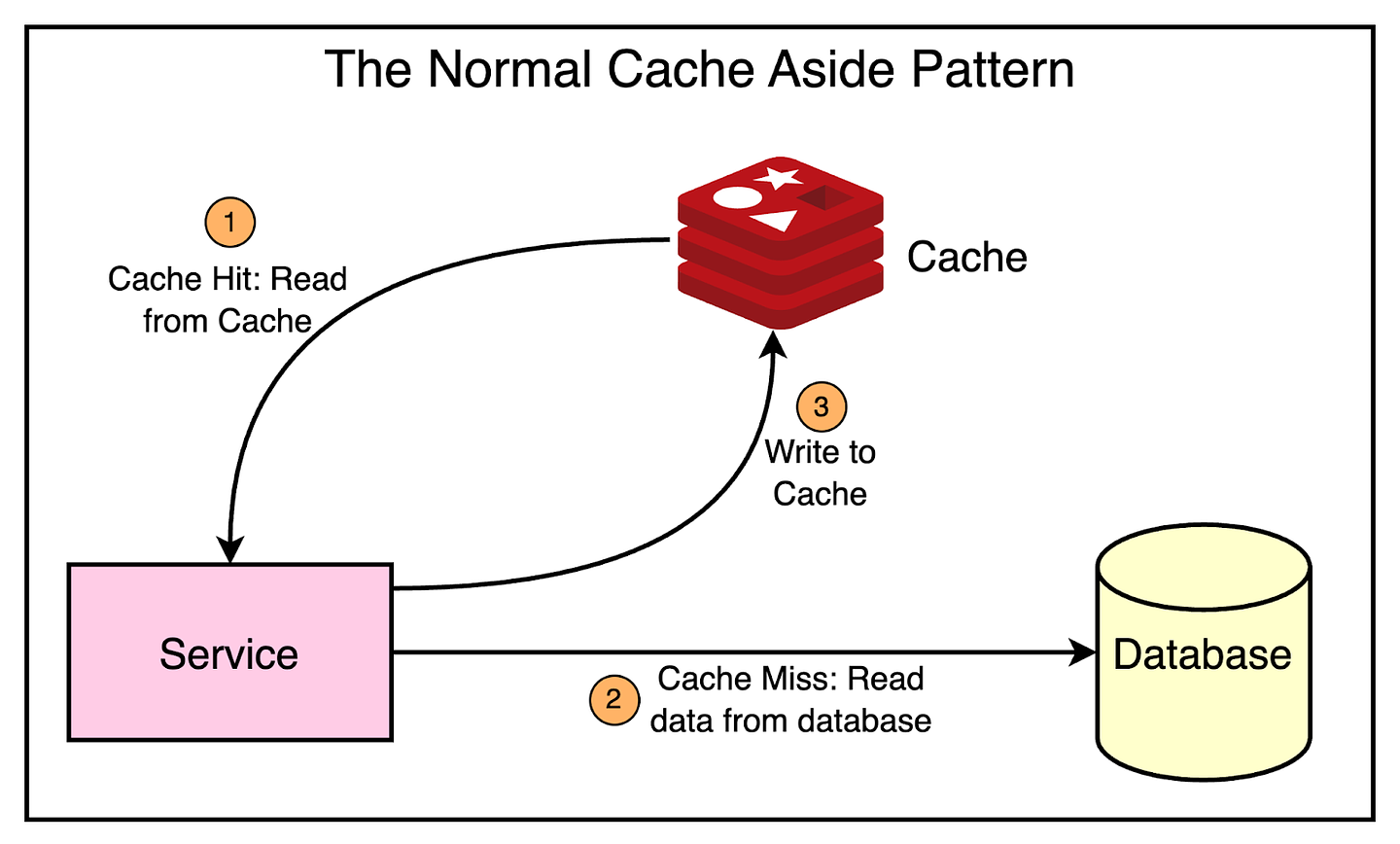

To overcome these challenges, microservices typically make use of caching. Uber started offering Redis as a distributed caching solution for the various teams. They followed the typical caching design pattern where the service writes to the database and cache while serving reads directly from the cache.

The below diagram shows this pattern:

However, the normal caching pattern where a service takes care of managing the cache has a few problems at the scale of Uber.

The main point is that every team that needed caching had to spend a large amount of effort to build and maintain a custom caching solution. To avoid this, Uber decided to build an integrated caching solution known as CacheFront.

Design Goals with CacheFront

While building CacheFront, Uber had a few important design goals in mind:

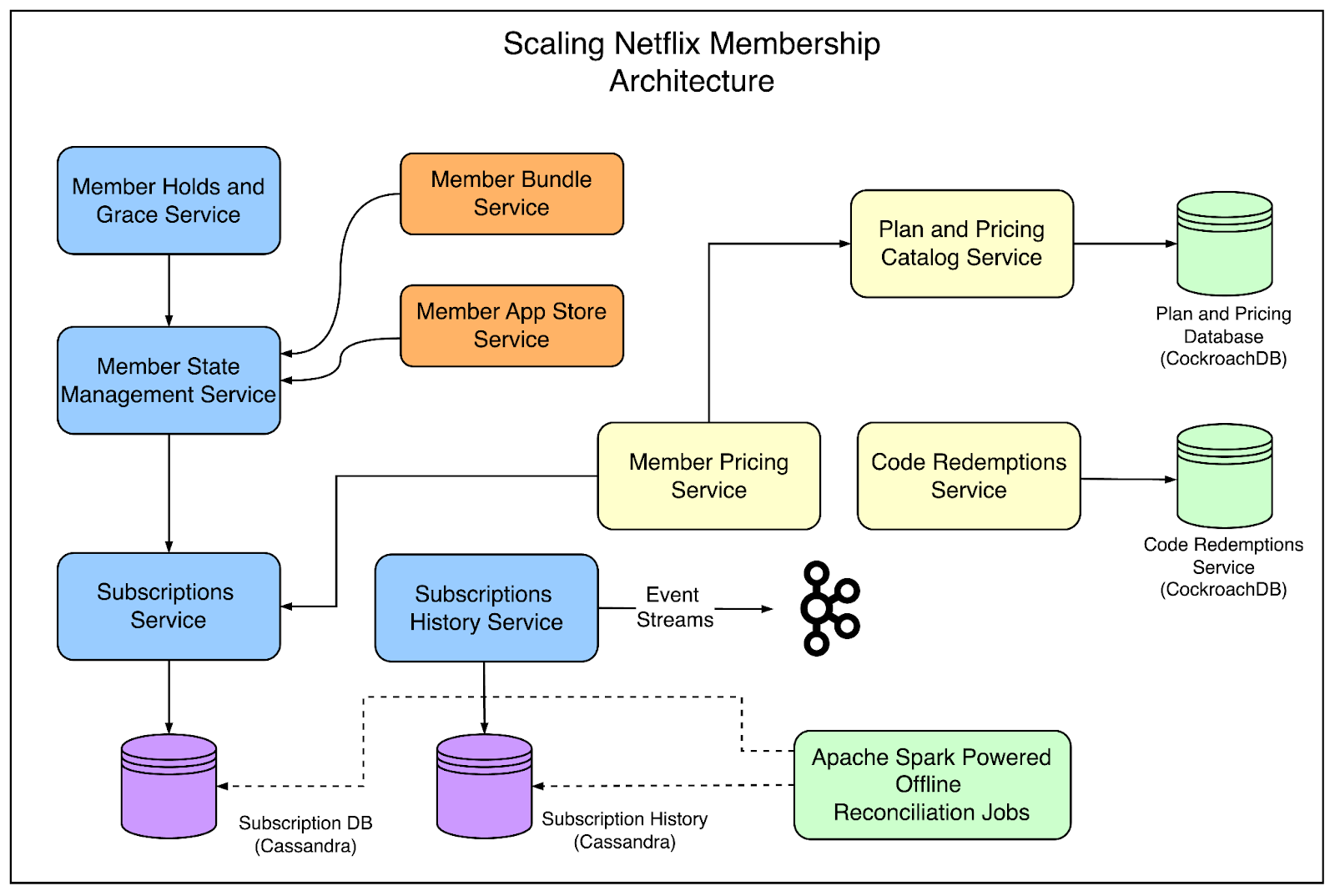

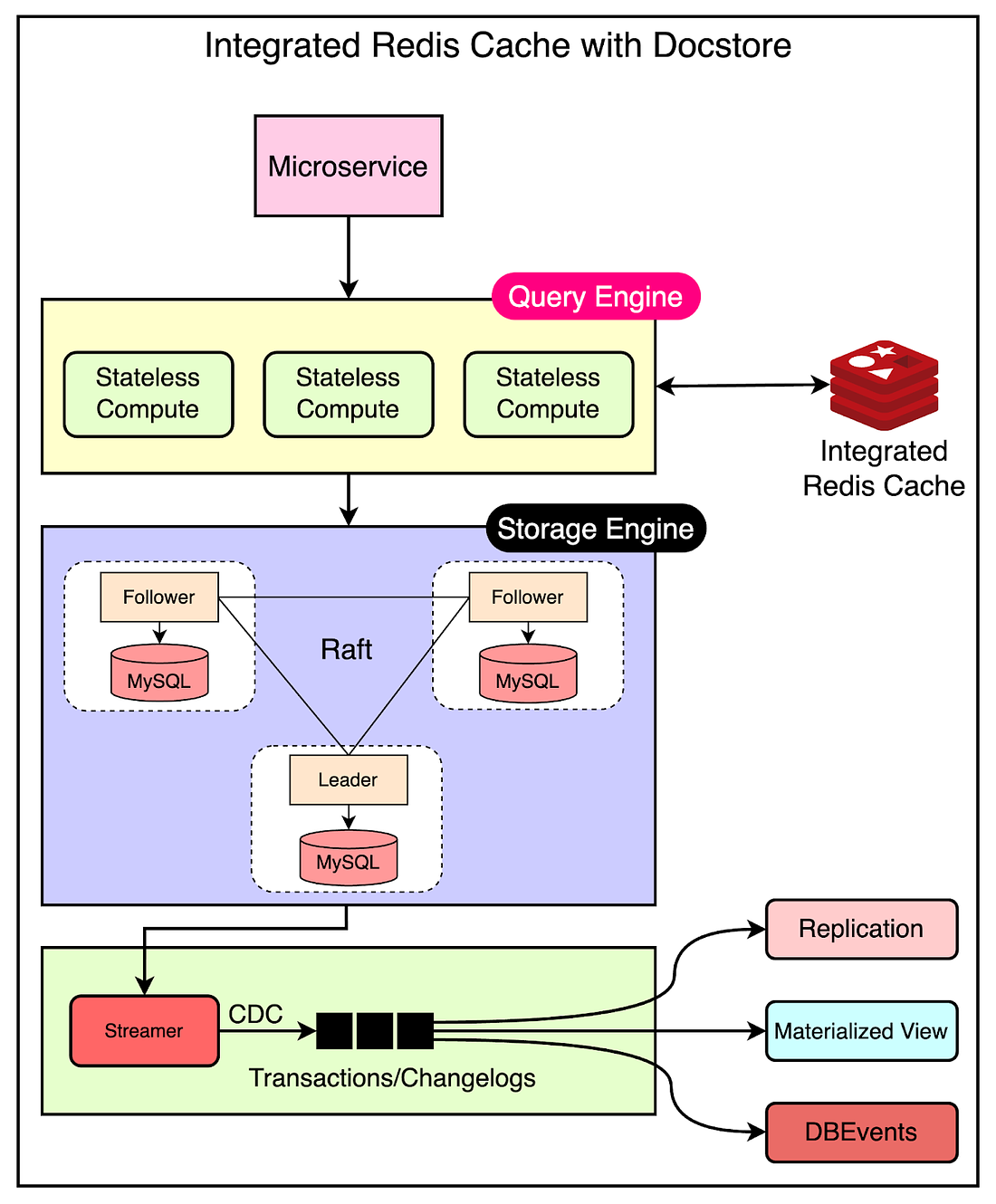

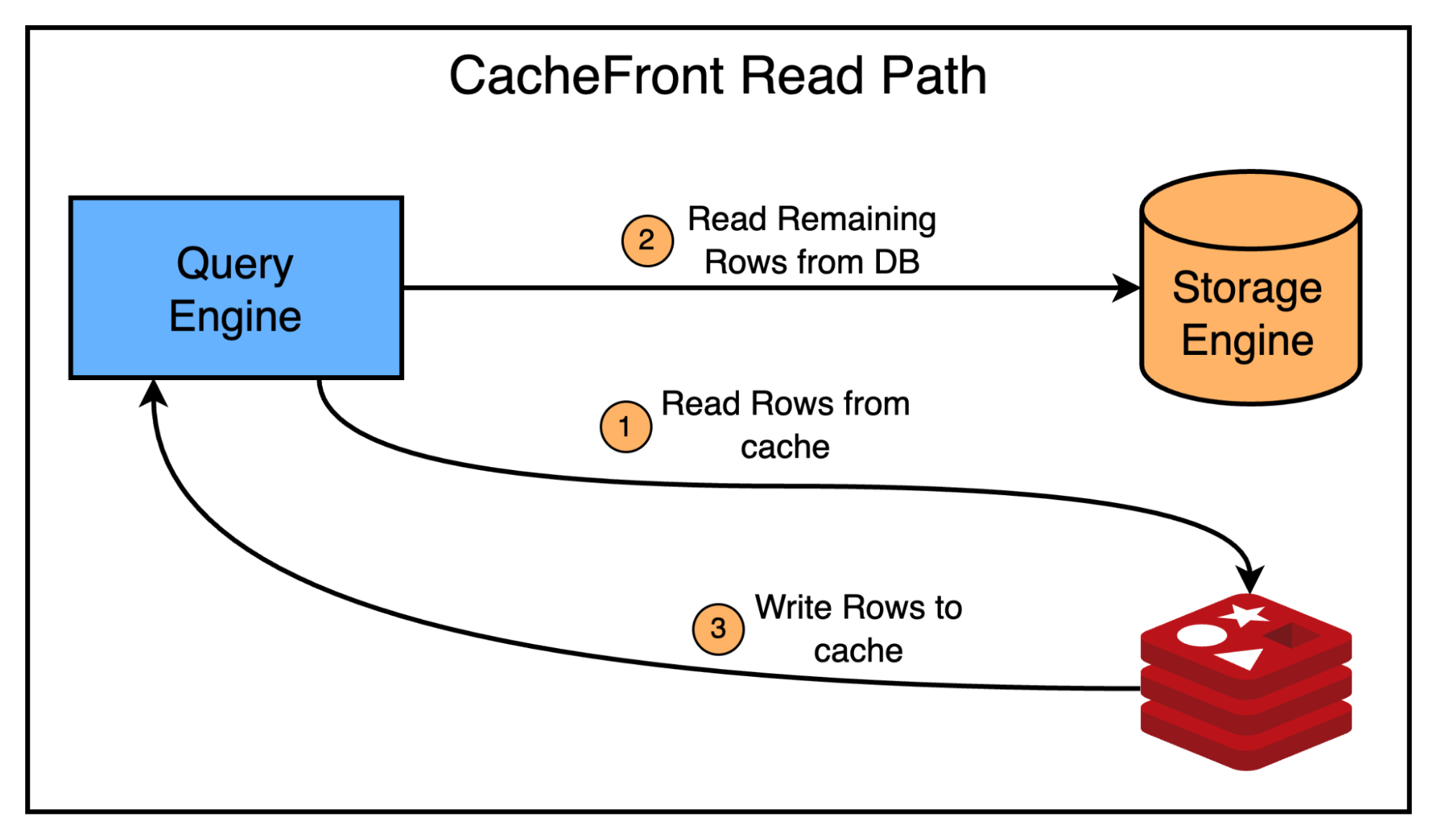

High-Level Architecture with CacheFront

To support these design goals, Uber created its integrated caching solution tied to Docstore. The below diagram shows the high-level architecture of Docstore along with CacheFront:

As you can see, Docstore’s query engine acts as the entry point for services and is responsible for serving reads and writes to clients. Therefore, it was the ideal place to integrate the caching layer, allowing the cache to be decoupled from the disk-based storage. The query engine implemented an interface to Redis to store cached data along with mechanisms to invalidate the cache entries.

Handling Cached Reads

CacheFront uses a cache-aside (or look-aside) strategy when it comes to reads. The below steps explain how it works:

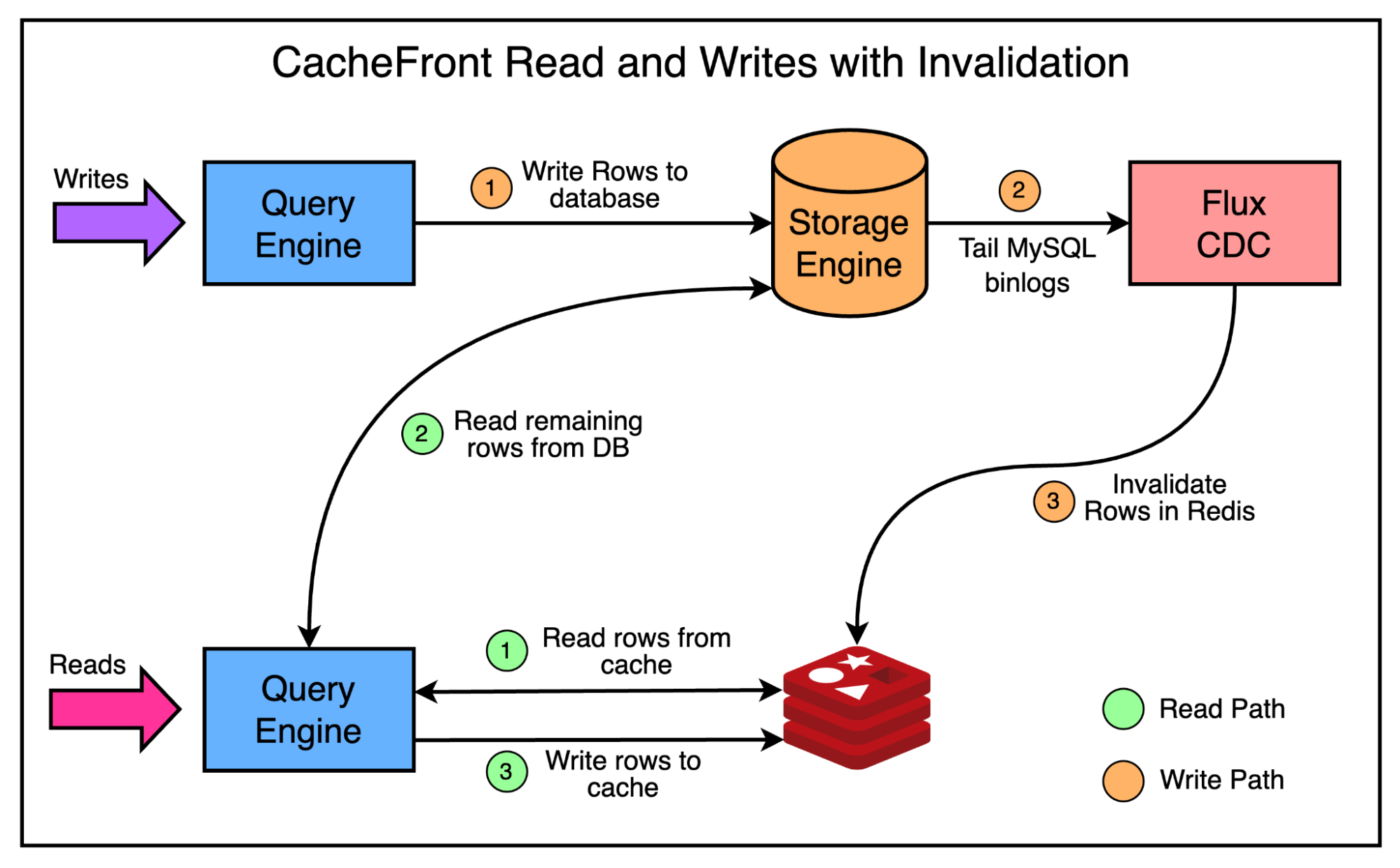
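As a rough illustration of the cache-aside idea, here is a minimal Python sketch. It assumes a plain dictionary standing in for Redis and a hypothetical `load_rows` callback standing in for the storage engine; the class and method names are illustrative and not taken from CacheFront.

```python
from typing import Callable, Dict, List


class CacheAsideReader:
    """Cache-aside reads: check the cache first, fall back to the database on a miss."""

    def __init__(self, cache: Dict[str, dict],
                 load_rows: Callable[[List[str]], Dict[str, dict]]):
        self.cache = cache          # stand-in for Redis (assumption)
        self.load_rows = load_rows  # stand-in for the storage engine lookup (assumption)
        self.hits = 0
        self.misses = 0

    def read(self, keys: List[str]) -> Dict[str, dict]:
        result: Dict[str, dict] = {}
        missing: List[str] = []
        for key in keys:
            row = self.cache.get(key)
            if row is not None:
                result[key] = row       # served from the cache
                self.hits += 1
            else:
                missing.append(key)     # must be fetched from the database
                self.misses += 1
        if missing:
            for key, row in self.load_rows(missing).items():
                self.cache[key] = row   # populate so the next read is a hit
                result[key] = row
        return result
```

A real deployment would likely populate the cache asynchronously and attach a TTL to each entry; both are left out here to keep the sketch short.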

Refer to the below diagram that explains the process more clearly:

Cache Invalidation with CDC

As you may have heard a million times by now, cache invalidation is one of the two hard things in Computer Science.

One of the simplest cache invalidation strategies is configuring a TTL (Time-to-Live) and letting the cache entries expire once they cross the TTL. While this can work for many cases, most users expect changes to be reflected faster than the TTL. However, lowering the default TTL to a very small value can sink the cache hit rate and reduce its effectiveness.

To make cache invalidation more timely, Uber leveraged Flux, Docstore’s change data capture and streaming service. Flux works by tailing the MySQL binlog events for each database cluster and publishing the events to a list of consumers. It powers replication, materialized views, data lake ingestions, and data consistency validations among various nodes.

For cache invalidation, a new consumer was created that subscribes to the data events and invalidates/upserts the new rows in Redis. The below diagram shows the read and write paths with cache invalidation.

There were some key advantages of this approach:

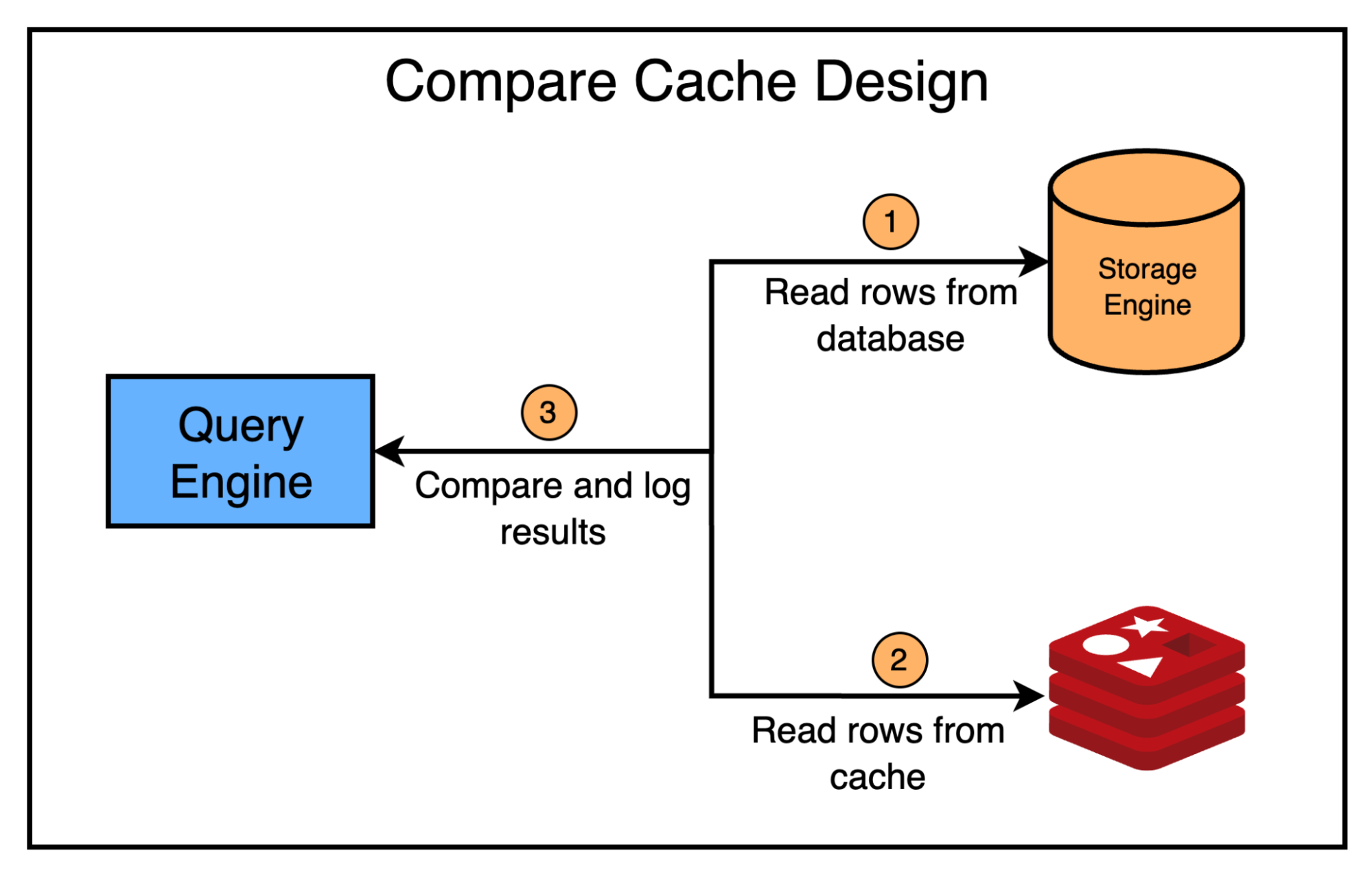
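The consumer side of this design can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ChangeEvent` is a hypothetical, simplified event shape (not the Flux or binlog format), and a dictionary stands in for Redis.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional


@dataclass
class ChangeEvent:
    """Hypothetical, simplified change event; not the real Flux schema."""
    key: str
    row: Optional[dict]  # None means the row was deleted in the database


def apply_change_events(cache: Dict[str, dict], events: Iterable[ChangeEvent]) -> int:
    """Apply database change events to the cache and return how many were processed."""
    applied = 0
    for event in events:
        if event.row is None:
            cache.pop(event.key, None)    # invalidate: drop the cached row if present
        else:
            cache[event.key] = event.row  # upsert: replace the cached row with the new one
        applied += 1
    return applied
```

Because the events come from the database's own change log, the cache follows committed writes without the calling services having to manage invalidation themselves.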

However, there were also a couple of issues that had to be ironed out.

1 - Deduplicating Cache Writes

Since both the read path and the write path can write to the cache at the same time, it was possible to overwrite the newest value with a stale row. To prevent this, they deduplicated writes based on the timestamp of the row set in MySQL. This timestamp served as a version number and was parsed from the encoded row value in Redis using the EVAL command.

2 - Stronger Consistency Requirement

Even though cache invalidation using CDC with Flux was faster than relying on TTL, it still provided only eventual consistency. However, some use cases required stronger consistency guarantees such as the read-your-own-writes guarantee. For such cases, they created a dedicated API in the query engine that allowed users to explicitly invalidate the cached rows right after the corresponding writes were completed. By doing so, they didn’t have to wait for the CDC process to complete for the cache to become consistent.

Scale and Resiliency with CacheFront

The basic functionality of CacheFront was in place once it supported reads and cache invalidation. However, Uber also wanted this solution to work at their scale, and they had critical resiliency needs around the entire platform. To achieve scale and resiliency with CacheFront, they utilized multiple strategies.

Compare cache

Measurements are the key to proving that a system works as expected, and the same was the case with CacheFront. They added a special mode to CacheFront that shadows read requests to the cache, allowing them to compare the data in the cache against the database to verify that both were in sync. Any mismatches, such as stale rows, are logged as metrics for further analysis.
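A compare mode along these lines could look like the following Python sketch. It assumes dictionaries standing in for both Redis and the database; `CompareStats` and `shadow_compare` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class CompareStats:
    """Counters a compare mode might emit as metrics (illustrative only)."""
    compared: int = 0
    mismatched_keys: List[str] = field(default_factory=list)

    @property
    def consistency(self) -> float:
        # Fraction of compared reads where cache and database agreed.
        if self.compared == 0:
            return 1.0
        return 1.0 - len(self.mismatched_keys) / self.compared


def shadow_compare(key: str, cache: Dict[str, dict], database: Dict[str, dict],
                   stats: CompareStats) -> Optional[dict]:
    """Serve the read from the database and compare it against the cached copy."""
    db_row = database.get(key)
    cached_row = cache.get(key)
    if cached_row is not None:      # only rows present in the cache can be compared
        stats.compared += 1
        if cached_row != db_row:
            stats.mismatched_keys.append(key)  # stale or divergent cached row
    return db_row
```

The caller always gets the database row, so running the comparison never changes what clients see; only the metrics are affected.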

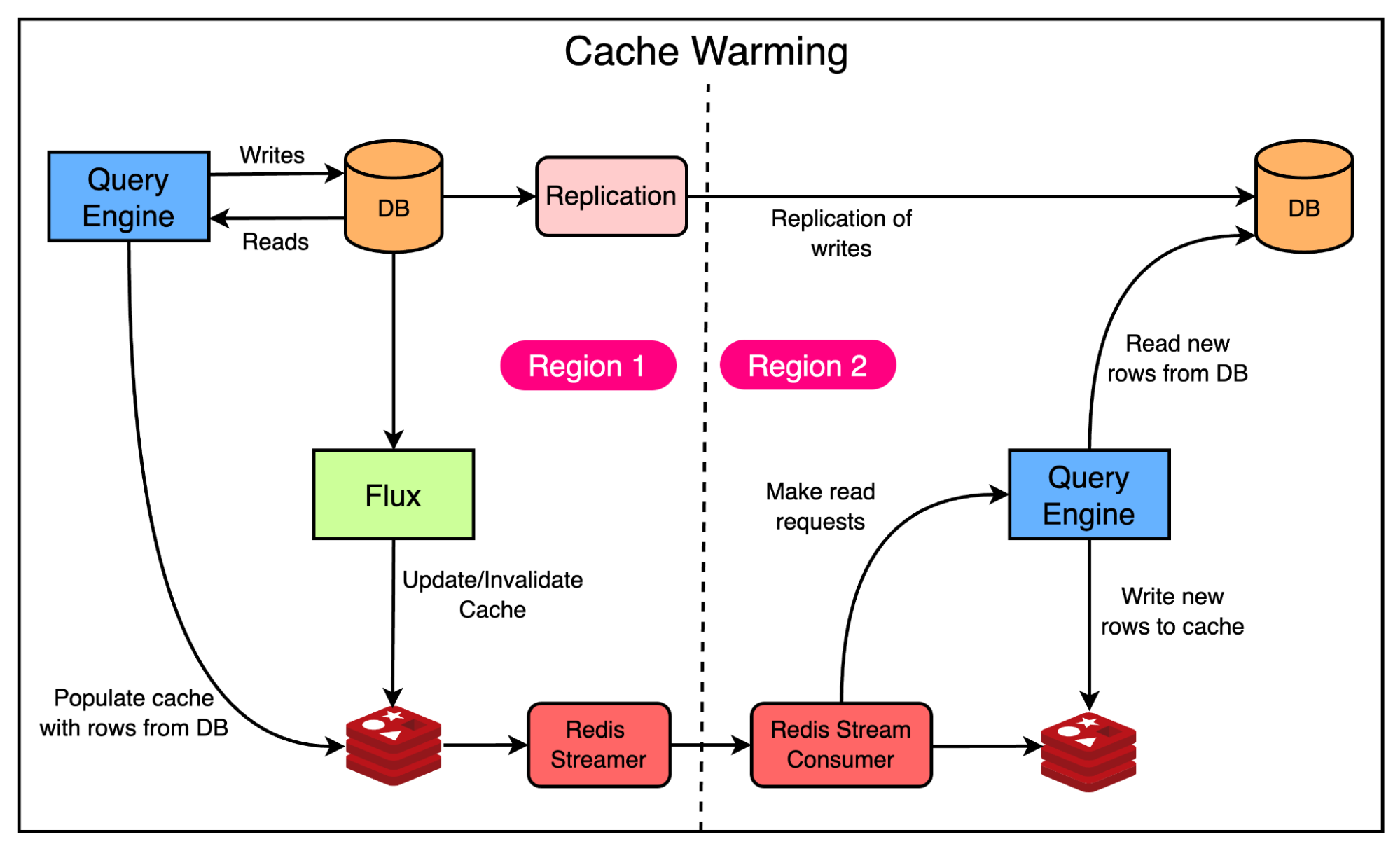

Based on the results from this system, Uber found that the cache was 99.99% consistent.

Cache Warming

In a multi-region environment, a cache is only effective if it is always warm. If that’s not the case, a region failover can result in cache misses and drastically increase the number of requests to the database.

Since a Docstore instance is spawned in two different geographical regions with an active-active deployment, a cold cache meant that you couldn’t scale down the storage engine to save costs, since there was a high chance of heavy database load in the case of failover.

To solve this problem, the Uber engineering team used cross-region Redis replication. However, Docstore also had its own cross-region replication. Since operating both replication setups simultaneously could result in inconsistent data between the cache and database, they enhanced the Redis cross-region replication by adding a new cache warming mode.

Here’s how the cache warming mode works:

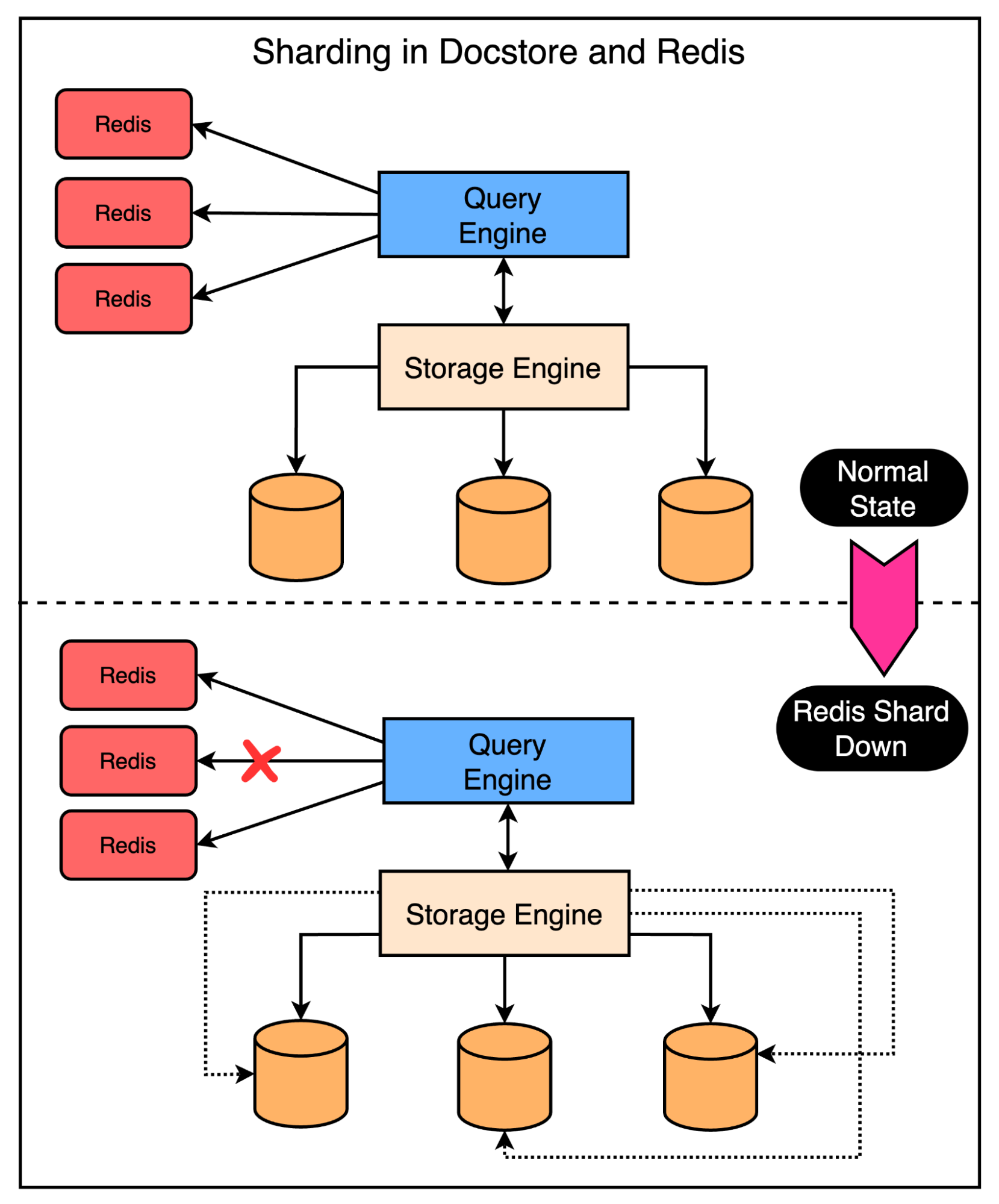

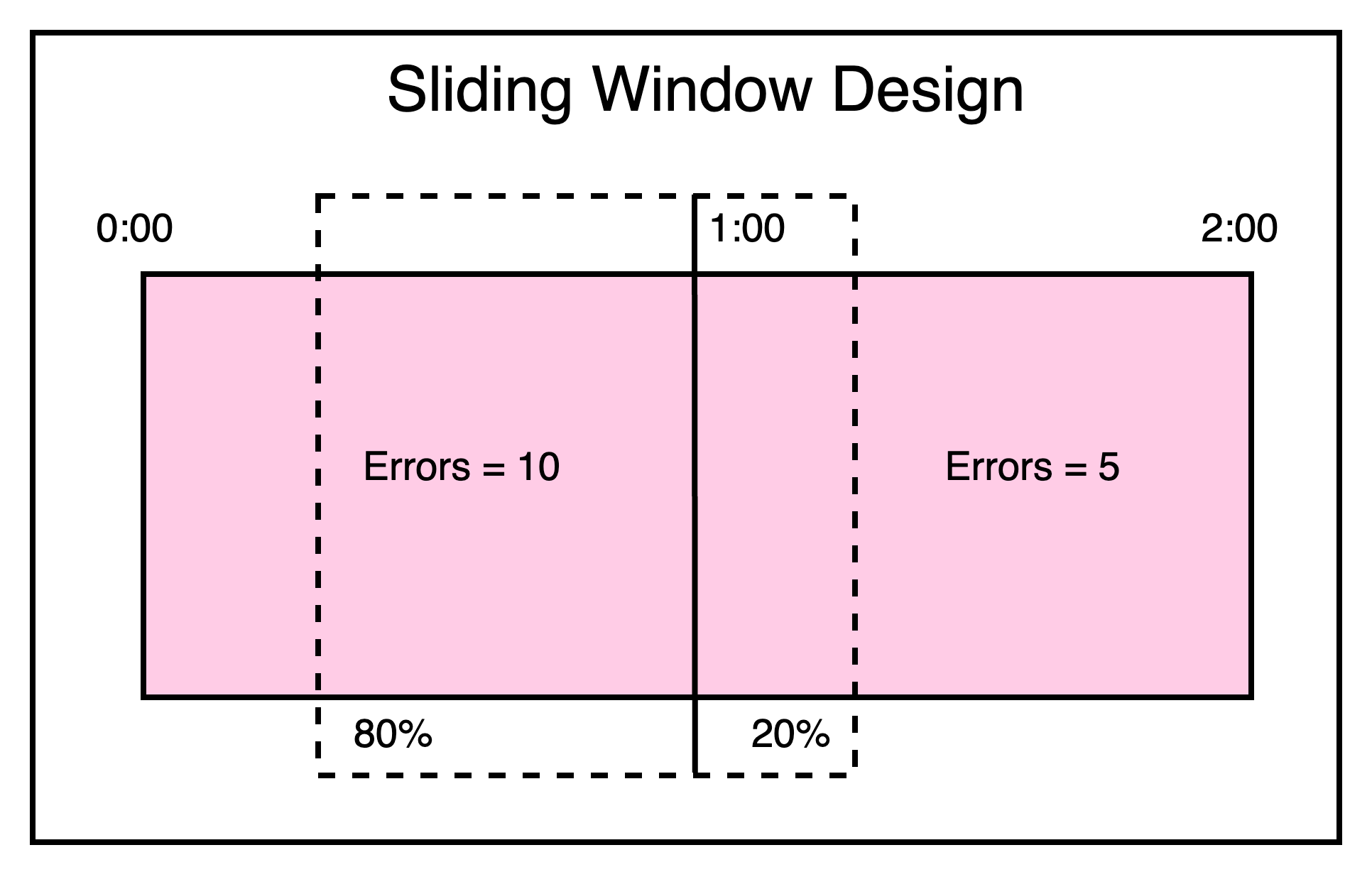
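One way to picture a key-only warming step is the Python sketch below. It assumes the remote region receives a stream of keys that were written to the cache in the other region and reloads each one from its own local database; the function name and the dictionary stand-ins are hypothetical.

```python
from typing import Dict, Iterable, List


def warm_remote_cache(replicated_keys: Iterable[str],
                      remote_cache: Dict[str, dict],
                      remote_db: Dict[str, dict]) -> List[str]:
    """Warm the remote cache from replicated keys, reading values from the local database."""
    warmed: List[str] = []
    for key in replicated_keys:
        if key in remote_cache:
            continue                   # already warm in this region
        row = remote_db.get(key)       # value comes from this region's own database
        if row is not None:
            remote_cache[key] = row
            warmed.append(key)
    return warmed
```

Only the key crosses regions in this sketch, so each cache is filled from the database it sits in front of.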

The below diagram shows this approach in detail:

Replicating keys instead of values makes sure that the data in the cache is consistent with the database in its respective region. Also, it ensures that the same set of cached rows is present in both regions, thereby keeping the cache warm in case of a failover.

Sharding

Some large customers of Docstore within Uber can generate a very large number of read-write requests. It was challenging to cache all of it within a single Redis cluster that’s limited by the maximum number of nodes. To mitigate this, they allowed a single Docstore instance to map to multiple Redis clusters. This helped avoid a massive surge of requests to the database in case multiple nodes in a single Redis cluster go down.

However, there was still a case where a single Redis cluster going down may create a hot shard on the database. To prevent this, they sharded the Redis cluster using a scheme that was different from the database sharding scheme. This makes sure that the load from a single Redis cluster going down is distributed between multiple database shards.

The below diagram explains this scenario in more detail.

Circuit Breaker

When a Redis node goes down, a get/set request to that node generates an unnecessary latency penalty. To avoid this penalty, Uber implemented a sliding window circuit breaker to short-circuit such requests. They count the number of errors on each node for a particular bucket of time and compute the number of errors within the sliding window’s width. See the below diagram to understand the sliding window approach:

The circuit breaker is configured to short-circuit a fraction of the requests to a node based on the error count. Once the threshold is hit, the circuit breaker is tripped and no more requests can be made to the node until the sliding window passes.

Results and Conclusion

Uber’s project of implementing an integrated Redis cache with Docstore was quite successful. They created a transparent caching solution that was scalable and managed to improve latency, reduce load, and bring down costs. Here are some stats that show the results:
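Looking back at the Circuit Breaker section, the bucketed sliding-window idea can be sketched in Python as follows. This is a simplified illustration, not Uber's implementation: the class name, parameter defaults, and the all-or-nothing trip rule are assumptions (the article describes short-circuiting a fraction of requests), and timestamps are passed in explicitly so the behaviour is easy to follow.

```python
from collections import deque


class SlidingWindowBreaker:
    """Per-node error counter over a sliding window made of fixed-size time buckets."""

    def __init__(self, window_seconds: int = 10, bucket_seconds: int = 1,
                 error_threshold: int = 5):
        self.window_seconds = window_seconds
        self.bucket_seconds = bucket_seconds
        self.error_threshold = error_threshold
        self.buckets = deque()  # entries are [bucket_start, error_count], oldest first

    def _evict(self, now: int) -> None:
        # Drop buckets whose start time has fallen out of the window.
        while self.buckets and self.buckets[0][0] <= now - self.window_seconds:
            self.buckets.popleft()

    def record_error(self, now: int) -> None:
        self._evict(now)
        start = now - (now % self.bucket_seconds)  # align to the bucket boundary
        if self.buckets and self.buckets[-1][0] == start:
            self.buckets[-1][1] += 1
        else:
            self.buckets.append([start, 1])

    def errors_in_window(self, now: int) -> int:
        self._evict(now)
        return sum(count for _, count in self.buckets)

    def allow_request(self, now: int) -> bool:
        # Tripped while the windowed error count is at or above the threshold.
        return self.errors_in_window(now) < self.error_threshold
```

Once old buckets slide out of the window, the error count drops and requests to the node are allowed again without any explicit reset.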


by "ByteByteGo" <bytebytego@substack.com> - 11:35 - 26 Mar 2024