Shopify Tech Stack


Note: This article is written in collaboration with the Shopify engineering team. Special thanks to the Shopify engineering team for sharing details with us about their tech stack and also for reviewing the final article before publication. All credit for the technical details and diagrams shared in this article goes to the Shopify Engineering Team.

Shopify handles scale that would break most systems. On a single day (Black Friday 2024), the platform processed 173 billion requests, peaked at 284 million requests per minute, and pushed 12 terabytes of traffic every minute through its edge. These numbers aren’t anomalies. They’re sustained targets that Shopify strives to meet.

Behind this scale is a stack that looks deceptively simple from the outside: Ruby on Rails, React, MySQL, and Kafka. But that simplicity hides sharp architectural decisions, years of refactoring, and thousands of deliberate trade-offs.

In this article, we map the tech stack powering Shopify, from the modular monolith that still runs the business, to the pods that isolate failure domains, to the deployment pipelines that ship hundreds of changes a day. It covers the tools, programming languages, and patterns Shopify uses to stay fast, resilient, and developer-friendly at incredible scale.

Shopify Backend Architecture

Shopify’s backend runs on Ruby on Rails. The original codebase, written in the early 2000s, still forms the heart of the system. Rails offers fast development, convention over configuration, and strong patterns for database-backed web applications. Shopify also uses Rust as its systems programming language.

While most startups eventually rewrite their early frameworks, Shopify doubled down, working to make Ruby and Rails 100-year tools that continue to merit a place in its toolchain. Instead of moving on to another framework, Shopify pushed it further. They invested in:

The result is one of the largest and longest-running Rails applications in production.

Modularization Strategy

Shopify runs a modular monolith. That phrase gets thrown around a lot, but in Shopify’s case, it means this: the entire codebase lives in one repository, runs in a single process, but is split into independently deployable components with strict boundaries.

Each component defines a public interface, with contracts enforced via Sorbet. These interfaces aren’t optional. They’re a way to prevent tight coupling, allow safe refactoring, and make the system feel smaller than it is. Developers don’t need to understand millions of lines of code. They need to know the contracts their component depends on and trust those contracts will hold.

To manage complexity, components are organized into logical layers:
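As an illustration of how a layering rule of this kind can be checked mechanically, here is a minimal sketch in Python. The layer names (`platform`, `core`, `features`), the component names, and the rule that a component may only depend on a strictly lower layer are all hypothetical assumptions made for the example. They are not Shopify's actual layers or tooling.

```python
# Hypothetical layers, ordered from lowest (0) to highest.
LAYERS = {"platform": 0, "core": 1, "features": 2}

# Hypothetical components and the layer each one belongs to.
COMPONENT_LAYER = {
    "identity": "platform",
    "orders": "core",
    "inventory": "core",
    "checkout": "features",
}

# Declared dependencies: component -> components it is allowed to call.
DEPENDENCIES = {
    "identity": [],
    "orders": ["identity"],
    "inventory": ["identity"],
    "checkout": ["orders", "inventory"],
}


def layering_violations(component_layer, dependencies):
    """Return (component, dependency) pairs that do not point strictly downward.

    Assumed rule: a component may only depend on components in a lower layer.
    If every edge goes strictly downward, no dependency cycle can form.
    """
    violations = []
    for component, deps in dependencies.items():
        own_level = LAYERS[component_layer[component]]
        for dep in deps:
            if LAYERS[component_layer[dep]] >= own_level:
                violations.append((component, dep))
    return violations


if __name__ == "__main__":
    print(layering_violations(COMPONENT_LAYER, DEPENDENCIES))
```

A check like this could run in CI alongside other static analysis, failing the build when a new dependency points upward or sideways.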

This layering prevents cyclic dependencies and encourages clean flow across domains. To support this at scale, Shopify maintains a comprehensive system of static analysis tools, exception monitoring dashboards, and differentiated application/business metrics to track component health across the company.

This modular structure doesn’t make development effortless. It introduces boundaries, which can feel like friction. However, it keeps teams aligned, reduces accidental coupling, and lets Shopify evolve without losing control of its core.

Frontend Technologies

Shopify’s frontend has gone through multiple architectural shifts, each one reflecting changes in the broader web ecosystem and lessons learned under scale.

The early days used standard patterns: server-rendered HTML templates, enhanced with jQuery and prototype.js. As frontend complexity grew, Shopify built Batman.js, its own single-page application (SPA) framework. It offered reactivity and routing, but like most in-house frameworks, it came with long-term maintenance overhead.

Eventually, Shopify shifted back to simpler patterns: statically rendered HTML and vanilla JavaScript. However, that also had limits. Once the broader ecosystem matured, particularly around React and TypeScript, the team made a clean move forward.

Today, the Shopify Admin interface runs on React and React Router (by Remix), is written in TypeScript, and is driven entirely by GraphQL. It follows a strict separation: no business logic in the client, no shared state across views.

The Admin is one of Shopify’s biggest apps, and it behaves as a stateless GraphQL client. Each page fetches exactly the data it needs, when it needs it. This discipline enforces consistency across platforms. Mobile apps and web admin screens speak the same language (GraphQL), reducing duplication and misalignment between surfaces.
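To make the "each page fetches exactly the data it needs" idea concrete, here is a minimal sketch of how a stateless client might build a GraphQL request for a single page. It is written in Python for brevity rather than in the TypeScript the Admin uses. The function name `page_request`, the `order` root field, and the field names are illustrative assumptions, not Shopify's actual client code.

```python
import json


def page_request(root_field, fields, resource_id):
    """Build a GraphQL request body that selects only the listed fields.

    The function keeps no state between calls: everything the server needs
    is in the returned payload, and nothing is cached or shared across pages.
    """
    selection = " ".join(fields)
    query = f"query($id: ID!) {{ {root_field}(id: $id) {{ {selection} }} }}"
    return json.dumps({"query": query, "variables": {"id": resource_id}})


if __name__ == "__main__":
    # A hypothetical order summary page that only needs two fields.
    print(page_request("order", ["id", "name"], "gid://shopify/Order/1"))
```

Because the selection set is declared per page, a web screen and a mobile screen showing the same resource can each ask for only their own fields from the same schema.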
Mobile Development with React Native

Mobile development at Shopify follows a similar philosophy: reuse where possible, specialize where needed. Every major app now runs on React Native. The goal of using a single framework is to share code, reduce drift between platforms, and improve developer velocity across Android and iOS.

Shared libraries power common concerns like authentication, error tracking, and performance monitoring. When apps need to drop into native for camera access, payment hardware, or long-running background tasks, they do so through well-defined native modules.

Shopify teams also contribute directly to React Native ecosystem projects like Mobile Bridge (which lets web content trigger native UI elements), Skia (for fast 2D rendering), WebGPU (which exposes modern GPU APIs and enables general-purpose GPU computation for AI/ML), and Reanimated (for performant animations). In some cases, Shopify engineers co-captain React Native releases.

Programming Languages and Tooling

Shopify’s language choices reflect its commitment to developer productivity and operational resilience.

Developer Tooling & Open Source Contributions

A large monolith doesn’t stay healthy without support. Shopify has developed an ecosystem of internal and open-source tools to enforce structure, automate safety checks, and reduce operational toil.

A much more exhaustive list of open-source software supported by Shopify is also present here.

Databases, Caching, and Queuing

There are two main categories here:

Primary Database: MySQL

Shopify uses MySQL as its primary relational database, and has done so since the platform's early days. However, as merchant volume and transactional throughput grew, the limits of a single instance became unavoidable.

In 2014, Shopify introduced sharding. Each shard holds a partition of the overall data, and merchants are distributed across those shards based on deterministic rules. This works well in commerce, where tenant isolation is natural. One merchant’s orders don’t need to query another merchant’s inventory.

Over time, Shopify replaced the flat shard model with Pods. A pod is a fully isolated slice of Shopify, containing its own MySQL instance, Redis node, and Memcached cluster. Each pod can run independently, and each one can be deployed in a separate geographic region.

This model solves two problems:

By pushing isolation to the infrastructure level, Shopify contains failure domains and simplifies operational recovery.

Caching and Queues

Shopify relies on two core systems for caching and asynchronous work: Memcached and Redis.
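The pod model described above, where each shop maps deterministically to one pod and every pod owns its own MySQL, Redis, and Memcached endpoints, can be sketched as follows. This is a minimal illustration under stated assumptions: the hash-modulo rule, the `Pod` class, and the host names are hypothetical, and the article does not say which deterministic rule Shopify actually uses.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    """One isolated slice: its own database, queue store, and cache."""
    pod_id: int
    mysql_host: str
    redis_host: str
    memcached_host: str


def make_pods(count):
    # Hypothetical host names, one set per pod, nothing shared between pods.
    return [
        Pod(i, f"mysql-{i}.internal", f"redis-{i}.internal", f"memcached-{i}.internal")
        for i in range(count)
    ]


def pod_for_shop(shop_id, pods):
    """Map a shop to a pod deterministically (assumed rule: hash modulo pod count)."""
    digest = hashlib.sha256(str(shop_id).encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(pods)
    return pods[index]


PODS = make_pods(4)

if __name__ == "__main__":
    pod = pod_for_shop(42, PODS)
    print(pod.pod_id, pod.mysql_host, pod.redis_host, pod.memcached_host)
```

A plain hash-modulo rule remaps many shops whenever the pod count changes, so a real system would more plausibly keep an explicit shop-to-pod lookup table. The point of the sketch is only that every request for a shop resolves to exactly one pod and that pod's own stores.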

But Redis wasn’t always scoped cleanly. At one point, all database shards shared a single Redis instance. A failure in that central Redis brought down the entire platform. Internally, the incident is still known as “Redismageddon.”

The lesson Shopify took from this incident was clear: never centralize a system that’s supposed to isolate work. Afterward, Redis was restructured to match the pod model, giving each pod its own Redis node. Since then, outages have been localized, and the platform has avoided global failures tied to shared infrastructure.

Messaging and Communication Between Services

There are two main categories here:

Eventing & Streaming

Shopify uses Kafka as the backbone for messaging and event distribution. It forms the spine of the platform’s internal communication layer, decoupling producers from consumers, buffering high-volume traffic, and supporting real-time pipelines that feed search, analytics, and business workflows.

At peak, Kafka at Shopify has handled 66 million messages per second, a throughput level that few systems encounter outside large-scale financial or streaming platforms.

This messaging layer serves several use cases:

By relying on Kafka, Shopify avoids tight coupling between services. Producers don't wait for consumers. Consumers process at their own pace. And when something goes wrong, like a downstream service crashing, the event stream holds the data until the system recovers. That’s a practical way to build resilience into a fast-moving platform.

API Interfaces

For synchronous interactions, Shopify services communicate over HTTP, using a mix of REST and GraphQL.

However, as the number of services grows, this model starts to strain. Synchronous calls introduce tight coupling and hidden failure paths, especially when one service transitively depends on five others. To address this, Shopify is actively exploring RPC standardization and service mesh architectures. The goal is to build a communication layer that’s:

ML Infrastructure at Shopify

The ML infrastructure at Shopify can be divided into two main parts:

Real-Time Search with Embeddings

Shopify’s storefront search doesn’t rely on traditional keyword matching. It uses semantic search powered by text and image embeddings: vector representations of product metadata and visual features that enable more relevant, contextual search results.
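The core idea of embedding-based search can be sketched in a few lines: represent the query and each product as vectors, then rank products by how closely their vectors point in the same direction as the query. The example below is a toy illustration only. The three-dimensional vectors, the product names, and the choice of cosine similarity with a brute-force scan are assumptions for the sketch and say nothing about Shopify's actual models or index.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vector, index, top_k=2):
    """Return the ids of the top_k products most similar to the query vector."""
    scored = [(cosine_similarity(query_vector, vec), pid) for pid, vec in index.items()]
    scored.sort(reverse=True)
    return [pid for _, pid in scored[:top_k]]


# Toy embeddings; real ones have hundreds of dimensions and come from a model.
PRODUCT_INDEX = {
    "red-sneaker": [0.9, 0.1, 0.0],
    "blue-sneaker": [0.8, 0.2, 0.1],
    "coffee-mug": [0.0, 0.1, 0.9],
}

if __name__ == "__main__":
    print(search([1.0, 0.1, 0.0], PRODUCT_INDEX))
```

With these toy vectors, a query close to the "sneaker" direction ranks both sneakers above the mug even though no keyword is compared.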

This system runs at production scale. Shopify processes around 2,500 embeddings per second, which works out to roughly 216 million per day (2,500 × 86,400 seconds). These embeddings cover multiple modalities, including:

Each embedding is generated in near real time and immediately published to downstream consumers that use them to update search indices and personalize results.

The embedding system also performs intelligent deduplication. For example, visually identical images are grouped to avoid unnecessary inference. This optimization alone reduced image embedding memory usage from 104 GB to under 40 GB, freeing up GPU resources and cutting costs across the pipeline.

Data Pipeline Infrastructure

Under the hood, Shopify runs its ML pipelines on Apache Beam, executed through Google Cloud Dataflow. This setup supports:

Inference jobs are structured to process embeddings as quickly and cheaply as possible. The pipeline deliberately runs fewer concurrent threads (64, down from 192) to prevent memory contention, ensuring that inference performance remains predictable under load.

Shopify trades off between latency, throughput, and infrastructure cost. The current configuration strikes that balance carefully:

For offline analytics, Shopify stores embeddings in BigQuery, allowing large-scale querying, trend analysis, and model performance evaluation without affecting live systems.

DevOps, CI/CD & Deployment

This area can be divided into the following parts:

Kubernetes-Based Deployment

Shopify deploys infrastructure using Kubernetes, running on Google Kubernetes Engine (GKE). Each Shopify pod, an isolated unit containing its own MySQL, Redis, and Memcached stack, is defined declaratively through Kubernetes YAML, making it easy to replicate, scale, and isolate across regions.

The runtime environment uses Docker containers for packaging applications and OpenResty, built on Nginx with embedded Lua scripting, for custom load balancing at the edge. These Lua scripts give Shopify fine-grained control over HTTP behavior, enabling smart routing decisions and performance optimizations closer to the user.

Before Kubernetes, deployment was managed through Chef, a configuration management tool better suited for static environments. As the platform evolved, so did the need for a more dynamic, container-based architecture. The move to Kubernetes replaced slow, manual provisioning with fast, declarative infrastructure-as-code.

CI/CD Process

Shopify’s monolith contains over 400,000 unit tests, many of which exercise complex ORM behaviors. Running all of them serially would take hours, maybe days. To stay fast, Shopify relies on Buildkite as its CI orchestrator. Buildkite coordinates test runs across hundreds of parallel workers, slashing feedback time and keeping builds within a 15–20 minute window.

Once the build passes, Shopify's internal deployment tools take over and offer visibility into who's deploying what, and where.

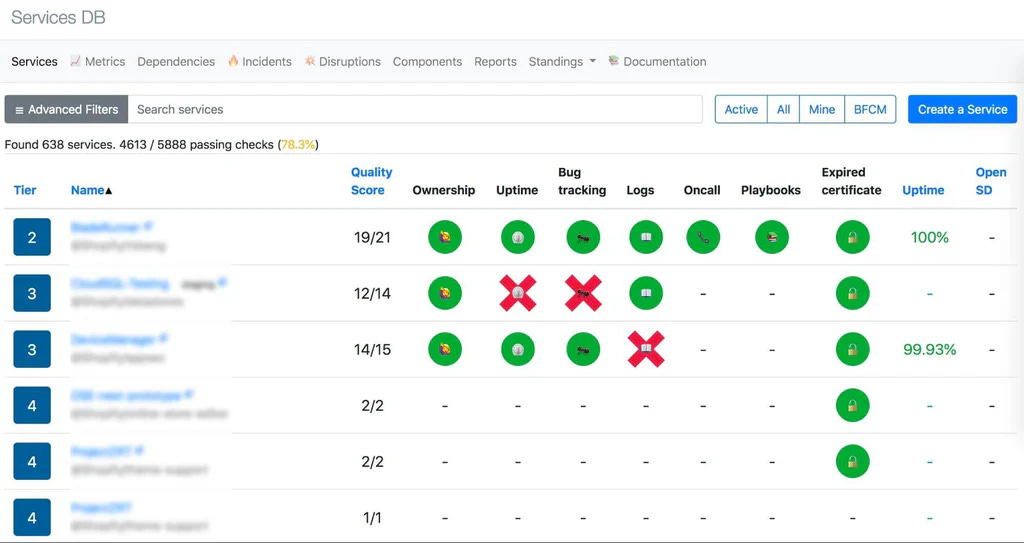
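The parallel test runs described in the CI/CD process above depend on splitting a large suite into balanced chunks, so that no single worker becomes the long pole. Here is a minimal sketch of one common approach, a greedy longest-first assignment based on recorded test durations. The function name, the test file names, and the timings are hypothetical, and the article does not describe how Shopify or Buildkite actually partitions tests.

```python
def partition_tests(durations, worker_count):
    """Assign tests to workers, longest test first, always to the least-loaded worker.

    durations maps a test name to its last recorded runtime in seconds.
    Returns a list with one {"total": seconds, "tests": [...]} dict per worker.
    """
    workers = [{"total": 0.0, "tests": []} for _ in range(worker_count)]
    # Sort by descending duration; break ties by name so the result is stable.
    ordered = sorted(durations.items(), key=lambda item: (-item[1], item[0]))
    for name, seconds in ordered:
        target = min(workers, key=lambda w: w["total"])
        target["tests"].append(name)
        target["total"] += seconds
    return workers


# Hypothetical timings for five test files.
SAMPLE_DURATIONS = {
    "orders_test.rb": 5,
    "checkout_test.rb": 4,
    "inventory_test.rb": 3,
    "shipping_test.rb": 3,
    "tax_test.rb": 1,
}

if __name__ == "__main__":
    for i, worker in enumerate(partition_tests(SAMPLE_DURATIONS, 2)):
        print(i, worker["total"], worker["tests"])
```

The wall-clock time of the whole run is bounded by the slowest worker, which is why balancing by duration matters more than balancing by test count.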

Deployments don’t go straight to production. Instead, ShipIt, Shopify’s deployment tool, uses a Merge Queue to control rollout. At peak hours, only 5–10 commits are merged and deployed at a time. This throttling makes issues easier to trace and minimizes the blast radius when something breaks.

Notably, Shopify doesn’t rely on staging environments or canary deploys. Instead, they use feature flags to control exposure and fast rollback mechanisms to undo bad changes quickly. If a feature misbehaves, it can be turned off without redeploying the code.

Observability, Reliability, and Security

This area can be divided into multiple parts, such as:

Observability Infrastructure

Shopify takes a structured, service-aware approach to observability. At the center of this is ServicesDB, an internal service registry that tracks:

ServicesDB catalogs metadata and enforces good practices. When a service falls out of compliance (for example, due to outdated gems or missing logs), it automatically opens GitHub issues and tags the responsible team. This creates continuous pressure to maintain service quality across the board.
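The compliance loop described above can be sketched as a small function that scans registry records and produces one issue per non-compliant service, assigned to the owning team. This is a rough illustration only. The `Service` fields, the two checks, and the issue format are hypothetical, and no real GitHub API call is made, since the article does not describe ServicesDB's internals.

```python
from dataclasses import dataclass


@dataclass
class Service:
    """A hypothetical registry record for one service."""
    name: str
    owner_team: str
    has_logging: bool
    outdated_gems: list


def compliance_issues(services):
    """Return one issue dict per service that fails at least one check."""
    issues = []
    for service in services:
        problems = []
        if not service.has_logging:
            problems.append("missing logs")
        if service.outdated_gems:
            problems.append("outdated gems: " + ", ".join(sorted(service.outdated_gems)))
        if problems:
            issues.append({
                "title": f"[{service.name}] out of compliance",
                "assignee": service.owner_team,
                "body": "; ".join(problems),
            })
    return issues


# Hypothetical registry contents.
REGISTRY = [
    Service("billing", "payments-team", True, []),
    Service("search-indexer", "search-team", False, ["rack", "nokogiri"]),
]

if __name__ == "__main__":
    for issue in compliance_issues(REGISTRY):
        print(issue)
```

In a real setup, the returned issues would be handed to an issue tracker client, and the checks would be driven by the registry's own metadata rather than hard-coded fields.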

Incident response isn’t siloed into a single ops team. Shopify uses a lateral escalation model: all engineers share responsibility for uptime, and escalation happens based on domain expertise, not job title. This encourages shared ownership and reduces handoff delays during critical outages. For fault tolerance, Shopify leans on two key tools:

Supply Chain & Security

Security isn’t an afterthought in Shopify’s stack, but part of the ecosystem investment. Since the company relies heavily on Ruby, it also works actively to secure the Ruby community at large. Key efforts include:

The goal isn’t just to secure Shopify’s stack, but to strengthen the foundation shared by thousands of developers who depend on the same tools.

Shopify’s Scale

Shopify's architecture isn’t theoretical. It’s built to withstand real-world pressure: Black Friday flash sales, celebrity product drops, and continuous developer activity across a global platform. These numbers put that scale in context.


by "ByteByteGo" <bytebytego@substack.com> - 11:40 - 11 Jun 2025