
How Facebook Syncs Time Across Millions of Servers


Disclaimer: The details in this post have been derived from the Facebook/Meta Engineering Blog. All credit for the technical details goes to the Facebook engineering team. The links to the original articles are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

A clock showing the wrong time is worse than a faulty clock. This is the challenge Facebook had to deal with while operating millions of servers connected to the Internet and each other. All of these devices have onboard clocks that are expected to be accurate. However, many onboard clocks contain inaccurate internal oscillators, which cause seconds of inaccuracy per day and need to be periodically corrected.

Think of these internal oscillators as the “heartbeat” of the clock. Just as an irregular heartbeat can affect a person’s health, an inaccurate oscillator can cause the clock to gain or lose time.

Incorrect time can lead to issues with varying degrees of impact, from missing a simple reminder to failing a spacecraft launch. As Facebook’s infrastructure has grown, time precision has become extremely important. For example, knowing the accurate time difference between two random servers in a data center is critical to preserving the order of transactions across these servers.

In this post, we’ll learn how Facebook achieved time precision across its millions of servers with NTP and later with PTP.

Network Time Protocol

Facebook started with Network Time Protocol (NTP) to keep the devices in sync. NTP is a way for computers to synchronize their clocks over a network. It helps ensure that all devices on the network have the same, accurate time. Having synchronized clocks is critical for many tasks, such as:

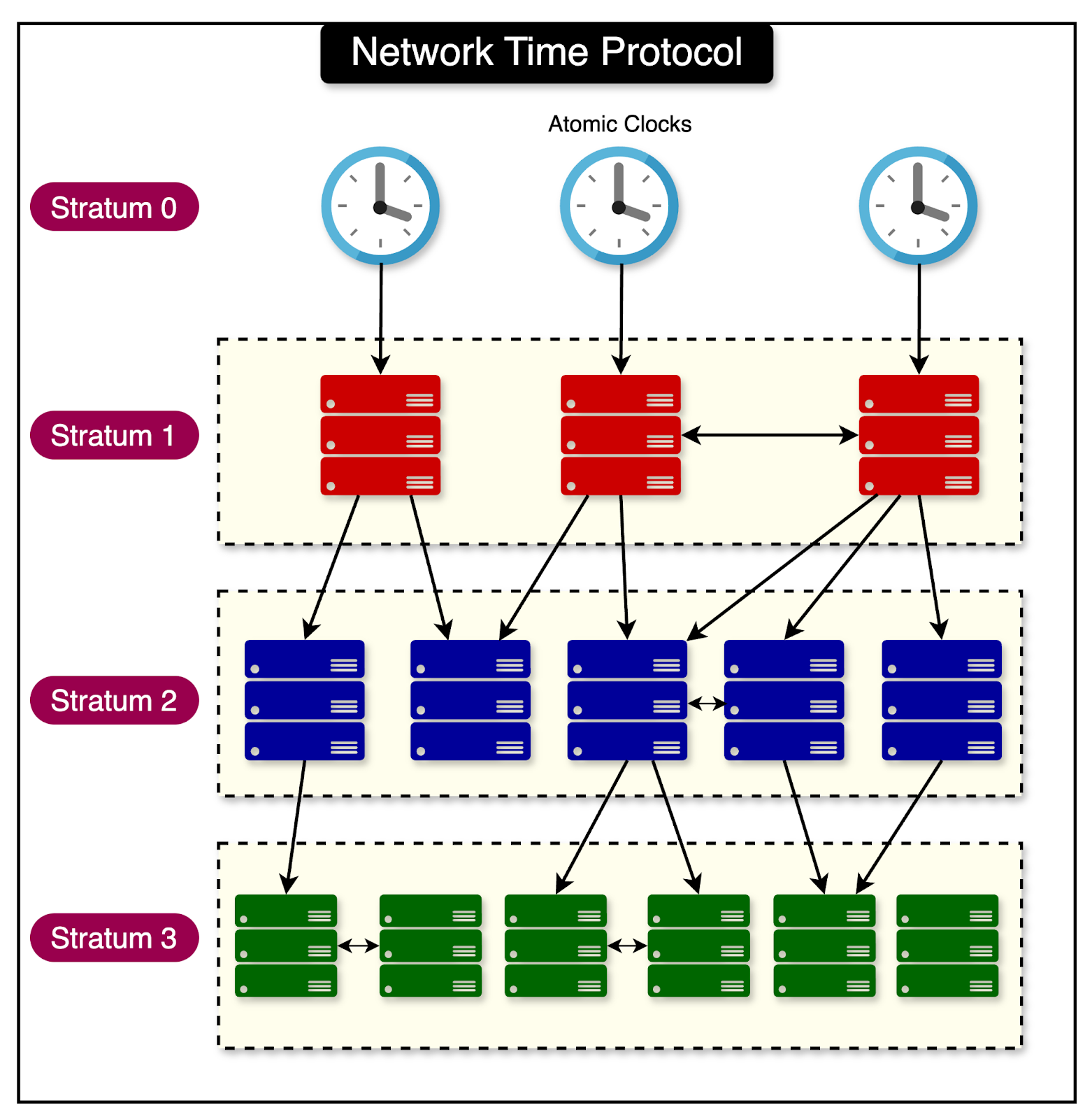

NTP uses a hierarchical system of time servers where the most accurate servers are at the top. There is a 3-step process to how NTP works:

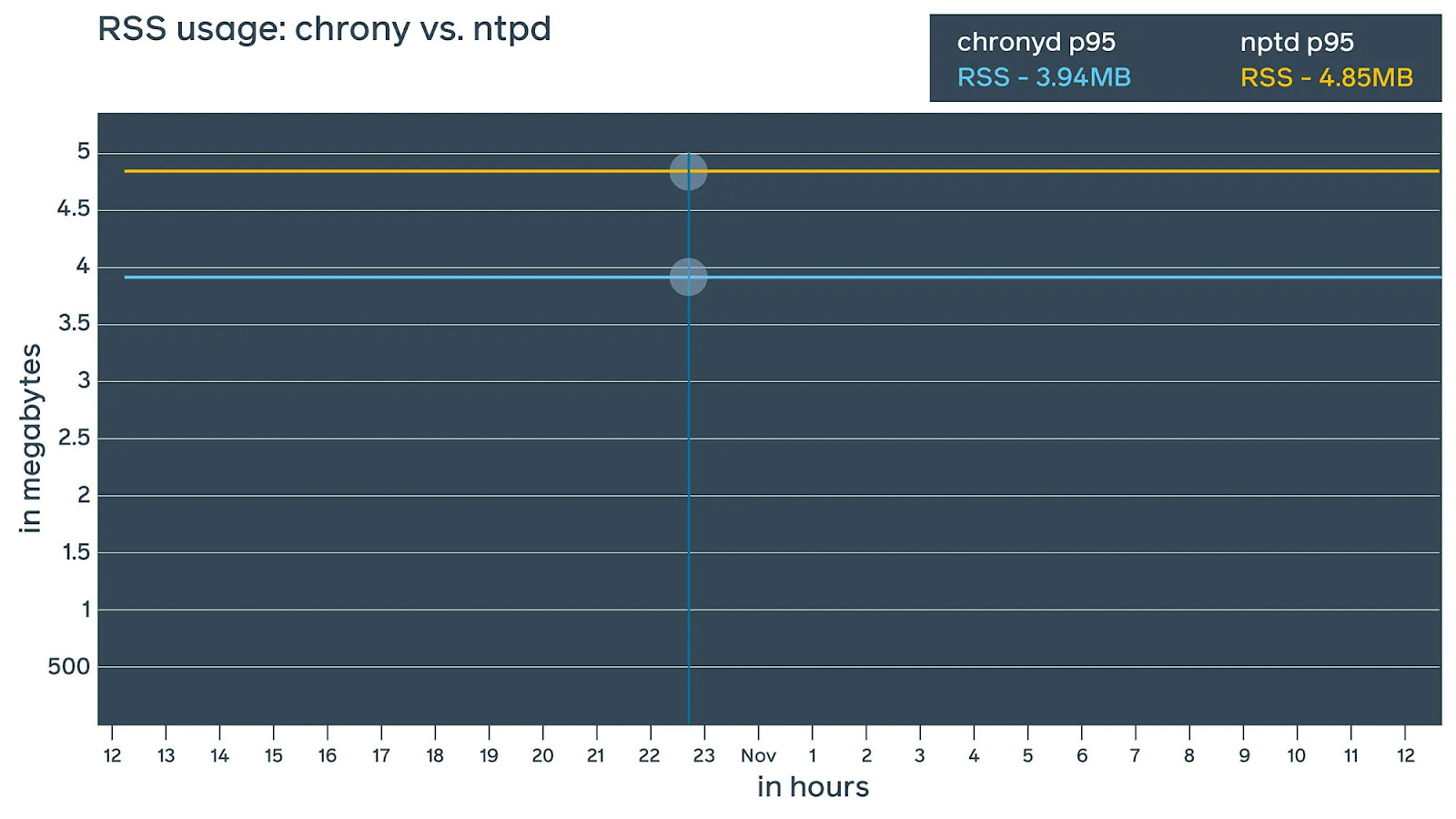

The diagram below shows the hierarchical system of servers used by NTP.

Facebook built an NTP service at scale. They initially used the ntpd service, but testing revealed that chrony, a more modern NTP server implementation, was far more accurate and scalable. Chrony was a fairly new daemon at the time, but it offered a chance to bring the precision down to nanoseconds.

Chrony also consumed less RAM than ntpd. The diagram below shows a difference of around 1MB in RAM consumption between chrony and ntpd.

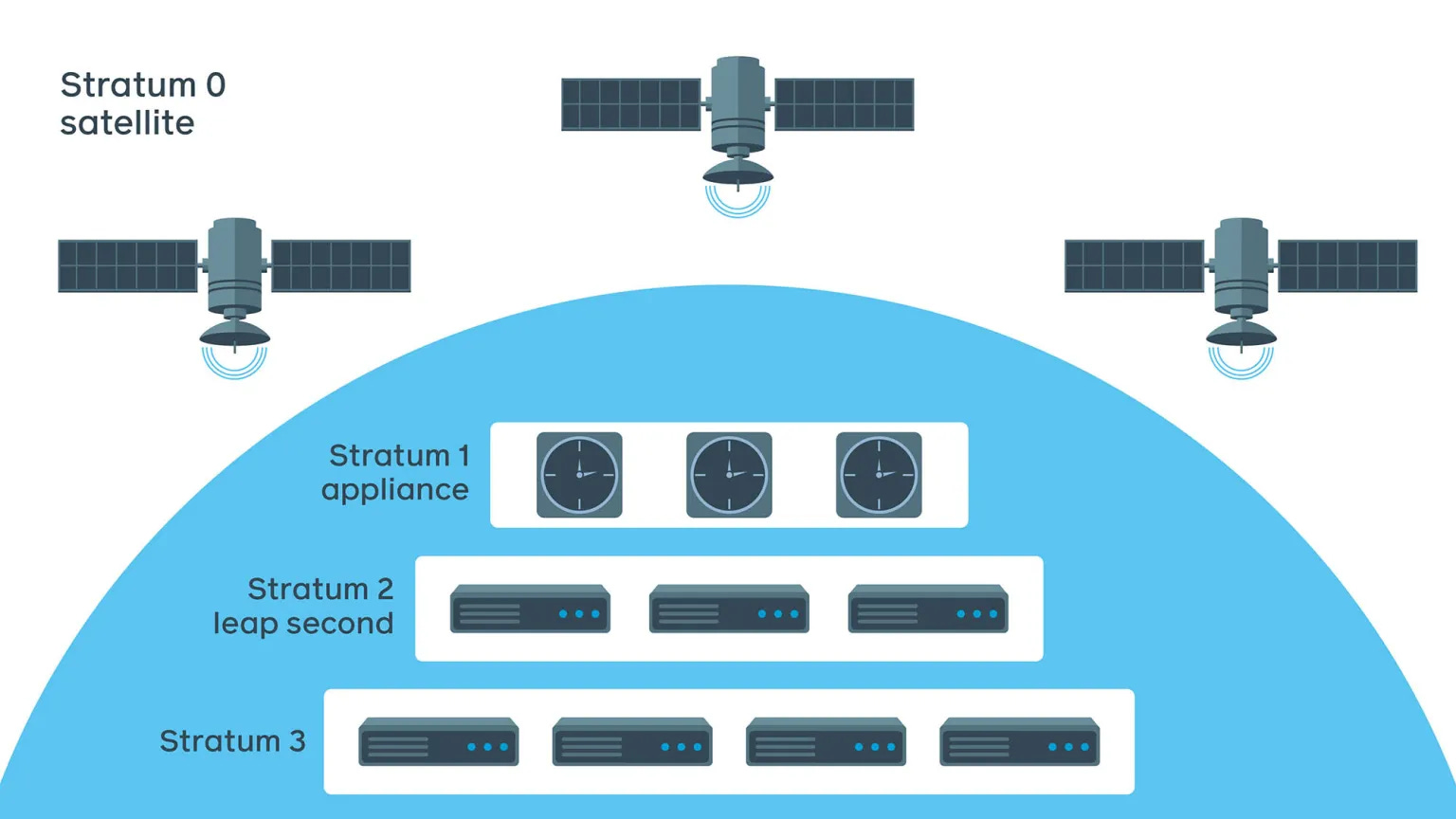

They designed the NTP service in four layers based on the hierarchical structure of NTP.

The diagram below shows the layers of Facebook’s NTP service.

There are a couple of interesting concepts to note here:

The Earth’s rotation is not consistent and can vary slightly over time. Therefore, clocks are kept in sync with the Earth’s rotation by occasionally adding or removing a second. This is called a leap second.

While adding or removing a leap second is hardly noticeable to humans, it can cause server issues. Servers expect time to move forward continuously, and a sudden change of a second can cause them to miss important tasks.

To mitigate the impact of leap seconds on servers, a technique called “smearing” is used. Instead of adding or removing a full second at once, the time is gradually adjusted in small increments over several hours. It’s similar to masking a train’s delay by spreading the adjustment across multiple stations.

In Facebook’s NTP service, leap-second smearing happens at Stratum 2. The Stratum 3 servers receive smeared time and are unaware of leap seconds.

The Arrival of Precision Time Protocol

NTP adoption was quite successful for Facebook. It helped them improve accuracy from 10 milliseconds to 100 microseconds. However, as Facebook wanted to scale to more advanced systems and build the metaverse, they wanted even greater levels of accuracy.

Therefore, in late 2022, Facebook moved from NTP to Precision Time Protocol (PTP). There were some problems with NTP, which are as follows:

In contrast, PTP works like a smartphone clock that updates its time automatically. When there’s a time change or the phone moves to a new time zone, the clock updates itself by referencing the time over a network.

While NTP provided millisecond-level synchronization, PTP networks could hope to achieve nanosecond-level precision.

What Makes PTP More Effective?

As discussed earlier, a time server at a given stratum of the hierarchy acts as a time reference for other computers on a network. When a computer needs to know the current time, it sends a request to the time server, which replies with the current time. This process is known as sync messaging.

When the time server sends the current time to another computer, the information travels across the network, resulting in some latency. Several factors can increase this latency, such as:

Due to latency, the time is no longer accurate when it arrives at the receiving computer. The obvious solution is to measure the latency and add it to the received time to get a more accurate value. However, measuring latency is challenging because each computer has its own clock, and there is no universal clock to compare against. To measure latency, two assumptions about consistency and symmetry are made:

Therefore, it follows that the accuracy of time synchronization can be improved by maximizing consistency and symmetry in the network. PTP is a solution that helps achieve this.
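To make the role of the symmetry assumption concrete, here is a minimal sketch of the standard four-timestamp calculation that NTP uses to estimate clock offset and round-trip delay. PTP's delay measurement rests on the same assumption. The function name and the sample timestamps (in microseconds) are illustrative assumptions, not taken from Facebook's implementation.

```python
def estimate_offset_and_delay(t1, t2, t3, t4):
    """Estimate clock offset and round-trip delay from one request/response.

    t1: request sent      (client clock)
    t2: request received  (server clock)
    t3: response sent     (server clock)
    t4: response received (client clock)
    """
    # Time spent on the network, excluding server processing time.
    delay = (t4 - t1) - (t3 - t2)
    # Server clock minus client clock, assuming both directions take equally long.
    offset = ((t2 - t1) + (t3 - t4)) / 2
    return offset, delay


# Hypothetical scenario: the client clock is 5000 us behind the server,
# each direction takes 2000 us, and the server needs 1000 us to respond.
symmetric = estimate_offset_and_delay(0, 7000, 8000, 5000)

# Same true offset, but the paths are asymmetric: 1000 us out, 3000 us back.
asymmetric = estimate_offset_and_delay(0, 6000, 7000, 5000)

print(symmetric)   # (5000.0, 4000)
print(asymmetric)  # (4000.0, 4000)
```

In the symmetric case the estimate recovers the true 5000 us offset. In the asymmetric case the measured delay is identical, yet the offset estimate is wrong by half the difference between the two path latencies, which is why a more symmetric network gives better synchronization.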

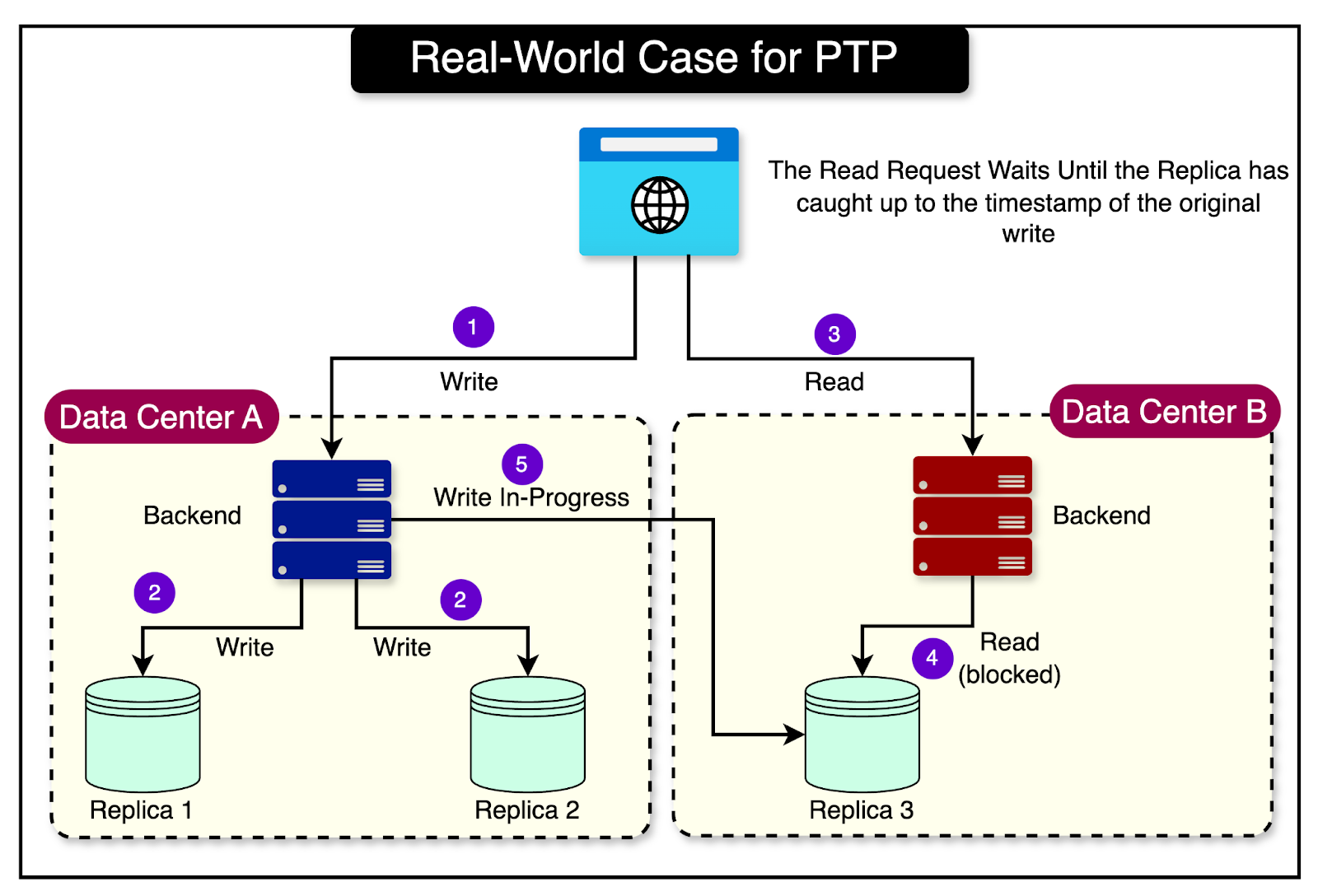

The Need for PTP

Let’s look at a practical case where PTP is needed.

Imagine you’re using Facebook and you post a new status update. When you try to view your post, there’s a chance that your request to see the post is handled by a different server than the one that originally processed your post. If the server handling your view request doesn’t have the latest data, you might not see your post.

This is annoying for users and goes against the promise that interacting with a distributed system like Facebook should work the same as interacting with a single server that has all the data.

In the old solution, Facebook used to send your view request to multiple servers and wait for a majority of them to agree on the data before showing it to you. But this takes up extra computing resources and adds delay because of the back-and-forth communication over the network.

By using PTP to keep precise time synchronization across all its servers, they can simply have the view request wait until the server has caught up to the timestamp of your original post. There is no need for multiple requests and responses.

The diagram below shows this scenario.

However, this only works if all the server clocks are closely synchronized. Also, the difference between a server’s clock and the reference time needs to be known. PTP provides this tight synchronization. It could synchronize time about 100 times more precisely than NTP, which was necessary for Facebook’s requirements.

This was just one example. There were several additional use cases where PTP excelled, such as:

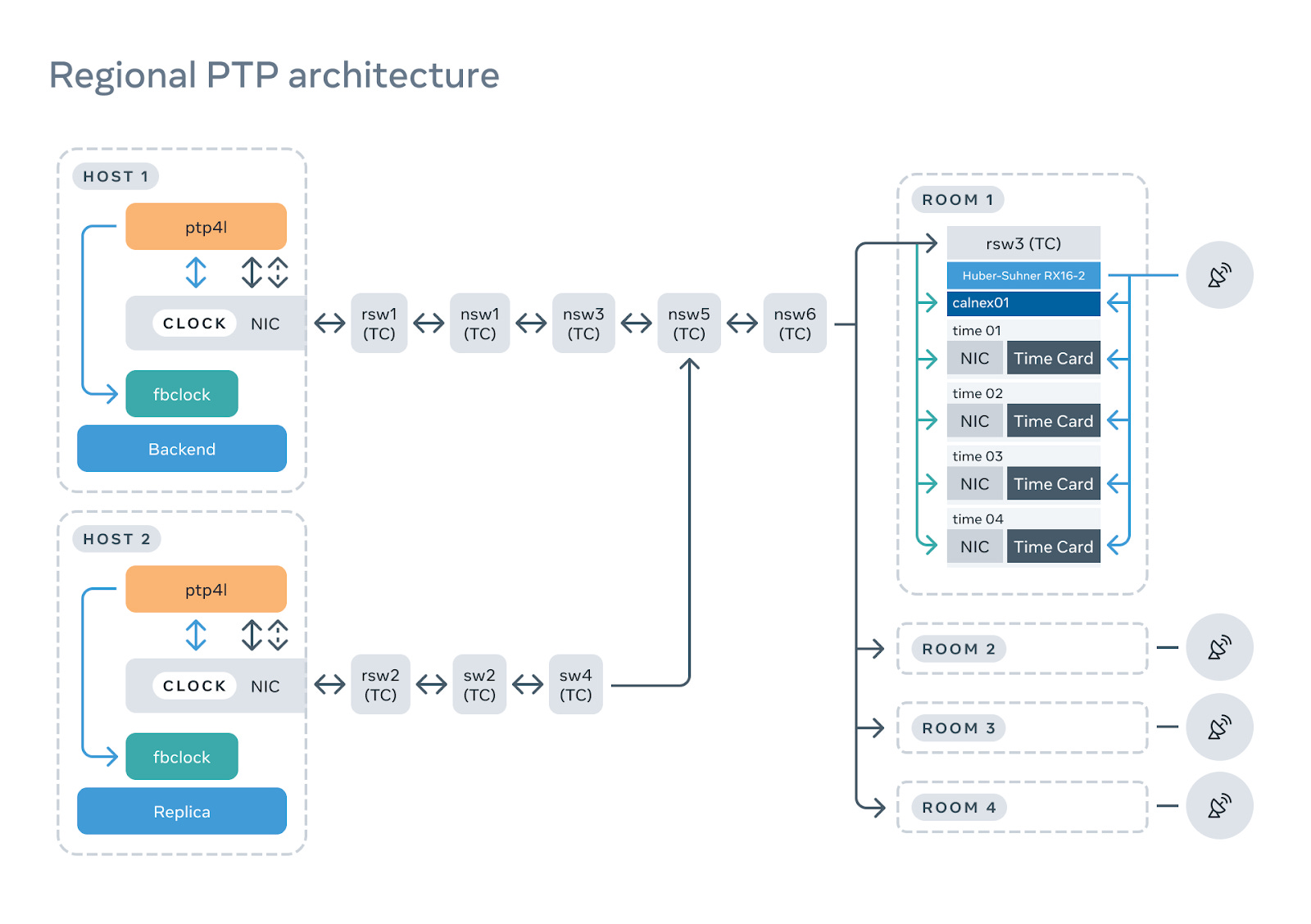
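The "wait until the server has caught up" read from the status-update example can be sketched as a toy model. This is only an illustration under stated assumptions: the `Replica` class, its `apply_write` and `read_at` methods, and the integer timestamps are hypothetical and do not come from Facebook's systems. The sketch also assumes the timestamps from different servers are comparable, which is exactly what tight clock synchronization is meant to provide.

```python
import threading


class Replica:
    """Toy replica that tracks the timestamp of the newest write it has applied."""

    def __init__(self):
        self._cond = threading.Condition()
        self._applied_ts = 0  # hypothetical unit, e.g. nanoseconds
        self._data = {}

    def apply_write(self, key, value, ts):
        # Called when a replicated write, stamped by the accepting server, arrives.
        with self._cond:
            self._data[key] = value
            self._applied_ts = max(self._applied_ts, ts)
            self._cond.notify_all()

    def read_at(self, key, min_ts, timeout=1.0):
        # Wait until this replica has caught up to min_ts, then answer locally
        # instead of querying several replicas and waiting for a majority.
        with self._cond:
            caught_up = self._cond.wait_for(
                lambda: self._applied_ts >= min_ts, timeout=timeout
            )
            if not caught_up:
                raise TimeoutError("replica did not catch up in time")
            return self._data.get(key)


# The post was written elsewhere at timestamp 1000 and reaches this replica
# 50 ms after the read begins; the read waits rather than returning stale data.
replica = Replica()
post_ts = 1000
threading.Timer(0.05, replica.apply_write, args=("post:1", "hello", post_ts)).start()
result = replica.read_at("post:1", min_ts=post_ts)
```

The read needs only one request to one server. The trade-off is that its correctness depends on how well the clocks agree, so the known clock error would have to be factored into the wait in a real system.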

The PTP Architecture

Facebook’s PTP architecture consists of three main components:

See the diagram below for a high-level view of the architecture:

Let’s look at each component and understand how they work together to provide precise timekeeping.

The PTP Rack

The PTP rack houses the hardware and software that serve time to clients. It consists of critical components such as:

The PTP Network

The PTP network is responsible for distributing time from the PTP rack to clients. Facebook uses PTP over a standard IP network with a few key enhancements:

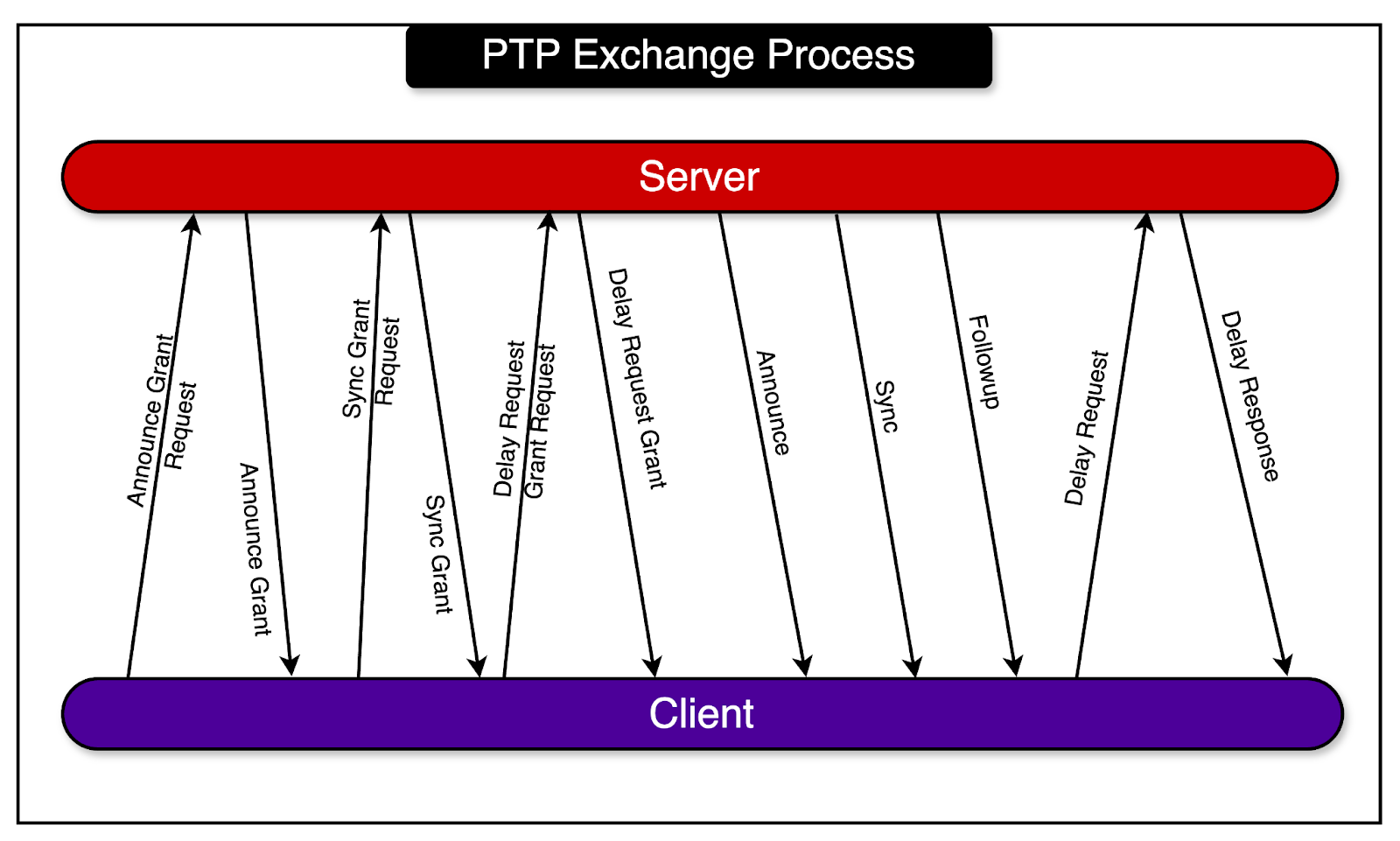

A typical PTP unicast flow consists of the following steps:

The diagram below shows the PTP exchange process.

The PTP Client

The PTP client software runs on each server that needs accurate time. Facebook uses a few different components:

Conclusion

To conclude, Facebook’s adoption of Precision Time Protocol (PTP) across its infrastructure is a significant step forward in ensuring precise and reliable timekeeping at an unprecedented scale. By redesigning and rebuilding various components, Facebook has pushed the boundaries of what’s possible with PTP.

Also, because most of this work is open source, others can learn from the PTP solution Facebook implemented.

References:

© 2024 ByteByteGo

by "ByteByteGo" <bytebytego@substack.com> - 11:36 - 6 Aug 2024