
The best way to motivate your global team

Find out how granting equity to your team drives engagement and retention.

Hi MD,

Did you know that 95% of human resources leaders consider equity compensation to be the most effective way to motivate and retain top talent?

But granting equity internationally is very complicated, with different rules and requirements across the world that could put your company at risk of missed deadlines, higher taxes, and harsh penalties.

Remote Equity Essentials gives you:

- Compliance by design – Expert-backed insights on tax and reporting requirements, keeping your company safe from potential fines.

- Clarity – A country-by-country breakdown of how equity works worldwide, so you’re never in the dark.

- Transparency – A dedicated employee portal that educates your team and improves visibility around your equity grants.

- Efficiency – No more hours spent researching regulations or hiring external consultants — all the answers are in one place.

So, if you’re ready to provide incentives to your team and drive great engagement, set up a demo with our EOR team today - we’ll get you onto the platform and provide a stronger equity program for your team.

You received this email because you are subscribed to

News & Offers from Remote Europe Holding B.V

Update your email preferences to choose the types of emails you receive.

Unsubscribe from all future emails

Remote Europe Holding B.V

Copyright © 2025 Remote Europe Holding B.V All rights reserved.

Kraijenhoffstraat 137A 1018RG Amsterdam The Netherlands

by "Remote Team" <hello@remote-comms.com> - 10:04 - 15 Apr 2025 -

What McKinsey research suggests about trade shifts

On McKinsey Perspectives

Geopolitical geometry

Brought to you by Alex Panas, global leader of industries, & Axel Karlsson, global leader of functional practices and growth platforms

Welcome to the latest edition of Only McKinsey Perspectives. We hope you find our insights useful. Let us know what you think at Alex_Panas@McKinsey.com and Axel_Karlsson@McKinsey.com.

—Alex and Axel

—Edited by Belinda Yu, editor, Atlanta

This email contains information about McKinsey's research, insights, services, or events. By opening our emails or clicking on links, you agree to our use of cookies and web tracking technology. For more information on how we use and protect your information, please review our privacy policy.

You received this email because you subscribed to the Only McKinsey Perspectives newsletter, formerly known as Only McKinsey.

Copyright © 2025 | McKinsey & Company, 3 World Trade Center, 175 Greenwich Street, New York, NY 10007

by "Only McKinsey Perspectives" <publishing@email.mckinsey.com> - 01:47 - 15 Apr 2025 -

New Filter shower head

Dear,

We're Horrizon Technology Co.,Ltd, a professional manufacturer of kitchen and bathroom products for more than 10 years, with BSCI and Walmart audits.

We're excited to introduce our latest Filter Showerhead. In today's health-conscious world, water quality matters, especially during showers. Our showerhead is here to enhance your shower experience.

Powerful Filtration

This filter showerhead efficiently removes impurities, chlorine, and heavy metals, safeguarding your skin and hair. This makes it stand out among market alternatives.

Custom & Reliable

Our strong production and supply chain ensure stable product supply. We also offer customization based on your brand and market needs, from logos to water-flow settings.

We believe our Filter Showerhead, combined with your market reach, can create great opportunities. Let's partner up!

Best regards,

Jessica

Xiamen Galenpoo Kitchen&Bathroom Technology Co.,Ltd

MOB:86 13376985999

WEB:www.galenpoo.com

ADD:No.1,Huli industrial park,Meixi road,Tongan District,Xiamen,China

by "Princi Kilickaya" <princikilickaya182@gmail.com> - 11:20 - 14 Apr 2025 -

Just days away: Fuel insights with AI-ready data

This webinar offers actionable insights to help you stay one step ahead.

SAP WEBCAST

Fuel your most important business processes with AI-ready data that is connected, actionable, and contextual

We’re just days away from diving into how to put your data to work to enable AI across all your processes.

Join us on April 23 to explore how to:

- Harmonize all your data and analytics to empower faster, more informed decision-making.

- Integrate your business data and analytics with key ERP processes for deeper insights.

- Use data, AI, and ERP solutions together to automate critical daily decisions.

Contact us

See our complete list of local country numbers

SAP (Legal Disclosure | SAP)

This e-mail may contain trade secrets or privileged, undisclosed, or otherwise confidential information. If you have received this e-mail in error, you are hereby notified that any review, copying, or distribution of it is strictly prohibited. Please inform us immediately and destroy the original transmittal. Thank you for your cooperation.

You are receiving this e-mail for one or more of the following reasons: you are an SAP customer, you were an SAP customer, SAP was asked to contact you by one of your colleagues, you expressed interest in one or more of our products or services, or you participated in or expressed interest to participate in a webinar, seminar, or event. SAP Privacy Statement

This email was sent to info@learn.odoo.com on behalf of the SAP Group with which you have a business relationship. If you would like to have more information about your Data Controller(s) please click here to contact webmaster@sap.com.

This offer is extended to you under the condition that your acceptance does not violate any applicable laws or policies within your organization. If you are unsure of whether your acceptance may violate any such laws or policies, we strongly encourage you to seek advice from your ethics or compliance official. For organizations that are unable to accept all or a portion of this complimentary offer and would like to pay for their own expenses, upon request, SAP will provide a reasonable market value and an invoice or other suitable payment process.

This e-mail was sent to info@learn.odoo.com by SAP and provides information on SAP’s products and services that may be of interest to you. If you received this e-mail in error, or if you no longer wish to receive communications from the SAP Group of companies, you can unsubscribe here.

To ensure you continue to receive SAP related information properly, please add sap@mailsap.com to your address book or safe senders list.

by "SAP" <sap@mailsap.com> - 10:34 - 14 Apr 2025 -

A leader’s guide to keeping customers happy and loyal

Leading Off

Know their needs

Brought to you by Alex Panas, global leader of industries, & Axel Karlsson, global leader of functional practices and growth platforms

Welcome to the latest edition of Leading Off. We hope you find our insights useful. Let us know what you think at Alex_Panas@McKinsey.com and Axel_Karlsson@McKinsey.com.

—Alex and Axel

For businesses in all sectors, providing the best possible customer experience (CX) is a must. It’s also an increasingly complex challenge. CX strategies—which encompass everything a company does to deliver high-quality experiences, value, and growth to customers—are changing rapidly, as younger consumers exert more influence and technology reshapes the ways that both B2C and B2B organizations fulfill their customers’ needs. This week, we look at how leaders in various industries can help their organizations create an exceptional CX.

Consumers increasingly expect more convenience, cost transparency, and personalized engagement from businesses of all kinds. Healthcare offers one example. Many organizations in the healthcare industry have been slow to adapt to customers’ preferences, giving both providers and payers a substantial opportunity to deliver a better CX and improve business results by addressing consumers’ evolving expectations. According to McKinsey Partner Jenny Cordina and her coauthors, three McKinsey surveys of US consumers revealed low to medium satisfaction with many elements of their healthcare journeys. The authors recommend six steps for upgrading the healthcare CX, including more expedient options for care, more competitive pricing, and the use of digital tools in health management. “Leaders of healthcare organizations who invest in building strong consumer foundations will be well positioned to compete in a market that’s increasingly empowering consumers to own their healthcare journeys,” the authors say.

That’s how much faster revenue grew for companies with higher CX ratings compared with “CX laggards” between 2016 and 2021, according to McKinsey research on how organizations can better serve their existing customers. McKinsey’s Harald Fanderl, Oliver Ehrlich, Robert Schiff, and Victoria Bough also note that revenues of CX leaders rebounded more quickly from the COVID-19 pandemic than those of other organizations.

That’s McKinsey Senior Partners Alexander Sukharevsky and Emily Reasor and their coauthors on how retail companies can generate value from gen AI. One important tool is the AI-powered chatbot, which can recommend products, help customers learn more about certain items or companies, or manage a customer’s virtual shopping cart. “Since many consumers will use these chatbots before deciding to purchase a product rather than after, using chatbots allows retailers to engage with customers earlier in their shopping journey, which can help increase customers’ overall satisfaction,” the authors say.

Shortages of certain products during the COVID-19 pandemic gave rise to a phenomenon of weakened brand loyalty that continues to shape consumer activity. “A lot of these behaviors stuck: switching brands, switching channels, exploring alternatives,” Senior Partner Sajal Kohli says in an episode of The McKinsey Podcast on the state of consumer behavior. A McKinsey survey of 15,000 consumers revealed that more than one-third of shoppers in more developed markets had tried different brands over the previous three months, and some 40 percent had switched retailers to find better prices or discounts. “That is pretty unprecedented in terms of consumer choices,” Kohli notes. These changes suggest that companies will need to dig deeper to understand consumers’ desires and motivations. “It’s about investing in data and advanced capabilities to really understand consumers at an individual, small, or microsegment level,” Kohli says.

Advertising and marketing campaigns that deliver “surprise and delight”—from scrappy viral marketing campaigns to big-money Super Bowl commercials—are time-tested tools for wooing new customers. But the importance of delighting customers goes far beyond getting them to buy for the first time. Delight is critical to customer loyalty and business growth, according to McKinsey’s Ankit Bisht and Sangeeth Ram and their coauthors. Through surveys of 25,000 customers across industries, including tourism, insurance, and banking, they found that companies that prioritize customer delight outperform their competitors in both revenue growth and total shareholder returns. Their research also indicates that service excellence and product innovation are the biggest factors in keeping customers happy and coming back to the company. “By prioritizing customer delight and implementing smart, sustainable strategies, companies can unlock new frontiers of service and drive lasting returns without incurring prohibitive expenses,” the authors say.

Lead by satisfying your customers.

— Edited by Eric Quiñones, senior editor, New York

Share these insights

Did you enjoy this newsletter? Forward it to colleagues and friends so they can subscribe too. Was this issue forwarded to you? Sign up for it and sample our 40+ other free email subscriptions here.

You received this email because you subscribed to the Leading Off newsletter.

by "McKinsey Leading Off" <publishing@email.mckinsey.com> - 04:17 - 14 Apr 2025 -

How are executives’ views on the economy changing?

On McKinsey Perspectives

Biggest risks to growth

Brought to you by Alex Panas, global leader of industries, & Axel Karlsson, global leader of functional practices and growth platforms

Welcome to the latest edition of Only McKinsey Perspectives. We hope you find our insights useful. Let us know what you think at Alex_Panas@McKinsey.com and Axel_Karlsson@McKinsey.com.

—Alex and Axel

Mounting uncertainty. In the midst of numerous policy changes, uncertainty looms over views on the economy. In a recent McKinsey Global Survey from March, Senior Partner and McKinsey Global Institute Chair Sven Smit and coauthors found that respondents now view shifts in trade policy and geopolitical instability—which was long seen as the top risk to growth—as equally disruptive forces. In addition, respondents are much more likely now than they were in December to predict a decline in economic conditions, both worldwide and in their own countries.

—Edited by Belinda Yu, editor, Atlanta

by "Only McKinsey Perspectives" <publishing@email.mckinsey.com> - 01:21 - 14 Apr 2025 -

Re: Follow Up

Hi,

I wanted to check with you if you had time to go through my previous email.

Let me know your thoughts about acquiring this email list.

Regards,

Rebecca

Hi, Good day to you!

We have successfully built a verified file of Cosmetics Industry contacts with accurate emails. Would you be interested in acquiring Cosmetics Industry Professionals List across North America, UK, Europe and Global?

Few Lists:

- Cosmetics and personal care product manufacturers

- Fragrance Manufacturers, Home Care Manufacturers

- Cosmetics and Personal Care Brand Owners

- Perfume & Toiletries Manufacturers

- Private label and Contract manufacturers

- Salon and hotel chains

- Consultants, Packaging Professionals, etc

Our list comes with: Company/Organization, Website, Contacts, Title, Address, Direct Number and Email Address, Revenue Size, Employee Size, Industry segment.

Please send me your target audience and geographical area, so that I can give you more information, Counts and Pricing just for your review.

Thank you for your time and consideration. I eagerly await your response.

Regards,

Rebecca Baker| Customer Success Manager

B2B Marketing & Tradeshow Specialist

We have a responsibility to the environment

Before printing this e-mail or any other document, let's ask ourselves whether we need a hard copy

by "Rebecca Baker" <Rebecca@b2bleadsonline.us> - 12:16 - 14 Apr 2025 -

Premium Hotel Amenities & Custom Solutions

Dear info

How are you

I just visited your website and learned that you are a leading supplier of high-quality hotel products in [Client's Country], with a prestigious reputation for excellence. You may be interested in finding a new, reliable source of hotel amenities, slippers, hotel accessories, and similar products that combine superior quality with competitive pricing.

At JiangSu QianYiFan, we offer a wide range of premium hotel products, including eco-friendly amenities, luxurious slippers, and custom-designed hotel accessories, with designs updated regularly to meet the latest trends.

With over 23 years of experience, we are one of the largest suppliers in China. We are proud to have passed **ISO9001, ISO14001, FSC, and GMPC certification**, among others, and all our products meet international quality standards.

Our satisfied customers include **Marriott, Ritz-Carlton, IHG, Radisson, and Kempinski**, among others.

I am confident that working with us will bring you the same level of satisfaction.

To help you better understand our quality and service, we can start with a small order.

If you like, I can send you our e-catalogue and provide further details.

Looking forward to your reply!

Best regards,

Bella

Sales Director

JiangSu QianYiFan International Trading Co.

Website: [www.qyfamenitydeluxe.com]

by "Nori Bethel" <bethelnori337@gmail.com> - 11:33 - 13 Apr 2025 -

Product Catalog Request

Dear Sir/Madam,

I am writing to express the interest of MAGSI INTERNATIONAL in exploring purchasing opportunities with your esteemed organization. My name is Dominique Floch, and I would be grateful if a sales manager could respond to this email at their earliest convenience.

In order to facilitate our evaluation, could you please provide us with an updated price list of your products for our review? We would appreciate it if this information could be shared with us as soon as possible.

Thank you for your prompt attention to this matter, and we look forward to hearing from you soon.

Best regards,

Dominique Floch

MAGSI INTERNATIONAL

Dominique FLOC'H

Responsable Export, Grands Comptes et Loueurs

+33(0) 609 14 54 28

+33(0) 298 24 12 85

dominiquefloch805@gmail.com

www.magsi.fr

MAGSI, ZA de Bel Air, 29 450 SIZUN

by "Dominique Floc'h" <dominiquefloch805@gmail.com> - 07:32 - 13 Apr 2025 -

HRDC !!! BREAKTHROUGH THINKING SKILLS FOR EXECUTIVES & MANAGERS (5 & 6 May 2025)

HRDC CLAIMABLE COURSE !!!

Please call 012-588 2728

email to pearl-otc@outlook.com

FACE-TO-FACE PUBLIC PROGRAM

BREAKTHROUGH THINKING SKILLS

FOR EXECUTIVES & MANAGERS

- HARNESS YOUR CREATIVE AND INNOVATIVE POTENTIAL IN DIFFICULT TIMES

Venue : Wyndham Grand Bangsar Kuala Lumpur Hotel (SBL Khas / HRD Corp Claimable Course)

Date : 5 May 2025 (Mon) | 9am – 5pm

6 May 2025 (Tue) | 9am – 5pm

By Daniel

COURSE OVERVIEW:

BREAKTHROUGH THINKING provides the key skills that business and marketing professionals need to hone their creative and innovative thinking. It includes interactive teaching tools and exercises that will help participants strengthen their creativity and learn how to apply creative concepts and innovative strategies to their business environments.

What do businesses need today? A new way of thinking that opens a door they didn't even know existed. A way of thinking that seeks a solution to an intractable problem through unorthodox or rational methods or elements that would normally be ignored by logical thinking. They need Breakthrough Thinking.

Developing breakthrough ideas does not have to be the result of luck or a shotgun effort. These proven Breakthrough Thinking methods provide a deliberate, systematic process that results in innovative thinking. They enable participants to empower their creative process and generate the innovative ideas and solutions needed in a difficult economy.

Creative thinking is not a talent; it's a skill that can be learned. It empowers people by adding strength to their natural abilities, which improves creativity and innovation, which leads to increased productivity and profit. Today, better quality and better service are essential, but they are not enough; exceeding expectation is the call of the day in a highly competitive market. Creativity and innovation are the only engines that will not only shield a business from competitive challenges but drive lasting global success.

EXPECTED RESULT:

You will be able to:

- Create many alternatives which give you options for achieving the purpose

- Be very clear as to where you want to focus your creative energies and what you want as final output

- Find different and/or better ways to solve seemingly insoluble problems

- Break your thinking patterns to generate new practical ideas

- Constructively challenge your organization’s current thinking strategy

- Turn issues into real opportunities.

WHO SHOULD ATTEND

All executives & managers alike who need highly innovative solutions that help them shake up traditional logic and mindsets and bring that extraordinary creativity and innovation to the workplace.

METHODOLOGY

- The workshop is highly interactive and participative allowing participants to internalize the concepts and knowledge learnt.

- Real life problems in the office will be dealt with for relevance.

- There is a mixture of lectures, role plays, skill practices, discussions, games, group dynamics, simulations, reflective, inductions, incubation and NLP mind programming exercises to integrate learning.

OUTLINE OF WORKSHOP

Introduction to the Program

- Introducing the workshop Objectives & Facilitator

- Understanding the Right Brain and Left Brain Functions

- Crazy or Illogical or Stupid?

- Group Activity: Highly Creative Ice Breaker

The Importance of Creative Thinking

- The limitations of logical thinking.

- The Nature of Creativity

- Creative Thinking is Both a Mental and Physical Activity

- Activity: Self-assessment with Creativity Profile

- Exercise: Maximize Creativity By Using Eye Movements

Breakthrough Thinking Techniques:

1. Alternatives

a. Generating alternative ideas

b. Advanced Brainstorming

c. Activity: Reverse Brainstorming

d. How to use ‘concepts’ as a breeding ground for new ideas.

e. Creative Visualization with Mind Maps & Concept Maps

f. Exercise: The Albert Einstein Technique

2. Focus

a. The discipline of defining your focus

b. Sharpening your Senses

c. How to generate and use a ‘creative hit list’.

d. Video activity: Self-discipline in focus

e. Exercise: Nominal Group Technique

3. Challenge

a. Breaking free from the limits of the accepted ways of operating.

b. Heading towards the Impossible or Illogical

c. Turning the Impossible to Possible

d. Exercise: Transcending Limitations

e. Activity: Collapsing Anchors to break free from comfort zone

4. Random entry

a. Using unconnected input to open up new lines of thinking.

b. Activity: Entertaining Heterogeneous Competence

c. Exercise: Random Word Technique

5. Provocation and movement

a. Explore the nature of perception and how it limits our creativity.

b. How to Change Perceptions

c. Exercise: Reframing for Change

Implementation of Ideas

- Harvesting

i. Collect all ideas and concepts that are less developed or obvious.

ii. Harnessing the solutions of another project/problem by association

- Treatment of ideas

i. How to develop ideas and shape them towards their practical application in your business.

ii. Develop Creative Potential By Allowing Incubation Time

- Implementation Process

i. Task Cycle

ii. Activity: Process Game

iii. Exercise: Process Flow Chart/PERT Charting

This advanced segment goes beyond basic creative thinking skills and focuses on a process of solving business problems using six specific steps: objective finding, fact finding, problem finding, idea finding, solution finding, and acceptance finding. When used properly, this method can significantly reduce the time required to discover an innovative and sound solution.

** A certificate of attendance will be awarded to those who complete the course

ABOUT THE FACILITATOR

Daniel

- Certified Professional Trainer, MIM

- MBA (General) University of Hull, UK

- Council of Engineering Institutions (UK) Part I & II

- Associate Member of Institute of Electrical Engineers, UK

- ISO Standardization Internal Auditor

- NLP Master Trainer Certification, NFNLP US

- Certified Master Conversational Hypnotherapist, IAPCH US

- Certified Specialist in Neuro-Hypnotic Repatterning, SNLP US

- Diploma in International Sound Therapy, IAST Alicante, Spain

Daniel has more than 30 years of experience in the corporate world, of which 20 years have been spent on coaching and training: teaching Sales & Negotiation Skills and Management & Leadership skills, and training companies and individuals in Coaching & Mentoring Skills, Communication Skills, Neuro-Linguistic Programming (NLP) and Project Management skills. In the process, he has continually coached and motivated his students, staff and associates to learn, grow and expand their personal paradigms and horizons.

He also has more than 15 years of extensive sales management, marketing and sales experience, ranging from selling single course programs to education franchise businesses, and from single pieces of equipment up to multi-million US dollar projects. In his last major corporate appointment, as Assistant General Manager of GEC (UK), Mahkota Technologies, he was fully in charge of both local and regional sales for his Division and successfully managed over 15 different products and systems, with an annual turnover of RM60 million, for both the domestic and regional markets.

He is an engineer by profession and completed his professional engineering degree, the Council of Engineering Institutions (UK) Part I & II, within 2 years, one year short of the normal period of 3 years, for which he received the Excellent Performance Award in the Professional degree. He has held positions in various multinational companies, from young installation/service engineer to department manager and general manager of a leading MNC. Currently, he is a Certified Professional Trainer with the Malaysian Institute of Management (MIM) and Cambridge ICT. He is also a Certified Master NLP Trainer of the National Federation of Neuro-Linguistic Psychology, USA.

He also specializes in Effective Communication & Project Management skills, Management & Leadership Skills, Sales training applying Neuro-linguistic Programming and Subconscious Learning in order to accelerate adult learning. His other specialties include Functional & Instructional English for the Workplace, Effective English for Front-liners, Writing Skills, Presentation skills and Engaging Dialogue.

Daniel recognizes the great potential within each individual participant and he believes in making a difference in their learning through his personalised, demonstrative, practical and dynamic approach to make training an enjoyable and valuable experience. His passion for training stems from his innate desire to empower all those individuals who are keen to seek knowledge, wisdom and self improvement; so that they can be the person they need to be to have what they want.

(SBL Khas / HRD Corp Claimable Course)

TRAINING FEE

2 days Face-to-Face Public Program

RM 2,407.00/pax

(excluding 8% SST)

Group Registration: Register 3 participants from the same organization, the 4th participant is FREE.

(Buy 3 Get 1 Free) if you register before 25 April 2025.

We hope you find it informative and interesting and we look forward to seeing you soon.

Please act fast to grab your favorite training program! Please call 012-588 2728

or email to pearl-otc@outlook.com

Do forward this email to all your friends and colleagues who might be interested to attend these programs

If you would like to unsubscribe from our email list at any time, please simply reply to the e-mail and type Unsubscribe in the subject area.

We will remove your name from the list and you will not receive any additional e-mail

Thanks

Regards

Pearl

by "pearl@otcmsb.com.my" <pearl@otcmsb.com.my> - 04:30 - 13 Apr 2025 -

Exploring Partnership Opportunities in CNC Machining

Dear info

OI Tech has specialized in CNC machining for 10 years, and we have 200 CNC machines. We have made aluminum parts for medical devices, cars, motorcycles and bicycle accessories. We have many customers all over the world, such as GE and LG. We offer 5-axis, 4-axis and 3-axis machining, lathe work, etc. The materials we work with are AL6061, AL7075, AL6063, AL5052, stainless steel, brass, copper, etc. The finishes we offer are anodizing in all colors, chrome plating, hard anodizing and powder coating.

Please send me your files in STEP/STP/IGS format for a quote.

OI Tech looks forward to establishing a long-term business relationship with you.

Best Regards

by "Shital Sabi" <shitalsabi96@gmail.com> - 03:33 - 13 Apr 2025 -

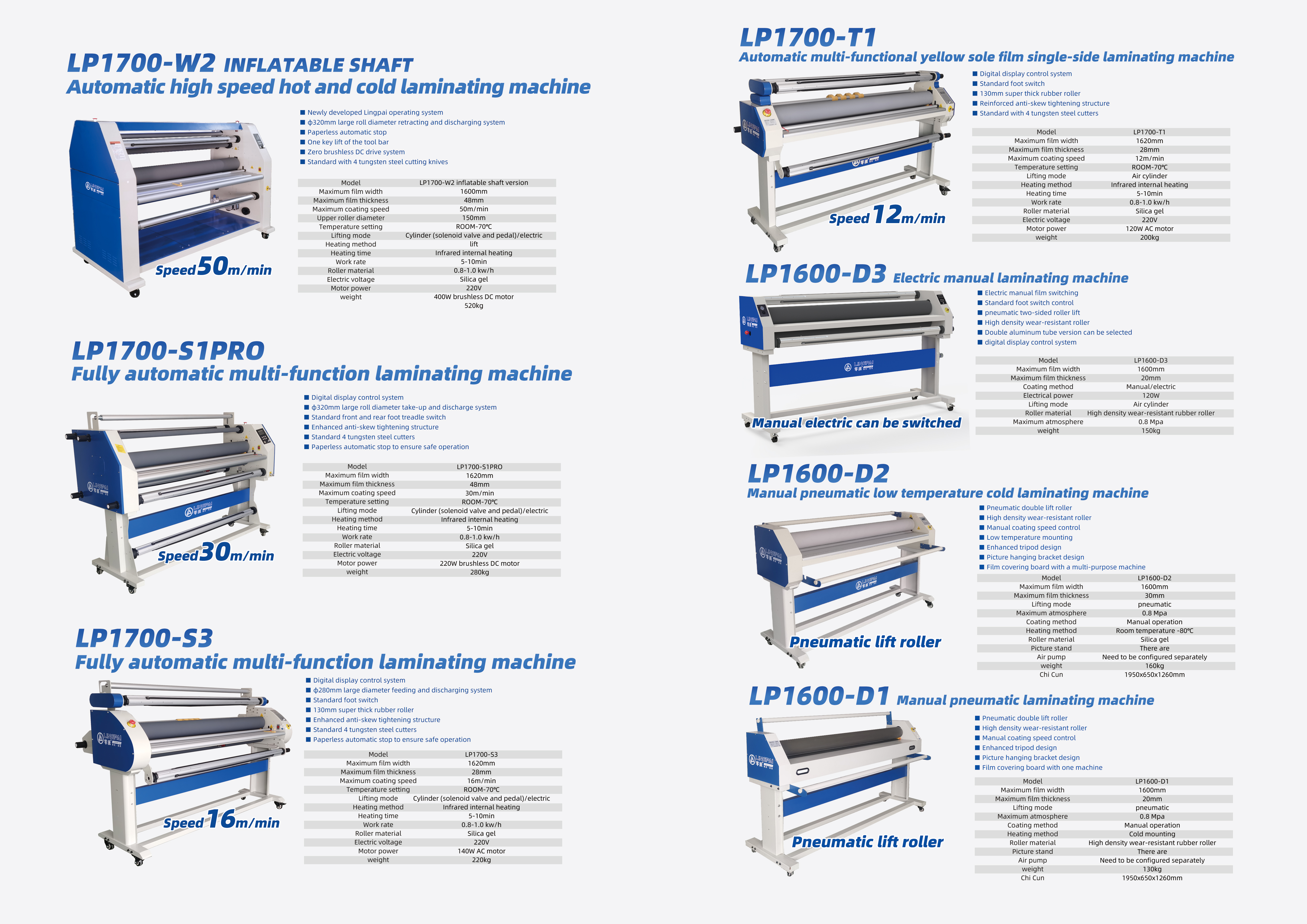

Laminator, improve your packaging efficiency

Dear info,

This is Jessie from the LP Laminator factory in Hangzhou, China. We are a leading manufacturer of cold laminators, low-heat laminators, thermal laminators, no-bottom-film laminators, etc. We have focused on laminators for more than 12 years and offer competitive prices.

We look forward to starting a friendly, long-term business relationship with you and hope to hear back from you.

Sincerely,

Jessie Li

Hangzhou Zhanqian Technology Co., Ltd

Tel: 0086-18905869797

Skype:lxf0925@163.com

Email:lxf0925@163.com

Website:https://chinalingpai.en.alibaba.com

by "Jarlin Mancha" <manchajarlin@gmail.com> - 02:36 - 13 Apr 2025 -

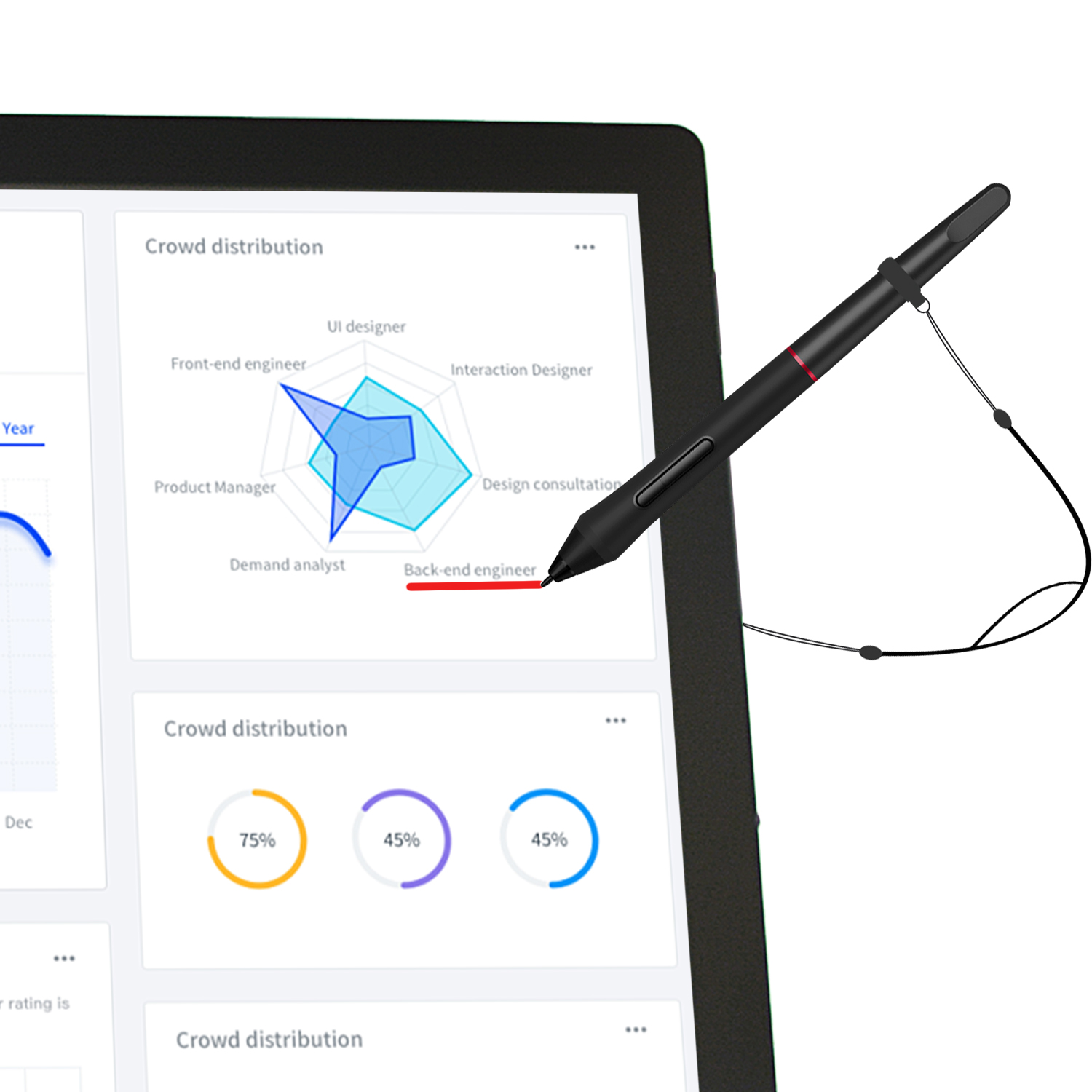

Interactive Podium Monitor

Features of QIT 600F3

- Precision Pen Touch

- Adjustable Angles

- Finger Touch Control

- Dual-Protection Screen

- Real-Time Sync for Operations and Annotations

Why choose us?

Chat support 24/7

Protecting your orders

The best prices on the market

Provide You with Warranty Service

QOMOEDU.COM Click the link above to visit our website.

Email: galen@qomo.com Tel: +86 183 5911 0265

Simple, intuitive solutions that empower everyone to focus on what they do best.

by "Uyetake Wahlund" <uyetakewahlund@gmail.com> - 11:41 - 12 Apr 2025 -

Quotation and Specifications for Milk Thistle Extract from Xi’an Demeter Biotech Co., Ltd

Dear info,

We are glad to learn, via Google, that you are interested in milk thistle extract. We, Xi’an Demeter Biotech Co., Ltd, have specialized since 2008 in supplying animal and plant active ingredients for traditional Chinese medicine, nutrition, cosmetics and additives. Milk thistle extract is our key product.

As for Milk thistle extract,

(1) Price sheet

| Item | Description | Qty. | Unit | Unit Price | FOB China |
|---|---|---|---|---|---|
| 1 | Milk Thistle Extract Powder 80% Silymarin | 25 | kg | US$33.50 | US$837.50 |
| 2 | Milk Thistle Extract Granular 80% Silymarin | 25 | kg | US$35.85 | US$896.25 |

Here are popular specs for application.

| Application | Effect | Part of Specs |
|---|---|---|
| Medicine | Protect the liver | Silymarin 80% UV, Silybin 80% -99% |
| Health products | Protect the liver | Silymarin 80% UV, Silybin 80% -100% |
| Food | Protect the liver | Silymarin 80% UV, Silybin 80% -101% |
| Cosmetics | anti-radiation, anti-aging, anti-wrinkle | Silymarin 80% UV |
| Sports health drink | Protect the liver | Silymarin 40% UV |
| Feed grade | Protect the liver | Silymarin 80% UV / 30% HPLC, 75% UV |

If you need a different specification or quantity, please let me know. If you tell us your intended use, we can recommend a more suitable specification.

Your prompt reply will be very much appreciated.

Best regards

by "Emma Swift" <atcrussur@gmail.com> - 09:44 - 12 Apr 2025 -

Nanocrystalline cores for EMC common mode choke

Dear Sir/Madam,

We are a professional manufacturer of high-performance amorphous and nanocrystalline cores. A nanocrystalline core's inductance is about 5 times higher than that of a ferrite core, so high inductance is easy to achieve, which makes these cores well suited to common mode chokes.

With over 10 years of experience in manufacturing nanocrystalline cores, we offer cores with performance similar to those from VAC, Hitachi, and Magnetec.

In case you have any interest or inquiry, please feel free to let us know.

Best Regards,

KM Core Team

King Magnetics

www.kingmagnetics.com

core@kingmagnetics.com

by "km12" <km12@kingmagcores.com> - 04:02 - 12 Apr 2025 -

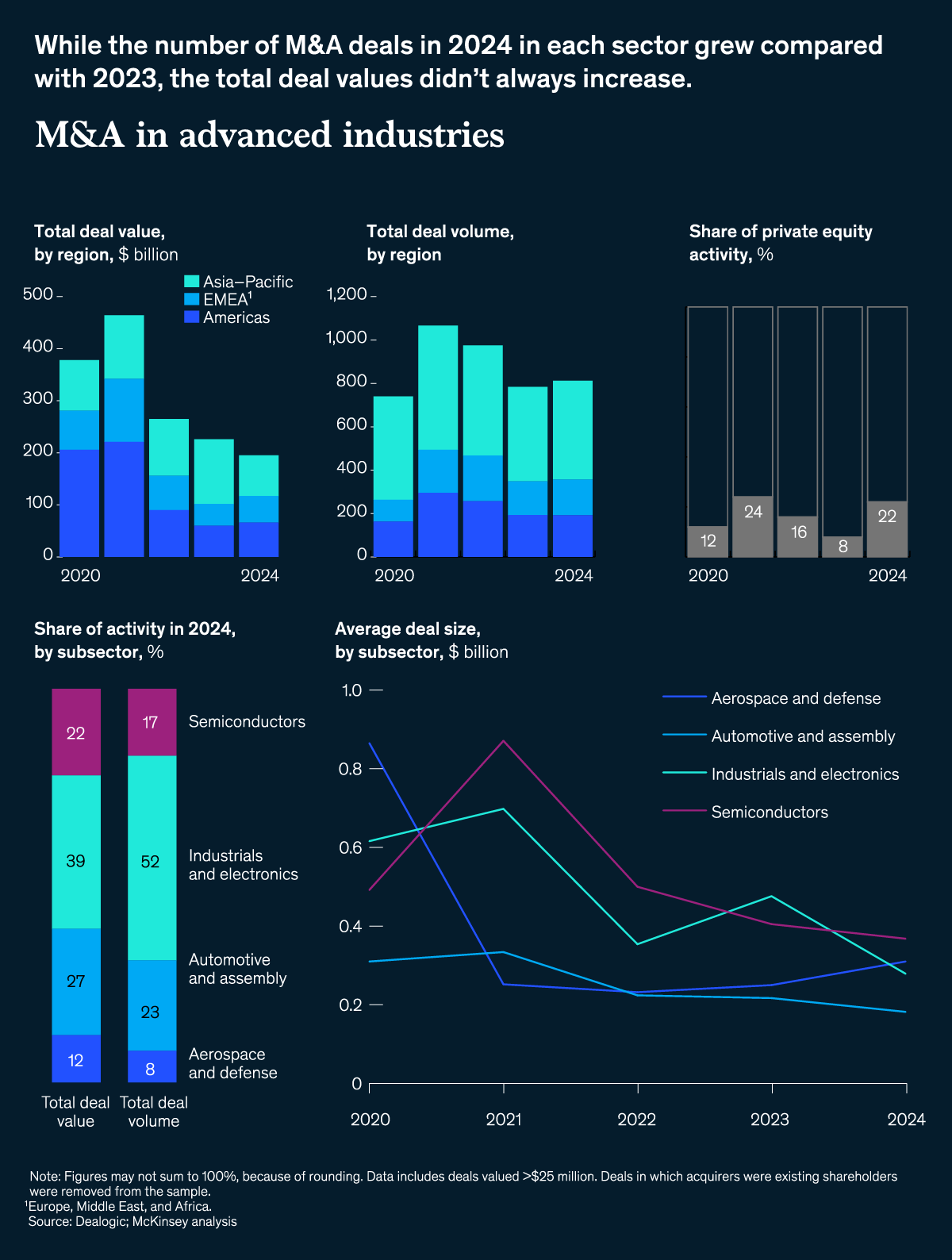

The week in charts

Global M&A deals, senior housing, and more

Share these insights

Did you enjoy this newsletter? Forward it to colleagues and friends so they can subscribe too. Was this issue forwarded to you? Sign up for it and sample our 40+ other free email subscriptions here.

This email contains information about McKinsey's research, insights, services, or events. By opening our emails or clicking on links, you agree to our use of cookies and web tracking technology. For more information on how we use and protect your information, please review our privacy policy.

You received this email because you subscribed to The Week in Charts newsletter.

Copyright © 2025 | McKinsey & Company, 3 World Trade Center, 175 Greenwich Street, New York, NY 10007

by "McKinsey Week in Charts" <publishing@email.mckinsey.com> - 03:14 - 12 Apr 2025 -

EP158: How to Learn API Development

Learning how to develop APIs is an important skill for modern-day developers.

Introducing Augment Agent: an AI Agent for pro software engineers (Sponsored)

Augment Code is the first AI coding Assistant built for professional software engineers, large codebases, and production-grade projects.

Now they're excited to unveil Augment Agent, a powerful new mode for Augment Code that can help you complete software development tasks end-to-end. Agent can:

- Add new features spanning multiple files

- Queue up tests in the terminal

- Open Linear tickets, start a pull request

- Start a new branch in GitHub from recent commits

...and all kinds of other things. Skip the toy AI coding projects and build something real -- Augment Agent is standing by to help.

This week’s system design refresher:

How to Learn API Development?

Must-Know Network Protocol Dependencies

Top AI Coding Tools for Developers You Can Use in 2025

18 Key Design Patterns Every Developer Should Know

Hiring 🚀: ByteByteGo’s First Sales & Partnerships Hire

SPONSOR US

How to Learn API Development?

Learning how to develop APIs is an important skill for modern-day developers. Here’s a mind map of everything you need to learn about API development:

API Fundamentals

What is an API, types of API (REST, SOAP, GraphQL, gRPC, etc.), and API vs SDK.

API Request/Response

HTTP Methods, Response Codes, and Headers.

Authentication and Security

Authentication mechanisms (JWT, OAuth 2, API Keys, Basic Auth) and security strategies.

API Design and Development

RESTful API principles such as statelessness, resource-based URLs, versioning, and pagination. Also, API documentation tools like OpenAPI, Postman, and Swagger.

API Testing

Tools for testing APIs such as Postman, cURL, SoapUI, and so on.

API Deployment and Integration

Consuming APIs in different languages like JS, Python, and Java. Also, working with 3rd party APIs like the Google Maps API and the Stripe API. Learn about API gateways like AWS API Gateway, Kong, and Apigee.
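Several of the topics above (HTTP methods, response codes, API keys, versioned resource URLs, pagination) can be seen together in one small request handler. The following is only a minimal sketch, not a production design: the `handle` function, the `/v1/books` resource, the `X-API-Key` header name, and the `demo-key` value are all hypothetical names chosen for illustration, and no real web framework is involved.

```python
import json
from urllib.parse import urlparse, parse_qs

# Hypothetical in-memory data and API key, for illustration only.
BOOKS = [{"id": i, "title": f"Book {i}"} for i in range(1, 8)]
API_KEYS = {"demo-key"}


def handle(method, url, headers=None):
    """Return (status_code, body_dict) for a tiny, versioned, read-only books API."""
    headers = headers or {}

    # Authentication: a simple API key check (one of the mechanisms listed above).
    if headers.get("X-API-Key") not in API_KEYS:
        return 401, {"error": "missing or invalid API key"}

    parsed = urlparse(url)
    # Resource-based, versioned URL: only /v1/books exists in this sketch.
    if parsed.path != "/v1/books":
        return 404, {"error": "resource not found"}
    if method != "GET":
        return 405, {"error": "method not allowed"}

    # Pagination via query parameters; each request carries everything the
    # handler needs, so no per-client state is kept (statelessness).
    query = parse_qs(parsed.query)
    try:
        page = int(query.get("page", ["1"])[0])
        per_page = int(query.get("per_page", ["3"])[0])
    except ValueError:
        return 400, {"error": "page and per_page must be integers"}
    if page < 1 or per_page < 1:
        return 400, {"error": "page and per_page must be positive"}

    start = (page - 1) * per_page
    items = BOOKS[start:start + per_page]
    return 200, {"page": page, "per_page": per_page, "total": len(BOOKS), "items": items}


if __name__ == "__main__":
    status, body = handle("GET", "/v1/books?page=2&per_page=3", {"X-API-Key": "demo-key"})
    print(status, json.dumps(body))
```

Keeping the handler a pure function of its inputs makes it easy to exercise every status code in a test without starting a server.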

Over to you: What else will you add to the list for learning API development?

Must-Know Network Protocol Dependencies

Understanding network protocol dependencies is essential for cybersecurity and networking. Here’s a quick overview:

IPv4 and IPv6 are the foundation of all networking. ICMP and ICMPv6 handle diagnostics, while IPsec ensures secure communication.

TCP and UDP support various protocols. SCTP and DCCP serve specific cases.

Some TCP-based protocols are HTTP, SSH, BGP, RDP, IMAP, SMTP, POP, etc.

UDP-based protocols are DNS, DHCP, SIP, RTP, NTP, etc.

SSL/TLS encrypts HTTPS, IMAPS, and SMTPS.

LDAP provides directory services over TCP; LDAPS is LDAP secured with SSL/TLS.

QUIC is a UDP-based replacement for TCP+TLS for faster, encrypted connections.

MCP or Model Context Protocol is an emerging standard for communicating with LLMs.
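The dependencies listed above can be read as a "runs on top of" map. The sketch below encodes that map and walks it to print a protocol's stack. It is a deliberate simplification that follows the list as written: the `RUNS_ON` table and `protocol_stack` function are hypothetical names, "IP" stands for either IPv4 or IPv6, and protocols that can use more than one transport (DNS can also run over TCP, for example) are given only the transport named in the list.

```python
# Simplified "runs on top of" map, following the list above.
# Assumption: each protocol is given a single lower layer for illustration.
RUNS_ON = {
    "HTTP": "TCP", "SSH": "TCP", "BGP": "TCP", "RDP": "TCP",
    "IMAP": "TCP", "SMTP": "TCP", "POP": "TCP", "LDAP": "TCP",
    "DNS": "UDP", "DHCP": "UDP", "SIP": "UDP", "RTP": "UDP", "NTP": "UDP",
    "TLS": "TCP",
    "HTTPS": "TLS", "IMAPS": "TLS", "SMTPS": "TLS", "LDAPS": "TLS",
    "QUIC": "UDP",
    "TCP": "IP", "UDP": "IP", "SCTP": "IP", "DCCP": "IP", "ICMP": "IP",
}


def protocol_stack(protocol):
    """Return the chain of layers a protocol depends on, top to bottom."""
    stack = [protocol]
    # Follow the map downward until we reach a layer with no entry (IP).
    while stack[-1] in RUNS_ON:
        stack.append(RUNS_ON[stack[-1]])
    return stack


if __name__ == "__main__":
    for name in ("HTTPS", "DNS", "QUIC"):
        print(" -> ".join(protocol_stack(name)))
```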

Over to you: Which other network protocol will you add to the list?

Top AI Coding Tools for Developers You Can Use in 2025

AI Code Assistants

GitHub Copilot: Code completion and automatic programming tool.

ChatGPT: Helps write and debug code with the latest models.

Claude: Recent and specialized coding knowledge to generate accurate and up-to-date code.

Amazon CodeWhisperer: AI Assistant in the IDE

AI-Powered IDEs

Cursor: AI-powered IDE for Windows, macOS, and Linux.

Windsurf: AI-powered IDE that tackles complex tasks independently.

Replit: Create fully working apps to go live fast.

Team Productivity

Cody: The enterprise AI code assistant for writing, fixing, and maintaining code.

Pieces: AI-enabled productivity tool to help developers manage code snippets.

Visual Copilot: Convert Figma designs into React, Vue, Svelte, Angular, or HTML code.

Code Quality and Completion

Snyk: Real-time vulnerability scanning of human and AI-generated code.

Tabnine: A code completion tool to accelerate software development.

Over to you: Which other AI Coding Tool will you add to the list? What’s your favorite?

18 Key Design Patterns Every Developer Should Know

Patterns are reusable solutions to common design problems, resulting in a smoother, more efficient development process. They serve as blueprints for building better software structures. These are some of the most popular patterns:

Abstract Factory: Family Creator - Makes groups of related items.

Builder: Lego Master - Builds objects step by step, keeping creation and appearance separate.

Prototype: Clone Maker - Creates copies of fully prepared examples.

Singleton: One and Only - A special class with just one instance.

Adapter: Universal Plug - Connects things with different interfaces.

Bridge: Function Connector - Links how an object works to what it does.

Composite: Tree Builder - Forms tree-like structures of simple and complex parts.

Decorator: Customizer - Adds features to objects without changing their core.

Facade: One-Stop-Shop - Represents a whole system with a single, simplified interface.

Flyweight: Space Saver - Shares small, reusable items efficiently.

Proxy: Stand-In Actor - Represents another object, controlling access or actions.

Chain of Responsibility: Request Relay - Passes a request through a chain of objects until handled.

Command: Task Wrapper - Turns a request into an object, ready for action.

Iterator: Collection Explorer - Accesses elements in a collection one by one.

Mediator: Communication Hub - Simplifies interactions between different classes.

Memento: Time Capsule - Captures and restores an object's state.

Observer: News Broadcaster - Notifies classes about changes in other objects.

Visitor: Skillful Guest - Adds new operations to a class without altering it.
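To make two of the one-line descriptions above concrete, here is a minimal sketch of Observer and Adapter in Python. All class and method names (`Subject`, `LegacyPrinter`, `PrinterAdapter`, `write`, `print_text`) are hypothetical and chosen only for illustration; real implementations usually add unsubscribe logic, error handling, and typed interfaces.

```python
class Subject:
    """Observer: keeps a list of callbacks and notifies each one of an event."""

    def __init__(self):
        self._observers = []

    def subscribe(self, callback):
        self._observers.append(callback)

    def notify(self, event):
        # Broadcast the event to every registered observer, in order.
        for callback in self._observers:
            callback(event)


class LegacyPrinter:
    """A hypothetical existing class whose interface we cannot change."""

    def print_text(self, text):
        return f"LEGACY: {text}"


class PrinterAdapter:
    """Adapter: exposes LegacyPrinter through the write() interface callers expect."""

    def __init__(self, legacy):
        self._legacy = legacy

    def write(self, message):
        # Translate the expected call into the legacy one.
        return self._legacy.print_text(message)


if __name__ == "__main__":
    received = []
    subject = Subject()
    subject.subscribe(received.append)
    subject.notify("price changed")
    print(received)

    printer = PrinterAdapter(LegacyPrinter())
    print(printer.write("hello"))
```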

Hiring 🚀: ByteByteGo’s First Sales & Partnerships Hire

Location: Remote

Compensation: Based on experience and hours committed

Experience Level: 1+ years in digital media sales or partnerships

About ByteByteGo

ByteByteGo is the largest technology newsletter on Substack, breaking down complex software and system design concepts into clear, visual, and engaging formats. Our weekly newsletter reaches over a million developers, engineers, and tech leaders across the world.

About the Role

We’re looking for a sales and partnerships lead who will help grow our newsletter sponsorship business. This role will focus on securing new advertisers, nurturing existing relationships, and optimizing revenue opportunities across our newsletter and other media formats.

What You’ll Do

- Identify, pitch, and close sponsorship deals with companies targeting a technical audience.

- Manage inbound interest and proactively source new leads

- Handle sales calls and follow-ups with prospective sponsors

- Grow existing accounts through renewals, upsells, and relationship management

- Track performance and help optimize pricing, packages, and positioning

What We’re Looking For

- 1+ years of experience in sales, partnerships, or media sponsorships—ideally in tech or newsletter media

- Excellent written and verbal communication skills

- Experience with outbound prospecting, CRM tools, and closing deals

- Strong understanding of the tech ecosystem—developer tools, SaaS, AI, startups

- Bonus: you’re already a fan of ByteByteGo and get our voice and audience

- You’re self-motivated, organized, and ready to grow with a small team

How to Apply

Send your resume and a short note on why you’re excited about this role to jobs@bytebytego.com

SPONSOR US

Get your product in front of more than 1,000,000 tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters - hundreds of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases.

Space Fills Up Fast - Reserve Today

Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing sponsorship@bytebytego.com.

© 2025 ByteByteGo

548 Market Street PMB 72296, San Francisco, CA 94104

by "ByteByteGo" <bytebytego@substack.com> - 11:37 - 12 Apr 2025 -

Last chance to join the “new & improved” OFA!

We start on Monday, and you can’t be late!

Russell kicks off the new One Funnel Away Challenge MONDAY morning!! If you haven’t joined yet… This is your last chance.

I’d recommend you sign up now so you have a little time to orient yourself and prepare to hit the ground running on Day 1.

You’ll be drinking from a fire hose… So, get registered and prepped now!

Expert Or Ecom - Which OFA Track Is Right For You? (Starts Next Monday) >>

They’ve got 30 days of step-by-step coaching lined up…

And whether you pick TEAM RUSSELL… or TEAM TREY…

You can’t go wrong!! This new and improved OFA is going to change your life. I can feel it!

Register now and don’t wait!!!

We’re starting first thing on Monday morning!

See you in OFA!

Todd Dickerson

© Etison LLC

By reading this, you agree to all of the following: You understand this to be an expression of opinions and not professional advice. You are solely responsible for the use of any content and hold Etison LLC and all members and affiliates harmless in any event or claim.

If you purchase anything through a link in this email, you should assume that we have an affiliate relationship with the company providing the product or service that you purchase, and that we will be paid in some way. We recommend that you do your own independent research before purchasing anything.

Copyright © 2018+ Etison LLC. All Rights Reserved.

To make sure you keep getting these emails, please add us to your address book or whitelist us. If you don't want to receive any other emails, click on the unsubscribe link below.

Etison LLC

3443 W Bavaria St

Eagle, ID 83616

United States

by "Todd Dickerson" <noreply@clickfunnelsnotifications.com> - 11:32 - 12 Apr 2025 -

A growth path for mobility companies

Plus, opportunities for senior housing providers

The mobility industry has entered a new era of innovation. Start-ups and traditional original equipment manufacturers (OEMs) are investing in digitalization and in the ACES trends: autonomous driving, connectivity, electrification, and shared mobility. In our featured article, McKinsey's Kersten Heineke, Philipp Kampshoff, and Timo Möller examine six measures that can put mobility start-ups on a solid growth trajectory. Other highlights include:

- How the European automotive industry can regain its competitive edge

- Long-term opportunities for senior housing providers

- Key themes that will shape the sporting goods industry in 2025

- The future of operations in 2025: how organizations can stay agile and thrive amid uncertainty in 2025 and beyond

Our editors' picks

THIS MONTH'S HIGHLIGHTS

European automotive industry: What it needs to regain competitiveness

The European automotive industry has been a cornerstone of the region's economy, but disruptions have challenged its leadership. How can it regain its competitive edge?

Align your stakeholders

How private investment can improve senior housing options

The world needs better residential solutions for older adults. Three innovations may be the key to truly golden years.

3 long-term opportunities

Sporting goods 2025 - The new balancing act: Turning uncertainty into opportunity

Although the sporting goods industry has persevered over the past year, forecasts of slowing growth are forcing executives to focus equally on revenue growth and productivity improvements.

Explore the report to learn the key themes

Ingredients for the future: Bringing the biotech revolution to food

Fermented ingredients could transform the food landscape faster than previously thought. However, new business models are needed to capture the $100 billion to $150 billion opportunity that novel fermented proteins represent.

Accelerate the change

How banks can boost productivity through simplification at scale

Productivity in the banking sector has stalled. Here are the tools and techniques for achieving lasting change.

Drive the change

Boosting productivity: Operations insights for 2025

Despite current geopolitical uncertainty, the future of operations in 2025 is promising. Companies that lean on technology, cross-disciplinary collaboration, and curiosity can boost productivity.

Stay agile

We hope you enjoy the Spanish-language articles we selected this month, and we invite you to explore the following English-language articles as well.

OUT NOW: The Broken Rung

Leaders and companies must do more to address structural gender inequalities in the workplace—but you don’t have to wait. The Broken Rung, a new book by McKinsey senior partners, Kweilin Ellingrud, Lareina Yee, and María del Mar Martínez, is your guide, right now, for moving up the corporate ladder and reaching your full potential at work.

McKinsey Explainers

Find direct answers to complex questions, backed by McKinsey’s expert insights.

Learn more

McKinsey Themes

Browse our essential reading on the topics that matter.

Get up to speed

McKinsey on Lives & Legacies

Read monthly obituaries from business and society that highlight the lasting legacies of executives and leaders from around the globe.

Explore the latest obituaries

Only McKinsey Perspectives

Discover actionable insights on the day’s news as only McKinsey can bring.

Get the latest

McKinsey Classics

Many new CEOs reshuffle their top teams, but surprisingly few make them more diverse. Can we do better? Read our 2018 classic “Closing the gender gap: A missed opportunity for new CEOs” to learn more.

Rewind

The Weekend Read

— Edited by Joyce Yoo, editor, New York

SHARE THESE IDEAS

Did you enjoy this newsletter? Forward it to colleagues and friends so they can subscribe too. Was this article forwarded to you? Sign up and sample our 40+ free email subscriptions here.

This email contains information about McKinsey's research, insights, services, or events. By opening our emails or clicking on links, you agree to our use of cookies and web tracking technology. For more information on how we use and protect your information, please review our privacy policy.

You received this email because you are a registered subscriber to our Destacados newsletter.

Copyright © 2025 | McKinsey & Company, 3 World Trade Center, 175 Greenwich Street, New York, NY 10007

by "Destacados de McKinsey" <publishing@email.mckinsey.com> - 08:43 - 12 Apr 2025 -

2024 New Collection Aluminum Window and Door -China Oneplus

Hello Sir or Madam,

Good day.

This is Coco from Foshan Oneplus Windows and Doors Co., Ltd, a leading aluminum manufacturer in China. We provide premium aluminum products such as windows and doors, sunrooms, handrails, and window walls.

You can contact me by WhatsApp at 86 13430211600 or by email at oneplusaluminum@outlook.com.

1. Are you interested in aluminum windows and doors? If so, you can send us the dimensions or drawings so that we can give you a competitive price.

2. Our products have NFRC and other certificates to meet energy efficiency requirements.

3. Could I add your WhatsApp number? It's easier to communicate that way.

Or you can visit our website for more detailed information:

Website: www.onepluswindows.com

Showroom: https://oneplusaluminum.en.made-in-china.com/

It would be much appreciated if you could spend a few minutes looking at this email, the attachments, and our website.

Oneplus has more than ten years of experience exporting casement windows, doors, and sunrooms all over the world; America, Europe, and Australia are among our main markets.

We focus on professional, high-end customized services for the door and window industry.

Our products for the USA and Australia markets carry certifications such as CE and NFRC and meet the highest national inspection standards.

Oneplus owns the brand "KINTE ALUMINUM D&W" (Smart Windows & Doors). You can visit our website link below for more detailed information, and we hope to build a business relationship with you.

Delivery Lead Time: 30-35 days

Payment Term: EXW/FOB

Looking forward to your kind reply. Thank you!

-----------------------------------------

Best Regards!

Coco Chan

Sales Manager

Mob & WhatsApp: +86 13430211600

Website: https://oneplusaluminum.en.made-in-china.com/

Foshan Oneplus Windows and Doors Co., Ltd.

Tel: +86-757 8562 8529 Fax: +86-757 2362 1068

No. 3, Xingye Road, Ganglian Industrial Zone, Lishui Town, Nanhai District, Foshan, Guangdong, China (Post Code 528000)

by "Yaque Lopezaguilar" <lopezaguilaryaque@gmail.com> - 04:02 - 12 Apr 2025