Archives


We have about 15 years of experience in producing soft starters and technology

Hi Dear info,

Please see the attached catalog and manual for our SCKR1-7000 series soft starter with built-in bypass contactor.

1. The quality, appearance, and functions are the same as Schneider and ABB, but the price is much lower. If we cooperate, you can earn more profit.

2. Our soft starter can replace the Aucom soft starter (model: EMX3).

3. We can also print your logo, with no Chinese text on the soft starter or carton.

4. We have manufactured soft starters for about 15 years, and our experienced technical staff and engineers can solve your problems effectively.

5. Our soft starters can be customized in your country's language, so your customers do not need to translate anything.

6. Our soft starters support PROFIBUS communication and can connect to Siemens PLCs.

If you are not interested in this series, we also have more cost-effective online and bypass soft starters.

Damon

Whatsapp/tel:+8615270931770

Email:chuanken123@163.com

Website:www.shckele.com

by "vfd06" <vfd06@softstartervfd.com> - 05:56 - 4 May 2025 -

Comprehensive Support for Your Display Needs

Dear info,

I hope this email finds you well. I'm Johnson, the Sales Director at Shenzhen Yanxun Display Technology CO., LTD. We are dedicated to not just providing high-quality LCD monitors and AIO computers, but also ensuring that our clients receive the best after-sales support.

We plan to establish overseas warranty centers to further enhance our service delivery. Your satisfaction is our top priority, and we believe that strong after-sales service sets us apart.

I would love to discuss how our solutions can align with your business objectives. Can we schedule a time to chat?

Best wishes,

Johnson

Sales Director

Shenzhen Yanxun Display Technology CO., LTD.

Building C, Xingyi Digital Fashion Park, No. 3 Of Langjing Road, Dalang Block, Longhua District, Shenzhen, China. 518109.

Johnson@yanxundisplay.com

by "Elazar Pajon" <elazarpajon102@gmail.com> - 05:15 - 4 May 2025 -

Bring Your Space to Life This Holiday Season

Hello info,

My name is RonCM from HOYECHI – we help businesses and public spaces transform their environments with festive lighting and decorations.

If you're planning holiday-themed displays this year, we’d love to support you with a free custom lighting design, tailored to your space and theme. From concept to execution, we handle everything, and for larger projects, our team can travel to assist with on-site installation anywhere globally.

There’s no cost to start—we’re happy to offer ideas or a proposal for your review.

If interested, simply reply to this message, or let me know a convenient time and number to call you. You can also find us by searching "HOYECHI" online to explore our work.

Warm regards,

RonCM

[Your Title], HOYECHI

by "RonCM" <RonCM@hoyechin.com> - 01:27 - 4 May 2025 -

Providing you with high-quality glove products - helping you to be safe and comfortable

Dear info,

Hello! Our company has accumulated more than 20 years of experience in the field of glove manufacturing, focusing on the production of various high-quality glove products, including mechanical gloves, TPR anti-cut gloves, nitrile gloves, etc. All products have passed CE certification to ensure compliance with international safety standards.

Our gloves not only offer excellent protection but also provide a comfortable wearing experience. They are widely used in industrial work, construction, and medical care. We always adhere to high-quality production and are committed to providing customers with the safest and most durable glove products.

If you are interested in our products or would like to learn more about our cooperation opportunities, please feel free to contact us. We look forward to exploring the market with you.

Thank you for your valuable time; we look forward to your reply!

Regards

Lynn

Phone: +8613714693585

Email: yulinling1120@gmail.com

WhatsApp: +8613714693585

Website: www.herogloves.com

by "sales14" <sales14@herogloves.com> - 08:12 - 3 May 2025 -

Fast Delivery Motorcycle GPS Displays | Ready to Ship

Dear sir

Need motorcycle GPS displays with quick turnaround? HI-SOUND offers:

- GPS Navigators (5-inch HD, waterproof)

- Digital Dashboards (real-time data tracking)

- Multimedia Displays (Bluetooth/USB connectivity)

Why choose us?

- Bulk order discounts & no hidden costs

- 20-day production time for urgent demands

- 3-year warranty & global logistics support

Request a free quote or check stock availability at jmhisound.goldsupplier.com. Ready to ship today!

Regards,

Connie

by "Danil Madrigar" <danilmadrigar221@gmail.com> - 08:01 - 3 May 2025 -

The week in charts

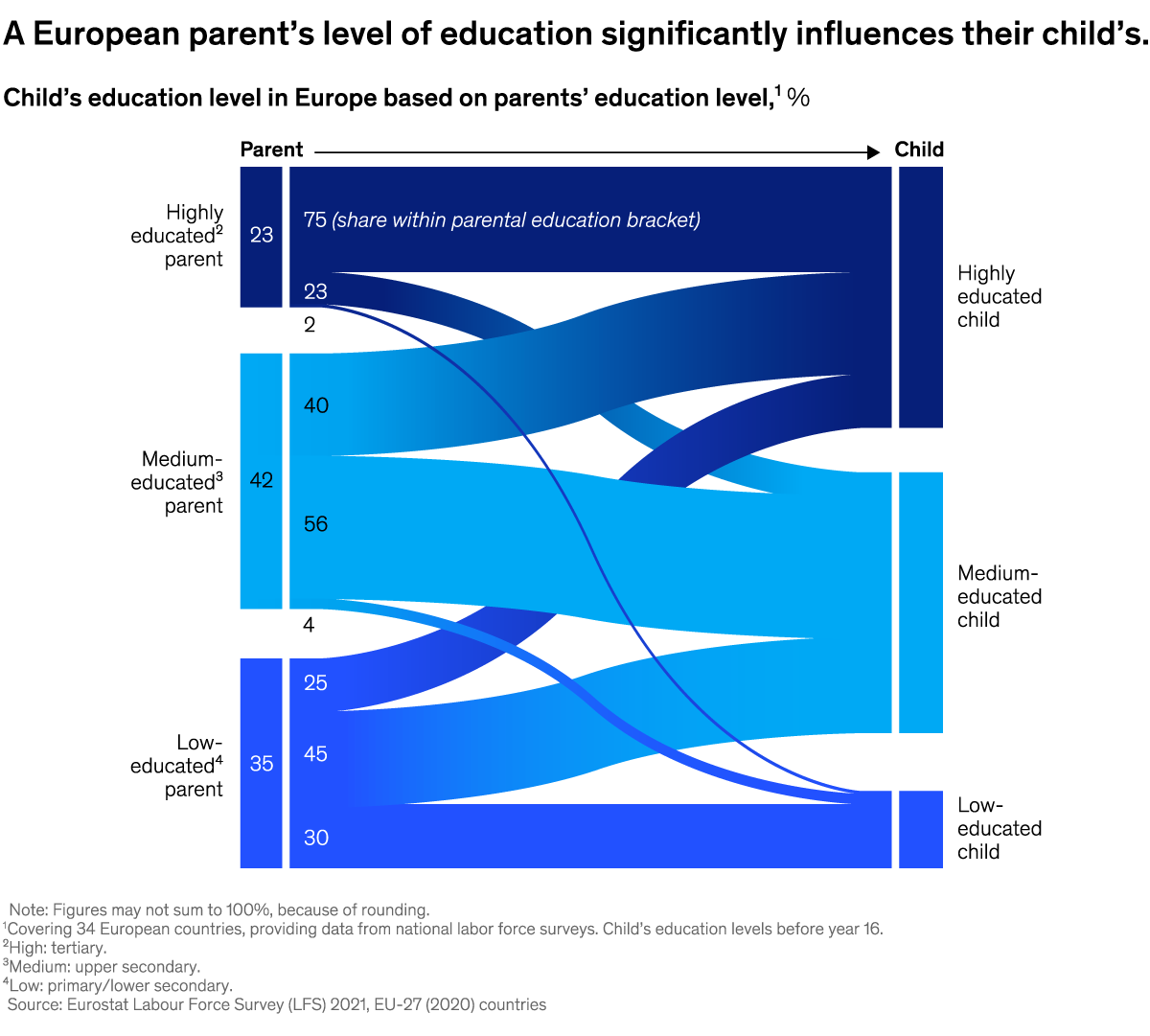


Educational attainment, ROI from gen AI, and more

Did you enjoy this newsletter? Forward it to colleagues and friends so they can subscribe too. Was this issue forwarded to you? Sign up for it and sample our 40+ other free email subscriptions here.

This email contains information about McKinsey's research, insights, services, or events. By opening our emails or clicking on links, you agree to our use of cookies and web tracking technology. For more information on how we use and protect your information, please review our privacy policy.

You received this email because you subscribed to The Week in Charts newsletter.

Copyright © 2025 | McKinsey & Company, 3 World Trade Center, 175 Greenwich Street, New York, NY 10007

by "McKinsey Week in Charts" <publishing@email.mckinsey.com> - 03:59 - 3 May 2025 -

supply metallic silicon

Dear Sir or Madam:

Tianjin HeshengChangyi International Trade Co., Ltd. was established in 2014 and is located in Binhai New Area, Tianjin China. It is a comprehensive enterprise specializing in the production and trade of industrial basic materials such as industrial silicon (metallic silicon), silicon carbide, and ferrosilicon.

Through more than ten years of hard work, our company has established stable cooperative relationships with buyers in many countries, such as Japan, South Korea, Malaysia, Bahrain, the United Arab Emirates, and Germany. We can provide high-quality products at competitive prices.

If you are interested in our products, please feel free to contact us; we will be happy to serve you.

We look forward to establishing closer cooperation with you and working together toward a better future.

Below are pictures of our products:

Metal silicon

Silicon carbide

Ferro silicon

For more product information, please visit our website: https://www.hscy-trading.com

Best regards

summer

Tianjin HeshengChangyi International Trade Co., Ltd

Tel:+86-18522821872

wechat/whatsapp

Email:summer@hscy-trading.com

by "sales05" <sales05@hscy-industry.com> - 03:25 - 3 May 2025 -

Topwill Flange brings wonderful flange adventures!

Hello info!

How's it going? We're here to add some exciting fun to your day! At Topwill Flange, we are dedicated to manufacturing high quality steel flanges, pressed flanges and forged parts. Whether you prefer an EN standard flange, an ANSI standard flange, a JIS standard flange or a completely unique flange, we have your back.

Our material grades range from austenitic ferritic corrosion-resistant steels to nickel-based alloys. In addition, our factory has obtained ISO 9001 and PED certificates from TUV, so you can trust that you are getting the genuine product.

We're committed to making your trip to Flange a breeze. When you're ready to embark on this amazing adventure, drop us a line!

Best wishes,

Adam Jiang

General Manager

Topwill Group

Head office: 1103F, Kaisa Plaza, No. 1091 Renmin East Road, Jiangyin City.

Tel: +86 510 86830885 Fax: +86 510 86805885

Website: https://www.topwillgroup.com/

Email: info@topwillgroup.com

WhatsApp / Mobile: +86 13806163717

Wechat: +86 13806163717

Topwill Group Companies:

Topwill Group Limited- HongKong

Jiangyin Topwill Flange Fitting Co Ltd- China

Jiangyin Topwill Hi-Precision Co Ltd - China

EverRich Flange SDN.BHD - Malaysia

by "Hduwf Hduef" <hduwfhduef@gmail.com> - 02:26 - 3 May 2025 -

EP161: A Cheatsheet on REST API Design Best Practices


WorkOS + MCP: Authorization for AI Agents (Sponsored)

Wide-open access to every tool on your MCP server is a major security risk. Unchecked access can quickly lead to serious incidents.

Teams need a fast, easy way to lock down access with roles and permissions.

WorkOS AuthKit makes it simple with RBAC — assign roles, enforce permissions, and control exactly who can access critical tools.

Don’t wait for a breach to happen. Secure your server today.

This week’s system design refresher:

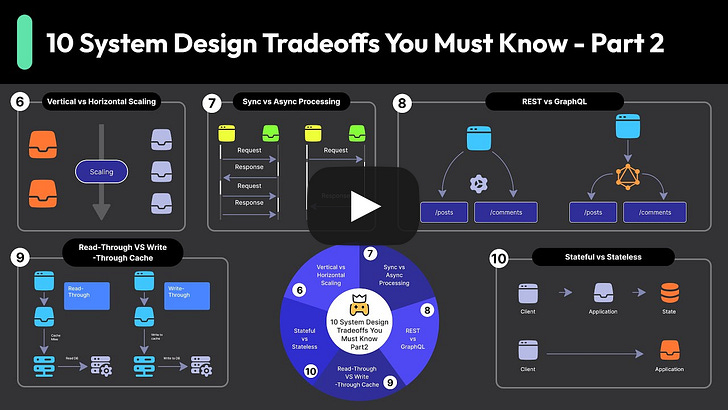

System Design Was HARD - Until You Knew the Trade-Offs, Part 2 (YouTube video)

A Cheatsheet on REST API Design Best Practices

Top 30 AWS Services That Are Commonly Used

The Large-Language Model Glossary

We're hiring at ByteByteGo

SPONSOR US

System Design Was HARD - Until You Knew the Trade-Offs, Part 2

A Cheatsheet on REST API Design Best Practices

Well-designed APIs behave consistently, fail predictably, and grow without friction. Some best practices to keep in mind are as follows:

Resource-oriented paths and proper use of HTTP verbs help APIs align with standard tools.

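Use a consistent API versioning approach, for example a version segment in the URL path (/v1/) or a version header, so existing clients keep working as the API evolves.

Use standard HTTP status codes and a consistent error format when generating API responses.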
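The three practices above (resource-oriented paths, versioning, and standard status codes) can be seen together in a minimal sketch. It assumes a hypothetical in-memory `BOOKS` collection and a hand-rolled `handle(method, path, body)` dispatcher rather than any real web framework; only `http.HTTPStatus` comes from the Python standard library.

```python
from http import HTTPStatus
from typing import Dict, Optional, Tuple

# Hypothetical in-memory "books" collection standing in for a real data store.
BOOKS: Dict[str, dict] = {"1": {"id": "1", "title": "Dune"}}


def error(status: HTTPStatus, message: str) -> Tuple[int, dict]:
    """Build one consistent error body paired with the matching standard status code."""
    return status.value, {"error": {"code": status.value, "reason": status.phrase, "message": message}}


def handle(method: str, path: str, body: Optional[dict] = None) -> Tuple[int, dict]:
    """Dispatch e.g. ("GET", "/v1/books/1") and return (status code, JSON-like body)."""
    parts = path.strip("/").split("/")
    # Version in the first path segment, a plural noun for the resource; the HTTP
    # method (not the URL) says what to do with the resource.
    if len(parts) not in (2, 3) or parts[0] != "v1" or parts[1] != "books":
        return error(HTTPStatus.NOT_FOUND, f"no resource at {path}")
    book_id = parts[2] if len(parts) == 3 else None

    if method == "GET" and book_id is None:
        return HTTPStatus.OK.value, {"items": list(BOOKS.values())}
    if method == "GET":
        if book_id not in BOOKS:
            return error(HTTPStatus.NOT_FOUND, f"book {book_id} not found")
        return HTTPStatus.OK.value, BOOKS[book_id]
    if method == "POST" and book_id is None:
        if not body or "title" not in body:
            return error(HTTPStatus.BAD_REQUEST, "field 'title' is required")
        new_id = str(max((int(k) for k in BOOKS), default=0) + 1)
        BOOKS[new_id] = {"id": new_id, "title": body["title"]}
        return HTTPStatus.CREATED.value, BOOKS[new_id]
    if method == "DELETE" and book_id is not None:
        if BOOKS.pop(book_id, None) is None:
            return error(HTTPStatus.NOT_FOUND, f"book {book_id} not found")
        return HTTPStatus.NO_CONTENT.value, {}
    return error(HTTPStatus.METHOD_NOT_ALLOWED, f"{method} is not supported on {path}")
```

For example, `handle("GET", "/v1/books/1")` returns status 200 with the book, while an unknown id returns 404 with the same error shape as every other failure, so clients can handle errors uniformly.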

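APIs should be idempotent where possible: repeating the same request should produce the same result, which makes retries safe. GET, PUT, and DELETE are idempotent by definition, but POST is not, so it needs extra care.

Idempotency keys provide that care for POST and other operations with side effects: the client sends a unique key with each logical operation, and the server uses it to deduplicate retries.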
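A minimal server-side sketch of idempotency keys follows. It assumes a hypothetical `PaymentService` that keeps keys in an in-memory dictionary; a production service would persist keys with an expiry, lock against concurrent requests carrying the same key, and reject a reused key sent with a different request body.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class PaymentService:
    """Hypothetical service that deduplicates POST-style operations by idempotency key."""

    # Maps idempotency key -> response returned the first time (in-memory only).
    responses: dict = field(default_factory=dict)
    # Every payment actually created, to show the side effect happens once per key.
    created: list = field(default_factory=list)

    def create_payment(self, idempotency_key: str, amount_cents: int) -> dict:
        # A retry with a key we've already seen replays the stored response
        # instead of creating a second payment.
        if idempotency_key in self.responses:
            return self.responses[idempotency_key]

        payment = {"id": str(uuid.uuid4()), "amount_cents": amount_cents}
        self.created.append(payment)
        response = {"status": 201, "payment": payment}
        self.responses[idempotency_key] = response
        return response


if __name__ == "__main__":
    service = PaymentService()
    key = str(uuid.uuid4())  # the client generates one key per logical operation
    first = service.create_payment(key, 500)
    retry = service.create_payment(key, 500)  # e.g. resent after a network timeout
    print(first == retry, len(service.created))  # True 1
```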

APIs should support pagination to prevent performance bottlenecks and payload bloat. Some common pagination strategies are offset-based, cursor-based, and keyset-based.
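The sketch below contrasts offset-based pagination with keyset pagination exposed through an opaque cursor, over a hypothetical in-memory list of rows sorted by a unique `id`. The cursor format (base64-encoded JSON holding the last `id`) is an assumption for illustration, not a standard.

```python
import base64
import json
from typing import List, Optional, Tuple

# Hypothetical data set: rows sorted by a unique, increasing id.
ROWS = [{"id": i, "name": f"item-{i}"} for i in range(1, 11)]


def offset_page(offset: int, limit: int) -> List[dict]:
    """Offset-based: skip `offset` rows, then take `limit` rows.
    A database still walks past the skipped rows, so deep pages get slower."""
    return ROWS[offset:offset + limit]


def encode_cursor(last_id: int) -> str:
    payload = json.dumps({"after_id": last_id}).encode("utf-8")
    return base64.urlsafe_b64encode(payload).decode("ascii")


def decode_cursor(cursor: str) -> int:
    return json.loads(base64.urlsafe_b64decode(cursor.encode("ascii")))["after_id"]


def cursor_page(cursor: Optional[str], limit: int) -> Tuple[List[dict], Optional[str]]:
    """Keyset-based: resume after the last id the client saw.
    Returns (rows, next_cursor); next_cursor is None on the last page."""
    after_id = None if cursor is None else decode_cursor(cursor)
    # Equivalent SQL: SELECT ... WHERE id > :after_id ORDER BY id LIMIT :limit
    remaining = [row for row in ROWS if after_id is None or row["id"] > after_id]
    page = remaining[:limit]
    next_cursor = encode_cursor(page[-1]["id"]) if len(remaining) > limit else None
    return page, next_cursor


if __name__ == "__main__":
    print([r["id"] for r in offset_page(offset=4, limit=3)])  # [5, 6, 7]
    cursor = None
    while True:
        rows, cursor = cursor_page(cursor, limit=4)
        print([r["id"] for r in rows])  # [1, 2, 3, 4] then [5, 6, 7, 8] then [9, 10]
        if cursor is None:
            break
```

Offset pages shift when rows are inserted or deleted between requests, while the keyset cursor always resumes after the last row the client saw; that stability, plus the ability to use an index on `id`, is the usual reason to prefer keyset or cursor pagination for large collections.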

API security is mandatory for well-designed APIs. Use proper authentication and authorization mechanisms such as API keys, JWTs, and OAuth 2.0. HTTPS is also a must-have for APIs running in production.
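As one small piece of this, API-key authentication can be sketched with the Python standard library alone. The in-memory `_KEY_HASHES` store and both helper functions are hypothetical; the sketch only checks who the caller is and does not cover authorization rules, JWT validation, OAuth 2.0 flows, or TLS.

```python
import hashlib
import hmac
import secrets

# Hypothetical key store: only SHA-256 hashes of issued keys are kept,
# so a leaked store does not reveal usable API keys.
_KEY_HASHES: dict = {}


def issue_api_key(client_id: str) -> str:
    """Generate a random key, store its hash, and return the plain key once."""
    key = secrets.token_urlsafe(32)
    _KEY_HASHES[client_id] = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return key


def authenticate(client_id: str, presented_key: str) -> bool:
    """Return True if the presented key matches the stored hash for this client."""
    expected = _KEY_HASHES.get(client_id)
    if expected is None:
        return False
    presented = hashlib.sha256(presented_key.encode("utf-8")).hexdigest()
    # compare_digest is designed to resist timing attacks, unlike ==.
    return hmac.compare_digest(expected, presented)
```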

Over to you: Which other best practices do you follow while designing APIs?

Pgvector vs. Qdrant: Open-Source Vector Database Comparison (Sponsored)

Looking for an open-source, high-performance vector database for large-scale workloads? We compare Qdrant vs. Postgres + pgvector + pgvectorscale.

Top 30 AWS Services That Are Commonly Used

Here they are, grouped by category, with a short note on what each one does.

Compute Services

1 - Amazon EC2: Virtual servers in the cloud

2 - AWS Lambda: Serverless functions for event-driven workloads

3 - Amazon ECS: Managed container orchestration

4 - Amazon EKS: Kubernetes cluster management service

5 - AWS Fargate: Serverless compute for containers

Storage Services

6 - Amazon S3: Scalable secure object storage

7 - Amazon EBS: Block storage for EC2 instances

8 - Amazon FSx: Fully managed file storage

9 - AWS Backup: Centralized backup automation

10 - Amazon Glacier: Archival cold storage for backups

Database Services

11 - Amazon RDS: Managed relational database service

12 - Amazon DynamoDB: NoSQL database with low latency

13 - Amazon Aurora: High-performance cloud-native database

14 - Amazon Redshift: Scalable data warehousing solution

15 - Amazon ElastiCache: In-memory caching with Redis/Memcached

16 - Amazon DocumentDB: NoSQL document database (MongoDB-compatible)

17 - Amazon Keyspaces: Managed Cassandra database service

Networking & Security

18 - Amazon VPC: Secure cloud networking

19 - Amazon CloudFront: Content Delivery Network

20 - Amazon Route 53: Scalable domain name system (DNS)

21 - AWS WAF: Protects web applications from attacks

22 - AWS Shield: DDoS protection for AWS workloads

AI & Machine Learning

23 - Amazon SageMaker: Build, train, and deploy ML models

24 - Amazon Rekognition: Image and video analysis with AI

25 - Amazon Textract: Extracts text from scanned documents

26 - Amazon Comprehend: AI-driven natural language processing

Monitoring & DevOps

27 - Amazon CloudWatch: AWS performance monitoring and alerts

28 - AWS X-Ray: Distributed tracing for applications

29 - AWS CodePipeline: CI/CD automation for deployments

30 - AWS CloudFormation: Infrastructure as Code (IaC)

Over to you: Which other AWS service will you add to the list?

The Large-Language Model Glossary

This glossary can be divided into high-level categories:

Models: The main types are Foundation, Instruction-Tuned, Multi-modal, Reasoning, and Small Language Models.

Training LLMs: Training begins with pretraining and continues with techniques such as RLHF, DPO, and synthetic data. Fine-tuning adds control through datasets, checkpoints, LoRA/QLoRA, guardrails, and parameter tuning.
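For LoRA specifically, the idea fits in one equation. In the formulation from the original LoRA paper, the pretrained weight matrix $W_0$ stays frozen and only two small matrices $B$ and $A$ of rank $r$ are trained, scaled by a constant $\alpha / r$. The worked numbers use an illustrative layer size, not any particular model.

$$
W = W_0 + \Delta W = W_0 + \frac{\alpha}{r} B A, \qquad
W_0 \in \mathbb{R}^{d \times k},\; B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
$$

$$
\text{trainable parameters: } r(d + k) \text{ instead of } dk; \qquad
d = k = 4096,\ r = 8:\quad 8 \cdot 8192 = 65{,}536 \ \text{ vs. } \ 4096^2 = 16{,}777{,}216 \ (256\times \text{ fewer})
$$

QLoRA applies the same low-rank update on top of a quantized, frozen $W_0$, which cuts memory further.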

Prompts: Prompts drive how models respond, using User/System Prompts, Chain of Thought, and Few/Zero-Shot learning. Prompt Tuning and large Context Windows help shape more precise, multi-turn conversations.

Inference: This is how models generate responses. Key factors include Temperature, Max Tokens, Seed, and Latency. Hallucination is a common issue here, where the model makes things up that sound real.
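To make Temperature and Seed concrete, here is a toy sampler over a hypothetical three-token vocabulary. It is a sketch of the standard softmax-with-temperature technique, not any particular model's decoding code: logits are divided by the temperature before the softmax, so values below 1 sharpen the distribution and values above 1 flatten it, and a fixed seed makes the random choice reproducible.

```python
import math
import random
from typing import List, Optional


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use argmax for greedy decoding")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits: List[float], temperature: float, seed: Optional[int] = None) -> int:
    """Pick a token index at random, weighted by the tempered probabilities."""
    rng = random.Random(seed)  # same seed -> same choice
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
    for t in (0.5, 1.0, 2.0):
        print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
    print(sample_token(logits, temperature=0.7, seed=42))
```

Lowering the temperature toward zero approaches greedy decoding (always picking the highest-scoring token), which is why low temperatures give more deterministic, less varied output.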

Retrieval-Augmented Generation: RAG improves accuracy by retrieving relevant external data at query time. It relies on Retrieval, Semantic Search, Chunks, Embeddings, and VectorDBs. Reranking and Indexing help surface the most relevant answers, not just the most likely ones.
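The retrieval step can be sketched as cosine-similarity search over embeddings. The three chunks and their 3-dimensional vectors below are hand-made stand-ins; real embeddings come from an embedding model, have hundreds of dimensions, and usually live in a vector database. `build_prompt` is a hypothetical helper showing how retrieved chunks augment the prompt.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical pre-computed embeddings for three document chunks.
CHUNKS: Dict[str, List[float]] = {
    "Refunds are processed within 5 business days.": [0.9, 0.1, 0.0],
    "Our office is closed on public holidays.": [0.1, 0.8, 0.2],
    "To request a refund, contact support with your order ID.": [0.8, 0.2, 0.1],
}


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_embedding: List[float], k: int = 2) -> List[Tuple[str, float]]:
    """Semantic search: rank chunks by similarity to the query and keep the top k."""
    scored = [(text, cosine_similarity(query_embedding, emb)) for text, emb in CHUNKS.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(question: str, query_embedding: List[float]) -> str:
    """Augment the prompt with retrieved chunks so the model answers from them."""
    context = "\n".join(f"- {text}" for text, _ in retrieve(query_embedding))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Assume the embedding model mapped "How do refunds work?" to this vector.
    print(build_prompt("How do refunds work?", [0.85, 0.15, 0.05]))
```

A reranking step, as the glossary mentions, would rescore these top-k chunks with a more expensive model before they reach the prompt.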

Over to you: What else will you add to the LLM glossary?

We're hiring for two new positions at ByteByteGo: Full-Stack Engineer and Sales/Partnership

Role Type: Part-time (20+ hours weekly) or Full-time

Compensation: Competitive

Full-Stack Engineer (Remote)

We are hiring a Full Stack Engineer to build an easy-to-use educational platform and drive product-led growth. You'll work closely with the founder, wearing a product manager's hat when needed to prioritize user experience and feature impact. You'll operate in a fast-paced startup environment where experimentation, creativity, and using AI tools for rapid prototyping are encouraged.

We’re less concerned with years of experience. We care more about what you've built than about your resume. Share your projects, GitHub, portfolio, or any artifacts that showcase your ability to solve interesting problems and create impactful solutions. When you're ready, send your resume and a brief note about why you're excited to join ByteByteGo to jobs@bytebytego.com

Sales/Partnership (US based remote role)

We’re looking for a sales and partnerships specialist who will help grow our newsletter sponsorship business. This role will focus on securing new advertisers, nurturing existing relationships, and optimizing revenue opportunities across our newsletter and other media formats.

We’re less concerned with years of experience. What matters most is that you’re self-motivated, organized, and excited to learn and take on new challenges.

How to Apply: send your resume and a short note on why you’re excited about this role to jobs@bytebytego.com

SPONSOR US

Get your product in front of more than 1,000,000 tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters - hundreds of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases.

Space Fills Up Fast - Reserve Today

Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing sponsorship@bytebytego.com.


© 2025 ByteByteGo

548 Market Street PMB 72296, San Francisco, CA 94104

Unsubscribe

by "ByteByteGo" <bytebytego@substack.com> - 11:36 - 3 May 2025 -

How tariffs are reshaping global business

Plus, climate tech investments, India’s growth potential, and America at 250

by "McKinsey Highlights" <publishing@email.mckinsey.com> - 11:02 - 3 May 2025 -

Enhance Spaces with Luxury Chenille Curtains

Dear Purchaser,

I hope this email finds you well.

We are a specialized manufacturer of chenille string curtains, offering a wide range of high-quality products at competitive prices. Our curtains are designed to enhance both residential and commercial spaces with their unique texture and style.

If you are interested in adding our products to your inventory, I would be happy to provide more information or discuss potential cooperation.

Looking forward to the possibility of working together.

Best regards,

Madelyn | Sales manager

by "FAIzi Tewari" <faizitewari@gmail.com> - 03:55 - 3 May 2025 -

Too many unqualified applicants? We fixed that.

Too many unqualified applicants? We fixed that.

Hi MD,

If you’ve hired globally, you’ve seen it before: a great-looking applicant applies, but they can’t legally work where you’re hiring.

Now, you can screen that out from the start and build faster, cleaner pipelines.

Right-to-Work screening is now live inside Recruit:

✅ Add work eligibility rules to your job posts

✅ Applicants confirm their status before applying

✅ If they’re not eligible (and you don’t sponsor), they’re unable to apply

Less wasted time. Fewer dead ends. Stronger matches from day one.

You received this email because you are subscribed to

Remote News & Offers from Remote Europe Holding B.V

Update your email preferences to choose the types of emails you receive.

Unsubscribe from all future emails

Remote Europe Holding B.V

Copyright © 2025 Remote Europe Holding B.V All rights reserved.

Kraijenhoffstraat 137A 1018RG Amsterdam The Netherlands

by "Remote Team" <hello@talent.remote.com> - 09:51 - 2 May 2025 -

High-Quality Cooling Blankets from Hangzhou Chosen Textile Co., Ltd.

Dear info,

This is Winnie from Hangzhou Chosen Textile Co., Ltd, a professional blanket manufacturer with more than 20 years' experience and BSCI and OEKO-TEX Standard 100 certifications.

We make cooling blankets in many styles, according to each customer's requirements and target price.

We can support you from design and sampling through bulk production.

We promise: HIGH QUALITY, LOW PRICE.

Please let us know if you need a SAMPLE to check the details.

by "sales14" <sales14@chosentextile.com> - 05:34 - 2 May 2025 -

Premium Handheld Blenders for Your Product Range

Dear info,

I hope this message finds you well. We are a leading manufacturer specializing in high-quality hand blenders, meat grinders, and stand mixers. With over 13 years of expertise and 120 patents, we offer top-tier OEM/ODM solutions to brands like yours.

Our products are designed for durability, performance, and user-friendliness, making them perfect for your customer base.

We would love the opportunity to discuss potential collaboration opportunities and share more details about how we can support your business with our products.

Looking forward to hearing from you soon.

Thanks & best regards,

Cynthia Jiang - Marketing Manager

Shenzhen Gainer Electrical Appliances Co., Ltd

Building 5,101-401,6 whole building, Yinjin Technology Industrial Park, Fengjing South Road, Matian street, Guangming District, Shenzhen, Guangdong, CN

Tel: +86 15818554662

Skype & WhatsApp & Wechat: 15818554662

Web: szgainer.com

by "weij.sales" <weij.sales@zggenai.com> - 04:39 - 2 May 2025 -

Trusted OEM Medical Dressings Supplier | CE/FDA Certified

Dear info,

I hope this email finds you well.

I’m Jamie from Anji Hongde Medical Products Co., Ltd, a leading Chinese manufacturer of medical dressings including:

Elastic Bandages & Medical Tapes

Gauze Rolls, Cotton Products & Flocked Swabs

Masks, Tongue Depressors, and OEM/ODM solutions.

Why partner with us?

✅ Certified Quality: CE, FDA, and ISO 13485 compliance.

✅ Proven Expertise: Long-term supplier to Medline, McKesson, and Dukal.

✅ Cost Efficiency: Competitive pricing with bulk order discounts.

✅ Global Logistics: Strategic ports (Shanghai/Ningbo) ensure 15-day delivery.

As your dedicated account manager, I’d like to tailor solutions for your market. Could you share your current product requirements or target pricing? I’ll provide a quote within 4 hours.

Free samples and catalogs are available upon request. Let’s discuss how we can elevate your supply chain!

Best Regards,

Jamie Ji

Tel/ WhatsApp: +8618006721332

E-mail: sales807@cnhltrade.com

Website: www.hdbandage.com

ANJI HONGDE MEDICAL PRODUCTS CO., LTD

Building No.1,3.4,5, Bamboo Industrial Zone, Xiaofeng Town, Anji County, Zhejiang, P.R. China. 313301

by "sales801" <sales801@ajhdmedical.com> - 03:58 - 2 May 2025 -

The cost of compute: A $7 trillion race to scale data centers

Anticipate demand shifts

Brought to you by Brendan Gaffey (Brendan_Gaffey@McKinsey.com) and Michael Park (Michael_Park@McKinsey.com), global leaders of McKinsey’s Technology, Media & Telecommunications Practice


You received this email because you subscribed to our McKinsey Quarterly alert list.


by "McKinsey & Company" <publishing@email.mckinsey.com> - 12:50 - 2 May 2025 -

Re: Odoo Learn sales

Hey ,

I wouldn't be wasting my time or yours if I didn't believe Brax would benefit your company.

Here are some case studies of our clients along with some resources.

Would you be interested in learning more?

Regards,

Mark @ Brax

422 Richards St, Suite 170. Vancouver, BC V6B 2Z4

P.S. If you don't want to hear from me again, please let me know.

-----Original Message-----

From: Mark Siiten

To: info@learn.odoo.com

Subject: Re: Odoo Learn sales

Hey, just checking to see if you got that?

Mark @ Brax

422 Richards St, Suite 170. Vancouver, BC V6B 2Z4

P.S. If you don't want to hear from me again, please let me know.

-----Original Message-----

From: Mark Siiten

To: info@learn.odoo.com

Subject: Odoo Learn sales

Hi

I came across your website and read about your software.

I work at Brax, a lead generation company that helps software development firms find more clients.

We recently launched a marketing campaign for one of our clients and they saw 150 new inquiries in just 21 days.

Can we chat about how we can do the same for you?

Thanks,

Mark @ Brax

422 Richards St, Suite 170. Vancouver, BC V6B 2Z4

P.S. If you don't want to hear from me again, please let me know.

by "Mark Siiten" <marksiiten@getbraxservices.com> - 08:58 - 2 May 2025 -

5 Reasons to Choose Corporate Race Day for Your Next Event

Why Choose Corporate Race Day?

Hello,

When it comes to corporate hospitality, Corporate Race Day stands out.

Here’s why:

- Unmatched Access: Gain entry to the UK’s most prestigious horse racing events with exclusive perks.

- Exceptional Hospitality: Enjoy luxury dining, premium seating, and world-class service throughout the day.

- Tailored Experiences: From intimate gatherings to large-scale corporate events, we design packages to suit your needs.

- Flexible Payment Terms: Affordable packages starting at £30 per person with payment options to match your budget.

- Unforgettable Memories: Treat your guests to a day they’ll remember for years to come.

Join hundreds of satisfied clients who’ve trusted us to deliver exceptional race day experiences.

Ready to elevate your corporate entertainment?

Josh Budz

Senior Business Development Manager

joinus@corporateraceday.com

+44 (0)121 2709072

+44 (0)7441 351 701

by "Josh - Corporate Race Day" <joinus@corporateraceday.com> - 08:20 - 2 May 2025 -

Tariffs are still in the news. Here’s what to do.

The Shortlist

Emerging ideas for leaders

Curated by Alex Panas, global leader of industries, & Axel Karlsson, global leader of functional practices and growth platforms

Welcome to the latest edition of the CEO Shortlist, a biweekly newsletter of our best ideas for the C-suite. This week, we dive into our latest thinking on the ever-changing global economy. You can reach us with reciprocal ideas and thoughts at Alex_Panas@McKinsey.com and Axel_Karlsson@McKinsey.com. Thank you, as ever.

—Alex and Axel

Topsy-turvy time. The recent wave of tariffs and other trade controls has created radical uncertainty for businesses. Here’s how decision-makers can best position their companies to thrive in the evolving landscape, by McKinsey Senior Partners Cindy Levy, Shubham Singhal, and coauthor Zoe Fox. It all starts with understanding your relative advantage compared with rivals, and the changes in demand.

Here we go again. After the global financial crisis, COVID-19, and the war in Ukraine, business leaders have (almost) seen it all. McKinsey Global Managing Partner Bob Sternfels and Senior Partners Michael Birshan and Ishaan Seth suggest that CEOs pull from that body of experience and return to first principles. In times of crisis, some leaders want to hunker down; others want to make all the right defensive moves while also leaning into volatility. These leaders are playing both offense and defense.

We hope you find these ideas inspiring and helpful. See you in a couple of weeks with more McKinsey ideas for the CEO and others in the C-suite.


You received this email because you subscribed to The CEO Shortlist newsletter.


by "McKinsey CEO Shortlist" <publishing@email.mckinsey.com> - 04:14 - 2 May 2025 -

How to improve public sector productivity

On McKinsey Perspectives

Sizing the productivity opportunity

Brought to you by Alex Panas, global leader of industries, & Axel Karlsson, global leader of functional practices and growth platforms

Welcome to the latest edition of Only McKinsey Perspectives. We hope you find our insights useful. Let us know what you think at Alex_Panas@McKinsey.com and Axel_Karlsson@McKinsey.com.

—Alex and Axel


Potential savings. As the US seeks to redefine the role, scale, and scope of the federal government, productivity is once again in the spotlight. With the US national debt growing to $36 trillion, the renewed push is timely. McKinsey analysis finds that significant savings could be achieved by increasing productivity. According to McKinsey Partners Aly Spencer and Nikhil Sahni, the total productivity opportunity could be up to $1 trillion—almost 15% of the fiscal year 2024 enacted budget of about $6.8 trillion.

—Edited by Belinda Yu, editor, Atlanta


You received this email because you subscribed to the Only McKinsey Perspectives newsletter, formerly known as Only McKinsey.


by "Only McKinsey Perspectives" <publishing@email.mckinsey.com> - 01:35 - 2 May 2025