Innovative Partnership Ideas for Your Backpack

Dear Info

Hi, we are Fuzhou Vastjoint Union Company, a bag manufacturer with over 15 years of experience. We have worked with multiple European and American supermarket chains.

After researching your website, I found that your bags are stylish and high-quality. We are pleased to present our latest developments in backpacks.

We control costs from raw materials to the final product. Our long-term partnerships with top global suppliers ensure high-quality materials while maintaining cost efficiency, so our prices are very competitive.

Our years of customer service experience not only equip us with advantageous design capabilities to help customers better enhance the competitiveness of products, but also with a standardized quality control system and various factory inspection qualifications to ensure the compliance of production and manufacturing for brand orders.

Should you visit China, we warmly invite you to tour our factory.

Best regards

Luke

by "sale10" <sale10@vastjointbags.com> - 04:26 - 20 May 2025 -

How Facebook Live Scaled to a Billion Users

😘 Kiss bugs goodbye with fully automated end-to-end test coverage (Sponsored)

Bugs sneak out when less than 80% of user flows are tested before shipping. However, getting that kind of coverage (and staying there) is hard and pricey for any team.

QA Wolf’s AI-native service provides high-volume, high-speed test coverage for web and mobile apps, reducing your organization’s QA cycle to less than 15 minutes.

They can get you:

24-hour maintenance and on-demand test creation

Zero flakes, guaranteed

Engineering teams move faster, releases stay on track, and testing happens automatically—so developers can focus on building, not debugging.

Drata’s team of 80+ engineers achieved 4x more test cases and 86% faster QA cycles.

⭐ Rated 4.8/5 on G2

Disclaimer: The details in this post have been derived from the articles/videos shared online by the Facebook/Meta engineering team. All credit for the technical details goes to the Facebook/Meta Engineering Team. The links to the original articles and videos are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Facebook didn’t set out to dominate live video overnight. The platform’s live streaming capability began as a hackathon project with the modest goal of seeing how fast they could push video through a prototype backend. It gave the team a way to measure end-to-end latency under real conditions. That test shaped everything that followed.

Facebook Live moved fast by necessity. From that rooftop prototype, it took just four months to launch an MVP through the Mentions app, aimed at public figures like Dwayne Johnson. Within eight months, the platform rolled out to the entire user base of more than a billion people.

The video infrastructure team at Facebook owns the end-to-end path of every video. That includes uploads from mobile phones, distributed encoding in data centers, and real-time playback across the globe. They build for scale by default, not because it sounds good in a deck, but because scale is a constraint. When 1.2 billion users might press play, bad architecture can lead to issues.

The infrastructure needed to make that happen relied on foundational principles: composable systems, predictable patterns, and sharp handling of chaos. Every stream, whether it came from a celebrity or a teenager’s backyard, needed the same guarantees: low latency, high availability, and smooth playback. And every bug, every outage, every unexpected spike forced the team to build smarter, not bigger.

In this article, we’ll look at how Facebook Live was built and the kind of challenges they faced.

How Much Do Remote Engineers Make? (Sponsored)

Engineering hiring is booming again: U.S. companies with revenue of $50 million+ are anticipating a 12% hiring increase compared with 2024.

Employers and candidates are wondering: how do remote software engineer salaries compare across global markets?

Terminal’s Remote Software Engineer Salary Report includes data from 260K+ candidates across Latin America, Canada and Europe. Employers can better inform hiring decisions and candidates can understand their earning potential.

Our hiring expertise runs deep: Terminal is the smarter platform for hiring remote engineers. We help you hire elite engineering talent up to 60% cheaper than U.S. talent.

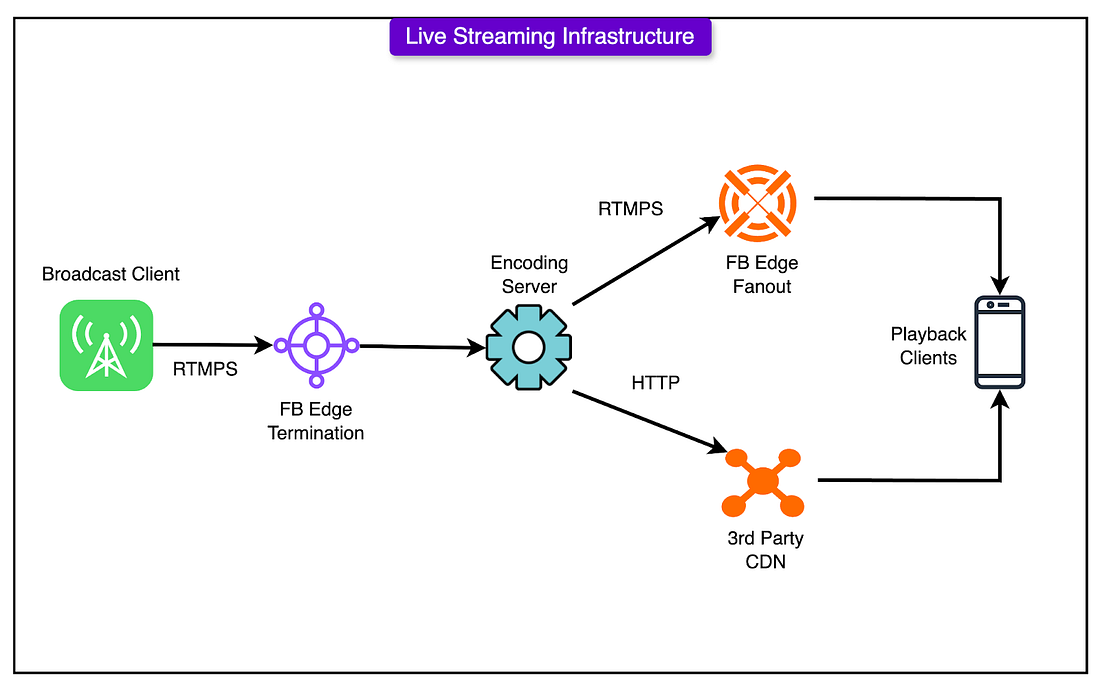

Core Components Behind Facebook Video

At the heart of Facebook’s video strategy lies a sprawling infrastructure. Each component serves a specific role in making sure video content flows smoothly from creators to viewers, no matter where they are or what device they’re using.

See the diagram below that shows a high-level view of this infrastructure:

Fast, Fail-Tolerant Uploads

The upload pipeline is where the video journey begins.

It handles everything from a celebrity’s studio-grade stream to a shaky phone video in a moving car. Uploads must be fast, but more importantly, they must be resilient. Network drops, flaky connections, or device quirks shouldn’t stall the system.

Uploads are chunked to support resumability and reduce retry cost.

Redundant paths and retries protect against partial failures.

Metadata extraction starts during upload, enabling early classification and processing.
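The chunking and retry behavior described above can be sketched as follows. This is a minimal illustration, not Facebook's implementation: the function names, the `acked` set of already-stored chunk indexes, the chunk size, and the retry limit are all assumptions made for the example.

```python
from typing import Callable, Iterator


def split_into_chunks(data: bytes, chunk_size: int) -> Iterator[tuple[int, bytes]]:
    """Yield (index, chunk) pairs that cover the data in order."""
    for index, start in enumerate(range(0, len(data), chunk_size)):
        yield index, data[start:start + chunk_size]


def upload_resumable(
    data: bytes,
    send_chunk: Callable[[int, bytes], None],  # hypothetical transport callback
    acked: set[int],                           # chunk indexes the server already has
    chunk_size: int = 4,
    max_retries: int = 3,
) -> int:
    """Send only the chunks that are not yet acknowledged; return send attempts."""
    attempts = 0
    for index, chunk in split_into_chunks(data, chunk_size):
        if index in acked:
            continue  # stored in an earlier session, so nothing to resend
        for _ in range(max_retries):
            attempts += 1
            try:
                send_chunk(index, chunk)
            except ConnectionError:
                continue  # retry this chunk only, not the whole upload
            acked.add(index)
            break
        else:
            raise RuntimeError(f"chunk {index} failed after {max_retries} retries")
    return attempts
```

Because progress is tracked per chunk, a dropped connection costs at most one chunk of re-sent data, and a resumed session skips everything already acknowledged.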

Beyond reliability, the system clusters similar videos. This feeds recommendation engines that suggest related content to the users. The grouping happens based on visual and audio similarity, not just titles or tags. That helps surface videos that feel naturally connected, even if their metadata disagrees.

Encoding at Scale

Encoding is a computationally heavy bottleneck if done naively. Facebook splits incoming videos into chunks, encodes them in parallel, and stitches them back together.

This massively reduces latency and allows the system to scale horizontally. Some features are as follows:

Each chunk is independently transcoded across a fleet of servers.

Bitrate ladders are generated dynamically to support adaptive playback.

Reassembly happens quickly, without degrading quality or breaking audio/video sync.
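The split, encode in parallel, and stitch pattern can be sketched in a few lines. This is an illustrative toy under stated assumptions: `encode_chunk` is a stand-in for a real transcode, frames are plain strings, and a local thread pool plays the role of the server fleet.

```python
from concurrent.futures import ThreadPoolExecutor


def split_frames(frames: list[str], chunk_len: int) -> list[list[str]]:
    """Cut the input into fixed-length chunks (the last one may be shorter)."""
    return [frames[i:i + chunk_len] for i in range(0, len(frames), chunk_len)]


def encode_chunk(chunk: list[str]) -> list[str]:
    """Placeholder for a real transcode; each chunk is processed independently."""
    return [f"enc({frame})" for frame in chunk]


def encode_parallel(frames: list[str], chunk_len: int = 3, workers: int = 4) -> list[str]:
    chunks = split_frames(frames, chunk_len)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in input order, so stitching the
        # encoded chunks back together is a plain concatenation.
        encoded = list(pool.map(encode_chunk, chunks))
    return [frame for chunk in encoded for frame in chunk]
```

The property that matters is that no chunk depends on another, so adding workers shortens wall-clock time without changing the stitched result.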

This platform prepares content for consumption across every device class and network condition. Mobile users in rural zones, desktop viewers on fiber, everyone gets a version that fits their bandwidth and screen.

Live Video as a First-Class Citizen

Live streams add a layer of complexity. Unlike uploaded videos, live content arrives raw, gets processed on the fly, and must reach viewers with minimal delay. The architecture must absorb the chaos of real-time creation while keeping delivery tight and stable.

Broadcast clients (phones, encoders) connect via secure RTMP to entry points called POPs (Points of Presence).

Streams get routed through data centers, transcoded in real time, and dispatched globally.

Viewers watch through mobile apps, desktop browsers, or APIs.

This is like a two-way street. Comments, reactions, and viewer engagement flow back to the broadcaster, making live content deeply interactive. Building that loop demands real-time coordination across networks, services, and user devices.

Scalability Requirements

Scaling Facebook Live is about building for a reality where “peak traffic” is the norm. With over 1.23 billion people logging in daily, the infrastructure must assume high load as the baseline, not the exception.

Some scaling requirements were as follows:

Scale Is the Starting Point

This wasn’t a typical SaaS model growing linearly. When a product like Facebook Live goes global, it lands in every timezone, device, and network condition simultaneously.

The system must perform across the globe in varying conditions, from rural to urban. And every day, it gets pushed by new users, new behaviors, and new demands. Almost 1.23 billion daily active users formed the base load. Traffic patterns follow cultural, regional, and global events.

Distributed Presence: POPs and DCs

To keep latency low and reliability high, Facebook uses a combination of Points of Presence (POPs) and Data Centers (DCs).

POPs act as the first line of connection, handling ingestion and local caching. They sit closer to users and reduce the hop count.

DCs handle the heavy lifting: encoding, storing, and dispatching live streams to other POPs and clients.

This architecture allows for regional isolation and graceful degradation. If one POP goes down, others can pick up the slack without a central failure.

Scaling Challenges That Break Things

Here are some key scaling challenges Facebook faced that potentially created issues:

Concurrent Stream Ingestion: Handling thousands of concurrent broadcasters at once is not trivial. Ingesting and encoding live streams requires real-time CPU allocation, predictable bandwidth, and a flexible routing system that avoids bottlenecks.

Unpredictable Viewer Surges: Streams rarely follow a uniform pattern. One moment, a stream has minimal viewers. Next, it's viral with 12 million. Predicting this spike is nearly impossible, and that unpredictability wrecks static provisioning strategies. Bandwidth consumption doesn’t scale linearly. Load balancers, caches, and encoders must adapt in seconds, not minutes.

Hot Streams and Viral Behavior: Some streams, such as political events or breaking news, can go global without warning. These events impact the caching and delivery layers. One stream might suddenly account for 50% of all viewer traffic. The system must replicate stream segments rapidly across POPs and dynamically allocate cache layers based on viewer geography.

Live Video Architecture

Streaming video live is about managing flow across an unpredictable, global network. Every live session kicks off a chain reaction across infrastructure components built to handle speed, scale, and chaos. Facebook Live’s architecture reflects this need for real-time resilience.

Live streams originate from a broad set of sources:

Phones with shaky LTE

Desktops with high-definition cameras

Professional setups using the Live API and hardware encoders

These clients create RTMPS (Real-Time Messaging Protocol Secure) streams. RTMPS carries the video payload with low latency and encryption, making it viable for casual streamers and production-level events.

Points of Presence (POPs)

POPs act as the first entry point into Facebook’s video pipeline. They’re regional clusters of servers optimized for:

Terminating RTMPS connections close to the source

Minimizing round-trip latency for the broadcaster

Forwarding streams securely to the appropriate data center

Each POP is tuned to handle a high volume of simultaneous connections and quickly routes streams using consistent hashing to distribute load evenly.
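For readers unfamiliar with consistent hashing, here is a minimal sketch of the general technique. The article does not describe Facebook's actual hash function, ring layout, or node naming, so the SHA-256 hash, the virtual-node count, and the host names below are assumptions for illustration only.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string to a 64-bit position on the ring."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes to smooth out the load."""

    def __init__(self, nodes: list[str], vnodes: int = 100) -> None:
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def node_for(self, stream_id: str) -> str:
        # Walk clockwise to the first ring point after the key's position,
        # wrapping around to the start of the ring when needed.
        idx = bisect.bisect_right(self._points, _hash(stream_id)) % len(self._ring)
        return self._ring[idx][1]


ring = HashRing(["host-a", "host-b", "host-c"])  # hypothetical encoding hosts
target = ring.node_for("stream-42")              # always the same host for this ID
```

The useful property is stability: when a host is removed, only the streams that were mapped to it move, while every other stream keeps its assignment.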

See the diagram below:

Data Centers

Once a POP forwards a stream, the heavy lifting happens in a Facebook data center. This is where the encoding hosts:

Authenticate incoming streams using stream tokens

Claim ownership of each stream to ensure a single source of truth

Transcode video into multiple bitrates and resolutions

Generate playback formats like DASH and HLS

Archive the stream for replay or on-demand viewing

Each data center operates like a mini CDN node, tailored to Facebook’s specific needs and traffic patterns.

Caching and Distribution

Live video puts pressure on distribution in ways that on-demand video doesn’t.

With pre-recorded content, everything is cacheable ahead of time. But in a live stream, the content is being created while it's being consumed. That shifts the burden from storage to coordination. Facebook’s answer was to design a caching strategy that can support this.

The architecture uses a two-tier caching model:

POPs (Points of Presence): Act as local cache layers near users. They hold recently fetched stream segments and manifest files, keeping viewers out of the data center as much as possible.

DCs (Data Centers): Act as origin caches. If a POP misses, it falls back to a DC to retrieve the segment or manifest. This keeps encoding hosts from being overwhelmed by repeated requests.

This separation allows independent scaling and regional flexibility. As more viewers connect from a region, the corresponding POP scales up, caching hot content locally and shielding central systems.
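A toy model of the two tiers makes the shielding effect concrete. The class names and the in-memory dictionaries are assumptions for the sketch; a real cache would also bound its size and expire entries.

```python
from typing import Callable


class EncodingHost:
    """Hypothetical origin that produces segments and counts how often it is asked."""

    def __init__(self) -> None:
        self.calls = 0

    def fetch(self, key: str) -> str:
        self.calls += 1
        return f"bytes-of-{key}"


class CacheTier:
    """A cache that asks its upstream only on a miss, then remembers the answer."""

    def __init__(self, upstream: Callable[[str], str]) -> None:
        self._upstream = upstream
        self._store: dict[str, str] = {}
        self.misses = 0

    def get(self, key: str) -> str:
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._upstream(key)
        return self._store[key]


host = EncodingHost()
dc = CacheTier(host.fetch)   # origin cache in the data center
pop_eu = CacheTier(dc.get)   # edge caches that fall back to the DC
pop_us = CacheTier(dc.get)
```

With this wiring, the same segment requested through both POPs reaches the encoding host once: the first POP miss fills the DC cache, and the second POP's miss is answered there.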

Managing the Thundering Herd

The first time a stream goes viral, hundreds or thousands of clients might request the same manifest or segment at once. If all those hit the data center directly, the system gets into trouble.

To prevent that, Facebook uses cache-blocking timeouts:

When a POP doesn’t have the requested content, it sends a fetch upstream.

All other requests for that content are held back.

If the first request succeeds, the result populates the cache, and everyone gets a hit.

If it times out, everyone floods the DC, causing a thundering herd.

The balance is tricky:

If the timeout is too short, the herd gets unleashed too often.

If the timeout is too long, viewers start experiencing lag or jitter.
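The hold-back behavior can be sketched with a lock and an event per in-flight key. This is one possible way to express the idea, not the mechanism Facebook uses; `CoalescingCache`, `fetch_upstream`, and `block_timeout` are hypothetical names.

```python
import threading
from typing import Callable


class CoalescingCache:
    """On a miss, one request goes upstream; the rest wait up to block_timeout."""

    def __init__(self, fetch_upstream: Callable[[str], str], block_timeout: float) -> None:
        self._fetch = fetch_upstream
        self._timeout = block_timeout
        self._lock = threading.Lock()
        self._values: dict[str, str] = {}
        self._inflight: dict[str, threading.Event] = {}

    def get(self, key: str) -> str:
        with self._lock:
            if key in self._values:
                return self._values[key]          # cache hit
            event = self._inflight.get(key)
            leader = event is None
            if leader:
                event = threading.Event()
                self._inflight[key] = event       # this request fetches for everyone
        if leader:
            try:
                value = self._fetch(key)
                with self._lock:
                    self._values[key] = value
                return value
            finally:
                with self._lock:
                    self._inflight.pop(key, None)
                event.set()                       # release the waiting requests
        # Follower: wait for the leader, but only up to the timeout.
        if event.wait(self._timeout):
            with self._lock:
                if key in self._values:
                    return self._values[key]
        # Timed out (or the leader failed): fall through to the upstream.
        return self._fetch(key)
```

With a generous timeout, any number of concurrent requests for one key produce a single upstream fetch. With a timeout shorter than the fetch itself, every follower falls through and the upstream sees the herd, which is the trade-off described above.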

Keeping Manifests Fresh

Live streams rely on manifests: a table of contents that lists available segments. Keeping these up-to-date is crucial for smooth playback.

Facebook uses two techniques:

TTL (Time to Live): Each manifest has a short expiry window, usually a few seconds. Clients re-fetch the manifest when it expires.

HTTP Push: A more advanced option, where updates get pushed to POPs in near real-time. This reduces stale reads and speeds up segment availability.

HTTP Push is preferable when tight latency matters, especially for streams with high interaction or fast-paced content. TTL is simpler but comes with trade-offs in freshness and efficiency.
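The difference between the two techniques can be shown with a small cache that supports both. This is a sketch under assumptions: the class name, the `push` method, and the injectable `clock` are illustrative, and the real push path runs over HTTP between data centers and POPs rather than a method call.

```python
import time
from typing import Callable


class ManifestCache:
    """Caches manifests with a TTL and also accepts pushed updates."""

    def __init__(
        self,
        fetch_manifest: Callable[[str], list[int]],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_manifest
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, list[int]]] = {}

    def get(self, stream_id: str) -> list[int]:
        now = self._clock()
        entry = self._entries.get(stream_id)
        if entry is not None and now < entry[0]:
            return entry[1]                      # still fresh, possibly already stale upstream
        manifest = self._fetch(stream_id)        # expired or missing: re-fetch
        self._entries[stream_id] = (now + self._ttl, manifest)
        return manifest

    def push(self, stream_id: str, manifest: list[int]) -> None:
        """Upstream-initiated update: replaces the entry without waiting for expiry."""
        self._entries[stream_id] = (self._clock() + self._ttl, manifest)
```

With TTL alone, a new segment becomes visible at the edge only after the cached entry expires, so freshness is bounded by the TTL. A push makes it visible immediately and avoids the re-fetch.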

Live Video Playback

Live playback is about consistency, speed, and adaptability across networks that don’t care about user experience.

Facebook’s live playback pipeline turns a firehose of real-time video into a sequence of reliable HTTP requests, and DASH is the backbone that makes that work.

DASH (Dynamic Adaptive Streaming over HTTP)

DASH breaks live video into two components:

A manifest file that acts like a table of contents.

A sequence of media files, each representing a short segment of video (usually 1 second).

The manifest evolves as the stream continues. New entries are appended, old ones fall off, and clients keep polling to see what’s next. This creates a rolling window, typically a few minutes long, that defines what’s currently watchable.

Clients issue HTTP GET requests for the manifest.

When new entries appear, they fetch the corresponding segments.

Segment quality is chosen based on available bandwidth, avoiding buffering or quality drops.

This model works because it’s simple, stateless, and cache-friendly. And when done right, it delivers video with sub-second delay and high reliability.
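The rolling window and the client's polling loop can be modeled without any networking. The sketch below is a simplification, not the DASH wire format: segment numbers stand in for manifest entries, and the bitrate ladder, the 0.8 safety factor, and all names are assumptions.

```python
from collections import deque


class RollingManifest:
    """Keeps only the most recent segments, like a live manifest window."""

    def __init__(self, window_segments: int) -> None:
        self._segments: deque[int] = deque(maxlen=window_segments)
        self._next_seq = 0

    def append_segment(self) -> None:
        # Appending to a full deque drops the oldest entry automatically.
        self._segments.append(self._next_seq)
        self._next_seq += 1

    def entries(self) -> list[int]:
        return list(self._segments)


class Player:
    """Polls the manifest and reports segments it has not fetched yet."""

    def __init__(self) -> None:
        self.last_seen = -1

    def poll(self, manifest: RollingManifest) -> list[int]:
        new = [seq for seq in manifest.entries() if seq > self.last_seen]
        if new:
            self.last_seen = new[-1]
        return new


def pick_bitrate(ladder_kbps: list[int], bandwidth_kbps: float, safety: float = 0.8) -> int:
    """Choose the highest rendition that fits within a fraction of measured bandwidth."""
    budget = bandwidth_kbps * safety
    affordable = [rate for rate in ladder_kbps if rate <= budget]
    return max(affordable) if affordable else min(ladder_kbps)
```

A player that joins late sees only what is still inside the window, and each later poll returns just the entries added since its previous request. Every step is an ordinary, repeatable GET, which is what makes the model cache-friendly.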

Where POPs Come In

Playback clients don’t talk to data centers directly. Instead, they go through POPs: edge servers deployed around the world.

POPs serve cached manifests and segments to minimize latency.

If a client requests something new, the POP fetches it from the nearest data center, caches it, and then returns it.

Repeat requests from nearby users hit the POP cache instead of hammering the DC.

This two-tier caching model (POPs and DCs) keeps things fast and scalable:

It reduces the load on encoding hosts, which are expensive to scale.

It localizes traffic, meaning regional outages or spikes don’t propagate upstream.

It handles unpredictable viral traffic with grace, not panic.

Conclusion

Facebook Live didn’t reach a billion users by accident. It got there through deliberate, pragmatic engineering. The architecture was designed to survive chaos in production.

The story begins with a clock stream on a rooftop, but it quickly shifts to decisions under pressure: picking RTMP because it worked, chunking uploads to survive flaky networks, and caching manifests to sidestep thundering herds.

A few lessons cut through all the technical layers:

Start small, iterate fast: The first version of Live aimed to be shippable. That decision accelerated learning and forced architectural clarity early.

Design for scale from day one: Systems built without scale in mind often need to be rebuilt. Live was architected to handle billions, even before the first billion arrived.

Bake reliability into architecture: Redundancy, caching, failover had to be part of the core system. Bolting them on later wouldn’t have worked.

Plan for flexibility in features: From celebrity streams to 360° video, the infrastructure had to adapt quickly. Static systems would’ve blocked product innovation.

Expect the unexpected: Viral content, celebrity spikes, and global outages aren’t edge cases but inevitable. Systems that can’t handle unpredictability don’t last long.

References:

SPONSOR US

Get your product in front of more than 1,000,000 tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters - hundreds of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases.

Space Fills Up Fast - Reserve Today

Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing sponsorship@bytebytego.com.

© 2025 ByteByteGo

548 Market Street PMB 72296, San Francisco, CA 94104

by "ByteByteGo" <bytebytego@substack.com> - 11:36 - 20 May 2025 -

[FREE REPLAY] McCall Jones dropped serious value!

She used this charisma hack to launch $100K in 3 weeks

If you haven’t watched the ClickFunnels Connect Replay yet… You're seriously missing out!

One of the most talked-about moments…?

McCall Jones!

She showed exactly how mastering the “Hook, Story, Offer” framework… with real charisma… can completely change your results!

WATCH MCCALL’S CHARISMA HACK + HOOK, STORY, OFFER TRAINING REPLAY HERE >>

McCall broke it down in a way that finally made it all click…

✅ Why some webinars land and others fall flat…

✅ Why some creators connect instantly and others get skipped…

✅ And how charisma isn’t something you’re born with… It's something you build!

She even shared the exact charisma tweak that helped launch a six-figure offer in just three weeks!

The best part… It was step-by-step, watch-it-work-today stuff.

AND DON’T FORGET…. Russell made a big announcement at this event, too!! You have to hear what he dropped!!

WATCH THE REPLAY + RUSSELL’S ANNOUNCEMENT + SEE McCALL’S CHARISMA HACK IN ACTION >>

And remember…

The replay’s coming down Friday at 11:59PM!

Watch it before it disappears.

See you on the replay!

© Etison LLC

By reading this, you agree to all of the following: You understand this to be an expression of opinions and not professional advice. You are solely responsible for the use of any content and hold Etison LLC and all members and affiliates harmless in any event or claim.

If you purchase anything through a link in this email, you should assume that we have an affiliate relationship with the company providing the product or service that you purchase, and that we will be paid in some way. We recommend that you do your own independent research before purchasing anything.

Copyright © 2018+ Etison LLC. All Rights Reserved.

To make sure you keep getting these emails, please add us to your address book or whitelist us. If you don't want to receive any other emails, click on the unsubscribe link below.

Etison LLC

3443 W Bavaria St

Eagle, ID 83616

United States

by "Todd Dickerson" <noreply@clickfunnelsnotifications.com> - 10:27 - 20 May 2025 -

Bibliomania Ep 2: why I wired $500K to make a movie!

And you’ll never guess what the guy said…

What do you think about my FREE docu-series so far??

If you got busy and didn’t click on my last email… I’ll just say this:

You’re missing out on Prime Time Television my friend!!

Ep. 2 - Ocean’s 7… This is good stuff!

Just a little sneak peek…

I was dead set on making a movie around Napoleon Hill’s most controversial book:

Outwitting The Devil

Derral Eves (AKA one of the executive producers behind the massively popular TV series, The Chosen) and I were talking about it…

And The Napoleon Hill Foundation seemed willing…

But A LOT of unexpected roadblocks dramatically took this journey from a straight-forward path and transformed it into a harrowing uphill climb I didn’t know if we could summit…

Even after wiring half a million dollars on a HOPE and a PRAYER…

You won’t guess what the guy said to me… 😬

CHECK OUT BIBLIOMANIA EPISODE #2: OCEAN’S 7 NOW! >>

Let’s just say what I thought was a simple negotiation…

…turned into a middle-of-the-night mission where 6 Inner Circle members and myself sneak out after a mastermind meeting to fly to Virginia.

It’ll all make sense when you watch episode #2 here >>

Hold your breath!

Russell Brunson

P.S. – Don’t forget, you’re just ONE funnel away…

© Prime Mover LLC

By reading this, you agree to all of the following: You understand this to be an expression of opinions and not professional advice. You are solely responsible for the use of any content and hold Prime Mover LLC and all members and affiliates harmless in any event or claim.

If you purchase anything through a link in this email, you should assume that we have an affiliate relationship with the company providing the product or service that you purchase, and that we will be paid in some way. We recommend that you do your own independent research before purchasing anything.

Copyright © 2025 Prime Mover LLC. All Rights Reserved.

To make sure you keep getting these emails, please add us to your address book or whitelist us. If you don't want to receive any other emails, click on the unsubscribe link below.

Prime Mover LLC

3443 W Bavaria St

Eagle, ID 83616

United States

by "Russell Brunson" <newsletter@marketingsecrets.com> - 10:07 - 20 May 2025 -

OIL/GAS

Good day,

We are pleased to inform you that our Refinery currently has a range of petroleum products available in Rotterdam and Houston Ports at competitive market prices. Our transaction procedures are efficient and transparent, ensuring a smooth process for our clients.

Our product offerings include: Aviation Turbine Jet Fuel A1 Aviation Kerosene Colonial Grade 54 (JP54) Diesel EN590 (10 PPM) D2 GAS OIL GOST 305-82 Petrol 93 /95 OCT/RON UNLEADED PETROL RON D6 Virgin Fuel oil Mazut M100

Please feel free to contact us if you have any requirements for the above products. We are looking forward to the opportunity to work with you.

Email: manoilgroupcompanies@outlook.com

Tel /Whatsapp : +77752174758

With kind regards,

by "Man Oil" <ave.tinambunan@orion.net.id> - 08:39 - 20 May 2025 -

You're Invited! Product Expert Series: Intelligent Observability for Security Management

New Relic

Dive into security management, streamlined by observability data.

Are you spending too much time tackling irrelevant vulnerabilities while critical threats linger?

Join us for Product Expert Series, Episode 4 on 28 May at 10 AM BST and learn how to make security smarter and faster. Our experts will demo groundbreaking tools that automatically prioritise runtime vulnerabilities posing actual risks and share best practices for saving DevOps teams invaluable time.

The live demo and Q&A session will focus on how to:

- Minimise interruptions with prioritised and actionable security findings from Security RX.

- Gain precision insights from existing APM and infra agents, eliminating time wasted on vulnerabilities that don’t matter.

- Answer your specific questions and unique use cases.

This session will empower you to streamline your security actions and elevate your team’s efficiency.

Save your spot today and take a smarter approach to enhance your security posture.

This email was sent to info@learn.odoo.com. Update your email preferences. For information about our privacy practices, see our Privacy Policy.

Need to contact New Relic? You can chat or call us at +44 20 3859 9190

Strand Bridge House, 138-142 Strand, London WC2R 1HH

© 2025 New Relic, Inc. All rights reserved. New Relic logo are trademarks of New Relic, Inc.

by "New Relic" <emeamarketing@newrelic.com> - 05:09 - 20 May 2025 -

Enhance Your Product Performance with WINDUS Exhaust Manifolds

Dear Info,

Hope you're doing well.

This is Jane from WINDUS ENTERPRISES INC., a trusted partner for custom exhaust solutions.

Our cast iron and stainless steel exhaust manifolds are built for durability, superior heat resistance, and performance optimization. We offer full engineering support and can manufacture according to your drawings or specific requirements.

Would you be open to a brief discussion about how WINDUS can support your upcoming projects?

Thank you for your time.

Best regards,

Jane

by "manager37" <manager37@windus.biz> - 04:37 - 20 May 2025 -

How can businesses navigate tariff-related uncertainty?

On McKinsey Perspectives

A guide for CEOs

by "Only McKinsey Perspectives" <publishing@email.mckinsey.com> - 01:29 - 20 May 2025 -

🔴 LIVE Today: Leadership decoded in a VUCA world

Hello,

What happens when a battlefield leader, a global CIO, and a tech COO walk into a keynote? You get a session that redefines leadership in the age of chaos.

We’re going LIVE from #ShieldNXG, Bangalore with:

- Lt Col Savin Sam – Indian Army officer, AI strategist, and a global thought leader who’s lived leadership under real fire.

- Preeti Singh – Global COO of 7Infinity, driving innovation and transformation at scale.

- Shamsheer Khan – Global CIO, security visionary, and the architect of cyber resilience at fnCyber.

Together, they’ll explore:

- Leadership in a VUCA world

- Cybersecurity, AI & emerging tech as enablers of trust

- Human resilience when the stakes are highest

This is more than a session. It’s a playbook for tomorrow’s leaders.

Join the LIVE session now

Cheers,

Thamizh

ManageEngine

This email was sent by thamizh@manageengine.com to info@learn.odoo.com

ManageEngine, A division of Zoho Corporation. | 4141 Hacienda Drive Pleasanton, CA 94588, USA

by "Thamizh | ManageEngine" <thamizh@manageengine.com> - 12:18 - 20 May 2025 -

[REPLAY AVAILABLE] See what you missed in Utah!

Russell made a really big announcement…

If you didn’t hear already… Utah was awesome!

Survey Funnels, Charisma Hacking, and Russell’s big announcement. (You gotta see this!)

If you missed the ClickFunnels Connect livestream… Don't worry!

We’ve got the full replay waiting for you right now!

WATCH THE CF CONNECT UTAH EVENT REPLAY AND HEAR RUSSELL’S ANNOUNCEMENT HERE >>

This was such a fun, high-value stream!

Here’s a sneak peek at what’s on the replay:

✅ Jake Leslie and Ben Moote revealed how to build Survey Funnels that guide people naturally into your offer

✅ McCall Jones taught how to use the “Hook, Story, Offer” formula with charisma that actually connects

✅ Bridger Pennington shared a simple SEO tweak that boosted one of his old funnel pages to the top of Google

✅ And yes… Russell shared ___________________________! (Nice try, haha! I’m not spilling the beans!! You have to watch to see the big announcement!)

WATCH THE CF CONNECT UTAH EVENT REPLAY AND HEAR RUSSELL’S ANNOUNCEMENT HERE >>

No pitches. No offers. Just real training, ideas, and behind-the-scenes strategies from people in the trenches.

But here’s the catch:

The replay is only up until Friday at 11:59PM… After that, it goes into the vault!

So don’t miss it a second time!

WATCH THE CF CONNECT UTAH EVENT REPLAY AND HEAR RUSSELL’S ANNOUNCEMENT HERE >>

See you on the replay!

by "Todd Dickerson" <noreply@clickfunnelsnotifications.com> - 11:18 - 19 May 2025 -

I wired half a million dollars… BLIND?! (video reveal)

Bibliomania episode #2 just dropped!

OK… Not sure if you’ve been following along with my FREE docu-series: Bibliomania…?

But, if you haven’t… You totally should be! You’ll have a blast seeing how much I geek out over old books…

(Yea, I’m kind of a nerd when it comes to historical marketing and success books… but you probably already knew this about me ;)

Now, if you don’t already know… I’ve already invested MILLIONS of dollars in old/rare books surrounding the New Thought movement that can’t be found anywhere else, including one of the all-time greats, Napoleon Hill…

BUT there was one thing I was missing, and I HAD to have it…

The goal? Make a movie around one of Hill’s most controversial works EVER.

And that’s what I reveal in the second episode of Bibliomania… called Ocean’s 7!!

You can go watch it for FREE right now (when you tap or click the link below)...

WATCH BIBLIOMANIA EPISODE #2: OCEANS 7 HERE >>

(BTW… If you missed episode one… You can watch that too!)

In the second (heart-pounding) episode, I cover the entire battle for this RARE book… including the crazy late night jet flight… and it just launched this morning!

Oh man… let’s just say I’ve had to haggle for books before, but this was unlike anything I had ever experienced in my life, and things did NOT turn out as planned.

Watch Episode #2 ASAP to watch how it all goes down AND get the latest peek at what’s coming next for Secrets of Success! (Hint: It’s insane!!!)

WATCH BIBLIOMANIA EPISODE #2: OCEANS 7 HERE >>

Talk soon,

Russell Brunson

P.S. – Don’t forget, you’re just ONE funnel away…

© Prime Mover LLC

By reading this, you agree to all of the following: You understand this to be an expression of opinions and not professional advice. You are solely responsible for the use of any content and hold Prime Mover LLC and all members and affiliates harmless in any event or claim.

Copyright © 2025 Prime Mover LLC. All Rights Reserved.

Prime Mover LLC

3443 W Bavaria St

Eagle, ID 83616

United States

by "Russell Brunson" <newsletter@marketingsecrets.com> - 09:12 - 19 May 2025 -

Fujian Yongyi Textile: Your ideal functional fabric partner

Dear Info,

Hello!

We hope this email finds you well. We are Fujian Yongyi Textile Technology Co., Ltd., a leading manufacturer specializing in high-performance fabrics for global brands.

We understand today's market demand for durable, functional and affordable textiles. Our key strengths include:

1. Professional sports performance: our sports fabrics offer moisture wicking, quick drying, antibacterial protection, and UV resistance, meeting the high standards of international sports brands.

2. Elasticity and durability: Knitted fabrics are highly elastic and wear-resistant, suitable for high-intensity sports scenes.

3. Rapid customized service: from basic knitted/chemical fiber fabrics to functional finishing (such as waterproof, flame retardant), reducing customers' coordination costs with multiple suppliers.

4. High cost-effectiveness: large production capacity of chemical fiber and knitted fabrics, strong price competitiveness, own factories or mature cooperative supply chains.

Our product range includes:

Knitted fabrics, chemical fiber fabrics, sports fabrics, sports functional fabrics, environmentally friendly recycled fabrics, printed fabrics, Velcro.

We would be happy to share a product catalog and discuss with you how we can meet your sourcing needs, and we look forward to your feedback!

Best wishes for your business!

Fujian Yongyi Textile Technology Co., Ltd.

by "Lsm" <Lsm@yokia-knitted.com> - 06:44 - 19 May 2025 -

Enhance comfort with quality bedding and curtain fabrics

Dear Customer

Hello! We are a well-known Chinese producer and seller of bedding fabrics and a trusted manufacturer of high-performance home textiles. Our products include:

Polyester and cotton bedding fabrics (wrinkle resistant, OEKO-TEX certified).

Customisable sofa and curtain fabrics (UV resistant, flame retardant).

Waterproof technical textiles (ideal for outdoor/maritime use).

Many of our customers have reduced their costs by up to 15 per cent with our fabrics, while increasing the durability of their products. Can you tell us about your scope of operations and discuss your sourcing needs?

Why work with us?

✅ ISO 9001 certified manufacturing.

✅ Over 10 years of experience in OEM/ODM solutions.

✅ Free samples for qualified enquiries.

Let's explore co-operation!

Best regards,

Jerry

General Manager

WhatsApp:+8613615215166

by "Muse" <Muse@snobiehts.com> - 06:16 - 19 May 2025 -

Elevate Your Kitchen with Top-quality Stainless Steel Solutions

Dear Info,

I hope this email finds you well. We are reaching out to introduce you to a leader in stainless steel product processing with over a decade of experience, renowned for our dedication to quality and innovation in commercial kitchen equipment production.

At Brandon, we specialize in crafting high-grade stainless steel solutions tailored for commercial kitchens. Our extensive product lineup includes kitchen workbenches, washbasins, kitchen cabinets, cabinet shelves, carts, and more. Furthermore, we produce essential commercial hotel equipment like mop pools and washbasins, ensuring that your kitchen operations run smoothly and efficiently.

Recognizing the surge in courtyard barbecues and outdoor gatherings, we have expanded our expertise to include a diverse range of outdoor kitchen products. Our newly launched product line features robust and stylish outdoor essentials such as cart racks, ovens, folding tables, built-in doors, and cabinets. These versatile additions are designed to elevate your outdoor kitchen space, offering functionality and aesthetic appeal, perfectly tailored for any event or gathering.

With our commitment to quality craftsmanship and customer satisfaction, Brandon guarantees products that withstand the demands of busy kitchen environments while enhancing the overall aesthetic and functionality of your space.

We invite you to explore our range of stainless steel solutions and discover the perfect fit for your kitchen needs, be it commercial or outdoor. Let us partner with you in creating a seamless, efficient, and visually appealing kitchen environment.

Thank you for considering Brandon for your kitchen equipment requirements. For inquiries or to schedule a consultation, please do not hesitate to contact us.

by "sales5" <sales5@brandon-fabrication.com> - 06:06 - 19 May 2025 -

Re: Innovative AI Dash Cam Features for Business Applications

Dear Info,

This is Mavis from TOPWO Technology Co. Ltd.

TOPWO is a professional commercial telematics vendor with 8 years of experience.

In today’s fast-paced world, safety on the road is more important than ever. That's why we’re excited to introduce you to our 6-channel smart AI dash cam with ADAS, DMS, and BSD.

1. Stable quality: units have run for 3-5 years on mining trucks with zero defects.

2. Support ADAS, DMS and BSD

3. Full 4G Communication + WiFi Module

4. Acc Off Delay Record + Power Down Delay Record

5. Fashion Design for commercial trucks

Whether you're on a daily commute or managing a fleet of vehicles, this AI 6-Channel Dash cam offers unparalleled protection, both for your car and for your peace of mind.

by "Jezzy Durey" <jezzydurey@gmail.com> - 04:45 - 19 May 2025 -

Let the electric surfboard take you to endless water fun!

Hello Friend.

Wishing you a nice day!

This is Marie from Hoverstar Flight Technology Co.,Ltd. We have focused on water sports and water rescue for more than 10 years.

Glad to know that you are interested in our electric surfboard products.

I would like to share more details about our electric surfboard. Our H5-F has been selling well in the USA, Canada, Germany, and other countries. We have run the boards in rivers, oceans, and lakes and have customers around the globe.

It accelerates rapidly within 2 seconds, and its max speed is 58 km/h.

At max speed, it runs for 30-40 min.

At normal surfing speed, it runs for 60-90 min, which suits customers of different experience levels.

Here are some details on the device: China Battery Powered/Motorised/Electric Surfboard Supplies For Sale | Havospark

Our classic design is the black one in carbon fiber, as shown in the picture below. There is no MOQ, and the price is competitive.

All boards can also be customized from 1 pc, including colors, patterns, and logos.

Please let me know if you have any questions. We would greatly appreciate the chance to talk further.

Thanks and Warm Regards

Marie Lee

Shenzhen Hoverstar Flight Technology Co.,Ltd

email: marie@hoverstar.com

Contact/Wechat/Whatsapp: +8613527761074

Website:www.hoverstar.com

by "marie01" <marie01@hoverstarjet.com> - 04:06 - 19 May 2025 -

How Pinterest Scaled Its Architecture to Support 500 Million Users

Avoid the widening AI engineering skills gap (Sponsored)

Most AI hype today is about developer productivity and augmentation. This overshadows a more important opportunity: AI as the foundation for products and features that weren’t previously possible.

But, it’s a big jump from building Web 2.0 applications to AI-native products, and most engineers aren’t prepared. That’s why Maven is hosting 50+ short, free live lessons with tactical guidance and demos from AI engineers actively working within the new paradigm.

This is your opportunity to upskill fast and free with Maven’s expert instructors. We suggest you start with these six:

Evaluating Agentic AI Applications - Aish Reganti (Tech Lead, AWS) & Kiriti Badam (Applied AI, OpenAI)

Optimize Structured Data Retrievals with Evals - Hamel Husain (AI Engineer, ex-Github, Airbnb)

CTO Playbook for Agentic RAG - Doug Turnbull (Principal ML Engineer, ex-Reddit, Shopify)

Build Your First Agentic AI App with MCP - Rafael Pierre (Principal AI Engineer, ex-Hugging Face, Databricks)

Embedding Performance through Generative Evals - Jason Liu (ML Engineer, ex-Stitch Fix, Meta)

Design Vertical AI Agents - Hamza Farooq (Stanford instructor, ex-Google researcher)

To go deeper with these experts, use code BYTEBYTEGO to get $100 off their featured AI courses - ends June 30th.

Disclaimer: The details in this post have been derived from the articles/videos shared online by the Pinterest engineering team. All credit for the technical details goes to the Pinterest Engineering Team. The links to the original articles and videos are present in the references section at the end of the post. We’ve attempted to analyze the details and provide our input about them. If you find any inaccuracies or omissions, please leave a comment, and we will do our best to fix them.

Pinterest launched in March 2010 with a typical early-stage setup: a few founders, one engineer, and limited infrastructure. The team worked out of a small apartment. Resources were constrained, and priorities were clear—ship features fast and figure out scalability later.

The app didn’t start as a scale problem but as a side project. A couple of founders, a basic web stack, and an engineer stitching together Python scripts in a shared apartment. No one was thinking about distributed databases when the product might not survive the week.

The early tech decisions reflected this mindset. The stack included:

Python for the application layer

NGINX as a front-end proxy

MySQL with a read replica

MongoDB for counters

A basic task queue for sending emails and social updates

But the scale increased rapidly. One moment it’s a few thousand users poking around images of food and wedding dresses. Then traffic doubles, and suddenly, every service is struggling to maintain performance, logs are unreadable, and engineers are somehow adding new infrastructure in production.

This isn’t a rare story. The path from minimum viable product to full-blown platform often involves growing pains that architectural diagrams never show. Systems that worked fine for 10,000 users collapse at 100,000.

In this article, we’ll look at how Pinterest scaled its architecture to handle the scale and the challenges they faced along the way.

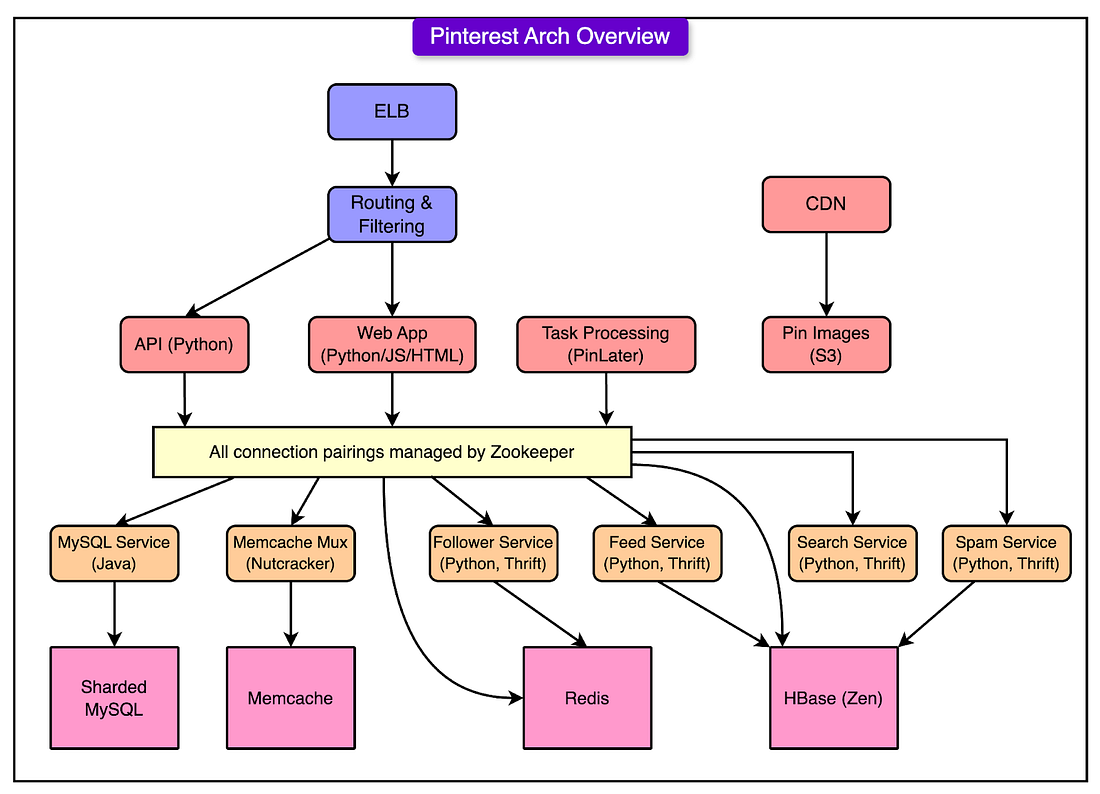

The Initial Architecture

Pinterest’s early architecture reflected its stage: minimal headcount, fast iteration cycles, and a stack assembled more for momentum than long-term sustainability.

When the platform began to gain traction, the team moved quickly to AWS. The choice wasn’t the result of an extensive evaluation. AWS offered enough flexibility, credits were available, and the team could avoid the friction of setting up physical infrastructure.

The initial architecture looked like this:

The technical foundation included:

NGINX was the front-end HTTP server. It handled incoming requests and routed them to application servers. NGINX was chosen for its simplicity and performance, and it required little configuration to get working reliably.

Python-based web engines, four in total, processed application logic. Python offered a high development speed and decent ecosystem support. For a small team, being productive in the language mattered more than raw runtime performance.

MySQL, with a read replica, served as the primary data store. The read replica allowed some level of horizontal scaling by distributing read operations, which helped reduce load on the primary database. At this point, the schema and data model were still evolving rapidly.

MongoDB was added to handle counters. These were likely used for tracking metrics like likes, follows, or pins. MongoDB’s document model and ease of setup made it a quick solution. It wasn’t deeply integrated or tuned.

A simple task queue system was used to decouple time-consuming operations like sending emails and posting to third-party platforms (for example, Facebook, Twitter). The queue was critical for avoiding performance bottlenecks during user interactions.

This stack wasn’t optimized for scale or durability. It was assembled to keep the product running while the team figured out what the product needed to become.
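To make the MySQL read-replica setup described above concrete, here is a minimal sketch of read/write splitting. It is an illustration under stated assumptions, not Pinterest's code: the class name `ReadWriteRouter`, the host names, and the keyword-based routing heuristic are all hypothetical.

```python
import itertools


class ReadWriteRouter:
    """Send writes to the primary and spread reads across replicas."""

    def __init__(self, primary: str, replicas: list):
        self.primary = primary
        # Fall back to the primary when no replica is configured.
        self._reads = itertools.cycle(replicas or [primary])

    def route(self, sql: str) -> str:
        """Pick a host from the statement's first keyword (a naive heuristic)."""
        is_read = sql.lstrip().upper().startswith("SELECT")
        return next(self._reads) if is_read else self.primary


# Hypothetical host names, for illustration only.
router = ReadWriteRouter("db-primary", ["db-replica-1"])
read_host = router.route("SELECT * FROM pins WHERE id = 1")
write_host = router.route("INSERT INTO pins (id) VALUES (1)")
```

Because replication is typically asynchronous, a read sent to a replica immediately after a write may return stale data, which is one reason this approach only buys a limited amount of headroom.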

Rapid Growth and Chaos

As Pinterest’s popularity grew, traffic doubled every six weeks. This kind of growth puts a great strain on the infrastructure.

Pinterest hit this scale with a team of just three engineers. In response, the team added technologies reactively. Each new bottleneck triggered the introduction of a new system:

MySQL remained the core data store, but began to strain under concurrent reads and writes.

MongoDB handled counters.

Cassandra was used to handle distributed data needs.

Membase was introduced, less for fit than because it was promoted as a quick fix.

Redis entered the stack for caching and fast key-value access.

The result was architectural entropy. Multiple databases, each with different operational behaviors and failure modes, created complexity faster than the team could manage.

Each new database seemed like a solution at first, until its own set of limitations emerged. This pattern repeated: an initial phase of relief, followed by operational pain, followed by another tool. By the time the team realized the cost, they were maintaining a fragile web of technologies they barely had time to understand.

This isn’t rare. Growth exposes every shortcut. What works for a smaller-scale project can’t always handle production traffic. Adding tools might buy time, but without operational clarity and internal expertise, it also buys new failure modes.

By late 2011, the team recognized a hard truth: complexity wasn’t worth it. They didn’t need more tools. They needed fewer, more reliable ones.

Post-Rearchitecture Stack

After enduring repeated failures and operational overload, Pinterest stripped the stack down to its essentials.

The architecture stabilized around three core components: MySQL, Redis, and Memcached. Everything else (MongoDB, Cassandra, Membase) was removed or isolated.

Let’s look at each in more detail.

MySQL

MySQL returned to the center of the system.

It stored all core user data: boards, pins, comments, and domains. It also became the system of record for legal and compliance data, where durability and auditability were non-negotiable. The team leaned on MySQL’s maturity: decades of tooling, robust failover strategies, and a large pool of operational expertise.

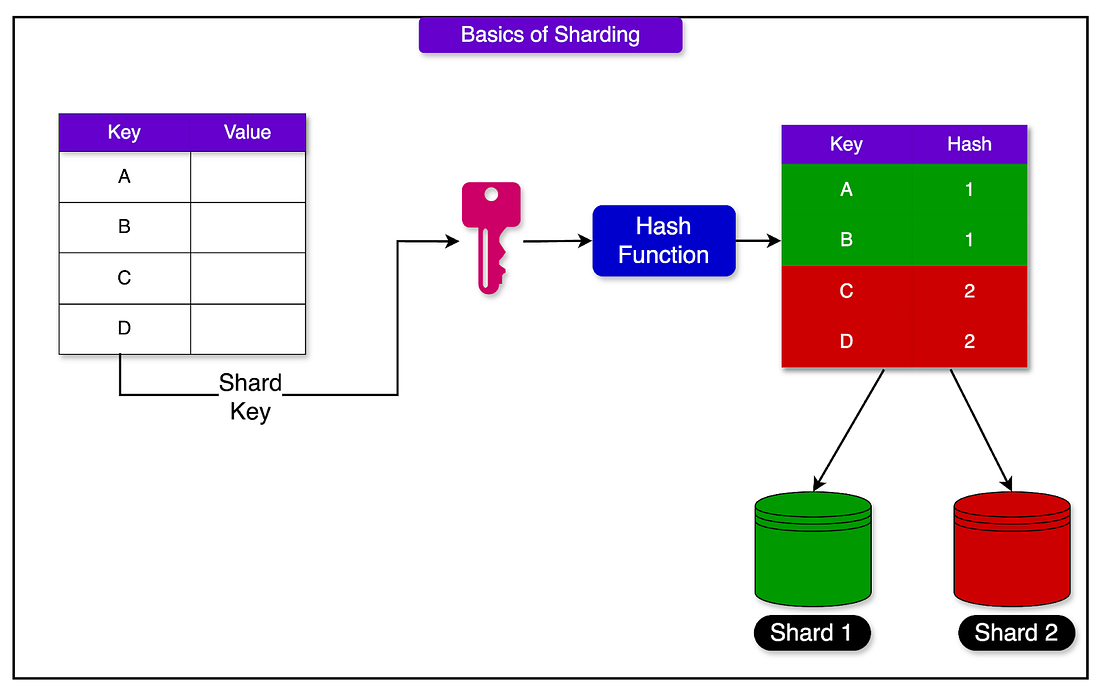

However, MySQL had one critical limitation: it didn’t scale horizontally out of the box. Pinterest addressed this by sharding and, more importantly, designing systems to tolerate that limitation. Scaling became a question of capacity planning and box provisioning, not adopting new technologies.

The diagram below shows how sharding works in general:

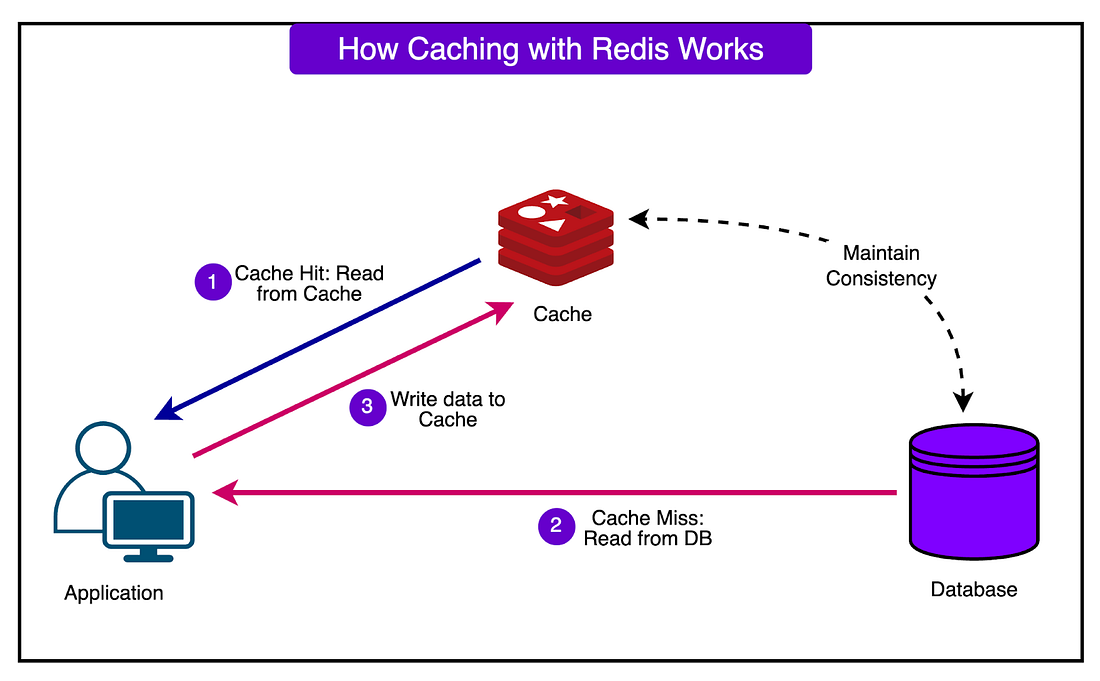
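As a rough illustration of the sharding idea, the sketch below maps a user ID to a shard with a stable modulo. The shard count, the host names, and the functions `shard_for` and `host_for` are assumptions made for this example; the article does not describe Pinterest's actual mapping scheme.

```python
# Hypothetical shard map: the shard count and host names are
# illustrative assumptions, not Pinterest's real topology.
NUM_SHARDS = 8
SHARD_HOSTS = [f"mysql-shard-{i:02d}" for i in range(NUM_SHARDS)]


def shard_for(user_id: int) -> int:
    """Map a numeric user ID to a logical shard with a stable modulo."""
    if user_id < 0:
        raise ValueError("user_id must be non-negative")
    return user_id % NUM_SHARDS


def host_for(user_id: int) -> str:
    """Resolve the database host that owns this user's rows."""
    return SHARD_HOSTS[shard_for(user_id)]
```

With a mapping like this, the same ID always resolves to the same host, so application code never has to search several databases for one user's data. The trade-off is that changing `NUM_SHARDS` later would move most keys, which is why capacity planning matters up front.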

Redis

Redis handled problems that MySQL couldn’t solve cleanly:

Feeds: Pushing updates in real-time requires low latency and fast access patterns.

Follower graph: The complexity of user-board relationships demanded a more dynamic, memory-resident structure.

Public feeds: Redis provided a true list structure with O(1) inserts and fast range reads, ideal for rendering content timelines.

Redis was easier to operate than many of its NoSQL competitors. It was fast, simple to understand, and predictable, at least when kept within RAM limits.

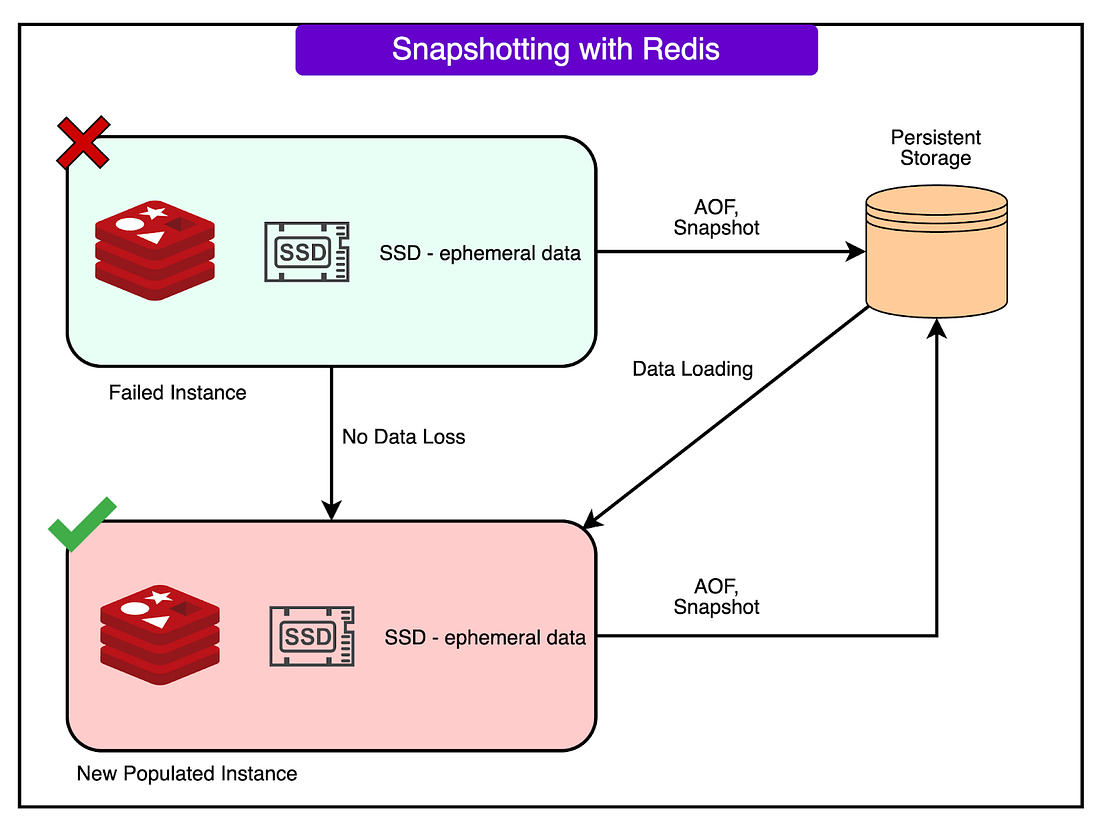
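The feed use case above can be sketched without a running Redis server. The class below is an in-memory stand-in (an assumption for illustration) that mimics the behavior of the Redis list commands LPUSH, LTRIM, and LRANGE; `FeedList` and its method names are hypothetical.

```python
from collections import deque
from itertools import islice


class FeedList:
    """In-memory stand-in for a Redis list used as a feed (an assumption)."""

    def __init__(self, max_len: int = 1000):
        # deque(maxlen=...) drops the oldest item from the opposite end,
        # roughly what LPUSH followed by LTRIM 0 max_len-1 would do.
        self._items = deque(maxlen=max_len)

    def push(self, pin_id: int) -> None:
        """O(1) insert at the head, like LPUSH."""
        self._items.appendleft(pin_id)

    def range(self, start: int, stop: int) -> list:
        """Return items start..stop inclusive, like LRANGE.

        Only non-negative indexes are supported in this sketch.
        """
        return list(islice(self._items, start, stop + 1))


feed = FeedList(max_len=3)
for pin in (1, 2, 3, 4):
    feed.push(pin)
newest_two = feed.range(0, 1)
```

The newest pins sit at the head of the list, so rendering the first page of a timeline is a short range read rather than a sorted query.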

Durability Modes: Choosing Trade-offs Explicitly

Redis offers several persistence modes, each with clear implications:

No persistence: Everything lives in RAM and disappears on reboot. It’s fast and simple, but risky for anything critical.

Snapshotting (RDB): Periodically saves a binary dump of the dataset. If a node fails, it can recover from the last snapshot. This mode balances performance with recoverability.

Append-only file (AOF): Logs every write operation. More durable, but with higher I/O overhead.

Pinterest leaned heavily on Redis snapshotting. It wasn’t bulletproof, but for systems like the follower graph or content feeds, the trade-off worked: if a node died, data from the last few hours could be rebuilt from upstream sources. This avoided the latency penalties of full durability without sacrificing recoverability.
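The snapshotting trade-off can be shown with a toy model. This is a simplified sketch, not how Redis implements RDB: `SnapshotStore` and `rebuild_missing` are hypothetical names, and a deep copy stands in for the on-disk dump.

```python
import copy


class SnapshotStore:
    """Toy key-value store that snapshots on demand (RDB-like, simplified)."""

    def __init__(self):
        self.data = {}
        self._snapshot = {}

    def set(self, key, value):
        self.data[key] = value

    def snapshot(self):
        # A real RDB snapshot is written to disk; a deep copy stands in here.
        self._snapshot = copy.deepcopy(self.data)

    def crash_and_recover(self):
        # Everything written after the last snapshot is lost.
        self.data = copy.deepcopy(self._snapshot)


def rebuild_missing(store, upstream):
    """Refill keys lost in the crash from an upstream source of truth."""
    for key, value in upstream.items():
        store.data.setdefault(key, value)


store = SnapshotStore()
store.set("followers:1", [2, 3])
store.snapshot()
store.set("followers:2", [1])      # written after the snapshot
store.crash_and_recover()
after_crash = dict(store.data)      # "followers:2" is gone
rebuild_missing(store, {"followers:1": [2, 3], "followers:2": [1]})
```

The window between the last snapshot and the crash is the data at risk. The approach is acceptable only when, as in the follower graph or feeds, that window can be refilled from another system.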

The diagram below shows snapshotting with Redis.

Why Redis Over MySQL?

MySQL remained Pinterest’s source of truth, but for real-time applications, it fell short:

Write latency increased with volume, especially under high concurrency.

Tree-based structures (for example, B-trees) make inserts and updates slower and harder to optimize for queue-like workloads.

Query flexibility came at the cost of performance predictability.

Redis offered a better fit for these cases:

Feeds: Users expect content updates instantly. Redis handled high-throughput, low-latency inserts with predictable performance.

Follower graph: Pinterest’s model allowed users to follow boards, users, or combinations. Redis stored this relationship graph in in-memory structures, so lookups avoided disk access entirely.

Caching: Redis served as a fast-access layer for frequently requested data like profile overviews, trending pins, or related content.

Memcached: Cache Layer Stability

Memcached served as a pure cache layer. It didn’t try to be more than that, and that worked in its favor.

It offloaded repetitive queries, reduced latency, and helped absorb traffic spikes. Its role was simple but essential: act as a buffer between user traffic and persistent storage.

Microservices and Infrastructure Abstraction

As Pinterest matured, scaling wasn’t just about systems. It was also about the separation of concerns.

The team began pulling tightly coupled components into services, isolating core functionality into defined boundaries with clear APIs.

Service Boundaries That Mattered

Certain parts of the architecture naturally became services because they carried high operational risk or required specialized logic:

Search Service: Handled query parsing, indexing, and result ranking. Internally, it became a complex engine, dealing with user signals, topic clustering, and content retrieval. From the outside, it exposed a simple interface: send a query, get relevant results. This abstraction insulated the rest of the system from the complexity behind it.

Feed Service: Managed the distribution of content updates. When a user pinned something, the feed service handled propagation to followers. It also enforced delivery guarantees and ordering semantics, which were tricky to get right at scale.

MySQL Service: Became one of the first hardened services. It sat between applications and the underlying database shards. This layer implemented safety checks, access controls, and sharding logic. By locking down direct access, it avoided mistakes that previously caused corrupted writes, like saving data to the wrong shard.

PinLater: Asynchronous Task Processing

Background jobs were offloaded to a system called PinLater. The model was simple: tasks were written to a MySQL-backed queue with a name, payload, and priority. Worker pools pulled from this queue and executed jobs.

This design had key advantages:

Tasks were durable.

Failure recovery was straightforward.

Prioritization was tunable without major system redesign.

PinLater replaced ad hoc queues and inconsistent task execution patterns. It introduced reliability and consistency into Pinterest’s background job landscape.

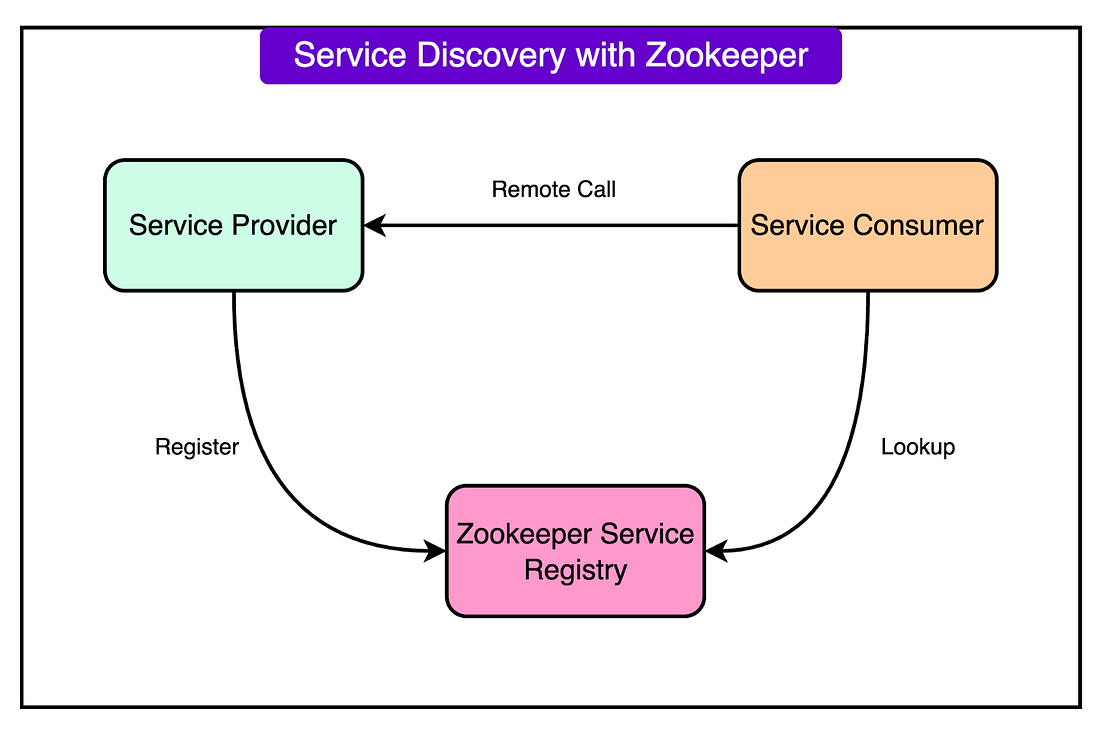
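The queue model described for PinLater (a name, a payload, and a priority stored in a database table) can be sketched as follows. This is not PinLater's schema or API: SQLite stands in for MySQL so the example runs anywhere, and the table layout, the state names, and the functions `enqueue`, `dequeue`, and `ack` are assumptions.

```python
import sqlite3

# SQLite stands in for the MySQL-backed queue; the schema and column
# names are assumptions for illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE jobs (
           id INTEGER PRIMARY KEY AUTOINCREMENT,
           name TEXT NOT NULL,
           payload TEXT NOT NULL,
           priority INTEGER NOT NULL,
           state TEXT NOT NULL DEFAULT 'pending'
       )"""
)


def enqueue(name: str, payload: str, priority: int) -> int:
    """Persist a job; lower priority numbers run first (an assumption)."""
    cur = conn.execute(
        "INSERT INTO jobs (name, payload, priority) VALUES (?, ?, ?)",
        (name, payload, priority),
    )
    conn.commit()
    return cur.lastrowid


def dequeue():
    """Claim the best pending job, oldest first within a priority."""
    row = conn.execute(
        "SELECT id, name, payload FROM jobs WHERE state = 'pending' "
        "ORDER BY priority, id LIMIT 1"
    ).fetchone()
    if row is None:
        return None
    conn.execute("UPDATE jobs SET state = 'running' WHERE id = ?", (row[0],))
    conn.commit()
    return row


def ack(job_id: int, succeeded: bool) -> None:
    """Mark a job done, or return it to the queue for a retry."""
    state = "done" if succeeded else "pending"
    conn.execute("UPDATE jobs SET state = ? WHERE id = ?", (state, job_id))
    conn.commit()
```

Because every job is a row, a failed worker loses nothing: the job can be returned to the pending state and picked up again. This sketch assumes a single worker; with a pool of workers, the select-then-update claim would need to be made atomic.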

Service Discovery with Zookeeper

To avoid hardcoded service dependencies and brittle connection logic, the team used Zookeeper as a service registry. When an application needed to talk to a service, it queried Zookeeper to find available instances.

This offered a few critical benefits:

Resilience: Services could go down and come back up without breaking clients.

Elasticity: New instances could join the cluster automatically.

Connection management: Load balancing became less about middleware and more about real-time awareness.

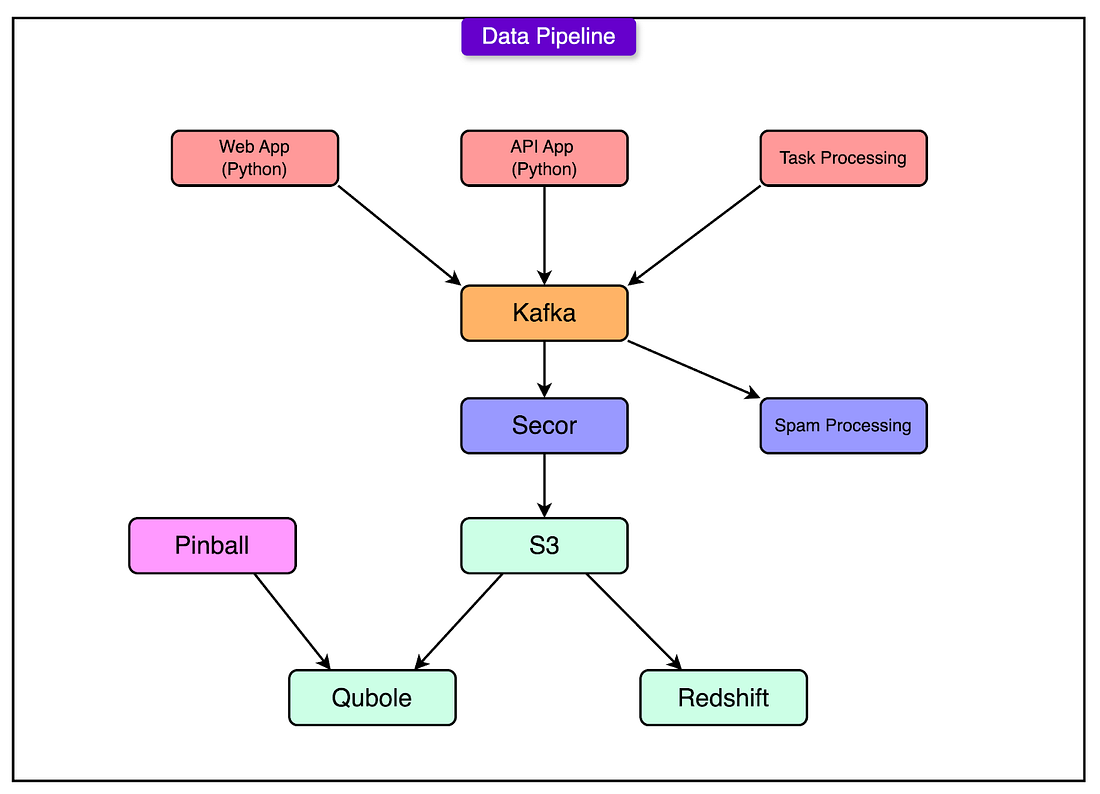
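The registry pattern above can be sketched with an in-memory stand-in. This example does not use the ZooKeeper client API; `ServiceRegistry` and its methods are hypothetical and only model the register, lookup, and pick steps a client would perform.

```python
import random


class ServiceRegistry:
    """In-memory stand-in for a ZooKeeper-style registry (an assumption)."""

    def __init__(self):
        self._instances = {}  # service name -> set of "host:port" strings

    def register(self, service: str, address: str) -> None:
        """Called when an instance starts (like creating an ephemeral node)."""
        self._instances.setdefault(service, set()).add(address)

    def deregister(self, service: str, address: str) -> None:
        """Called when an instance stops or its session expires."""
        self._instances.get(service, set()).discard(address)

    def lookup(self, service: str) -> list:
        """Return the currently live instances, sorted for determinism."""
        return sorted(self._instances.get(service, set()))

    def pick(self, service: str) -> str:
        """Client-side load balancing: choose a random live instance."""
        live = self.lookup(service)
        if not live:
            raise LookupError(f"no live instances for {service}")
        return random.choice(live)
```

Clients ask the registry for addresses at call time instead of reading them from configuration, so an instance that disappears simply stops being returned.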

Data Pipeline and Monitoring

As Pinterest scaled, visibility became non-negotiable. The team needed to know what was happening across the system in real-time. Logging and metrics weren’t optional but part of the core infrastructure.

Kafka at the Core

The logging backbone started with Kafka, a high-throughput, distributed message broker. Every action on the site (pins, likes, follows, errors) pushed data into Kafka. Think of it as a firehose: everything flows through, nothing is lost unless explicitly discarded.

Kafka solved a few key problems:

It decoupled producers from consumers. Application servers didn’t need to know who would process the data or how.

It buffered spikes in traffic without dropping events.

It created a single source of truth for event data.
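The decoupling described above comes from one idea: producers append to a log, and each consumer tracks its own read position. The toy class below illustrates that idea only; it is not the Kafka client API, and `EventLog`, `produce`, and `consume` are hypothetical names.

```python
class EventLog:
    """Toy append-only log with per-consumer offsets (Kafka-like, simplified)."""

    def __init__(self):
        self._events = []
        self._offsets = {}  # consumer group -> next index to read

    def produce(self, event: dict) -> int:
        """Append an event; the producer knows nothing about consumers."""
        self._events.append(event)
        return len(self._events) - 1

    def consume(self, group: str, max_events: int = 100) -> list:
        """Return unread events for this group and advance its offset."""
        start = self._offsets.get(group, 0)
        batch = self._events[start:start + max_events]
        self._offsets[group] = start + len(batch)
        return batch


log = EventLog()
log.produce({"type": "pin", "user": 1})
log.produce({"type": "like", "user": 2})
analytics_batch = log.consume("analytics")
alerts_batch = log.consume("alerts", max_events=1)
```

Each group reads the same events independently, so adding a new consumer does not require any change on the producing side.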

Secor + S3: Durable, Queryable Logs

Once the data hit Kafka, it flowed into Secor, an internal tool that parsed and transformed logs. Secor broke log entries into structured formats, tagged them with metadata, and wrote them into AWS S3.

This architecture had a critical property: durability. S3 served as a long-term archive. Once the data landed there, it was safe. Even if downstream systems failed, logs could be replayed or reprocessed later.

The team used this pipeline not just for debugging, but for analytics, feature tracking, and fraud detection. The system was designed to be extensible. Any new use case could hook into Kafka or read from S3 without affecting the rest of the stack.
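One way to picture the step from a stream of events to durable, queryable files is to group events into date-partitioned objects. The sketch below is an assumption-heavy illustration: the key layout, the JSON-lines format, and the functions `partition_key` and `batch_events` are invented for this example and are not taken from Secor.

```python
import json
from collections import defaultdict
from datetime import datetime, timezone


def partition_key(topic: str, ts: float) -> str:
    """Build a date-partitioned object key; the layout is an assumption."""
    day = datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%Y-%m-%d")
    return f"logs/{topic}/dt={day}/part-0000.jsonl"


def batch_events(topic: str, events: list) -> dict:
    """Group events by key and serialize each group as JSON lines."""
    groups = defaultdict(list)
    for event in events:
        key = partition_key(topic, event["ts"])
        groups[key].append(json.dumps(event, sort_keys=True))
    return {key: "\n".join(lines) for key, lines in groups.items()}


# Timestamps are seconds since the Unix epoch (UTC).
objects = batch_events(
    "pins",
    [{"ts": 0, "id": 1}, {"ts": 86400, "id": 2}, {"ts": 10, "id": 3}],
)
```

Partitioning by topic and day keeps later batch jobs cheap, because a job that analyzes one day of one event type only has to read the matching prefix.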

Real-Time Monitoring

Kafka wasn’t only about log storage. It enabled near-real-time monitoring. Stream processors consumed Kafka topics and powered dashboards, alerts, and anomaly detection tools. The moment something strange happened, such as a spike in login failures or a drop in feed loads, it showed up immediately.

This feedback loop was essential. Pinterest didn’t just want to understand what happened after a failure. They wanted to catch it as it began.
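A stream processor that catches a spike in login failures can be as simple as comparing each new count with a recent average. The sketch below is one possible approach, not the detection logic Pinterest used; `SpikeDetector`, the window size, and the threshold factor are assumptions.

```python
from collections import deque


class SpikeDetector:
    """Flag a value that exceeds a multiple of the recent average."""

    def __init__(self, window: int = 5, factor: float = 3.0):
        self._recent = deque(maxlen=window)
        self._factor = factor

    def observe(self, count: int) -> bool:
        """Return True if `count` is a spike relative to the window so far."""
        if self._recent:
            baseline = sum(self._recent) / len(self._recent)
        else:
            baseline = None  # nothing to compare against yet
        self._recent.append(count)
        if baseline is None or baseline <= 0:
            return False
        return count > self._factor * baseline


# Per-minute login-failure counts (made-up numbers for illustration).
detector = SpikeDetector(window=3, factor=3.0)
flags = [detector.observe(c) for c in (10, 12, 11, 50, 11)]
```

In this run only the fourth value is flagged: the average of the previous three counts is 11, and 50 exceeds three times that baseline.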

Conclusion

Pinterest’s path from early chaos to operational stability left behind a clear set of hard-earned lessons, most of which apply to any system scaling beyond its initial design.

First, log everything from day one. Early versions of Pinterest logged to MySQL, which quickly became a bottleneck. Moving to a pipeline of Kafka to Secor to S3 changed the game. Logs became durable, queryable, and reusable. Recovery, debugging, and analytics all improved.

Second, know how to process data at scale. Basic MapReduce skills went a long way. Once logs landed in S3, teams used MapReduce jobs to analyze trends, identify regressions, and support product decisions. SQL-like abstractions made the work accessible even for teams without deep data engineering expertise.

Third, instrument everything that matters. Pinterest adopted StatsD to track performance metrics without adding friction. Counters, timers, and gauges flowed through UDP packets, avoiding coupling between the application and the metrics backend. Lightweight, asynchronous instrumentation helped spot anomalies early, before users noticed.

Fourth, don’t start with complexity. Overcomplicating architecture early on, especially by adopting too many tools too fast, guarantees long-term operational pain.

Finally, pick mature, well-supported technologies. MySQL and Memcached weren’t flashy, but they worked. They were stable, documented, and surrounded by deep communities. When something broke, answers were easy to find.
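The StatsD lesson above is easy to sketch, because the StatsD wire format is a plain text line sent over UDP. The metric names below are hypothetical, and the host is an assumption; port 8125 is the conventional StatsD default.

```python
import socket


def format_metric(name: str, value, metric_type: str) -> bytes:
    """Encode one metric in the StatsD line format: name:value|type."""
    if metric_type not in ("c", "ms", "g"):
        raise ValueError("expected counter 'c', timer 'ms', or gauge 'g'")
    return f"{name}:{value}|{metric_type}".encode("ascii")


def send_metric(name, value, metric_type, host="127.0.0.1", port=8125):
    """Fire-and-forget over UDP; the sender does not wait for a reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(format_metric(name, value, metric_type), (host, port))


# Hypothetical metric names, for illustration only.
counter_line = format_metric("feed.loads", 1, "c")
timer_line = format_metric("db.query_time", 42, "ms")
```

Because UDP is connectionless, the application does not block on the metrics backend, which is the decoupling the lesson describes.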

Pinterest didn’t scale because it adopted cutting-edge technology. It scaled because it survived complexity and invested in durability, simplicity, and visibility. For engineering leaders and architects, the takeaways are pragmatic:

Design systems to fail visibly, not silently.

Favor tools with proven track records over tools with bold promises.

Assume growth will outpace expectations, and build margins accordingly.

References:

SPONSOR US

Get your product in front of more than 1,000,000 tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters - hundreds of thousands of engineering leaders and senior engineers - who have influence over significant tech decisions and big purchases.

Space Fills Up Fast - Reserve Today

Ad spots typically sell out about 4 weeks in advance. To ensure your ad reaches this influential audience, reserve your space now by emailing sponsorship@bytebytego.com.

© 2025 ByteByteGo

548 Market Street PMB 72296, San Francisco, CA 94104

Unsubscribe

by "ByteByteGo" <bytebytego@substack.com> - 11:37 - 19 May 2025 -

A leader’s guide to a prosperous economy

Leading Off

Identify the indicators

Brought to you by Alex Panas, global leader of industries, & Axel Karlsson, global leader of functional practices and growth platforms

Welcome to the latest edition of Leading Off. We hope you find our insights useful. Let us know what you think at Alex_Panas@McKinsey.com and Axel_Karlsson@McKinsey.com.

—Alex and Axel

So where is the global economy headed? This question remains top of mind for corporate leaders as they continue to monitor the potential business impacts of tariffs and other trade-related developments. The answer depends on whether the world chooses a path that will increase cooperation and stability among countries and within societies. This week, we look at scenarios for future global growth and how they might unfold.

For economies to prosper, they need to foster stable and secure investment environments and reliable partnerships: in other words, a strong foundation of balance and trust. That’s according to McKinsey Senior Partners Cindy Levy, Olivia White, Shubham Singhal, and Sven Smit and their coauthors, who advise business leaders to look beyond current trade and budget deficit debates for five signs of where economies are on the path to prosperity. “Business leaders can’t, of course, change the macroeconomic environment,” the authors say. “What they can do is seek to understand the range of plausible outcomes of the new dynamics in the global economy and identify decisions to take in advance or contingent on how uncertainty resolves.” Among the indicators of economic acceleration that can inform corporate strategy decisions: decreasing trade frictions, low inflation supported by central bank action, and improving consumer sentiment. To learn more from McKinsey experts about the business impact of tariffs and global trade, register now for a McKinsey Live virtual event on Thursday, May 22.

That’s the percentage of executives who expect global economic conditions to become substantially or moderately worse over the next six months, according to a March survey on economic sentiment. This figure compares with 30 percent of respondents who gave the same answer in the previous quarter. However, McKinsey’s Sven Smit and his coauthors note that respondents were more optimistic about their own economies than the world economy, and expect to see improving—rather than declining—conditions in their countries in the next six months.

That’s McKinsey’s James Kaplan, Jan Shelly Brown, and Tucker Bailey on why chief information officers must reevaluate their current geopolitical-risk practices. The authors suggest that technology leaders consider five key issues related to global instability, including the risk exposure of their most business-critical assets and people, as well as tech failure modes that stem from geopolitical risk. “Thoughtful action can also provide companies with first-mover advantage by securing data, location, or talent options when pricing is likely to be better than it will be at the time of a risk event when companies are scrambling to compete for scarce resources,” they say.

From geopolitical uncertainty to supply chain disruptions to regulatory changes, global companies are facing headwinds from many directions. Jacob Aarup-Andersen, CEO of the global beverage giant Carlsberg, says these challenges call for building resilience across the organization. Carlsberg’s efforts to do so include empowering decision-making at all levels, creating a trusting culture so people speak up when they see problems, and testing multiple risk scenarios when expanding the company’s geographic or product portfolio. “We’ve learned that, typically, it is not the gradual crises that are the most dangerous, but the unforeseen ones,” Aarup-Andersen says in an interview with McKinsey Senior Partner Kim Baroudy. “Those are the moments when resilience is tested—when you really see whether you have the kind of organization that can successfully analyze, adapt, recover, and emerge stronger.”

The job of corporate boards has become more complex and demanding in recent years. Leadership teams are seeking more and more guidance on how to manage fast-evolving issues, such as geopolitics, cybersecurity, and gen AI. While geopolitical risk was not historically a top concern for boards as a stand-alone topic, it now infuses many key areas of the business, including growth, innovation, technology, and people, notes McKinsey Partner and Global Director of Geopolitics Ziad Haider. “It is fundamentally a new muscle for many boards, who came of age in a very different era where they weren’t having to think about geopolitical risk, segmentation, and fragmentation,” he says in an episode of McKinsey’s Inside the Strategy Room podcast. Senior Partner Frithjof Lund adds that many organizations are discussing whether their boards have the right expertise to tackle geopolitical risk—with some conducting trainings and bringing on external experts as directors to boost their capabilities. “It’s the same question around some of the technology trends [and] some of the macroeconomic trends. How do we ensure that we have a composition that reflects the strategic needs of the companies?” Lund says.

Lead by working toward balance and trust.

— Edited by Eric Quiñones, senior editor, New York

Share these insights

Did you enjoy this newsletter? Forward it to colleagues and friends so they can subscribe too. Was this issue forwarded to you? Sign up for it and sample our 40+ other free email subscriptions here.

This email contains information about McKinsey’s research, insights, services, or events. By opening our emails or clicking on links, you agree to our use of cookies and web tracking technology. For more information on how we use and protect your information, please review our privacy policy.

You received this email because you subscribed to the Leading Off newsletter.

Copyright © 2025 | McKinsey & Company, 3 World Trade Center, 175 Greenwich Street, New York, NY 10007

by "McKinsey Leading Off" <publishing@email.mckinsey.com> - 04:29 - 19 May 2025 -

⏰ Our LIVE show begins in 3-2-1…

…tomorrow! Registration is FREE. Watch live or later – you decide!

Our live show starts tomorrow!

Whether you watch live or watch later, register NOW to get exclusive access to live sessions, on-demand recordings, and more!

Get the “best of” SAP Sapphire!

SAP (Legal Disclosure | SAP)

This e-mail may contain trade secrets or privileged, undisclosed, or otherwise confidential information. If you have received this e-mail in error, you are hereby notified that any review, copying, or distribution of it is strictly prohibited. Please inform us immediately and destroy the original transmittal. Thank you for your cooperation.

You are receiving this e-mail for one or more of the following reasons: you are an SAP customer, you were an SAP customer, SAP was asked to contact you by one of your colleagues, you expressed interest in one or more of our products or services, or you participated in or expressed interest to participate in a webinar, seminar, or event. SAP Privacy Statement

This e-mail was sent to you on behalf of the SAP Group with which you have a business relationship. If you would like to have more information about your Data Controller(s) please click here to contact webmaster@sap.com.

This promotional e-mail was sent to you by SAP Global Marketing and provides information on SAP's products and services that may be of interest to you. If you would prefer not to receive such e-mails from SAP in the future, please click on the Unsubscribe link.

To ensure you continue to receive SAP-related information properly, please add sap@mailsap.com to your address book or safe senders list.

by "SAP Sapphire" <sap@mailsap.com> - 03:47 - 19 May 2025 -

How are corporate boards handling geopolitical risk?

On McKinsey Perspectives

It’s complicated

Brought to you by Alex Panas, global leader of industries, & Axel Karlsson, global leader of functional practices and growth platforms

Welcome to the latest edition of Only McKinsey Perspectives. We hope you find our insights useful. Let us know what you think at Alex_Panas@McKinsey.com and Axel_Karlsson@McKinsey.com.

—Alex and Axel

• Rising complexity. Corporate boards face increased complexity, with heightened geopolitical risk among the main concerns they must consider. Yet even though boards acknowledge their expanding role in guiding organizations, less than half of surveyed board directors say geopolitical and macroeconomic risks are currently on their agendas, McKinsey Senior Partner Frithjof Lund reveals in a recent episode of the Inside the Strategy Room podcast. Developing a tool kit to manage geopolitical risk is a new muscle for many boards to build, McKinsey Partner Ziad Haider adds.

—Edited by Belinda Yu, editor, Atlanta

You received this email because you subscribed to the Only McKinsey Perspectives newsletter, formerly known as Only McKinsey.

by "Only McKinsey Perspectives" <publishing@email.mckinsey.com> - 01:24 - 19 May 2025